Chapter 4: The Binomial and Normal Probability Models

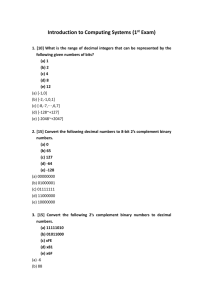

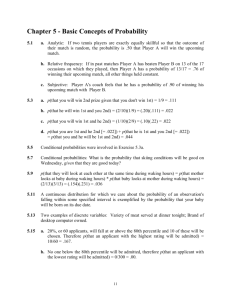

Statistics Pre-Term Program – Chapter Four
Some Special Probability Models

CHAPTER 4
THE BINOMIAL AND NORMAL PROBABILITY MODELS

TABLE OF CONTENTS

| Section | Page |
|---|---|
| 1 The Binomial Probability Distribution | 112 |
| Conditions for a Binomial Experiment, Bernoulli Trials | 112 |
| Mean and Standard Deviation of a Binomial Random Variable | 112 |
| Using Excel and Table 1 to Calculate Binomial Probabilities | 113 |
| 2 The Normal Probability Model | 119 |
| The Standard Normal Distribution | 119 |
| Standardizing Normal Distributions | 125 |
| Mensa Example | 126 |
| Tele-Evangelist Example | 127 |
| 3 Approximating a Binomial Model with the Normal Distribution | 127 |
| Advertising Campaign and Dear Abby Examples | 128 |
| ESP Example | 129 |
| 4 Fundamental Ideas From The Fourth Session | 130 |
| Six Probability Model Exercises | 131 |
| Answer Sketches for Six Probability Model Exercises | 133 |
| 5 Table One: The Binomial Distribution | 135 |
| 6 Table Two: The Normal Distribution | 143 |

Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 111

The Binomial Probability Distribution

The simplest data-gathering process is counting the number of times a certain event occurs. We can reduce an almost endless variety of situations to this simple counting process. Consumer preference and opinion polls (i.e., sample surveys) are conducted frequently in business. Some recent examples include polls to determine the number of voters in favor of legalizing casino gambling in a particular state, and the number of Americans in favor of government-controlled health care.

The Binomial distribution is a discrete probability distribution that is extremely useful for describing many phenomena. The random variable of interest that follows the Binomial distribution is the number of successes obtained in a sample of n observations.
The Binomial distribution applies to many questions, such as:

• What is the probability that in a sample of 20 tires of the same type none will be defective if 10% of all such tires produced at a particular plant are defective?
• What is the probability that red will come up 15 or more times in 20 spins of the American roulette wheel (38 spaces)?
• What is the probability that a student can pass (that is, get at least 60% correct on) a 30-question multiple-choice exam (each question containing four choices) if the student guesses each question?
• What is the probability that a particular stock will show an increase in its closing price on a daily basis over the next ten (consecutive) trading sessions if stock market price changes really are random?

Conditions Required for a Binomial Experiment

1. There is a set of n trials, each of which can be classified as either a “success” or a “failure.”
2. The probability of a success, π, is constant over the n trials.
3. The outcome for any one trial is independent of the outcome for any other trial.

These first three conditions specify Bernoulli Trials.

4. The Binomial random variable X counts the number of “successes” in n trials.

Mean and Standard Deviation of the Binomial Probability Distribution

A random variable following the Binomial distribution is completely specified by the two parameters n and π. Note that for a random variable X following the Binomial (n, π) distribution:

$$\mu_X = E[X] = n\pi, \qquad \sigma_X = \sqrt{n\pi(1-\pi)}$$

We can obtain binomial probabilities using a Table (like Table 1) or Excel.

Using Excel to find Binomial Probabilities

To obtain the probability Pr(Y = k) for a Binomial random variable Y with parameters n and π using Excel, use the function =BINOMDIST(k, n, π, 0). Suppose Y is a Binomial random variable with n = 5 and π = .50.
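As a quick worked illustration (added here, not part of the original notes), applying the mean and standard deviation formulas to this Y, with n = 5 and π = .50, gives:

$$\mu_Y = n\pi = 5(.50) = 2.5, \qquad \sigma_Y = \sqrt{n\pi(1-\pi)} = \sqrt{5(.50)(.50)} = \sqrt{1.25} \approx 1.118$$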
To find Pr(Y = 0), enter =BINOMDIST(0, 5, 0.5, 0) in any empty cell and press Enter to obtain the probability .03125. In this way, a complete Binomial table for n = 5 and π = .50 can be obtained:

| k | Pr(Y = k) |
|---|---|
| 0 | .03125 |
| 1 | .15625 |
| 2 | .31250 |
| 3 | .31250 |
| 4 | .15625 |
| 5 | .03125 |

Sometimes, we will want to obtain a cumulative (“less than or equal to”) probability. For instance, suppose we want to find the probability that Y ≤ 4. To do this, we can either

• add together the individual probabilities: Pr(Y ≤ 4) = Pr(Y = 0) + Pr(Y = 1) + Pr(Y = 2) + Pr(Y = 3) + Pr(Y = 4)
• OR adjust the Excel command and use the function BINOMDIST(k, n, π, 1). For instance, in this setting, we’d use =BINOMDIST(4, 5, 0.5, 1). The “1” in the last part of the command asks for a cumulative (“less than or equal to”) probability instead of a simple (“equal to”) probability.

A complete cumulative probability table for the Binomial distribution with n = 5 and π = .50 is easily generated from the information above:

| k | Pr(Y ≤ k) |
|---|---|
| 0 | .03125 |
| 1 | .18750 |
| 2 | .50000 |
| 3 | .81250 |
| 4 | .96875 |
| 5 | 1.00000 |

Using Table 1 to find Binomial Probabilities (see pp. 135-142)

To use the Table of Binomial Probabilities [Table 1], we first select the appropriate subtable for our n and then the correct probability π. The table gives the probability distribution of X, the number of successes, for a wide variety of small Binomial experiments. Note that values of π below .50 are read at the top of the block, with values of x read on the left. Values of π above .50 are read at the bottom of the block, with values of x read on the right.

Suppose n = 5 and π = .50. Here’s the n = 5 section of Table 1, pulled from the table on page 135. Note the agreement of the π = .50 column (shaded in the original) with the Excel results.
n = 5

| k↓ | π = .05 | .10 | .15 | .20 | .25 | .30 | .35 | .40 | .45 | .50 | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | .7738 | .5905 | .4437 | .3277 | .2373 | .1681 | .1160 | .0778 | .0503 | .0313 | 5 |
| 1 | .2036 | .3281 | .3915 | .4096 | .3955 | .3602 | .3124 | .2592 | .2059 | .1563 | 4 |
| 2 | .0214 | .0729 | .1382 | .2048 | .2637 | .3087 | .3364 | .3456 | .3369 | .3125 | 3 |
| 3 | .0011 | .0081 | .0244 | .0512 | .0879 | .1323 | .1811 | .2304 | .2757 | .3125 | 2 |
| 4 | .0000 | .0005 | .0022 | .0064 | .0146 | .0284 | .0488 | .0768 | .1128 | .1563 | 1 |
| 5 | .0000 | .0000 | .0001 | .0003 | .0010 | .0024 | .0053 | .0102 | .0185 | .0313 | 0 |
| | π = .95 | .90 | .85 | .80 | .75 | .70 | .65 | .60 | .55 | .50 | k↑ |

Example 4.1. A Simple Binomial Distribution Example

Let X be a Binomial random variable with n = 4 trials and probability of success π = .70. Let x be the number of successes observed.

(a) Find Pr(x = 3).
(b) Find Pr(x < 2).
(c) Find Pr(x ≥ 2).
(d) What would be the modal (most common) outcome (number of successes)?

We could, for instance, draw a tree of the n = 4 trials, as shown in the tree diagram that follows the solution. From the tree, we can directly find the probability distribution of X. For instance, P_X(0) = Pr(X = 0) = .0081, and P_X(1) = Pr(X = 1) = 4(.0189) = .0756, etc.

In Excel, we can use statements of the form =BINOMDIST(k, 4, 0.7, 0) to find the simple (“equal to”) probability distribution for X. For instance,

• =BINOMDIST(0, 4, 0.7, 0) produces 0.0081
• =BINOMDIST(1, 4, 0.7, 0) produces 0.0756
• =BINOMDIST(2, 4, 0.7, 0) produces 0.2646, etc.

As before, we can find cumulative probabilities using =BINOMDIST(k, 4, 0.7, 1).
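For readers working outside Excel, the two forms of BINOMDIST used above can be sketched in Python. This is a minimal illustration added here, not part of the original course materials: the function names `binom_pmf` and `binom_cdf` are hypothetical, and the code relies on the standard Binomial formula Pr(X = k) = C(n, k) π^k (1 − π)^(n − k), which the notes themselves do not write out.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Pr(X = k) for X ~ Binomial(n, p); plays the role of =BINOMDIST(k, n, p, 0)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binom_cdf(k: int, n: int, p: float) -> float:
    """Pr(X <= k); plays the role of =BINOMDIST(k, n, p, 1)."""
    return sum(binom_pmf(j, n, p) for j in range(k + 1))


if __name__ == "__main__":
    n, p = 4, 0.7  # the setting of Example 4.1
    for k in range(n + 1):
        # Print k, the "equal to" probability, and the cumulative probability.
        print(k, round(binom_pmf(k, n, p), 4), round(binom_cdf(k, n, p), 4))
```

Rounded to four decimal places, the "equal to" column should agree with the =BINOMDIST(k, 4, 0.7, 0) results quoted above.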
To use Table 1, we look at the n = 4 section of the table on page 135, and π = .70, which is reproduced here:

n = 4

| k↓ | π = .05 | .10 | .15 | .20 | .25 | .30 | .35 | .40 | .45 | .50 | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | .8145 | .6561 | .5220 | .4096 | .3164 | .2401 | .1785 | .1296 | .0915 | .0625 | 4 |
| 1 | .1715 | .2916 | .3685 | .4096 | .4219 | .4116 | .3845 | .3456 | .2995 | .2500 | 3 |
| 2 | .0135 | .0486 | .0975 | .1536 | .2109 | .2646 | .3105 | .3456 | .3675 | .3750 | 2 |
| 3 | .0005 | .0036 | .0115 | .0256 | .0469 | .0756 | .1115 | .1536 | .2005 | .2500 | 1 |
| 4 | .0000 | .0001 | .0005 | .0016 | .0039 | .0081 | .0150 | .0256 | .0410 | .0625 | 0 |
| | π = .95 | .90 | .85 | .80 | .75 | .70 | .65 | .60 | .55 | .50 | k↑ |

Because π here is .70, not .30, we read π from the bottom of the block and the number of successes from the right-hand column, reading up from the bottom. This gives:

| Number of successes, x | P_X(x) |
|---|---|
| 0 | .0081 |
| 1 | .0756 |
| 2 | .2646 |
| 3 | .4116 |
| 4 | .2401 |

We can use Table 1 to solve the problems posed previously:

(a) The probability that x equals 3 is just P_X(3) = Pr(X = 3) = .4116.
(b) Pr(X < 2) = Pr(X = 0) + Pr(X = 1) = .0081 + .0756 = .0837.
(c) Pr(X ≥ 2) = Pr(X = 2) + Pr(X = 3) + Pr(X = 4) = .2646 + .4116 + .2401 = .9163.
(d) There are five possible outcomes: 0, 1, 2, 3 or 4 successes. The outcome with the highest probability is 3 successes: Pr(X = 3) = .4116, which is higher than Pr(X = 0) = .0081, Pr(X = 1) = .0756, Pr(X = 2) = .2646, or Pr(X = 4) = .2401.

Tree of the n = 4 trials. Each success (S) has probability .70 and each failure (F) has probability .30; each row is one path through the tree.

| Trial One | Trial Two | Trial Three | Trial Four | X (# of S’s) | Probability |
|---|---|---|---|---|---|
| S | S | S | S | 4 | (.7)^4 = .2401 |
| S | S | S | F | 3 | (.7)^3 (.3)^1 = .1029 |
| S | S | F | S | 3 | (.7)^3 (.3)^1 = .1029 |
| S | S | F | F | 2 | (.7)^2 (.3)^2 = .0441 |
| S | F | S | S | 3 | (.7)^3 (.3)^1 = .1029 |
| S | F | S | F | 2 | (.7)^2 (.3)^2 = .0441 |
| S | F | F | S | 2 | (.7)^2 (.3)^2 = .0441 |
| S | F | F | F | 1 | (.7)^1 (.3)^3 = .0189 |
| F | S | S | S | 3 | (.7)^3 (.3)^1 = .1029 |
| F | S | S | F | 2 | (.7)^2 (.3)^2 = .0441 |
| F | S | F | S | 2 | (.7)^2 (.3)^2 = .0441 |
| F | S | F | F | 1 | (.7)^1 (.3)^3 = .0189 |
| F | F | S | S | 2 | (.7)^2 (.3)^2 = .0441 |
| F | F | S | F | 1 | (.7)^1 (.3)^3 = .0189 |
| F | F | F | S | 1 | (.7)^1 (.3)^3 = .0189 |
| F | F | F | F | 0 | (.3)^4 = .0081 |

Answering the Four Questions from the Start of the Chapter

• What is the probability that in a sample of 20 tires of the same type none will be defective if 10% of all such tires produced at a particular plant are defective?

Consider each tire inspection to be a Bernoulli trial, with the event “sampled tire is defective” defined as a success. We need the probability of 0 successes in n = 20 trials, with π = Pr(success) = .10. The relevant Table 1 section follows. The probability of k = 0 successes is .1216.

| n = 20, k↓ | π = .10 |
|---|---|
| 0 | .1216 |

• What is the probability that red will come up 15 or more times in 20 spins of the American roulette wheel (38 spaces)?

Consider each spin of the wheel to be a Bernoulli trial, with the event “ball stops in red space” defined as a success.
Here, we want to know the probability of 15 or more successes in n = 20 trials. If each of the 38 spaces is equally likely, then since there are 18 red spaces, the probability of a success is

$$\pi = \frac{18}{38} = \frac{9}{19} = .474$$

Since Table 1 does not give exact probabilities for this value of π, let’s use Excel to solve the problem. As we have seen, the appropriate Excel statement to find the “equal to k” probability distribution for X is of the form =BINOMDIST(k, n, π, 0). In this case, we have n = 20, π = 9/19, and k = 15, …, 20.

| Probability Statement | Excel Statement (n = 20, π = 9/19) | Result |
|---|---|---|
| Pr(X = 15) | =BINOMDIST(15, 20, 9/19, 0) | 0.00849 |
| Pr(X = 16) | =BINOMDIST(16, 20, 9/19, 0) | 0.00239 |
| Pr(X = 17) | =BINOMDIST(17, 20, 9/19, 0) | 0.00051 |
| Pr(X = 18) | =BINOMDIST(18, 20, 9/19, 0) | 0.00008 |
| Pr(X = 19) | =BINOMDIST(19, 20, 9/19, 0) | 7.59E-06 |
| Pr(X = 20) | =BINOMDIST(20, 20, 9/19, 0) | 3.23E-07 |

Summing these results, the probability of 15 or more “red” is approximately .0115.

• What is the probability that a student can pass (that is, get at least 60% correct on) a 30-question multiple-choice exam (each question containing four choices) if the student guesses each question?

Consider each question to be a Bernoulli trial, with the event “answer is correct” defined as a success. We then want to know the probability of at least 18 (30 × 60%) successes in n = 30 trials, with π = Pr(success) = ¼ = .25. Note that Pr(X is at least 18) = Pr(X ≥ 18) = Pr(X = 18) + Pr(X = 19) + … + Pr(X = 30) in this case. The relevant part of Table 1 is summarized below. The probability appears to be 0 (to four decimal places).

| n = 30, k↓ | π = .25 |
|---|---|
| 17 | .0002 |
| 18 | .0000 |
| ≥ 19 | .0000 |

• What is the probability that a particular stock will show an increase in its closing price on a daily basis over the next ten (consecutive) trading sessions if stock market price changes really are random?
Consider each day’s price change (increase or decrease) to be a Bernoulli trial, with the event “stock price increases” defined as a success. We assume that “price changes are random” means that the probability of an increase equals the probability of a decrease and that there is no chance that the price will stay the same. We then want to know the probability of 10 successes in n = 10 trials, with π = Pr(increase) = ½ = 0.5. From Table 1, we can read off the probability as 0.0010.

Example 4.2. Bad Seafood¹

In a study, Consumer Reports (February 1992) found widespread contamination and mislabeling of seafood in supermarkets in New York City and Chicago. The study revealed one alarming statistic: 40% of the swordfish pieces available for sale had a level of mercury above the Food and Drug Administration (FDA) maximum amount. In a random sample of twelve swordfish pieces, find the probability that:

a. Exactly five of the swordfish pieces have mercury levels above the FDA maximum.
b. At least ten swordfish pieces have a mercury level above the FDA maximum.
c. At most seven swordfish pieces have a mercury level above the FDA maximum.

¹ Sincich, Exercise 5.22.

Example 4.3. Office Supply Bids

An office supply company currently holds 30% of the market for supplying suburban governments. This share has been quite stable and there is no reason to think it will change. The company has three bids outstanding, prepared by its standard procedure. Let Y be the number of the company’s bids that are accepted.

a. Find the probability distribution of Y.
b. What assumptions did you make in answering part a.? Are any of these assumptions unreasonable?
c. Find the expected value and variance of Y.
d. It could be argued that if the company loses on the first bid, that signals that a competitor is cutting prices and the company is more likely to lose the other bids as well. Similarly, if the company wins on the first bid, that signals that competitors are trying to improve profit margins and the company is more likely to win the other bids also. If this argument is correct, and the two sides of the argument balance out to a 30% market share for the company, will the expected value and variance of Y be increased or decreased compared to the values found in part c.?

The Normal Probability Model

We now turn our attention to continuous probability density functions, which arise from some measuring process on a phenomenon of interest. When a mathematical expression is available to represent some underlying continuous phenomenon, the probability that the random variable falls within a given range or interval may be calculated. However, the exact probability of any particular value from a continuous distribution is zero.

A particular continuous distribution, the Normal, or Gaussian, model is of vital importance.

1. Numerous continuous phenomena seem to follow it or can be approximated by it.
2. We can use it to approximate various discrete probability distributions and thereby avoid much computational drudgery.
3. It provides the basis for classical statistical inference because of its relationship to the Central Limit Theorem.

Crucial theoretical properties of the Normal distribution include:

1. It is bell-shaped and symmetrical in its appearance.
2. Its measures of central tendency (mean, median and mode) are all identical.
3. The Empirical Rule holds exactly for the Normal model.
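The third property can be checked numerically. The sketch below is an illustration added here, not taken from the course materials. It assumes the usual statement of the Empirical Rule (about 68%, 95% and 99.7% of values lie within 1, 2 and 3 standard deviations of the mean) and uses the identity Pr(|Z| ≤ k) = erf(k/√2) for a Standard Normal Z; the function name `prob_within` is hypothetical.

```python
from math import erf, sqrt


def prob_within(k: float) -> float:
    """Probability that a Normal variable lies within k standard deviations of its mean."""
    # For Z standard Normal, Pr(-k <= Z <= k) = erf(k / sqrt(2)).
    return erf(k / sqrt(2))


if __name__ == "__main__":
    for k in (1, 2, 3):
        # Print the number of standard deviations and the enclosed probability.
        print(k, round(prob_within(k), 4))
```

Because standardizing removes µ and σ from the calculation, these probabilities are the same for every Normal distribution, which is the sense in which the rule holds exactly.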
The Normal probability density function is:

$$f_Y(y) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{1}{2}\left(\frac{y-\mu}{\sigma}\right)^2} = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(y-\mu)^2}{2\sigma^2}}$$

The values µ and σ in the normal density function are in fact the mean and standard deviation of Y. The Normal distribution is completely determined once the parameters µ and σ are specified. A whole family of normal distributions exists, but one differs from another only in the location of its mean µ and in the variance σ² of its values.

The Standard Normal Distribution (Table 2)

Tables of normal curve areas (probabilities) are always given for the Standard Normal Distribution. With a little effort, we can calculate any Normal probability based on just this one table.

• The Standard Normal random variable Z has mean µ_Z = 0 and standard deviation σ_Z = 1.
• Like all Normal distributions, the Standard Normal is symmetric around its mean (here, zero), so zero is the median (50th percentile) of the distribution, and Pr(Z ≤ 0) = Pr(Z ≥ 0) = .5.
• Table 2 (pages 143-144) gives the probability that a Standard Normal random variable will be less than z. Page 143 shows negative values of z, page 144 shows positive z values.
• Since the Z distribution is continuous, the probability that Z is exactly (to an infinite number of decimal places) equal to any particular value x is zero, so, for instance: Pr(Z ≤ x) = Pr(Z < x) for all x, and similarly, Pr(Z ≥ y) = Pr(Z > y) for all y.

There are, in total, seven different forms of probability statements that we might need to solve for the standard Normal model, all of which can be solved by working with the entries in Table 2 (pp. 143-144). Here they are:

Scenario 1. Pr (Z < a) = Pr (Z ≤ a) where a is any negative number.

As an example, let’s find Pr (Z < -2.03). This setting is especially easy: we just read out the value provided by the Table.
In this case, we need the table value at -2.03, which can be found on page 143 (the relevant row of the table is shown below):

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| -2.00 | .02275 | .02222 | .02169 | .02118 | .02068 | .02018 | .01970 | .01923 | .01876 | .01831 |

The table gives the probability of a standard Normal value being less than or equal to z, so Pr(Z ≤ -2.03) = Pr(Z < -2.03) = .02118. This number corresponds to the area of the shaded region in the picture.

[Figure: Standard Normal curve with the area to the left of -2.03 shaded.]

Scenario 2. Pr (Z < b) = Pr (Z ≤ b) where b is any positive number.

Now, let’s find Pr (Z < 1.48). As in Scenario 1, we can just read the value we need from the Table. The row we need is on page 144, and is reproduced below.

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1.40 | .91924 | .92073 | .92220 | .92364 | .92507 | .92647 | .92785 | .92922 | .93056 | .93189 |

So Pr(Z < 1.48) = Pr(Z ≤ 1.48) = .93056. This number is the area of the shaded region in the next picture.

[Figure: Standard Normal curve with the area to the left of 1.48 shaded.]

Scenario 3. Pr (Z > c) = Pr (Z ≥ c) where c is any negative number.

We can simply use the complements law in this setting. If we want to find Pr(Z > -1.96), we find the table value corresponding to -1.96, as shown below.

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| -1.90 | .02872 | .02807 | .02743 | .02680 | .02619 | .02559 | .02500 | .02442 | .02385 | .02330 |

Thus, Pr(Z < -1.96) = .02500, and by the complements law, Pr(Z > -1.96) = 1 - .02500 = .97500.

Scenario 4. Pr (Z > d) = Pr (Z ≥ d) where d is any positive number.

Again, we can use the complements law. Suppose we want to find Pr(Z > 0.39):

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.30 | .61791 | .62172 | .62552 | .62930 | .63307 | .63683 | .64058 | .64431 | .64803 | .65173 |

From Table 2, Pr(Z < 0.39) = .65173. Thus, Pr(Z > 0.39) = 1 - .65173 = .34827.

Scenario 5. Pr (e < Z < f) = Pr (e < Z ≤ f) = Pr (e ≤ Z < f) = Pr (e ≤ Z ≤ f) where e and f are both negative numbers.

Suppose we want to find Pr(-1.82 < Z < -0.47).
The relevant rows in the table are:

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| -1.80 | .03593 | .03515 | .03438 | .03362 | .03288 | .03216 | .03144 | .03074 | .03005 | .02938 |
| -0.40 | .34458 | .34090 | .33724 | .33360 | .32997 | .32636 | .32276 | .31918 | .31561 | .31207 |

Pr(Z < -1.82) = .03438, and Pr(Z < -0.47) = .31918. Consider the picture below. The shaded double arrow indicates the area we need to find:

[Figure: Standard Normal curve marking the area .03438 to the left of -1.82 and the area .31918 to the left of -0.47.]

Now, we see that in order to find the probability of falling in between -1.82 and -0.47, i.e. Pr(-1.82 ≤ Z ≤ -0.47), we need to subtract .03438 from .31918, so the result is

Pr(-1.82 ≤ Z ≤ -0.47) = .31918 - .03438 = .28480

A General Rule for Using Table 2

If we want to find Pr(a ≤ Z ≤ b) where a and b are any numbers with a < b, we have the following relationship:

Pr(a ≤ Z ≤ b) = Pr(Z ≤ b) - Pr(Z ≤ a), or, equivalently, Pr(a < Z < b) = Pr(Z < b) - Pr(Z < a).

Scenario 6. Pr (e < Z < f) = Pr (e < Z ≤ f) = Pr (e ≤ Z < f) = Pr (e ≤ Z ≤ f) where e is negative and f is a positive number.

Suppose we want to find Pr(-2.33 < Z < +1.96). First, we use Table 2 to get the values:

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| -2.30 | .01072 | .01044 | .01017 | .00990 | .00964 | .00939 | .00914 | .00889 | .00866 | .00842 |
| 1.90 | .97128 | .97193 | .97257 | .97320 | .97381 | .97441 | .97500 | .97558 | .97615 | .97670 |

From the General Rule above, we see that

Pr(-2.33 < Z < 1.96) = Pr(Z < 1.96) - Pr(Z < -2.33) = .97500 - .00990 = .96510

So there’s the answer. Just in case you’re not convinced that the rule works, here’s the graphical evidence:

[Figure: Standard Normal curve marking the area .00990 to the left of -2.33 and the area .97500 to the left of 1.96.]

Again, the shaded double arrow indicates the area we need, and we obtain the value by subtracting .00990 from .97500 to get .96510.

Scenario 7. Pr (e < Z < f) = Pr (e < Z ≤ f) = Pr (e ≤ Z < f) = Pr (e ≤ Z ≤ f) where e and f are both positive numbers.

Suppose we want to find Pr(0.93 < Z < 2.08). We first pull the appropriate Table 2 values.

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.90 | .81594 | .81859 | .82121 | .82381 | .82639 | .82894 | .83147 | .83398 | .83646 | .83891 |
| 2.00 | .97725 | .97778 | .97831 | .97882 | .97932 | .97982 | .98030 | .98077 | .98124 | .98169 |

From the General Rule above, we see that

Pr(0.93 < Z < 2.08) = Pr(Z < 2.08) - Pr(Z < 0.93) = .98124 - .82381 = .15743

Example 4.4. Using the Standard Normal Distribution Table (Table 2)

Let Z be a standard Normal random variable. Find

• k so that Pr(0 ≤ Z ≤ k) = .40

[Figure: Standard Normal curve with area .500 to the left of 0 and area .400 between 0 and k.]

Since Pr(Z ≤ k) = .500 + .400 = .900, to find the Z score that cuts off the data as above, we look in the body of Table 2 for the closest value to .90000, then use the Z score associated with that value. Here, we have Pr(Z < 1.28) = .89973 and Pr(Z < 1.29) = .90147. Thus, k = 1.28 (we usually don’t bother to interpolate…)

• j so that Pr(-j ≤ Z ≤ j) = .60

[Figure: Standard Normal curve with area .30000 between -j and 0 and area .30000 between 0 and j.]

To find the Z score that cuts off the data as above, we look in the body of Table 2 for the closest value to .80000 (why?), then use the Z score associated with that value. We have Pr(Z < 0.84) = .79955 and Pr(Z < 0.85) = .80234. Thus, j = 0.84, since it is closer to the desired probability.

Standardizing the Normal Distribution

By using the transformation formula

$$Z = \frac{X - \mu_X}{\sigma_X}$$

any Normal random variable X (with mean µ_X and standard deviation σ_X) is converted to a standardized Normal random variable Z, which is Normal with mean 0 and standard deviation 1. Thus, we only need one table to find probabilities for all Normal random variables.

For a given value of X, the corresponding value of Z, sometimes called a z-score, is the number of standard deviations σ_X that X lies away from µ_X.

Example 4.5. Using Table 2 for Unstandardized Normal probabilities

If X is a Normal random variable with µ = 100 and σ = 20, an X value of 130 lies 1.5 standard deviations above (to the right of) the mean µ and the corresponding z-score is 1.50. An X value of 85 is .75 standard deviations below the mean since

$$z = \frac{85 - 100}{20} = -0.75$$

1. Find Pr(85 ≤ X ≤ 130).

$$\Pr(85 \le X \le 130) = \Pr\left(\frac{85 - 100}{20} \le Z \le \frac{130 - 100}{20}\right) = \Pr(-0.75 \le Z \le 1.50)$$

So we now need to find the sum of areas A and B in the following picture of the Standard Normal (Z) distribution:

[Figure: Standard Normal curve with Area B between -0.75 and 0 and Area A between 0 and 1.50.]

From our general rule, Pr(in Area A or Area B) = Pr(Z < 1.50) - Pr(Z < -0.75). From Table 2, we have Pr(Z < 1.50) = .93319 and Pr(Z < -0.75) = .22663. So the difference (and our answer) is .93319 - .22663 = .70656.

2. Find Pr(80 ≤ X).

$$\Pr(80 \le X) = \Pr\left(\frac{80 - 100}{20} \le Z\right) = \Pr(-2.00 \le Z)$$

So we need to find the area marked by the shaded arrow in the following picture:

[Figure: Standard Normal curve with area .02275 to the left of -2.00; the shaded arrow marks the area to the right of -2.00.]

We know that Pr(Z ≤ -2.00) = .02275 from Table 2, and so our area is 1 - .02275 = .97725.

Example 4.6. Mensa and Standardized Test Scores

The Stanford-Binet IQ test is standardized so that the IQ scores of the population will follow a Normal distribution with mean 100, and standard deviation 16. Mensa is an organization that only allows people to join if their IQs are in the top 2% of the population.

a. What is the lowest Stanford-Binet IQ that still makes one eligible to join Mensa?

Let X be IQ, then X has a Normal distribution with µ = 100 and σ = 16. We need to find k so that Pr(X > k) = .02. Converting to Z scores, we have

$$\Pr\left(Z > \frac{k - 100}{16}\right) = .02$$

Now, Pr(Z > 2.05) ≈ .02, since Pr(Z < 2.05) ≈ .98. Thus,

$$\frac{k - 100}{16} = 2.05, \quad \text{so } k = 132.8$$

b. According to the model, what percentage of the population score below 84 on the Stanford-Binet measure?

Let X be IQ, then X has a Normal distribution with µ = 100 and σ = 16. The question asks for the probability of a score below 84, i.e. Pr(X < 84). Converting our probability statement to Z scores, we obtain

$$\Pr(X < 84) = \Pr\left(Z < \frac{84 - 100}{16}\right) = \Pr(Z < -1.00)$$

From Table 2, Pr(Z < -1.00) = .15866. So a bit less than 16% of the population score below 84. Note the similarity to the empirical rule in this setting. Since roughly 68% of the scores should be within one standard deviation of the mean, 32% should be outside that range, half of which (or 16%) will be below it, and half (or 16%) above it.

c. Mensa also allows members to qualify on the basis of certain standard tests. The GRE is standardized to have a Normal population distribution with mean 497, and standard deviation 115. If you were to try to qualify on the basis of the GRE exam, what score would you need?

Let Y be GRE, then Y has a Normal distribution with µ = 497 and σ = 115. Again we must find k so that Pr(Y > k) = .02. Converting, we have

$$\Pr\left(Z > \frac{k - 497}{115}\right) = .02$$

From Table 2, Pr(Z > 2.05) ≈ .02, since Pr(Z < 2.05) ≈ .98. Thus,

$$\frac{k - 497}{115} = 2.05, \quad \text{so } k = 732.75$$

Example 4.7. Tele-Evangelist Collections²

Records for the past several years show that the amount of money collected daily by a prominent tele-evangelist is Normally distributed with a mean (µ) of $2000 and a standard deviation (σ) of $500.

1. What is the chance that tomorrow’s donations will be less than $1500?
2. What is the probability that tomorrow’s donations will be between $2000 and $3000?
3. What is the chance that tomorrow’s donations will exceed $3000?

² Larsen, Marx and Cooil, Question 6.3.1.

Approximating a Binomial with the Normal Distribution

The Normal distribution may be used to approximate probabilities for many other distributions. For instance, we can approximate the Binomial distribution by a Normal distribution for certain values of n and π. The approximation works well as long as both nπ and n(1 - π) are greater than 5.

The basic idea is to pretend that a Binomial random variable Y has a Normal distribution, using the Binomial mean and standard deviation:

$$\mu = n\pi, \qquad \sigma = \sqrt{n\pi(1-\pi)}$$

For example, we can treat a Binomial random variable with n = 400 and π = .20 as approximately normally distributed with µ = 400(.20) = 80 and σ² = 400(.20)(.80) = 64, so σ = 8. To approximate Pr(Y > 96), we use Table 2 to obtain

$$P(Y > 96) = P\left(Z > \frac{96 - 80}{8}\right) = P(Z > 2.00) = .0228$$

Example 4.8. Advertising Campaign

An advertising campaign for a new product is targeted to make 20% of the adult population in a metropolitan area aware of the product. After the campaign, a random sample of 400 adults in the metropolitan area is obtained.

a. Find the approximate probability that 57 or fewer adults in the sample are aware of the product.
X, the number of adults aware of the product, follows a Binomial distribution with n = 400, and π = .20 (assuming that the ad campaign worked). Thus, E(X) = µ = (400)(.2) = 80.0, and

$$SD(X) = \sigma = \sqrt{400(.2)(.8)} = 8$$

We use a Normal approximation for X, and we want to find Pr(X ≤ 57). Converting to Z scores,

$$\Pr(X \le 57) = \Pr\left(Z \le \frac{57 - 80}{8}\right) = \Pr(Z \le -2.88) = .00199$$

b. Should the approximation in part a. be accurate?

Sure. nπ = (400)(.20) = 80.0, and n(1-π) = (400)(.80) = 320.0, both much larger than 5.

c. The sample showed that 57 adults were aware of the product. The marketing manager argues that the low awareness rate is a random fluke of the particular sample. Based on your answer to part a., do you agree?

No. The calculation in part a. shows that the probability of a result this bad (low) in terms of awareness is only about .002 if the ad campaign really has met its target.

Example 4.9. Dear Abby Example³

Dear Abby, You wrote in your column that a woman is pregnant for 266 days. Who said so? I carried my baby for ten months and five days, and there is no doubt about it because I know the exact date my baby was conceived. My husband is in the Navy and it couldn’t have possibly been conceived any other time because I saw him only once for an hour, and I didn’t see him again until the day before the baby was born. I don’t drink or run around, and there is no way this baby isn’t his, so please print a retraction about the 266-day carrying time because otherwise I am in a lot of trouble.

San Diego Reader

Whether or not San Diego Reader is telling the truth is a judgment that lies beyond the scope of any statistical analysis (other than maybe a paternity test!), but quantifying the plausibility of her story doesn’t. According to the collective experience of generations of pediatricians, pregnancy durations, Y, tend to be Normally distributed with µ = 266 days and σ = 16 days. What do these figures imply about San Diego Reader’s credibility?
Do you believe her? Consider both a Normal probability model, as well as the Excel simulation results which follow…

³ Larsen, Marx and Cooil, Question 5.3.12.

Simulation of 100 pregnancy durations using Excel’s Data Analysis Tool for Random Number Generation (Normal Distribution, with mean µ = 266 days, and σ = 16 days).

100 Simulated Pregnancy Durations from Excel (sorted)

| | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| 234.37 | 238.40 | 239.88 | 241.02 | 241.50 | 241.90 | 243.72 | 244.89 | 246.35 | 246.47 |
| 248.95 | 249.61 | 251.61 | 251.96 | 251.98 | 252.28 | 252.55 | 252.92 | 252.98 | 253.06 |
| 253.78 | 254.35 | 254.41 | 254.63 | 255.94 | 255.95 | 257.14 | 257.58 | 258.54 | 259.32 |
| 260.01 | 260.07 | 260.70 | 261.12 | 261.14 | 261.39 | 261.86 | 262.03 | 262.11 | 262.49 |
| 262.65 | 262.66 | 262.92 | 263.20 | 263.51 | 263.67 | 263.91 | 263.94 | 263.95 | 264.04 |
| 264.22 | 265.86 | 266.27 | 266.37 | 266.46 | 268.45 | 268.86 | 268.86 | 269.58 | 269.68 |
| 270.79 | 271.03 | 271.03 | 271.32 | 271.65 | 271.73 | 272.01 | 272.42 | 272.63 | 273.06 |
| 273.22 | 273.61 | 275.10 | 275.35 | 275.81 | 275.99 | 277.59 | 277.83 | 279.57 | 279.77 |
| 281.26 | 281.34 | 281.86 | 282.10 | 282.24 | 282.63 | 283.17 | 284.63 | 285.19 | 286.08 |
| 288.31 | 288.54 | 289.86 | 289.92 | 291.44 | 293.01 | 294.09 | 294.52 | 294.75 | 306.03 |

Example 4.10. ESP and The Normal Approximation

Research in extrasensory perception has ranged from the slightly unconventional to the downright bizarre. Beginning around 1910, though, experimenters moved out of seance parlors and into laboratories, where they began setting up controlled studies that could be analyzed statistically. In 1938, Pratt and Woodruff designed an experiment that became a prototype for an entire generation of ESP research.

The investigator and a subject sat at opposite ends of a table. Between them was a partition with a large gap at the bottom. Five blank cards, visible to both participants, were placed side by side on the table beneath the partition.
On the subject’s side of the partition, one of the standard ESP symbols (square, wavy lines, circle, star and plus) was hung over each of the blank cards. The experimenter shuffled a deck of ESP cards, looked at the top one, and concentrated. The subject tried to guess the card. If (s)he thought it was a circle, (s)he would point to the blank card on the table that was beneath the circle card hanging on the subject’s side of the partition. The procedure was then repeated. Altogether, 32 subjects, all students, took part in the study. They made 60,000 guesses and were correct 12,489 times. What can we deduce from these numbers? Have Pratt and Woodruff presented convincing evidence in favor of ESP, or should 12,489 right answers out of 60,000 attempts be considered inconclusive?

Let X be the number of correct guesses. If the subjects are merely guessing among the five symbols, X follows a Binomial distribution, with n = 60,000 and π = .20. Since we don’t have a Binomial table for n = 60,000, we’ll use a Normal approximation. It should work well since nπ = (60,000)(.20) = 12,000, and n(1-π) = (60,000)(.80) = 48,000 are both much larger than 5. To use the Normal approximation, we need the mean and standard deviation of X. In this case, we have µ = nπ = (60,000)(.20) = 12,000, and σ = √[(60000)(.2)(.8)] = 97.98.

Fundamental Ideas From The Fourth Session

The Binomial Probability Distribution
• Bernoulli Trials
  • There is a set of n dichotomous (0/1 or “success” / “failure”) trials.
  • The probability of a success, π, is constant over the n trials.
  • The outcome of a trial is independent of the outcome for any other trial.
• Binomial r.v. X = number of “successes” in n Bernoulli trials, each with prob. π: Mean µX = E[X] = nπ, and SD σX = √[nπ(1 − π)]

Using Excel to find Binomial Probabilities (See Bulkpack Chapter 4)
• If X follows a Binomial distribution with parameters n and π, to find Pr(X = k), enter =BINOMDIST(k, n, π, 0)
• If X follows a Binomial distribution with parameters n and π, to find Pr(X ≤ k), enter =BINOMDIST(k, n, π, 1)

Using Table 1 to find Binomial Probabilities
• n = 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 15, 20, 25, 30, 50 and 100, and π = .05, .10, .15, …, .90, .95

The Normal Probability Distribution
• Crucial properties
  • Bell-shaped and symmetrical distribution.
  • Mean, Median and Mode all identical.
  • Empirical Rule holds exactly.
  • Completely specified by Mean µ and Standard Deviation σ
• Applies in many settings, thanks mainly to Central Limit Theorem (to come), and to use of Normal approximation to other distributions (like the Binomial).

The Standard Normal Distribution
• Standard Normal random variable Z has mean µZ = 0 and standard deviation σZ = 1.
• Table 2 gives the Standard Normal distribution
  • Table 2 gives cumulative probabilities Pr(Z ≤ z) for values of z from -3.09 upward.
• Z scores convert Normal random variables to Standard Normal random variables: Z = (X − µX) / σX

Using the Normal Distribution to Approximate Other Distributions
Find the mean µ and standard deviation σ of the desired distribution, then use Normal probabilities with these parameters to approximate.

Six Probability Model Exercises (Binomial & Normal Models)
Answer Sketches follow the exercises

1. [Keller, Warrack and Bartel, p. 182] A warehouse engages in acceptance sampling to determine if it will accept or reject incoming lots of designer sunglasses, some of which invariably are defective.
Specifically, the warehouse has a policy of examining a sample of 50 sunglasses from each lot and of accepting the lot only if the sample contains no more than four defective pairs. What is the probability of a lot’s being accepted if, in fact, 5% of the sunglasses in the lot are defective?

2. [KWB, p. 178] A student majoring in accounting is trying to decide upon the number of firms to which she should apply. Given her work experience, grades, and extracurricular activities, she has been told by a placement counselor that she can expect to receive a job offer from 80% of the firms to which she applies. Wanting to save time, the student applies to only five firms.
a) Determine the probability that the student receives no offers.
b) What is the probability of five offers?
c) What is the chance of at most two offers?

3. [KWB, p. 202] A firm’s marketing manager believes that total sales for the firm can be modeled by using a Normal distribution, with a mean of $2.5 million and a standard deviation of $300,000.
a) What is the probability that the firm’s sales will exceed $3 Million?
b) What is the probability that the firm’s sales will fall within $150,000 of the expected level of sales?
c) In order to cover fixed costs, the firm’s sales must exceed the break-even level of $1.8 million. What is the probability that sales will exceed the break-even level?
d) Find the sales level that has only a 9% chance of being exceeded next year.

4. [KWB, p. 202] Empirical studies have provided support for the belief that a common stock’s annual rate of return is approximately Normally distributed. Suppose you have invested in the stock of a company for which the annual return has an expected value of 16% and a standard deviation of 10%.
a) Find the probability that your one-year return will exceed 30%.
b) Find the probability that your one-year return will be negative.
c) Suppose this company embarks on a new high-risk, but potentially highly profitable venture. As a result, the return on the stock now has an expected value of 25% and a standard deviation of 20%. Answer parts (a) and (b) in light of the revised estimates regarding the stock’s return.
d) As an investor, would you approve of the company’s decision to embark on the new venture?

5. [Carlson & Thorne, p. 218] A building owner purchases four new refrigerators, which are guaranteed by the manufacturer. The probability that each refrigerator is defective is 0.20. Define the random variable X = total number of defective refrigerators.
a) Construct the probability distribution of X.
b) What are the mean and variance of X ?
c) The cost of repair, to honor the guarantee, consists of a fixed fee of $40 plus a cost of $60 to repair each defective refrigerator. What is the average cost to repair these four refrigerators? Note that the fixed fee of $40 is incurred only if there is at least one defective refrigerator.

6. It is believed by certain parapsychologists that hypnosis can bring out a person’s ESP ability. To test their theory, they hypnotize 15 volunteers and ask each to make 100 guesses of ESP cards under conditions much like those of Pratt and Woodruff described in Example 4.F in the Lecture Notes. A total of X = 326 correct responses were recorded. To what extent does the 326 support the hypnosis hypothesis? To answer the question, compute Pr(X ≥ 326).

Six Probability Model Exercises: Answer Sketches

1. We have to find the probability of accepting a lot when in fact 5% of the sunglasses in the lot are defective. We accept the lot if a sample of n = 50 sunglasses produces no more than 4 defective pairs.
If we take a random sample from the lot, we should be able to assume independence of sunglasses and a constant probability of defects. Thus, we have a Binomial experiment. Our random variable is X = the number of defective sunglasses in our sample from the lot. So we know that X follows a Binomial distribution with n = 50 and π = .05, and we want to find the probability that X is no more than four. Again, we can use Table 1.
Pr(X is no more than 4) = Pr(X ≤ 4) = Pr(X=0) + Pr(X=1) + Pr(X=2) + Pr(X=3) + Pr(X=4) = .0769 + .2025 + .2611 + .2199 + .1360 = .8964
Or, we could use Excel’s BINOMDIST function. In this case, we’d want the cumulative (“less than or equal to”) for X = 4 in a Binomial with n = 50 trials, and probability of success π = .05, so we use =BINOMDIST(4,50,0.05,1) and obtain the value 0.896383.

2. The number of firms making offers, X, follows a Binomial distribution with n = 5, and π = .80. So the probability that the student receives no offers is Pr(X = 0) = .0003, according to Table 1. The probability of five offers is Pr(X = 5) = .3277, and the probability of at most two offers is Pr(X is at most 2) = Pr(X ≤ 2) = Pr(X = 0) + Pr(X = 1) + Pr(X = 2) = .0003 + .0064 + .0512 = .0579.

3. Sales, Y, can be modeled with a Normal distribution with µ = $2,500,000 and σ = $300,000.
a) Pr(Y > $3,000,000) = Pr(Z > [3,000,000 − 2,500,000]/300,000) = Pr(Z > 1.67). From Table 2, we have Pr(Z < 1.67) = .95254, so Pr(Z > 1.67) = 1 - .95254 = .04746
b) To fall within 150,000 of the expected value, 2,500,000, we must find Pr(2,350,000 < Y < 2,650,000) = Pr([2,350,000 − 2,500,000]/300,000 < Z < [2,650,000 − 2,500,000]/300,000) = Pr(-0.50 < Z < 0.50). From Table 2, we have Pr(Z < .50) = .69146 and Pr(Z < -.50) = .30854, so Pr(2,350,000 < Y < 2,650,000) = Pr(Z < 0.50) – Pr(Z < -0.50) = .69146 - .30854 = .38292
c) Pr(Y > 1,800,000) = Pr(Z > [1,800,000 − 2,500,000]/300,000) = Pr(Z > -2.33). From Table 2, we have Pr(Z < -2.33) = .00990. So Pr(Y > 1,800,000) = Pr(Z > -2.33) = 1 - .00990 = .99010
d) We want to find the value x such that Pr(Y > x) = .0900. To do this, we first note that this means that we have Pr(Z > [x − 2,500,000]/300,000) = .09. Now, the value from Table 2 that cuts off probability .09 in the upper tail of the standard Normal distribution is the value that cuts off probability .91 in the lower tail (why?). This value is approximately Z = 1.34, since Pr(Z < 1.34) = .90988, so that Pr(Z > 1.34) = .09012. Thus, we need to solve the following equation for x: [x − 2,500,000]/300,000 = 1.34. So we have x - 2,500,000 = 402,000 and so x = $2,902,000, which is the sales level that has only a 9% chance of being exceeded next year.

4. Y, annual rate of return (in percent), follows a Normal distribution with mean 16 and standard deviation 10.
a) Pr(Y > 30) = Pr(Z > [30-16]/10) = Pr(Z > 1.40). From Table 2, Pr(Z < 1.40) = .91924, so Pr(Y > 30) = Pr(Z > 1.40) = 1 - .91924 = .08076
b) A negative return means Y < 0. Pr(Y < 0) = Pr(Z < [0-16]/10) = Pr(Z < -1.60). From Table 2, Pr(Z < -1.60) = .05480, so Pr(Y < 0) = .05480
c) [Revised part a] Pr(Y > 30) = Pr(Z > [30-25]/20) = Pr(Z > 0.25). From Table 2, Pr(Z < 0.25) = .59871, so Pr(Y > 30) = Pr(Z > 0.25) = 1 - .59871 = .40129. [Revised part b] Pr(Y < 0) = Pr(Z < [0-25]/20) = Pr(Z < -1.25). From Table 2, Pr(Z < -1.25) = .10565, so Pr(Y < 0) = .10565.
d) According to these calculations, the chance of earning 30% or more is increased (from about .08 to about .40), as is the chance of a loss (from about .05 to about .11). Thus, whether you support the decision depends on how risk-averse you are. If you are very risk-averse, you won’t support it. If you are not, you might support it.
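The Normal-model calculations for Exercises 3 and 4 can also be cross-checked in software. The following is a minimal sketch in Python, which is not part of the original notes; it assumes the standard library's statistics.NormalDist class, and the names upper_tail, sales, before and after are illustrative choices. It computes the upper-tail probability Pr(Y > 3,000,000) for Exercise 3a and the lower-tail probability Pr(Y < 0) for a negative return in Exercise 4b. Because it uses exact Z scores rather than Z rounded to two decimals, its results can differ from table-based values in the third or fourth decimal place.

```python
from statistics import NormalDist


def upper_tail(dist, x):
    """Return Pr(Y > x) for a Normal random variable Y with distribution dist."""
    return 1.0 - dist.cdf(x)


# Exercise 3: sales Y ~ Normal(mean = 2,500,000, SD = 300,000)
sales = NormalDist(mu=2_500_000, sigma=300_000)
p3a = upper_tail(sales, 3_000_000)                  # Pr(Y > 3,000,000)
p3b = sales.cdf(2_650_000) - sales.cdf(2_350_000)   # within 150,000 of the mean
p3c = upper_tail(sales, 1_800_000)                  # Pr(Y > break-even level)
x3d = sales.inv_cdf(0.91)                           # level exceeded with probability .09

# Exercise 4: annual return (percent), before and after the new venture
before = NormalDist(mu=16, sigma=10)
after = NormalDist(mu=25, sigma=20)
p4a = upper_tail(before, 30)      # Pr(return > 30%)
p4b = before.cdf(0)               # Pr(return < 0%), i.e. a loss
p4a_new = upper_tail(after, 30)   # revised Pr(return > 30%)
p4b_new = after.cdf(0)            # revised Pr(return < 0%)

print(f"3a: {p3a:.5f}  3b: {p3b:.5f}  3c: {p3c:.5f}  3d: {x3d:,.0f}")
print(f"4a: {p4a:.5f}  4b: {p4b:.5f}")
print(f"4c: {p4a_new:.5f} (exceed 30%), {p4b_new:.5f} (negative)")
```

In Excel, the corresponding cumulative Normal probability is usually obtained with NORMDIST(x, mean, sd, 1); that function name is an assumption about the reader's Excel version rather than something stated in these notes.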
5. X follows a Binomial distribution with n = 4 and π = .20
a) X, from Table 1, has the following probability distribution: Pr(X = 0) = .4096, Pr(X = 1) = .4096, Pr(X = 2) = .1536, Pr(X = 3) = .0256, Pr(X = 4) = .0016
b) E(X) = nπ = (4)(.2) = 0.8, and Var(X) = n π (1 - π) = 4(.2)(.8) = 0.64
c) We can either slog this out directly, or use the fact that Variable Cost = 60X, so E(Variable Cost) = E(60X) = 60E(X) = 60(.8) = $48. Also, note that Fixed Cost = 40 if X ≥ 1. Pr(X ≥ 1) = 1 - Pr(X = 0) = .5904 so the expected fixed cost is 40(.5904) = $23.62. Thus, the expected total cost should be 23.62 + 48 = $71.62

6. X follows a Binomial distribution, with n = 1500 and π = .20. The Normal approximation should work well since nπ = (1500)(.20) = 300, and n(1-π) = (1500)(.80) = 1200 are both much larger than 5. Here, we have mean µ = nπ = (1500)(.20) = 300, and standard deviation σ = √[(1500)(.2)(.8)] = 15.49. Converting our probability statement to Z scores, we have Pr(X ≥ 326) = Pr(Z ≥ [326 − 300]/15.49) = Pr(Z ≥ 1.68) = 1 - .95352 = .04648. So the chances of observing a result this strongly or more in favor of the hypnosis hypothesis are just a bit less than 1 in 20.

TABLE 1 – The Binomial Probability Distribution
Tabulated Values are Pr(X = k) [Values are rounded to four decimal places.]
Examples: For n = 2, π = .35, Pr(X = 0) = .4225; For n = 3, π = .60, Pr(X = 1) = .2880 n=2 k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 .9025 .0950 .0025 .8100 .1800 .0100 .7225 .2550 .0225 .6400 .3200 .0400 .5625 .3750 .0625 .4900 .4200 .0900 .4225 .4550 .1225 .3600 .4800 .1600 .3025 .4950 .2025 .2500 .5000 .2500 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 .8574 .1354 .0071 .0001 .7290 .2430 .0270 .0010 .6141 .3251 .0574 .0034 .5120 .3840 .0960 .0080 .4219 .4219 .1406 .0156 .3430 .4410 .1890 .0270 .2746 .4436 .2389 .0429 .2160 .4320 .2880 .0640 .1664 .4084 .3341 .0911 .1250 .3750 .3750 .1250 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 .8145 .1715 .0135 .0005 .0000 .6561 .2916 .0486 .0036 .0001 .5220 .3685 .0975 .0115 .0005 .4096 .4096 .1536 .0256 .0016 .3164 .4219 .2109 .0469 .0039 .2401 .4116 .2646 .0756 .0081 .1785 .3845 .3105 .1115 .0150 .1296 .3456 .3456 .1536 .0256 .0915 .2995 .3675 .2005 .0410 .0625 .2500 .3750 .2500 .0625 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 .7738 .2036 .0214 .0011 .0000 .0000 .5905 .3281 .0729 .0081 .0005 .0000 .4437 .3915 .1382 .0244 .0022 .0001 .3277 .4096 .2048 .0512 .0064 .0003 .2373 .3955 .2637 .0879 .0146 .0010 .1681 .3602 .3087 .1323 .0284 .0024 .1160 .3124 .3364 .1811 .0488 .0053 .0778 .2592 .3456 .2304 .0768 .0102 .0503 .2059 .3369 .2757 .1128 .0185 .0313 .1563 .3125 .3125 .1563 .0313 5 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 6 .7351 .2321 .0305 .0021 .0001 .0000 .0000 .5314 .3543 .0984 .0146 .0012 .0001 .0000 .3771 .3993 .1762 .0415 .0055 .0004 .0000 .2621 .3932 .2458 .0819 .0154 .0015 .0001 .1780 .3560 .2966 .1318 .0330 .0044 .0002 .1176 .3025 .3241 .1852 .0595 .0102 .0007 .0754 .2437 .3280 .2355 .0951 .0205 .0018 .0467 .1866 .3110 .2765 .1382 .0369 .0041 
.0277 .1359 .2780 .3032 .1861 .0609 .0083 .0156 .0938 .2344 .3125 .2344 .0938 .0156 6 5 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ n=3 n=4 n=5 n=6 Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 135 Statistics Pre-Term Program – Chapter Four Some Special Probability Models TABLE 1 – The Binomial Probability Distribution Tabulated Values are Pr(X = k) [Values are rounded to four decimal places.] Examples: For n = 7, π = .15, Pr(X = 2) = .2097; For n = 8, π = .55, Pr(X = 3) = .1719 n=7 k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 6 7 .6983 .2573 .0406 .0036 .0002 .0000 .0000 .0000 .4783 .3720 .1240 .0230 .0026 .0002 .0000 .0000 .3206 .3960 .2097 .0617 .0109 .0012 .0001 .0000 .2097 .3670 .2753 .1147 .0287 .0043 .0004 .0000 .1335 .3115 .3115 .1730 .0577 .0115 .0013 .0001 .0824 .2471 .3177 .2269 .0972 .0250 .0036 .0002 .0490 .1848 .2985 .2679 .1442 .0466 .0084 .0006 .0280 .1306 .2613 .2903 .1935 .0774 .0172 .0016 .0152 .0872 .2140 .2918 .2388 .1172 .0320 .0037 .0078 .0547 .1641 .2734 .2734 .1641 .0547 .0078 7 6 5 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 6 7 8 .6634 .2793 .0515 .0054 .0004 .0000 .0000 .0000 .0000 .4305 .3826 .1488 .0331 .0046 .0004 .0000 .0000 .0000 .2725 .3847 .2376 .0839 .0185 .0026 .0002 .0000 .0000 .1678 .3355 .2936 .1468 .0459 .0092 .0011 .0001 .0000 .1001 .2670 .3115 .2076 .0865 .0231 .0038 .0004 .0000 .0576 .1977 .2965 .2541 .1361 .0467 .0100 .0012 .0001 .0319 .1373 .2587 .2786 .1875 .0808 .0217 .0033 .0002 .0168 .0896 .2090 .2787 .2322 .1239 .0413 .0079 .0007 .0084 .0548 .1569 .2568 .2627 .1719 .0703 .0164 .0017 .0039 .0313 .1094 .2188 .2734 .2188 .1094 .0313 .0039 8 7 6 5 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 6 7 8 9 .6302 .2985 .0629 .0077 .0006 .0000 .0000 .0000 .0000 .0000 .3874 .3874 .1722 .0446 .0074 .0008 .0001 .0000 .0000 .0000 .2316 .3679 .2597 .1069 
.0283 .0050 .0006 .0000 .0000 .0000 .1342 .3020 .3020 .1762 .0661 .0165 .0028 .0003 .0000 .0000 .0751 .2253 .3003 .2336 .1168 .0389 .0087 .0012 .0001 .0000 .0404 .1556 .2668 .2668 .1715 .0735 .0210 .0039 .0004 .0000 .0207 .1004 .2162 .2716 .2194 .1181 .0424 .0098 .0013 .0001 .0101 .0605 .1612 .2508 .2508 .1672 .0743 .0212 .0035 .0003 .0046 .0339 .1110 .2119 .2600 .2128 .1160 .0407 .0083 .0008 .0020 .0176 .0703 .1641 .2461 .2461 .1641 .0703 .0176 .0020 9 8 7 6 5 4 3 2 1 0 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 k↑ n=8 n=9 Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 136 Statistics Pre-Term Program – Chapter Four Some Special Probability Models TABLE 1 – The Binomial Probability Distribution Tabulated Values are Pr(X = k) [Values are rounded to four decimal places.] Examples: For n = 10, π = .20, Pr(X = 1) = .2684; For n = 11, π = .75, Pr(X = 4) = .0064 n = 10 k↓ 0 1 2 3 4 5 6 7 8 9 10 π = .05 .5987 .3151 .0746 .0105 .0010 .0001 .0000 .0000 .0000 .0000 .0000 .95 .10 .3487 .3874 .1937 .0574 .0112 .0015 .0001 .0000 .0000 .0000 .0000 .90 .15 .1969 .3474 .2759 .1298 .0401 .0085 .0012 .0001 .0000 .0000 .0000 .85 .20 .1074 .2684 .3020 .2013 .0881 .0264 .0055 .0008 .0001 .0000 .0000 .80 .25 .0563 .1877 .2816 .2503 .1460 .0584 .0162 .0031 .0004 .0000 .0000 .75 .30 .0282 .1211 .2335 .2668 .2001 .1029 .0368 .0090 .0014 .0001 .0000 .70 .35 .0135 .0725 .1757 .2522 .2377 .1536 .0689 .0212 .0043 .0005 .0000 .65 .40 .0060 .0403 .1209 .2150 .2508 .2007 .1115 .0425 .0106 .0016 .0001 .60 .45 .0025 .0207 .0763 .1665 .2384 .2340 .1596 .0746 .0229 .0042 .0003 .55 .50 .0010 .0098 .0439 .1172 .2051 .2461 .2051 .1172 .0439 .0098 .0010 .50 10 9 8 7 6 5 4 3 2 1 0 k↑ π = .05 .5688 .3293 .0867 .0137 .0014 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .3138 .3835 .2131 .0710 .0158 .0025 .0003 .0000 .0000 .0000 .0000 .0000 .90 .15 .1673 .3248 .2866 .1517 .0536 .0132 .0023 .0003 .0000 .0000 .0000 .0000 .85 .20 .0859 .2362 .2953 .2215 .1107 .0388 .0097 .0017 .0002 .0000 
.0000 .0000 .80 .25 .0422 .1549 .2581 .2581 .1721 .0803 .0268 .0064 .0011 .0001 .0000 .0000 .75 .30 .0198 .0932 .1998 .2568 .2201 .1321 .0566 .0173 .0037 .0005 .0000 .0000 .70 .35 .0088 .0518 .1395 .2254 .2428 .1830 .0985 .0379 .0102 .0018 .0002 .0000 .65 .40 .0036 .0266 .0887 .1774 .2365 .2207 .1471 .0701 .0234 .0052 .0007 .0000 .60 .45 .0014 .0125 .0513 .1259 .2060 .2360 .1931 .1128 .0462 .0126 .0021 .0002 .55 .50 .0005 .0054 .0269 .0806 .1611 .2256 .2256 .1611 .0806 .0269 .0054 .0005 .50 11 10 9 8 7 6 5 4 3 2 1 0 k↑ π = .05 .5404 .3413 .0988 .0173 .0021 .0002 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .2824 .3766 .2301 .0852 .0213 .0038 .0005 .0000 .0000 .0000 .0000 .0000 .0000 .90 .15 .1422 .3012 .2924 .1720 .0683 .0193 .0040 .0006 .0001 .0000 .0000 .0000 .0000 .85 .20 .0687 .2062 .2835 .2362 .1329 .0532 .0155 .0033 .0005 .0001 .0000 .0000 .0000 .80 .25 .0317 .1267 .2323 .2581 .1936 .1032 .0401 .0115 .0024 .0004 .0000 .0000 .0000 .75 .30 .0138 .0712 .1678 .2397 .2311 .1585 .0792 .0291 .0078 .0015 .0002 .0000 .0000 .70 .35 .0057 .0368 .1088 .1954 .2367 .2039 .1281 .0591 .0199 .0048 .0008 .0001 .0000 .65 .40 .0022 .0174 .0639 .1419 .2128 .2270 .1766 .1009 .0420 .0125 .0025 .0003 .0000 .60 .45 .0008 .0075 .0339 .0923 .1700 .2225 .2124 .1489 .0762 .0277 .0068 .0010 .0001 .55 .50 .0002 .0029 .0161 .0537 .1208 .1934 .2256 .1934 .1208 .0537 .0161 .0029 .0002 .50 12 11 10 9 8 7 6 5 4 3 2 1 0 k↑ n = 11 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 n = 12 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 12 Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 137 Statistics Pre-Term Program – Chapter Four Some Special Probability Models TABLE 1 – The Binomial Probability Distribution Tabulated Values are Pr(X = k) [Values are rounded to four decimal places.] 
Examples: For n = 15, π = .10, Pr(X = 6) = .0019; For n = 20, π = .85, Pr(X = 15) = .1028 n = 15 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 π = .05 .4633 .3658 .1348 .0307 .0049 .0006 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .2059 .3432 .2669 .1285 .0428 .0105 .0019 .0003 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .90 .15 .0874 .2312 .2856 .2184 .1156 .0449 .0132 .0030 .0005 .0001 .0000 .0000 .0000 .0000 .0000 .85 .20 .0352 .1319 .2309 .2501 .1876 .1032 .0430 .0138 .0035 .0007 .0001 .0000 .0000 .0000 .0000 .80 .25 .0134 .0668 .1559 .2252 .2252 .1651 .0917 .0393 .0131 .0034 .0007 .0001 .0000 .0000 .0000 .75 .30 .0047 .0305 .0916 .1700 .2186 .2061 .1472 .0811 .0348 .0116 .0030 .0006 .0001 .0000 .0000 .70 .35 .0016 .0126 .0476 .1110 .1792 .2123 .1906 .1319 .0710 .0298 .0096 .0024 .0004 .0001 .0000 .65 .40 .0005 .0047 .0219 .0634 .1268 .1859 .2066 .1771 .1181 .0612 .0245 .0074 .0016 .0003 .0000 .60 .45 .0001 .0016 .0090 .0318 .0780 .1404 .1914 .2013 .1647 .1048 .0515 .0191 .0052 .0010 .0001 .55 .50 .0000 .0005 .0032 .0139 .0417 .0916 .1527 .1964 .1964 .1527 .0916 .0417 .0139 .0032 .0005 .50 15 14 13 12 11 10 9 8 7 6 5 4 3 2 1 k↑ π = .05 .3585 .3774 .1887 .0596 .0133 .0022 .0003 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .1216 .2702 .2852 .1901 .0898 .0319 .0089 .0020 .0004 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .90 .15 .0388 .1368 .2293 .2428 .1821 .1028 .0454 .0160 .0046 .0011 .0002 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .85 .20 .0115 .0576 .1369 .2054 .2182 .1746 .1091 .0545 .0222 .0074 .0020 .0005 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .80 .25 .0032 .0211 .0669 .1339 .1897 .2023 .1686 .1124 .0609 .0271 .0099 .0030 .0008 .0002 .0000 .0000 .0000 .0000 .0000 .75 .30 .0008 .0068 .0278 .0716 .1304 .1789 .1916 .1643 .1144 .0654 .0308 .0120 .0039 .0010 .0002 .0000 .0000 .0000 .0000 .70 .35 .0002 .0020 .0100 .0323 .0738 .1272 .1712 .1844 .1614 .1158 .0686 .0336 .0136 .0045 .0012 .0003 .0000 
.0000 .0000 .65 .40 .0000 .0005 .0031 .0123 .0350 .0746 .1244 .1659 .1797 .1597 .1171 .0710 .0355 .0146 .0049 .0013 .0003 .0000 .0000 .60 .45 .0000 .0001 .0008 .0040 .0139 .0365 .0746 .1221 .1623 .1771 .1593 .1185 .0727 .0366 .0150 .0049 .0013 .0002 .0000 .55 .50 .0000 .0000 .0002 .0011 .0046 .0148 .0370 .0739 .1201 .1602 .1762 .1602 .1201 .0739 .0370 .0148 .0046 .0011 .0002 .50 20 19 18 17 16 15 14 13 12 11 10 9 8 7 6 5 4 3 2 k↑ n = 20 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 138 Statistics Pre-Term Program – Chapter Four Some Special Probability Models n = 25 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 π = .05 .2774 .3650 .2305 .0930 .0269 .0060 .0010 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .0718 .1994 .2659 .2265 .1384 .0646 .0239 .0072 .0018 .0004 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .90 .15 .0172 .0759 .1607 .2174 .2110 .1564 .0920 .0441 .0175 .0058 .0016 .0004 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .85 .20 .0038 .0236 .0708 .1358 .1867 .1960 .1633 .1108 .0623 .0294 .0118 .0040 .0012 .0003 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .80 .25 .0008 .0063 .0251 .0641 .1175 .1645 .1828 .1654 .1241 .0781 .0417 .0189 .0074 .0025 .0007 .0002 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .75 .30 .0001 .0014 .0074 .0243 .0572 .1030 .1472 .1712 .1651 .1336 .0916 .0536 .0268 .0115 .0042 .0013 .0004 .0001 .0000 .0000 .0000 .0000 .0000 .70 .35 .0000 .0003 .0018 .0076 .0224 .0506 .0908 .1327 .1607 .1635 .1409 .1034 .0650 .0350 .0161 .0064 .0021 .0006 .0001 .0000 .0000 .0000 .0000 .65 .40 .0000 .0000 .0004 .0019 .0071 .0199 .0442 .0800 .1200 .1511 .1612 .1465 .1140 .0760 .0434 .0212 .0088 .0031 .0009 .0002 .0000 .0000 .0000 .60 .45 .0000 .0000 .0001 .0004 .0018 .0063 .0172 .0381 .0701 .1084 .1419 .1583 .1511 .1236 .0867 .0520 .0266 .0115 .0042 
.0013 .0003 .0001 .0000 .55 .50 .0000 .0000 .0000 .0001 .0004 .0016 .0053 .0143 .0322 .0609 .0974 .1328 .1550 .1550 .1328 .0974 .0609 .0322 .0143 .0053 .0016 .0004 .0001 .50 25 24 23 22 21 20 19 18 17 16 15 14 13 12 11 10 9 8 7 6 5 4 3 k↑ π = .05 .2146 .3389 .2586 .1270 .0451 .0124 .0027 .0005 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .95 .10 .0424 .1413 .2277 .2361 .1771 .1023 .0474 .0180 .0058 .0016 .0004 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .90 .15 .0076 .0404 .1034 .1703 .2028 .1861 .1368 .0828 .0420 .0181 .0067 .0022 .0006 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .85 .20 .0012 .0093 .0337 .0785 .1325 .1723 .1795 .1538 .1106 .0676 .0355 .0161 .0064 .0022 .0007 .0002 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .80 .25 .0002 .0018 .0086 .0269 .0604 .1047 .1455 .1662 .1593 .1298 .0909 .0551 .0291 .0134 .0054 .0019 .0006 .0002 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .75 .30 .0000 .0003 .0018 .0072 .0208 .0464 .0829 .1219 .1501 .1573 .1416 .1103 .0749 .0444 .0231 .0106 .0042 .0015 .0005 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .70 .35 .0000 .0000 .0003 .0015 .0056 .0157 .0353 .0652 .1009 .1328 .1502 .1471 .1254 .0935 .0611 .0351 .0177 .0079 .0031 .0010 .0003 .0001 .0000 .0000 .0000 .0000 .65 .40 .0000 .0000 .0000 .0003 .0012 .0041 .0115 .0263 .0505 .0823 .1152 .1396 .1474 .1360 .1101 .0783 .0489 .0269 .0129 .0054 .0020 .0006 .0002 .0000 .0000 .0000 .60 .45 .0000 .0000 .0000 .0000 .0002 .0008 .0029 .0081 .0191 .0382 .0656 .0976 .1265 .1433 .1424 .1242 .0953 .0642 .0379 .0196 .0088 .0034 .0012 .0003 .0001 .0000 .55 .50 .0000 .0000 .0000 .0000 .0000 .0001 .0006 .0019 .0055 .0133 .0280 .0509 .0806 .1115 .1354 .1445 .1354 .1115 .0806 .0509 .0280 .0133 .0055 .0019 .0006 .0001 .50 30 29 28 27 26 25 24 23 22 21 20 19 18 17 16 15 14 13 12 11 10 9 8 7 6 5 k↑ n = 30 k↓ 0 1 2 3 4 5 6 7 8 9 10 11 12 
13 14 15 16 17 18 19 20 21 22 23 24 25 Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 139 Statistics Pre-Term Program – Chapter Four Some Special Probability Models TABLE 1 – The Binomial Probability Distribution n = 50 k↓ π= .05 .10 .15 .20 .25 .30 .35 .40 .45 .50 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 .0769 .2025 .2611 .2199 .1360 .0658 .0260 .0086 .0024 .0006 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0052 .0286 .0779 .1386 .1809 .1849 .1541 .1076 .0643 .0333 .0152 .0061 .0022 .0007 .0002 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0003 .0026 .0113 .0319 .0661 .1072 .1419 .1575 .1493 .1230 .0890 .0571 .0328 .0169 .0079 .0033 .0013 .0005 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0002 .0011 .0044 .0128 .0295 .0554 .0870 .1169 .1364 .1398 .1271 .1033 .0755 .0499 .0299 .0164 .0082 .0037 .0016 .0006 .0002 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0001 .0004 .0016 .0049 .0123 .0259 .0463 .0721 .0985 .1194 .1294 .1261 .1110 .0888 .0648 .0432 .0264 .0148 .0077 .0036 .0016 .0006 .0002 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0001 .0006 .0018 .0048 .0110 .0220 .0386 .0602 .0838 .1050 .1189 .1223 .1147 .0983 .0772 .0558 .0370 .0227 .0128 .0067 .0032 .0014 .0006 .0002 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0002 .0006 .0017 .0042 .0093 .0182 .0319 .0502 .0714 .0923 .1088 .1171 .1156 .1048 .0875 .0673 .0478 .0313 .0190 .0106 .0055 .0026 .0012 .0005 .0002 .0001 .0000 
.0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0002 .0005 .0014 .0035 .0076 .0147 .0260 .0415 .0606 .0808 .0987 .1109 .1146 .1091 .0959 .0778 .0584 .0405 .0259 .0154 .0084 .0043 .0020 .0009 .0003 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0001 .0004 .0011 .0027 .0059 .0116 .0207 .0339 .0508 .0700 .0888 .1038 .1119 .1115 .1026 .0873 .0687 .0500 .0336 .0208 .0119 .0063 .0031 .0014 .0006 .0002 .0001 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0000 .0001 .0003 .0008 .0020 .0044 .0087 .0160 .0270 .0419 .0598 .0788 .0960 .1080 .1123 .1080 .0960 .0788 .0598 .0419 .0270 .0160 .0087 .0044 .0020 .0008 .0003 .0001 .95 .90 .85 .80 .75 .70 .65 .60 .55 .50 50 49 48 47 46 45 44 43 42 41 40 39 38 37 36 35 34 33 32 31 30 29 28 27 26 25 24 23 22 21 20 19 18 17 16 15 14 13 12 k↑ Statistics Pre-Term Program – T. Love & V. Babich – Chapter 4 - Page 140 Statistics Pre-Term Program – Chapter Four Some Special Probability Models TABLE 1 – The Binomial Probability Distribution Table Continues on Next Page!! 
n = 100

| k↓ | π = .05 | .10 | .15 | .20 | .25 | .30 | .35 | .40 | .45 | .50 | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | .0059 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 100 |
| 1 | .0312 | .0003 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 99 |
| 2 | .0812 | .0016 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 98 |
| 3 | .1396 | .0059 | .0001 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 97 |
| 4 | .1781 | .0159 | .0003 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 96 |
| 5 | .1800 | .0339 | .0011 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 95 |
| 6 | .1500 | .0596 | .0031 | .0001 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 94 |
| 7 | .1060 | .0889 | .0075 | .0002 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 93 |
| 8 | .0649 | .1148 | .0153 | .0006 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 92 |
| 9 | .0349 | .1304 | .0276 | .0015 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 91 |
| 10 | .0167 | .1319 | .0444 | .0034 | .0001 | .0000 | .0000 | .0000 | .0000 | .0000 | 90 |
| 11 | .0072 | .1199 | .0640 | .0069 | .0003 | .0000 | .0000 | .0000 | .0000 | .0000 | 89 |
| 12 | .0028 | .0988 | .0838 | .0128 | .0006 | .0000 | .0000 | .0000 | .0000 | .0000 | 88 |
| 13 | .0010 | .0743 | .1001 | .0216 | .0014 | .0000 | .0000 | .0000 | .0000 | .0000 | 87 |
| 14 | .0003 | .0513 | .1098 | .0335 | .0030 | .0001 | .0000 | .0000 | .0000 | .0000 | 86 |
| 15 | .0001 | .0327 | .1111 | .0481 | .0057 | .0002 | .0000 | .0000 | .0000 | .0000 | 85 |
| 16 | .0000 | .0193 | .1041 | .0638 | .0100 | .0006 | .0000 | .0000 | .0000 | .0000 | 84 |
| 17 | .0000 | .0106 | .0908 | .0789 | .0165 | .0012 | .0000 | .0000 | .0000 | .0000 | 83 |
| 18 | .0000 | .0054 | .0739 | .0909 | .0254 | .0024 | .0001 | .0000 | .0000 | .0000 | 82 |
| 19 | .0000 | .0026 | .0563 | .0981 | .0365 | .0044 | .0002 | .0000 | .0000 | .0000 | 81 |
| 20 | .0000 | .0012 | .0402 | .0993 | .0493 | .0076 | .0004 | .0000 | .0000 | .0000 | 80 |
| 21 | .0000 | .0005 | .0270 | .0946 | .0626 | .0124 | .0009 | .0000 | .0000 | .0000 | 79 |
| 22 | .0000 | .0002 | .0171 | .0849 | .0749 | .0190 | .0017 | .0001 | .0000 | .0000 | 78 |
| 23 | .0000 | .0001 | .0103 | .0720 | .0847 | .0277 | .0032 | .0001 | .0000 | .0000 | 77 |
| 24 | .0000 | .0000 | .0058 | .0577 | .0906 | .0380 | .0055 | .0003 | .0000 | .0000 | 76 |
| 25 | .0000 | .0000 | .0031 | .0439 | .0918 | .0496 | .0090 | .0006 | .0000 | .0000 | 75 |
| 26 | .0000 | .0000 | .0016 | .0316 | .0883 | .0613 | .0140 | .0012 | .0000 | .0000 | 74 |
| 27 | .0000 | .0000 | .0008 | .0217 | .0806 | .0720 | .0207 | .0022 | .0001 | .0000 | 73 |
| 28 | .0000 | .0000 | .0004 | .0141 | .0701 | .0804 | .0290 | .0038 | .0002 | .0000 | 72 |
| 29 | .0000 | .0000 | .0002 | .0088 | .0580 | .0856 | .0388 | .0063 | .0004 | .0000 | 71 |
| 30 | .0000 | .0000 | .0001 | .0052 | .0458 | .0868 | .0494 | .0100 | .0008 | .0000 | 70 |
| 31 | .0000 | .0000 | .0000 | .0029 | .0344 | .0840 | .0601 | .0151 | .0014 | .0001 | 69 |
| 32 | .0000 | .0000 | .0000 | .0016 | .0248 | .0776 | .0698 | .0217 | .0025 | .0001 | 68 |
| 33 | .0000 | .0000 | .0000 | .0008 | .0170 | .0685 | .0774 | .0297 | .0043 | .0002 | 67 |
| 34 | .0000 | .0000 | .0000 | .0004 | .0112 | .0579 | .0821 | .0391 | .0069 | .0005 | 66 |
| 35 | .0000 | .0000 | .0000 | .0002 | .0070 | .0468 | .0834 | .0491 | .0106 | .0009 | 65 |
| 36 | .0000 | .0000 | .0000 | .0001 | .0042 | .0362 | .0811 | .0591 | .0157 | .0016 | 64 |
| 37 | .0000 | .0000 | .0000 | .0000 | .0024 | .0268 | .0755 | .0682 | .0222 | .0027 | 63 |
| 38 | .0000 | .0000 | .0000 | .0000 | .0013 | .0191 | .0674 | .0754 | .0301 | .0045 | 62 |
| 39 | .0000 | .0000 | .0000 | .0000 | .0007 | .0130 | .0577 | .0799 | .0391 | .0071 | 61 |
| 40 | .0000 | .0000 | .0000 | .0000 | .0004 | .0085 | .0474 | .0812 | .0488 | .0108 | 60 |
| 41 | .0000 | .0000 | .0000 | .0000 | .0002 | .0053 | .0373 | .0792 | .0584 | .0159 | 59 |
| 42 | .0000 | .0000 | .0000 | .0000 | .0001 | .0032 | .0282 | .0742 | .0672 | .0223 | 58 |
| 43 | .0000 | .0000 | .0000 | .0000 | .0000 | .0019 | .0205 | .0667 | .0741 | .0301 | 57 |
| 44 | .0000 | .0000 | .0000 | .0000 | .0000 | .0010 | .0143 | .0576 | .0786 | .0390 | 56 |
| 45 | .0000 | .0000 | .0000 | .0000 | .0000 | .0005 | .0096 | .0478 | .0800 | .0485 | 55 |
| 46 | .0000 | .0000 | .0000 | .0000 | .0000 | .0003 | .0062 | .0381 | .0782 | .0580 | 54 |
| 47 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0038 | .0292 | .0736 | .0666 | 53 |
| 48 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0023 | .0215 | .0665 | .0735 | 52 |
| 49 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0013 | .0152 | .0577 | .0780 | 51 |
| 50 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0007 | .0103 | .0482 | .0796 | 50 |
| 51 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0004 | .0068 | .0386 | .0780 | 49 |
| 52 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0002 | .0042 | .0298 | .0735 | 48 |
| 53 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0026 | .0221 | .0666 | 47 |
| 54 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0015 | .0157 | .0580 | 46 |
| 55 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0008 | .0108 | .0485 | 45 |
| 56 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0004 | .0071 | .0390 | 44 |
| 57 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0002 | .0045 | .0301 | 43 |
| 58 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0027 | .0223 | 42 |
| 59 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0016 | .0159 | 41 |
| 60 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0009 | .0108 | 40 |
| 61 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0005 | .0071 | 39 |
| 62 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0002 | .0045 | 38 |
| 63 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0027 | 37 |
| 64 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | .0016 | 36 |
| 65 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0009 | 35 |
| 66 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0005 | 34 |
| 67 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0002 | 33 |
| 68 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | 32 |
| 69 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0001 | 31 |
| 70 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | .0000 | 30 |
| | π = .95 | .90 | .85 | .80 | .75 | .70 | .65 | .60 | .55 | .50 | k↑ |

TABLE 2 – Standard Normal Curve Areas

Tabulated Values are Pr(Z ≤ z) [Values are rounded to five decimal places.]
• From Table, Pr(Z ≤ -2.86) = .00212, so Pr(-2.86 ≤ Z ≤ 0) = .5 - .00212 = .49788, since Pr(Z ≤ 0) = .5.
• Also, Pr(Z ≥ -2.86) = 1 - .00212 = .99788, since Pr(Z ≥ -2.86) + Pr(Z ≤ -2.86) = 1.00000.
• To find k so that Pr(0 ≥ Z ≥ k) = .20000, convert to a statement of the form Pr(Z ≤ k) and work backwards. Here, since Pr(0 ≥ Z ≥ k) = .20000 and Pr(Z ≤ 0) = .50000, Pr(Z ≤ k) = .30000, and so k = -0.52 is best, since Pr(Z ≤ -0.52) = .30153 is closer to the desired .30000 than Pr(Z ≤ -0.53) = .29806.

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| -3.00 | .00135 | .00131 | .00126 | .00122 | .00118 | .00114 | .00111 | .00107 | .00104 | .00100 |
| -2.90 | .00187 | .00181 | .00175 | .00169 | .00164 | .00159 | .00154 | .00149 | .00144 | .00139 |
| -2.80 | .00256 | .00248 | .00240 | .00233 | .00226 | .00219 | .00212 | .00205 | .00199 | .00193 |
| -2.70 | .00347 | .00336 | .00326 | .00317 | .00307 | .00298 | .00289 | .00280 | .00272 | .00264 |
| -2.60 | .00466 | .00453 | .00440 | .00427 | .00415 | .00402 | .00391 | .00379 | .00368 | .00357 |
| -2.50 | .00621 | .00604 | .00587 | .00570 | .00554 | .00539 | .00523 | .00508 | .00494 | .00480 |
| -2.40 | .00820 | .00798 | .00776 | .00755 | .00734 | .00714 | .00695 | .00676 | .00657 | .00639 |
| -2.30 | .01072 | .01044 | .01017 | .00990 | .00964 | .00939 | .00914 | .00889 | .00866 | .00842 |
| -2.20 | .01390 | .01355 | .01321 | .01287 | .01255 | .01222 | .01191 | .01160 | .01130 | .01101 |
| -2.10 | .01786 | .01743 | .01700 | .01659 | .01618 | .01578 | .01539 | .01500 | .01463 | .01426 |
| -2.00 | .02275 | .02222 | .02169 | .02118 | .02068 | .02018 | .01970 | .01923 | .01876 | .01831 |
| -1.90 | .02872 | .02807 | .02743 | .02680 | .02619 | .02559 | .02500 | .02442 | .02385 | .02330 |
| -1.80 | .03593 | .03515 | .03438 | .03362 | .03288 | .03216 | .03144 | .03074 | .03005 | .02938 |
| -1.70 | .04457 | .04363 | .04272 | .04182 | .04093 | .04006 | .03920 | .03836 | .03754 | .03673 |
| -1.60 | .05480 | .05370 | .05262 | .05155 | .05050 | .04947 | .04846 | .04746 | .04648 | .04551 |
| -1.50 | .06681 | .06552 | .06426 | .06301 | .06178 | .06057 | .05938 | .05821 | .05705 | .05592 |
| -1.40 | .08076 | .07927 | .07780 | .07636 | .07493 | .07353 | .07215 | .07078 | .06944 | .06811 |
| -1.30 | .09680 | .09510 | .09342 | .09176 | .09012 | .08851 | .08692 | .08534 | .08379 | .08226 |
| -1.20 | .11507 | .11314 | .11123 | .10935 | .10749 | .10565 | .10383 | .10204 | .10027 | .09853 |
| -1.10 | .13567 | .13350 | .13136 | .12924 | .12714 | .12507 | .12302 | .12100 | .11900 | .11702 |
| -1.00 | .15866 | .15625 | .15386 | .15151 | .14917 | .14686 | .14457 | .14231 | .14007 | .13786 |
| -0.90 | .18406 | .18141 | .17879 | .17619 | .17361 | .17106 | .16853 | .16602 | .16354 | .16109 |
| -0.80 | .21186 | .20897 | .20611 | .20327 | .20045 | .19766 | .19489 | .19215 | .18943 | .18673 |
| -0.70 | .24196 | .23885 | .23576 | .23270 | .22965 | .22663 | .22363 | .22065 | .21770 | .21476 |
| -0.60 | .27425 | .27093 | .26763 | .26435 | .26109 | .25785 | .25463 | .25143 | .24825 | .24510 |
| -0.50 | .30854 | .30503 | .30153 | .29806 | .29460 | .29116 | .28774 | .28434 | .28096 | .27760 |
| -0.40 | .34458 | .34090 | .33724 | .33360 | .32997 | .32636 | .32276 | .31918 | .31561 | .31207 |
| -0.30 | .38209 | .37828 | .37448 | .37070 | .36693 | .36317 | .35942 | .35569 | .35197 | .34827 |
| -0.20 | .42074 | .41683 | .41294 | .40905 | .40517 | .40129 | .39743 | .39358 | .38974 | .38591 |
| -0.10 | .46017 | .45620 | .45224 | .44828 | .44433 | .44038 | .43644 | .43251 | .42858 | .42465 |
| -0.00 | .50000 | .49601 | .49202 | .48803 | .48405 | .48006 | .47608 | .47210 | .46812 | .46414 |

| z | area |
|---|---|
| -3.10 | .000968 |
| -3.20 | .000687 |
| -3.30 | .000483 |
| -3.40 | .000337 |
| -3.50 | .000233 |
| -4.00 | .000032 |
| -4.50 | .000003 |
| -5.00 | .0000003 |

TABLE 2 – Standard Normal Curve Areas (continued)

Tabulated Values are Pr(Z ≤ z) [Values are rounded to five decimal places.]
• From Table, Pr(Z ≤ 0.03) = .51197, so Pr(0 ≤ Z ≤ 0.03) = .01197, since Pr(Z ≤ 0) = Pr(Z ≥ 0) = .50000.
• Also, Pr(Z ≥ 0.03) = 1 - .51197 = .48803, since Pr(Z ≥ 0.03) + Pr(Z ≤ 0.03) = 1.00000.
• To find k so that Pr(0 ≤ Z ≤ k) = .30000, convert to a statement of the form Pr(Z ≤ k) and work backwards. Here, since Pr(0 ≤ Z ≤ k) = .30000 and Pr(Z ≤ 0) = .50000, Pr(Z ≤ k) = .80000, and so k = 0.84 is best, since Pr(Z ≤ 0.84) = .79955 is closer to the desired .80000 than Pr(Z ≤ 0.85) = .80234.

| z | .00 | .01 | .02 | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.00 | .50000 | .50399 | .50798 | .51197 | .51595 | .51994 | .52392 | .52790 | .53188 | .53586 |
| 0.10 | .53983 | .54380 | .54776 | .55172 | .55567 | .55962 | .56356 | .56749 | .57142 | .57535 |
| 0.20 | .57926 | .58317 | .58706 | .59095 | .59483 | .59871 | .60257 | .60642 | .61026 | .61409 |
| 0.30 | .61791 | .62172 | .62552 | .62930 | .63307 | .63683 | .64058 | .64431 | .64803 | .65173 |
| 0.40 | .65542 | .65910 | .66276 | .66640 | .67003 | .67364 | .67724 | .68082 | .68439 | .68793 |
| 0.50 | .69146 | .69497 | .69847 | .70194 | .70540 | .70884 | .71226 | .71566 | .71904 | .72240 |
| 0.60 | .72575 | .72907 | .73237 | .73565 | .73891 | .74215 | .74537 | .74857 | .75175 | .75490 |
| 0.70 | .75804 | .76115 | .76424 | .76730 | .77035 | .77337 | .77637 | .77935 | .78230 | .78524 |
| 0.80 | .78814 | .79103 | .79389 | .79673 | .79955 | .80234 | .80511 | .80785 | .81057 | .81327 |
| 0.90 | .81594 | .81859 | .82121 | .82381 | .82639 | .82894 | .83147 | .83398 | .83646 | .83891 |
| 1.00 | .84134 | .84375 | .84614 | .84849 | .85083 | .85314 | .85543 | .85769 | .85993 | .86214 |
| 1.10 | .86433 | .86650 | .86864 | .87076 | .87286 | .87493 | .87698 | .87900 | .88100 | .88298 |
| 1.20 | .88493 | .88686 | .88877 | .89065 | .89251 | .89435 | .89617 | .89796 | .89973 | .90147 |
| 1.30 | .90320 | .90490 | .90658 | .90824 | .90988 | .91149 | .91308 | .91466 | .91621 | .91774 |
| 1.40 | .91924 | .92073 | .92220 | .92364 | .92507 | .92647 | .92785 | .92922 | .93056 | .93189 |
| 1.50 | .93319 | .93448 | .93574 | .93699 | .93822 | .93943 | .94062 | .94179 | .94295 | .94408 |
| 1.60 | .94520 | .94630 | .94738 | .94845 | .94950 | .95053 | .95154 | .95254 | .95352 | .95449 |
| 1.70 | .95543 | .95637 | .95728 | .95818 | .95907 | .95994 | .96080 | .96164 | .96246 | .96327 |
| 1.80 | .96407 | .96485 | .96562 | .96638 | .96712 | .96784 | .96856 | .96926 | .96995 | .97062 |
| 1.90 | .97128 | .97193 | .97257 | .97320 | .97381 | .97441 | .97500 | .97558 | .97615 | .97670 |
| 2.00 | .97725 | .97778 | .97831 | .97882 | .97932 | .97982 | .98030 | .98077 | .98124 | .98169 |
| 2.10 | .98214 | .98257 | .98300 | .98341 | .98382 | .98422 | .98461 | .98500 | .98537 | .98574 |
| 2.20 | .98610 | .98645 | .98679 | .98713 | .98745 | .98778 | .98809 | .98840 | .98870 | .98899 |
| 2.30 | .98928 | .98956 | .98983 | .99010 | .99036 | .99061 | .99086 | .99111 | .99134 | .99158 |
| 2.40 | .99180 | .99202 | .99224 | .99245 | .99266 | .99286 | .99305 | .99324 | .99343 | .99361 |
| 2.50 | .99379 | .99396 | .99413 | .99430 | .99446 | .99461 | .99477 | .99492 | .99506 | .99520 |
| 2.60 | .99534 | .99547 | .99560 | .99573 | .99585 | .99598 | .99609 | .99621 | .99632 | .99643 |
| 2.70 | .99653 | .99664 | .99674 | .99683 | .99693 | .99702 | .99711 | .99720 | .99728 | .99736 |
| 2.80 | .99744 | .99752 | .99760 | .99767 | .99774 | .99781 | .99788 | .99795 | .99801 | .99807 |
| 2.90 | .99813 | .99819 | .99825 | .99831 | .99836 | .99841 | .99846 | .99851 | .99856 | .99861 |
| 3.00 | .99865 | .99869 | .99874 | .99878 | .99882 | .99886 | .99889 | .99893 | .99896 | .99900 |

| z | area |
|---|---|
| 3.10 | .999032 |
| 3.20 | .999313 |
| 3.30 | .999517 |
| 3.40 | .999663 |
| 3.50 | .999767 |
| 4.00 | .999968 |
| 4.50 | .999997 |
| 5.00 | .9999997 |
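Entries in Table 1 and Table 2 can be spot-checked numerically. The following is a minimal Python sketch, not part of the original handout, that uses only the standard library. The function names `binomial_pmf`, `normal_cdf`, and `nearest_table_z` are illustrative assumptions; `nearest_table_z` mimics the "work backwards" lookup by comparing the two neighbouring z values on the table's 0.01 grid.

```python
from math import ceil, comb, floor
from statistics import NormalDist

Z = NormalDist()  # standard normal: mean 0, standard deviation 1


def binomial_pmf(k: int, n: int, pi: float) -> float:
    """Pr(X = k) for X ~ Binomial(n, pi), the quantity tabulated in Table 1."""
    return comb(n, k) * pi**k * (1 - pi) ** (n - k)


def normal_cdf(z: float) -> float:
    """Pr(Z <= z), the quantity tabulated in Table 2."""
    return Z.cdf(z)


def nearest_table_z(p: float) -> float:
    """z on a 0.01 grid whose Pr(Z <= z) is closest to p (hypothetical helper)."""
    exact = Z.inv_cdf(p)            # exact solution of Pr(Z <= z) = p
    below = floor(exact * 100) / 100  # grid neighbour at or below the exact z
    above = ceil(exact * 100) / 100   # grid neighbour at or above the exact z
    return min((below, above), key=lambda z: abs(Z.cdf(z) - p))


if __name__ == "__main__":
    # Table 1 lists .1800 for n = 100, pi = .05, k = 5.
    print(f"{binomial_pmf(5, 100, 0.05):.4f}")
    # Reading Table 1 from the bottom: pi = .95, k = 95 gives the same entry.
    print(f"{binomial_pmf(95, 100, 0.95):.4f}")
    # Table 2 lists .00212 for z = -2.86 and .51197 for z = 0.03.
    print(f"{normal_cdf(-2.86):.5f}", f"{normal_cdf(0.03):.5f}")
    # Working backwards from Pr(Z <= k) = .30000 and .80000.
    print(nearest_table_z(0.30), nearest_table_z(0.80))
```

The second binomial call illustrates why Table 1 only needs columns up to π = .50: Pr(X = k) for Binomial(n, π) equals Pr(X = n − k) for Binomial(n, 1 − π), which is what the k↑ column and the bottom row of π values encode.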