The Actor–Observer Asymmetry in Attribution: A (Surprising) Meta

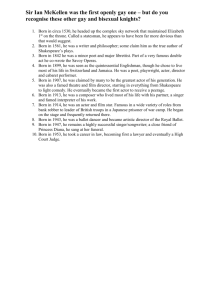
