Prototyping the VISION Digital Video Library System

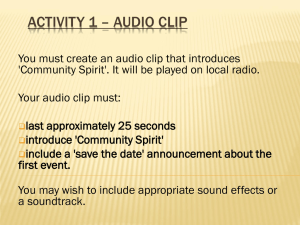
