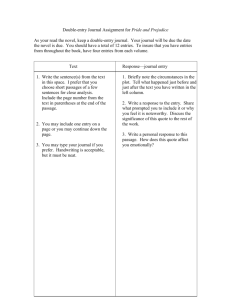

Data Mining Journal Entries for Fraud Detection: A Pilot Study

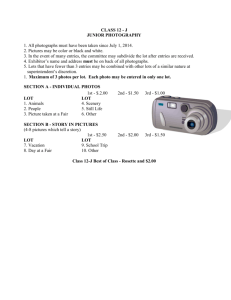

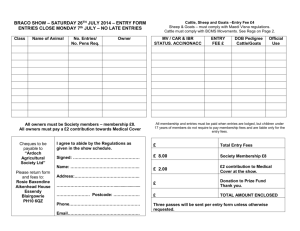
