Using Secure Computerized Testing to Direct Success


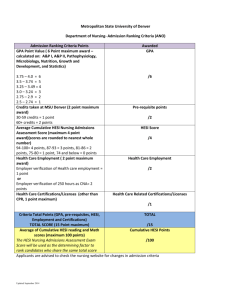

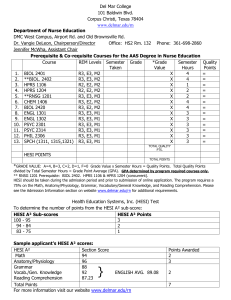

Impact of Nursing Education
Using Secure Computerized Testing to Direct Success
Pamela Willson, PhD, RN, FNP-BC
Director of Research
Elsevier, Review and Testing

Symposium Title
Using Secure Computerized Testing to Direct Success with National Licensure, Advanced Practice Certification, and Hospital Orientation Competencies of Registered Nurses

Learning Objectives
• Discuss evidence-based program data that influence NCLEX® or certification pass rates and guide focused orientation plans.
• Discuss the pros and cons of adopting a progression/hospital policy based on outcomes of a critical thinking specialty, NCLEX®, or certification simulation exam.

Session Agenda
• Three presentations will describe how the use of valid and reliable critical thinking tests informed remediation, enriched clinical experiences, and focused nurse orientations
• Questions and Answers

Abstract: EBP for NCLEX® Success
National Council Licensure Examination (NCLEX®) failure rates in 2002 prompted faculty members to implement a number of changes, including an NCLEX® preparation course, a remediation plan, and an NCLEX® simulation exam.
Methods: Systematic program evaluation allowed faculty to reflect on best practices for teaching and learning in order to positively affect student outcomes on the NCLEX®. Student performance was analyzed for all courses taught using correlation and descriptive statistics.
Results: The required passing score of 85 (now equivalent to 850) for the examination was the initial benchmark set in 2003. Using aggregated data for continuous program evaluation, the passing score was raised to 900 in 2008. The progression policy has had a positive impact on student performance.
Conclusion: Evidence-based decision-making has dramatically improved NCLEX® pass rates in this program.
Nursing Program Assessment and Evaluation: Evidence-Based Decision-Making Improves Outcomes
Jeanne Sewell, Flor Culpa-Bondal, Martha Colvin
Georgia College & State University

Objectives
• Discuss evidence-based data that influence NCLEX® pass rates at the
  o Course level
  o Program level
• Discuss the pros & cons of adopting a nursing program progression policy based on outcomes

Needs for Change
• Increased complexity of NCLEX® exams
• NCLEX® simulation exit exam requirement for graduation
• Shift towards problem-based learning
• Integration of a critical thinking component in classroom teaching and learning

Course Level Changes
Example: Mental Health Nursing

Previous Teaching Methodologies
• Lecture/Discussion (PowerPoint slides)
• Case Studies (paper)
• 4 Unit Tests & Final
• Clinical Practice
  o Process Recording
  o Traditional Nursing Care Plan
  o Teaching Plan
  o Journals

New Teaching Methodologies
• Mini-lecture
• Case studies incorporating films
• Simulations
• Online quizzes prior to class
• 3 Unit tests & NCLEX® simulation final
• Self-study modules
• Games

New Teaching Methodologies: Clinical Practices
• Care/Concept Maps (CMAP) that incorporate nursing care, process recording & self-reflection
• Reflective thinking questions for all clinical rotation journals
• Grading rubrics for all clinical rotation journals
• Research articles and discussion

Course Transformations
• Adopted the HESI specialty exam as the course final exam
• Raised the weight of the HESI score to 20% of the course final grade
• Created a blueprint for all unit exams
• Computerized all unit exams
• Administered all unit exams in GeorgiaView with settings parallel to HESI exams

Course Level: For Example…
Student Feedback
“I just wanted you both to know that I have NEVER in my life worked so hard to pass a class! My first test grade was a 60 and I improved a little more each test until I made an 87.7 on my final. I can't tell you how relieved I am and proud I am of the class as well as myself.
If you need any words of encouragement for the incoming nursing students, tell them "It CAN be done!" Thank you so much for a great semester! Although it was stressful at times and challenging, I found it very rewarding. I learned many things that I will carry with me through the program and the years to come. Thanks Again…”

What were the key discipline- and course-specific assessment and demographic components to use for course process improvement?

Brainstorm: What would or would not apply to your course?

Pros/Cons
• Pros (Yes!)
  o Students engaged in learning
  o Improved outcomes on simulated NCLEX® exams
• Cons (No!)
  o Time intensive for faculty
  o Creating/refining teaching resources & methods
  o Clinical grading: initial feedback & final assessments

Program Level Changes
NRSG 4981 Integrated Clinical Concepts

New Course
• Adopted in 2003
• 1 Semester hour credit
• Faculty-student mentoring 1:8 ratios
• 10 week course (hybrid)
• Weekly small-group meetings
• Learning contract
• Learning styles survey
• Test anxiety inventory

Teaching Methodologies
• Weekly homework & reflection
• Student focus: how to
  o Use previous NCLEX® simulation exam data as a learning guide
  o Use positive affirmations to achieve a goal
  o Understand the anatomy of a question stem
  o Use an algorithm to determine the question answer
  o Practice test questions with associated talk-aloud learning

Teaching Methodologies
• Attendance optional if HESI average ≥ 1050
• Remediation testing
  o Required for specialty tests with scores < 900
  o Optional for others
• NCLEX® Simulation Testing – 2 attempts

Student Feedback
Student quotes about what they learned:
• How to study for boards and exit!
• I learned how to read and analyze HESI questions and rule out incorrect answers.
• Where I was going wrong in answering questions.
• How to apply nursing knowledge learned from clinical and class time to one big test at the end. Also I learned some great test taking skills that will prepare me for NCLEX®!
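The attendance and remediation thresholds listed under Teaching Methodologies can be read as two simple rules. The sketch below is illustrative only: the function names, the sample student, and the test names are hypothetical, and the slides do not say how the program actually applied the rules (for example, how the HESI average was computed).

```python
# Illustrative sketch of the course rules; names and sample data are hypothetical.
# The two thresholds are the ones stated on the slide.
ATTENDANCE_OPTIONAL_AVERAGE = 1050  # HESI average at or above which attendance is optional
REMEDIATION_CUTOFF = 900            # specialty scores below this require remediation testing


def attendance_optional(hesi_average: float) -> bool:
    """Attendance is optional when the HESI average is at least 1050."""
    return hesi_average >= ATTENDANCE_OPTIONAL_AVERAGE


def remediation_required(specialty_scores: dict) -> list:
    """Return the specialty tests whose scores fall below 900."""
    return [name for name, score in specialty_scores.items()
            if score < REMEDIATION_CUTOFF]


# Hypothetical student record (assumes the average is a plain mean of the scores)
student_scores = {"Mental Health": 870, "Pediatrics": 955, "Medical-Surgical": 899}
student_average = sum(student_scores.values()) / len(student_scores)

print(attendance_optional(student_average))
print(remediation_required(student_scores))
```

In this hypothetical case the student must attend and must remediate the two tests that scored below 900; remediation for the third test would be optional.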
Course Analysis
• Before the course:
  o Analyze NCLEX® specialty exam scores
  o Identify at-risk students
  o Analyze HESI sub-scores
  o Review previous course grades
  o Identify strategies that map to success
• Afterwards:
  o Analyze course evaluations
  o Trend and analyze data over time
  o Follow NCLEX® pass rates

How can program assessment and evaluation improve program outcomes?

Program Level: NCLEX® Simulation, For Example
[Chart: Exit V1, % of scores ≥ 900]

Program Level NCLEX® Pass Rates
[Chart: NCLEX pass rate, 1st-time takers (percent)]

Pros/Cons
• Pros (Yes!)
  o Improved outcomes on simulated NCLEX® exams
  o Improved NCLEX® pass rates
  o Student pride when they pass the NCLEX®
• Cons (No!)
  o Student reaction when not successful
  o Student appeal process
  o Can be time-consuming for faculty mentors
  o Don’t always have 100% faculty buy-in

Conclusion
• Evidence-based decision making can improve program outcomes
• Policy changes require tough choices

Abstract: EBP to Certification Success
Family Nurse Practitioner (FNP) programs at three campuses instituted Evolve HESI APRN FNP specialty exam testing between 2005 and 2007.
Methods: An electronic survey was sent to students who took the FNP exam between 2005 and 2007. Student outcomes on the Evolve exam were compared with American Nurses Credentialing Center (ANCC) or American Academy of Nurse Practitioners (AANP) certification test results.
Results: The predictive validity of HESI scores > 800 against students’ ANCC or AANP certification exam outcomes was again evaluated for accuracy, and the data analysis indicated acceptable and recommended scoring levels.
Conclusion: The standardized FNP and ANP exams were effective in assessing students’ preparedness for the specialty accreditation exams and also provided evidence-based measures of curricular outcomes.
Using Computerized Exams to Predict Nurse Practitioner Certification Exam Success: Exam Analysis and Faculty Appraisal 2005-2007
Brenda Binder, PhD, RN, PNP-BC; Michelle H. Emerson, MSN, RN, CNM (College of Nursing, Houston, TX)
Pat Jones, MSN, RN, FNP-BC; Maureen Brogan, MS, WHNP, FNP-BC (College of Nursing, Denton, TX)
Elizabeth E. Fuentes, MSN, RN, FNP-BC (College of Nursing, Dallas, TX)

Objectives
At the end of the presentation, the participant will be able to:
• Discuss critical thinking testing, suggested cut-scores, and school policies of a multi-site sample of graduate nurse practitioner programs
• Discuss evidence-based program strategies to foster advanced practice nurses’ success on national certification exams

Purpose
• Analysis of student outcomes
  – Exam administrations between 2005 and 2007 were compared to student success on national certifying examinations
• Discuss evidence-based changes that were initiated within the curriculum
  – Program evaluation
  – Targeted approach for certification exam preparation

Standardized, Computerized Exams for APN Students
• Evolve HESI APRN FNP 100-item Comprehensive Exam
  – Predict certification exam (ANCC/AANP) success
  – Identify specific areas for remediation
  – Provide an objective measure of program outcome achievement
  – Contribute to overall curriculum evaluation

APRN Examination Development
• Content Expertise
  – Elsevier APRN item banks developed from paper and pencil exams authored by the NP faculty at two universities & APRNs across the U.S.
  – The HESI critical thinking test item writing model was applied
• Original items written primarily at knowledge/comprehension level
• Items were revised to application & higher level

Exam Blueprints
• Evolve HESI APRN Exam Blueprints
• HESI Standard Scoring Categories
• NONPF Competencies
• ANCC & AANP Exam Content Outlines
• Exams Developed for MS Nursing Programs
  – Family Nurse Practitioner
  – Adult Nurse Practitioner
  – Nursing Administration*
  – Acute Care Nurse Practitioner*
* Piloting Exams

Evolve HESI APRN Exam Analysis
• Reliabilities and Average Item Uses
• FNP: KR-20 = 0.932; Average uses = 260

TWU Houston Experience
• Standardized FNP Examination
• Preparation for AANP or ANCC Certification Examinations
• Guides Clinical Experiences during Preceptorship
• Provides Program Evaluation Data
  – Course content and teaching strategies
  – Evidence of program outcomes for accreditation

Purpose
• Determine the accuracy of the Evolve HESI APRN FNP Exam in predicting success on the American Academy of Nurse Practitioners (AANP) and the American Nurses Credentialing Center (ANCC) family nurse practitioner certification examinations

Research Question
• What is the predictive accuracy of the Evolve HESI APRN FNP Exam for Texas Woman’s University family nurse practitioner students who took the examination 3-6 months prior to graduation and were predicted to pass the AANP and ANCC family certification examinations based on their performance (composite HESI scores exceeding 800) on the Evolve exam?
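The reliability reported above for the FNP exam is a Kuder-Richardson 20 (KR-20) coefficient, which applies to tests scored right/wrong per item. As a minimal sketch of how such a coefficient is computed, the function below uses the standard formula KR-20 = (k / (k - 1)) × (1 - Σ p·q / σ²), where k is the number of items, p is the proportion answering an item correctly, q = 1 - p, and σ² is the variance of total scores. The response matrix is invented toy data, not HESI data, and the use of the population variance is an assumption (some texts use the sample variance).

```python
def kr20(responses):
    """Kuder-Richardson 20 for a matrix of 0/1 item scores.

    responses: list of rows, one per examinee; each row lists 0/1 scores per item.
    Assumes the population variance of total scores (divide by n).
    """
    n = len(responses)      # number of examinees
    k = len(responses[0])   # number of items

    # Proportion of examinees answering each item correctly
    p = [sum(row[i] for row in responses) / n for i in range(k)]
    sum_pq = sum(pi * (1 - pi) for pi in p)

    # Variance of examinees' total scores
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    variance = sum((t - mean_total) ** 2 for t in totals) / n

    return (k / (k - 1)) * (1 - sum_pq / variance)


# Toy data: 5 hypothetical examinees, 4 items
toy_responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(toy_responses), 3))
```

Values closer to 1 indicate that items rank examinees consistently; the function is undefined when every examinee has the same total score, because the variance is then zero.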
Methods
Population
• Following receipt of IRB approval, the population comprised the 118 students in the three-campus FNP student cohorts who took the HESI APRN FNP exam in Fall 2005-2007 (within 3-6 months of graduation)
Data Collection
• Students were contacted by e-mail to determine their certification exam results on the AANP or ANCC examinations and the date of their exam administrations

Sample (N = 118)
Of the 118 graduates:
• One hundred ten (110) students were recognized as being APRN certified by the TX-BON
• Outcomes on certification exams were determined for 49 of the 110 students contacted
  – 51% response rate
[Diagram: BNE status, N = 110; HESI scores, N = 110; exam scores, N = 49 (AANP n = 38; ANCC n = 11)]

Results
• Evolve HESI APRN FNP Exam Results
• Descriptive Statistics

| N | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|
| 110 | 517 | 1092 | 827.66 | 106.624 |

HESI APRN Scoring Categories
• Number & Percent of Students by Scoring Category (N = 110)

| Scoring Category | HESI Score | N | Percent |
|---|---|---|---|
| Category A/B | 900->1000+ | 28 | 25% |
| Category C | 850-899 | 16 | 15% |
| Category D | 800-849 | 25 | 23% |
| Category E/F | 700-799 | 30 | 27% |
| Category G/H | < 699 | 11 | 10% |

Certification Exam Results
• Thirty-eight students took the AANP exam, reporting either a numeric passing score or stating that a passing score was awarded
• Eleven students took the ANCC exam – all passed
  – Of the students reporting passed certification, 48 had evidence of TX-BON APRN recognition and 1 did not

AANP Certification Exam Results
Descriptive Statistics

| N | Minimum | Maximum | Mean | Std. Dev. |
|---|---|---|---|---|
| 32 | 396 | 738 | 639.47 | 74.064 |

• 32 students reported scores versus 6 that reported “passing”
• Passing Score on the AANP Exam is ≥ 500

ANCC Certification Exam Results
• Eleven (11) students took the ANCC exam
• All 11 students surveyed provided either their numeric passing score or the report of a passing score in combination with evidence of TX-BON recognition

ANCC Certification Exam Results
Descriptive Statistics

| N | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|
| 9 | 379 | 437 | 408.56 | 19.462 |

• 9 students reported scores versus 2 that reported passing
• Passing Score on the ANCC Exam is ≥ 350

APRN FNP Exam Predictive Rates (PR) by TX BON
• Number & Percent of Students by Scoring Category (N = 110), INCLUDES 61 survey non-responders

| Scoring Category | HESI Score | N | PR | Lack BON Recog. |
|---|---|---|---|---|
| Category A/B | 900->1000+ | 28 | 89% | 3 |
| Category C | 850-899 | 16 | 100% | 0 |
| Category D | 800-849 | 25 | 100% | 0 |
| Category E/F | 700-799 | 30 | 87% | 4 |
| Category G/H | < 699 | 11 | 82% | 2 |

Study Non-Responders
• Exam type unknown for 61 students because they did not respond to the survey
• Fifty-two (n = 52) of these students were recognized as APNs by the TX-BON, so they are presumed to have passed their certification exams
• Nine (n = 9) students did not have TX-BON APN recognition

HESI Scores & Certification Results for Survey Responders
Number & Percent of Students by Scoring Category (N = 49)

| Scoring Category | HESI Score | N | Predictive Rate |
|---|---|---|---|
| Category A/B | 900->1000+ | 17 | 100% |
| Category C | 850-899 | 5 | 100% |
| Category D | 800-849 | 15 | 100% |
| Category E/F | 700-799 | 11 | 91% |
| Category G/H | < 699 | 1 | 100% |

APRN Student Results
• Predictive Accuracy
• 100% of the students with known certification outcomes who scored ≥ 800 (n = 37, or 76% of the total 49 students) passed either the ANCC or the AANP certification exam
• One (1) student whose HESI score was 700-799 did not pass the AANP certification exam

Predictive Validity
• Evolve HESI APRN FNP Exam
  – Evolve Recommended Score: 800
  – Evolve Minimally Acceptable Score: 750

Conclusions
• Survey responses indicated that exam scores were usually consistent
with students’ performance
• Predictive validity of HESI scores > 800 against students’ ANCC or AANP certification exam outcomes was evaluated for accuracy
• Data analysis indicated acceptable and recommended scoring levels

Conclusions
• The standardized FNP exam was effective in assessing students’ preparedness for the specialty accreditation exams and also provided evidence-based measures of curricular outcomes
• This presentation will assist FNP educators in evaluating the usefulness of incorporating standardized exams within their educational programs

Contact information
• Brenda K. Binder, PhD, RN, PNP-BC
• BBinder@mail.twu.edu

Abstract: EBP to Orientation Success
The relationship between the pediatric nursing-specific knowledge of newly hired RNs and the length of orientation required to meet competencies for patient care at a large southeastern pediatric hospital system was examined.
Methods: A two-year prospective cohort study determined the relationship between selected variables and length of RN orientation. An Evolve custom pediatric specialty exam assessed pediatric nursing care knowledge and critical thinking ability, and directed the Individualized Focused Orientation of the RN.
Results: The RNs’ critical thinking scores correlated significantly with length of time in orientation (r = -.325; p = .004), indicating that those with a higher critical thinking score required fewer clinical orientation days to meet orientation objectives. RN orientation time was decreased by 50%.
Conclusions: Standardized testing assists nurse educators in meeting RN orientation needs and meeting facility goals.
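The abstract reports a negative correlation between exam score and time in orientation. As a hedged illustration of what such a coefficient measures, the sketch below computes Pearson's r from first principles. The abstract does not name the coefficient used, so Pearson's r is an assumption, and the scores and orientation weeks are invented values, not study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical exam scores and weeks in orientation for six nurses
exam_scores = [720, 810, 860, 905, 950, 1010]
orientation_weeks = [9, 8, 8, 6, 7, 5]

r = pearson_r(exam_scores, orientation_weeks)
print(f"r = {r:.3f}")
```

A negative r means that higher scores tend to go with shorter orientations; it does not by itself show that testing caused the shorter orientation.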
Use of Elsevier’s Computerized Exam as Measurement for Workplace Competency
Christina Ryan-Ramey, MSN, RN
Clinical Research Nurse, Children’s Healthcare of Atlanta at Egleston

Introduction
• Facilities must deliver quality patient care
• Focused orientation improves nursing care competencies
• Standardized testing
  – Assesses nursing knowledge
  – Measures critical thinking ability

Purpose & Aims
Purpose
• Examine the relationship between pediatric nursing-specific knowledge of newly hired RNs and the length of orientation required to meet the hospital competencies for patient care
Specific Aims
• Assess RNs’ knowledge, strengths, and weaknesses using the Pre-Requisite Exam for Pediatrics (PREP) exam scores
• Implement individualized focused orientation plans
• Determine orientation duration

Definitions
Pre-Requisite Exam for Pediatrics (PREP)
• Elsevier HESI custom pediatric exam
• 50 items
• Multiple-choice exam
• Reliability KR-20 = 0.80
• Rationales provided for questions answered incorrectly
Individualized Focused Orientation (IFO)
• Developed using subject area scores to identify individual RN’s orientation needs

Research Questions
• Do IFOs decrease orientation duration for newly hired RNs?
• Is the PREP useful in designing IFOs?
• Are age, education, pediatric experience, and previous employment related to PREP exam scores?

Design
Two-year Prospective Cohort Design, January 2007 – December 2008

PREP Design
[Diagram: N = 98 → Orientation Duration; PREP Testing & Guided Orientation, N = 84 → Orientation Duration]

PREP Framework
[Figure source: CIN: Computers, Informatics, Nursing • Vol. 22, No. 4, 220–226 • © 2004 Lippincott Williams & Wilkins, Inc.]
Intervention Procedure
Procedure for Developing IFOs
• Administer 50-item PREP to all newly hired RNs
• Determine educational needs based on individual RNs’ subject matter scores
• Develop an IFO for each participating RN subject
  – Education process, unit experience, and preceptor matched to knowledge deficits
• Meet with unit educators regarding planning
• Formative and summative evaluation of process

Demographics

| Variable | Control Group (N = 98) | Intervention Group (N = 84) |
|---|---|---|
| Age | Mean = 28.25 yrs (SD = 7.06) (Range 21-51) | Mean = 29.63 yrs (SD = 8.67) (Range 21-53) |
| Years of Pediatric Experience | Mean = 1.63 yrs; Min = 0 (n = 59); Max = 18 (n = 1) | Mean = 1.47 yrs; Min = 0 (n = 66); Max = 29 (n = 2) |
| Highest Degree | Diploma: 2; ASN: 26; BSN: 67; MSN: 2 | ASN: 10; BSN: 69; MSN: 5 |

Demographics

| Group | Control Group (N = 98) | Intervention Group (N = 84) |
|---|---|---|
| New graduate | n = 58 | n = 55 |
| ICU | n = 17 | n = 17 |
| General | n = 41 | n = 38 |
| Experienced nurse | n = 40 | n = 29 |
| ICU | n = 17 | n = 17 |
| General | n = 38 | n = 38 |

Scores: Group and Nurse Experience
PREP Exam Scores: Control Group range 353 – 1197; Intervention Group range 448 – 1091

| Group | Control Mean (SD) | Intervention Mean (SD) |
|---|---|---|
| New graduate nurse | 847 (127) | 876 (129) |
| ICU | 833 (119) | 893 (109) |
| General | 852 (126) | 869 (137) |
| Experienced nurse | 847 (127) | 847 (127) |
| ICU | 871 (133) | 944 (48) |
| General | 831 (186) | 848 (108) |

Evolve HESI Pediatric Exam Summary

| PREP Scoring Interval | Description | Control N (%) | Intervention N (%) |
|---|---|---|---|
| > 950 | Outstanding | 24 (24.2) | 43 (51.2) |
| 900-949 | Excellent | 10 (10.1) | 16 (19) |
| 850-899 | Average | 10 (10.1) | 7 (8.3) |
| 800-849 | Below Average | 19 (19.2) | 11 (13.1) |
| 750-799 | Additional study needed | 16 (16.2) | 11 (13.1) |
| 700-749 | Serious pediatric nursing knowledge preparation needed | 11 (11.1) | 6 (7.1) |
| 650-699 | Poor performance | 2 (2.0) | 5 (6) |
| < 649 | Very Poor performance | 7 (7.1) | 1 (1.2) |

Bivariate Correlations to PREP Exam Scores

| Variable | Control | Intervention |
|---|---|---|
| Age | -.073 (p = 0.301) | .049 (p = 0.661) |
| Education | -.008 (p = 0.923) | -.096 (p = 0.385) |
| Pediatric Nursing Experience | -.071 (p = 0.354) | .081 (p = 0.466) |

Bivariate Correlations to PREP Exam Scores
| Variable | Control | Intervention |
|---|---|---|
| Length of Orientation | -.181 (p < 0.05) | -.325 (p = 0.004) |

Clinical Orientation: Difference Between New and Experienced RNs
Weeks in Orientation

| Group | Control M (SD) | Intervention M (SD) |
|---|---|---|
| N = 182 | 16.59 weeks (7.92) | 8.27 weeks (3.24) |
| ICU | 17.35 weeks (7.08) | 9.82 weeks (2.79) |
| General | 14.69 weeks (7.86) | 6.28 weeks (2.49) |
| New graduate nurse | 16.59 weeks (7.03) | 9.56 weeks (2.83) |
| ICU | 18.48 weeks (7.14) | 10.58 weeks (2.90) |
| General | 16.22 weeks (7.01) | 9.05 weeks (2.68) |
| Experienced nurse | 14.69 weeks (8.64) | 6.57 weeks (3.00) |
| ICU | 10.88 weeks (7.23) | 5.75 weeks (1.71) |
| General | 18.50 weeks (8.48) | 6.80 weeks (3.27) |

Orientation Savings Estimates
• Average weekly salary for RNs during orientation is $1,500, not including indirect costs (taxes and benefits)
• Prior to implementation of IFOs, the duration of orientation was 12 weeks, for a total cost of $18,000/RN
• After implementation of IFOs, which were designed based on data obtained from the PREP, the average duration of orientation was 6 weeks, for an average cost of $9,000/RN (50% reduction in orientation costs)

Orientation Savings Estimates
• $9,000/RN saved in orientation costs
• 360 new RNs hired annually
• $3,240,000 Estimated Annual Savings to Facility

Focus Group Outcomes
Clinical educators met monthly following the implementation of IFOs
• 90% of the clinical educators requested the PREP be adopted for the hiring process
• Orientation flowed more smoothly with defined educational plans
• Knowledge weaknesses identified from the PREP results were assessed to be accurate by educators and preceptors
• Newly hired nurses verbalized positive responses to having their learning needs met immediately upon beginning orientation

Results
No significant correlation between PREP (Evolve HESI Pediatric Exam) scores and subjects’ age, education, and previous employment experience
Significant relationship between PREP scores and duration of orientation.
• Those with higher PREP scores required fewer clinical orientation days
Orientation duration was reduced by an average of 6 weeks, or 50%, after implementation of IFOs

Recommendations
• Replicate study within other pediatric organizations
• Use PREP scores as a screening tool for new applicants
• Examine PREP subject matter categories that predict successful orientation and nurse retention

Conclusion
• Identification of individual RNs’ strengths and weaknesses at the beginning of orientation allows for development of customized orientation programs
• Standardized testing assists nurse educators in meeting newly hired RNs’ individual needs (education, support, and confidence)
• Standardized testing assists facility nurse educators in meeting organization goals (quality nursing care, efficient orientation, and retention of RNs)

Acknowledgements
• This study was funded by a grant awarded by the Dudley L. Moore Nursing & Allied Health Research Fund
• Gratitude is expressed to:
  – Gail Klein, RN, BSN; Lacey Owen, Nurse Recruiter; and the Clinical Educators and newly hired RNs of Children’s Healthcare of Atlanta for their assistance in completing this project
  – Cecelia G. Grindel, PhD, RN, Associate Director of Graduate Studies in Nursing at Georgia State University, for assistance in developing this project

Questions & Answers
Thank You!
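Worked example: the Orientation Savings Estimates slides reduce to a few multiplications, reproduced here as a short sketch. The dollar amounts, week counts, and annual hire count are taken from the slides; the variable and function names are illustrative, and indirect costs (taxes and benefits) are excluded, as they are in the source.

```python
# Figures from the Orientation Savings Estimates slides; names are illustrative.
WEEKLY_SALARY = 1_500       # average weekly RN salary during orientation, USD
WEEKS_BEFORE_IFO = 12       # orientation length before IFOs
WEEKS_AFTER_IFO = 6         # average orientation length after IFOs
NEW_RNS_PER_YEAR = 360      # new RNs hired annually


def orientation_cost(weeks, weekly_salary=WEEKLY_SALARY):
    """Direct salary cost of orienting one RN for the given number of weeks."""
    return weeks * weekly_salary


cost_before = orientation_cost(WEEKS_BEFORE_IFO)
cost_after = orientation_cost(WEEKS_AFTER_IFO)
saving_per_rn = cost_before - cost_after
reduction = saving_per_rn / cost_before
annual_saving = saving_per_rn * NEW_RNS_PER_YEAR

print(f"Cost per RN before IFOs: ${cost_before:,}")
print(f"Cost per RN after IFOs:  ${cost_after:,}")
print(f"Reduction: {reduction:.0%}")
print(f"Estimated annual saving: ${annual_saving:,}")
```

Changing the constants shows how sensitive the annual estimate is to the assumed weekly salary and number of hires.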