Simple linear regression Correlation coefficient Covariance Sxy


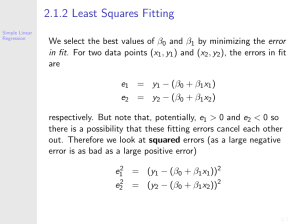

Simple linear regression

$$Y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \quad i = 1, \dots, n$$

$$E(Y \mid X) = \beta_0 + \beta_1 x$$

• Systematic component: β0 + β1 xi

• Stochastic component: error term εi

The central parameter is the slope parameter β1:

• If X rises by one unit, then Y rises by β1 units on average.

Interpretation of the parameter σ: standard deviation of the error term, a measure of the average deviation of the Y-values from the regression line.

R²: Coefficient of determination

$$R^2 = \frac{SSM}{SST} = 1 - \frac{SSE}{SST}$$
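The quantities above can be computed directly from data. The following is a minimal sketch on synthetic data (the sample, the "true" coefficients and the noise level are made up for illustration); it uses the standard closed-form least-squares estimates for the simple model and reads SST, SSE and SSM as the total, residual and model sums of squares.

```python
import numpy as np

# Synthetic data (hypothetical values, for illustration only)
rng = np.random.default_rng(0)
x = rng.uniform(0.1, 0.35, size=72)
y = -1.0 + 12.0 * x + rng.normal(0.0, 0.7, size=72)

# Closed-form least-squares estimates of slope and intercept
x_bar, y_bar = x.mean(), y.mean()
beta1_hat = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
beta0_hat = y_bar - beta1_hat * x_bar

# Sums of squares: total (SST), residual (SSE), model (SSM)
fitted = beta0_hat + beta1_hat * x
sst = np.sum((y - y_bar) ** 2)
sse = np.sum((y - fitted) ** 2)
ssm = np.sum((fitted - y_bar) ** 2)

r2 = ssm / sst
# Estimate of sigma: residual standard error on n - 2 degrees of freedom
sigma_hat = np.sqrt(sse / (len(x) - 2))
print(beta0_hat, beta1_hat, r2, sigma_hat)
```

Both expressions for R² agree because SST = SSM + SSE holds for a least-squares fit with an intercept.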

Correlation coefficient

Covariance

$$S_{xy} = \mathrm{Cov}(x, y) = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$$

Correlation = Standardized Covariance

$$\rho_{x,y} = \frac{\mathrm{Cov}(x, y)}{\sqrt{\mathrm{Var}(x)\,\mathrm{Var}(y)}}$$

• ρ = 1: exact linear relationship with positive slope

• ρ = 0: no linear relationship

• ρ = −1: exact linear relationship with negative slope

Correlation is a symmetric measure of the linear relationship.
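As an illustration of these properties, here is a small sketch on made-up data. The helper names `cov` and `corr` are hypothetical; they implement the 1/n covariance and the standardized covariance as defined above.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=200)
y = 2.0 * x + rng.normal(size=200)   # made-up linear relation plus noise

def cov(a, b):
    # Covariance with the 1/n convention
    return np.mean((a - a.mean()) * (b - b.mean()))

def corr(a, b):
    # Correlation = covariance standardized by both standard deviations
    return cov(a, b) / np.sqrt(cov(a, a) * cov(b, b))

rho_xy = corr(x, y)
rho_yx = corr(y, x)                  # symmetric: same value as rho_xy
rho_exact = corr(x, 3.0 - 2.0 * x)   # exact line with negative slope
print(rho_xy, rho_yx, rho_exact)
```

Swapping the arguments leaves the value unchanged, and a response that lies exactly on a line with negative slope gives a correlation of −1 up to rounding.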

Least-squares estimation example: Deep Drill Data

[Figure: scatter plot of logarithmic cataclasite concentration (vertical axis, 0 to 3) against carbon concentration (horizontal axis, 0.10 to 0.35)]

Residual standard error: 0.6917 on 70 degrees of freedom

Multiple R-squared: 0.6562

Adjusted R-squared: 0.6513

F-statistic: 133.6 on 1 and 70 DF

Confidence interval for βC : [9.828611, 13.9272200]


The multiple linear regression model

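$$Y_i = \underbrace{\beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_p x_{ip}}_{x_i'\beta} + \varepsilon_i, \quad i = 1, \dots, n$$

$$x_i' = (1, x_{i1}, \dots, x_{ip})$$

In matrix notation:

$$Y = X\beta + \varepsilon$$

with

$$Y = \begin{pmatrix} Y_1 \\ \vdots \\ Y_n \end{pmatrix}, \quad X = \begin{pmatrix} 1 & x_{11} & \cdots & x_{1p} \\ \vdots & \vdots & & \vdots \\ 1 & x_{n1} & \cdots & x_{np} \end{pmatrix}, \quad \beta = \begin{pmatrix} \beta_0 \\ \vdots \\ \beta_p \end{pmatrix}, \quad \varepsilon = \begin{pmatrix} \varepsilon_1 \\ \vdots \\ \varepsilon_n \end{pmatrix}$$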

Model assumptions

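Each assumption is given for a single observation and in vector form:

• E(εi) = 0, in vector form E(ε) = 0

• V(εi) = σ² and εi, i = 1, ..., n, independent, in vector form V(ε) = σ² I

• εi ∼ N(0, σ²), in vector form ε ∼ N(0, σ² I)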

X: Constant design matrix (matrix of the regressors)

β: Vector of regression parameters

Y : Random vector of dependent variable

ε: Error terms

Least-squares estimator

Data: Y = (Y1, ..., Yn)', design matrix X

$$\hat\beta = \arg\min_{\beta} \underbrace{(Y - X\beta)'(Y - X\beta)}_{\sum_{i=1}^{n} (Y_i - x_i'\beta)^2}$$

Residuals:

$$\hat\varepsilon_i = Y_i - x_i'\hat\beta$$

For X'X invertible, β̂ exists, is unique, and

$$\hat\beta = (X'X)^{-1} X'Y.$$
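A minimal NumPy sketch of this estimator follows, on synthetic data with two made-up regressors. It solves the normal equations (X'X)β = X'Y rather than forming the inverse explicitly, and compares the result with `np.linalg.lstsq`, which is usually the numerically safer choice.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 72
x1 = rng.uniform(0.1, 0.35, size=n)   # hypothetical regressors
x2 = rng.uniform(0.0, 1.0, size=n)
y = -1.0 + 6.0 * x1 + 2.0 * x2 + rng.normal(0.0, 0.5, size=n)

# Design matrix with a leading column of ones for the intercept
X = np.column_stack([np.ones(n), x1, x2])

# Normal equations: solve (X'X) beta = X'Y
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Same estimate from a least-squares solver
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residuals, residual sum of squares and residual standard error
residuals = y - X @ beta_hat
sse = residuals @ residuals
sigma_hat = np.sqrt(sse / (n - X.shape[1]))
print(beta_hat, sigma_hat)
```

The residuals are orthogonal to every column of X, which is exactly the first-order condition of the minimization problem.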


| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|
| Intercept | -1.0463 | 0.1710 | -6.119 | 5.04e-08 *** |
| C | 6.3290 | 1.0104 | 6.264 | 2.79e-08 *** |
| S | 2.2250 | 0.2752 | 8.086 | 1.39e-11 *** |

Interpretation: Multiple regression

$$E(Y \mid X) = \beta_0 + \beta_1 x_1 + \dots + \beta_p x_p$$

Increase x1 to x1 + 1 while holding x2, ..., xp fixed:

$$E(Y \mid x_1 + 1, x_2, \dots, x_p) - E(Y \mid x_1, x_2, \dots, x_p) = \beta_1$$

−→ β1 is the effect of x1 when the other covariates are held fixed −→ adjusted effect of x1 on Y

Testing in the multiple linear regression

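$$Y = \beta_0 + \beta_1 x_1 + \dots + \beta_p x_p + \varepsilon$$

H0: βp = 0

Basic idea: compare the model fit under H0 with the fit of the unrestricted model. Here:

• Fit the restricted model Y = β0 + β1 x1 + ... + βp−1 xp−1 + ε −→ SSE1 (residual sum of squares)

• Fit the unrestricted model −→ SSE

Look at

$$F = \frac{(SSE_1 - SSE)/a}{SSE/(n-(p+1))} \sim F_{1,\,n-(p+1)} \quad \text{under } H_0$$

with a = 1 restriction here. F_{1, n−(p+1)} denotes the F-distribution with 1 and n − (p+1) degrees of freedom.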

Example: Deep Drill Data

Residual standard error: 0.4992 on 69 degrees of freedom

Multiple R-squared: 0.8235

General linear hypothesis

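H0: Aβ = c, rg(A) = a (rank of A, i.e. the number of linearly independent restrictions)

$$\frac{(SSE_{H_0} - SSE)/a}{SSE/(n-(p+1))} \sim F_{a,\,n-(p+1)}$$

SSE_H0: residual sum of squares in the restricted model

SSE: residual sum of squares in the complete model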

Example: Deep Drill Data

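Restricted model: LNCATR = β0 + β1 C

Complete model: LNCATR = β0 + β1 C + β2 S

$$\frac{(SSE_{H_0} - SSE)/a}{SSE/(n-(p+1))} = 65.37686 \ge 4$$

where 4 is approximately the 95% quantile of the F_{1,69} distribution, so H0: β2 = 0 is rejected at the 5% level.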
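The test statistic can be roughly re-derived from the rounded figures reported for the two Deep Drill fits. This sketch assumes that the residual standard error equals sqrt(SSE / df), as in standard regression output, so each SSE can be recovered from it. Because the inputs are rounded, the result only approximately matches 65.37686.

```python
# Rounded values taken from the regression output quoted in the text
rse_restricted, df_restricted = 0.6917, 70   # model with C only
rse_complete, df_complete = 0.4992, 69       # model with C and S

# Residual standard error = sqrt(SSE / df), hence SSE = rse^2 * df
sse_h0 = rse_restricted ** 2 * df_restricted
sse = rse_complete ** 2 * df_complete

a = df_restricted - df_complete              # number of restrictions, here 1
f_stat = ((sse_h0 - sse) / a) / (sse / df_complete)

t_value_s = 8.086                            # t value of S in the coefficient table
print(round(f_stat, 2), round(t_value_s ** 2, 2))
```

With a single restriction the F statistic equals the squared t value of the tested coefficient, which is why both printed numbers land close to 65.4.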

Dummy-Variables (1)

$$Y = \beta_0 + \beta_1 X_1 + \varepsilon$$

X1 ∈ {0, 1}: indicator for a group, e.g. rain / no rain

$$E(Y \mid X_1 = 0) = \beta_0$$

$$E(Y \mid X_1 = 1) = \beta_0 + \beta_1$$

β1: mean difference between the two groups =⇒ regression = t-test
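The identification of β1 with the mean difference can be checked numerically. A small sketch with made-up data and a hypothetical 0/1 indicator:

```python
import numpy as np

rng = np.random.default_rng(3)
# 25 observations in group 0 and 35 in group 1 (hypothetical group sizes)
x1 = np.repeat([0.0, 1.0], [25, 35])
y = 5.0 + 1.5 * x1 + rng.normal(0.0, 1.0, size=x1.size)

# Regression of y on an intercept and the dummy variable
X = np.column_stack([np.ones_like(y), x1])
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

mean0 = y[x1 == 0].mean()
mean1 = y[x1 == 1].mean()
print(beta_hat, mean0, mean1 - mean0)
```

The fitted intercept equals the sample mean of the group with X1 = 0 and the fitted slope equals the difference of the two group means.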

Dummy-Variables (2)

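X is discrete and has more than 2 levels, e.g. 4 groups: single (1), married (2), widowed (3), divorced (4).

Define an indicator for each level:

$$X_1 = \begin{cases} 1 & \text{single} \\ 0 & \text{not single} \end{cases} \qquad X_2 = \begin{cases} 1 & \text{married} \\ 0 & \text{not married} \end{cases}$$

and analogously X3 and X4.

$$Y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_4 + \varepsilon$$

Since X1 + X2 + X3 + X4 = 1 =⇒ multicollinearity (with the intercept) −→ leave out X4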
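The multicollinearity can be seen in the rank of the design matrix. A short sketch with ten hypothetical marital-status codes:

```python
import numpy as np

# Hypothetical marital-status codes 1..4 for ten people
status = np.array([1, 2, 2, 3, 4, 1, 4, 3, 2, 1])

# One indicator column per level (X1..X4)
dummies = (status[:, None] == np.arange(1, 5)).astype(float)
intercept = np.ones((len(status), 1))

X_full = np.hstack([intercept, dummies])          # intercept + X1..X4
X_ref = np.hstack([intercept, dummies[:, :3]])    # X4 left out

rank_full = np.linalg.matrix_rank(X_full)
rank_ref = np.linalg.matrix_rank(X_ref)
print(X_full.shape[1], rank_full, X_ref.shape[1], rank_ref)
```

The full matrix has five columns but only rank four, so X'X is not invertible; after dropping X4 the four remaining columns have full rank.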

Dummy-Variables (3)

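$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \varepsilon$$

Then:

$$E(Y \mid X = 1) = \beta_0 + \beta_1 = \mu_1$$

$$E(Y \mid X = 2) = \beta_0 + \beta_2 = \mu_2$$

$$E(Y \mid X = 3) = \beta_0 + \beta_3 = \mu_3$$

$$E(Y \mid X = 4) = \beta_0 = \mu_4$$

Category 4 is called the reference category.

β1: difference between category 1 and category 4 (µ1 − µ4)

β2: difference between category 2 and category 4 (µ2 − µ4)

β3: difference between category 3 and category 4 (µ3 − µ4)

Test: µ1 = µ2 = µ3 = µ4 ⇔ β1 = β2 = β3 = 0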
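A sketch of reference-category coding on made-up data with four equally sized groups. The group means used to simulate the data are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(4)
group = np.repeat([1, 2, 3, 4], 15)                 # category of each observation
true_means = np.array([10.0, 12.0, 9.0, 11.0])      # made-up group means
y = true_means[group - 1] + rng.normal(0.0, 1.0, size=group.size)

# Intercept plus dummies for categories 1, 2, 3; category 4 is the reference
X = np.column_stack(
    [np.ones(group.size)] + [(group == k).astype(float) for k in (1, 2, 3)]
)
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

group_means = np.array([y[group == k].mean() for k in (1, 2, 3, 4)])
print(beta_hat)
print(group_means[3], group_means[:3] - group_means[3])
```

The estimated intercept reproduces the sample mean of category 4, and each dummy coefficient reproduces the difference between its category mean and the reference mean.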

Combine Dummy Variables and continuous X (1)

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \varepsilon$$

X1 ∈ {0, 1}: dummy variable; X2: continuous

$$E(Y \mid X_1 = 0, X_2) = \beta_0 + \beta_2 X_2$$

$$E(Y \mid X_1 = 1, X_2) = \beta_0 + \beta_1 + \beta_2 X_2$$

=⇒ Two parallel regression lines (Analysis of Covariance)

[Figure: scatter plot of Y (vertical axis, 0 to 3) against X2 (horizontal axis, 0.10 to 0.35)]

Combine Dummy Variables and continuous X (2)

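$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \underbrace{\beta_3 X_1 X_2}_{\text{Interaction}} + \varepsilon$$

$$E(Y \mid X_1 = 0, X_2) = \beta_0 + \beta_2 X_2$$

$$E(Y \mid X_1 = 1, X_2) = \beta_0 + \beta_1 + (\beta_2 + \beta_3) X_2$$

=⇒ Two different regression lines (Model with Interaction)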

[Figure: scatter plot of Y (vertical axis, 0 to 3) against X2 (horizontal axis, 0.10 to 0.35)]
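To illustrate the model with interaction, the following sketch fits it to synthetic data (all coefficients are made up) and compares it with separate straight-line fits in each group. With a single dummy and a single continuous regressor the two approaches give the same two lines.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 80
x1 = np.repeat([0.0, 1.0], n // 2)        # dummy variable
x2 = rng.uniform(0.1, 0.35, size=n)       # continuous regressor
y = 0.5 + 1.0 * x1 + 4.0 * x2 + 3.0 * x1 * x2 + rng.normal(0.0, 0.3, size=n)

# Model with interaction: columns 1, X1, X2, X1*X2
X = np.column_stack([np.ones(n), x1, x2, x1 * x2])
b0, b1, b2, b3 = np.linalg.lstsq(X, y, rcond=None)[0]

# Separate straight-line fits per group (np.polyfit returns the slope first)
slope0, icpt0 = np.polyfit(x2[x1 == 0], y[x1 == 0], 1)
slope1, icpt1 = np.polyfit(x2[x1 == 1], y[x1 == 1], 1)

print(b0, b2, "vs", icpt0, slope0)
print(b0 + b1, b2 + b3, "vs", icpt1, slope1)
```

Dropping the column `x1 * x2` from `X` would force a common slope and give the two parallel lines of the analysis of covariance model.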

Combine Dummy Variables and continuous X (3)

Tests:

• β3 = 0 ↔ Is there a different slope?

• β2 = β3 = 0 ↔ Any effect of X2 on Y?

• β1 = β3 = 0 ↔ Any effect of X1 on Y?

• β1 = β2 = β3 = 0 ↔ Any effect of X1 or X2 on Y?

−→ All of these are tested as general linear hypotheses.


Continuous Variables (1)

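Use transformations of X and Y:

$$\ln Y = \beta_0 + \beta_1 X + \varepsilon \quad \text{or} \quad \sqrt[3]{Y} = \beta_0 + \beta_1 X + \varepsilon$$

Example: Volume of eggs

$$V = \beta_0 + \beta_1 L + \beta_2 d + \varepsilon \quad ???$$

$$\ln V = \beta_0 + \beta_1 \ln(L) + \beta_2 \ln(d) + \varepsilon \iff V = \exp(\beta_0) \cdot L^{\beta_1} \cdot d^{\beta_2} \cdot \exp(\varepsilon)$$

Expected: β1 ≈ 1, β2 ≈ 2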
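A sketch of the log-log fit on simulated data. It assumes, purely for illustration, that L is the length and d the diameter of an egg, and that the volume follows the ellipsoid formula (π/6)·L·d² with multiplicative noise; none of the numbers come from real measurements.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 100
L = rng.uniform(5.0, 7.0, size=n)     # hypothetical lengths
d = rng.uniform(3.5, 5.0, size=n)     # hypothetical diameters
# Ellipsoid volume with multiplicative error (assumption for this demo)
V = (np.pi / 6.0) * L * d ** 2 * np.exp(rng.normal(0.0, 0.02, size=n))

# Linear model on the log scale: ln V = b0 + b1 ln L + b2 ln d + error
X = np.column_stack([np.ones(n), np.log(L), np.log(d)])
beta_hat, *_ = np.linalg.lstsq(X, np.log(V), rcond=None)
print(beta_hat)
```

Under this data-generating assumption the estimated exponents come out close to 1 for L and close to 2 for d, and exp(β0) estimates the constant π/6.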

Transformation

Transformation of X −→ different interpretation; comparison of models (R²) is possible.

Transformation of Y −→ different interpretation of all effects −→ the error term is transformed as well −→ different model

e.g.

$$\ln Y = \beta_0 + \beta_1 X + \varepsilon \iff Y = \exp(\beta_0 + \beta_1 X) \cdot \exp(\varepsilon)$$

=⇒ Multiplicative error structure

Continuous Variables (2)

Polynomial regression:

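$$Y = \beta_0 + \beta_1 X + \beta_2 X^2 + \beta_3 X^3 + \dots + \varepsilon$$

Note: the relationship between X and Y is nonlinear, but the model is linear in β.

In general:

$$Y = \beta_0 + \beta_1 f_1(X) + \beta_2 f_2(X) + \beta_3 f_3(X) + \dots + \varepsilon$$

f1, ..., fp are fixed, known functions.

Example: Regression spline

$$f_1(X) = X, \quad f_2(X) = X^2, \quad f_3(X) = (X - X_1)_+^2, \quad f_4(X) = (X - X_2)_+^2$$

where (u)_+ = max(u, 0) and X1, X2 are fixed knots.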
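The regression spline is fitted like any other linear model once the basis functions are evaluated. A minimal sketch, with made-up data and arbitrarily chosen knot positions; the helper `spline_basis` is hypothetical.

```python
import numpy as np

def spline_basis(x, knots):
    """Design matrix with columns 1, x, x^2 and one truncated quadratic per knot."""
    cols = [np.ones_like(x), x, x ** 2]
    cols += [np.clip(x - k, 0.0, None) ** 2 for k in knots]
    return np.column_stack(cols)

rng = np.random.default_rng(7)
x = np.sort(rng.uniform(0.0, 10.0, size=150))
y = np.sin(x) + rng.normal(0.0, 0.2, size=150)   # made-up nonlinear signal

knots = [3.3, 6.6]                                # hypothetical knot positions
B = spline_basis(x, knots)
beta_hat, *_ = np.linalg.lstsq(B, y, rcond=None)
sse_spline = np.sum((y - B @ beta_hat) ** 2)

# Straight-line fit for comparison (nested in the spline model)
line = np.column_stack([np.ones_like(x), x])
line_coef, *_ = np.linalg.lstsq(line, y, rcond=None)
sse_line = np.sum((y - line @ line_coef) ** 2)
print(sse_line, sse_spline)
```

Because the straight line is a special case of the spline model, the spline's SSE can never be larger; whether the reduction is significant is a question for the F-test of the corresponding linear hypothesis.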


Multiple Regression

• Rich tool with many possible models

• Comparison of models by SSE

• F-test of linear hypotheses

• Variable selection strategies

• Model check by residuals
