Informative and Uninformative Regions Detection in WCE Frames


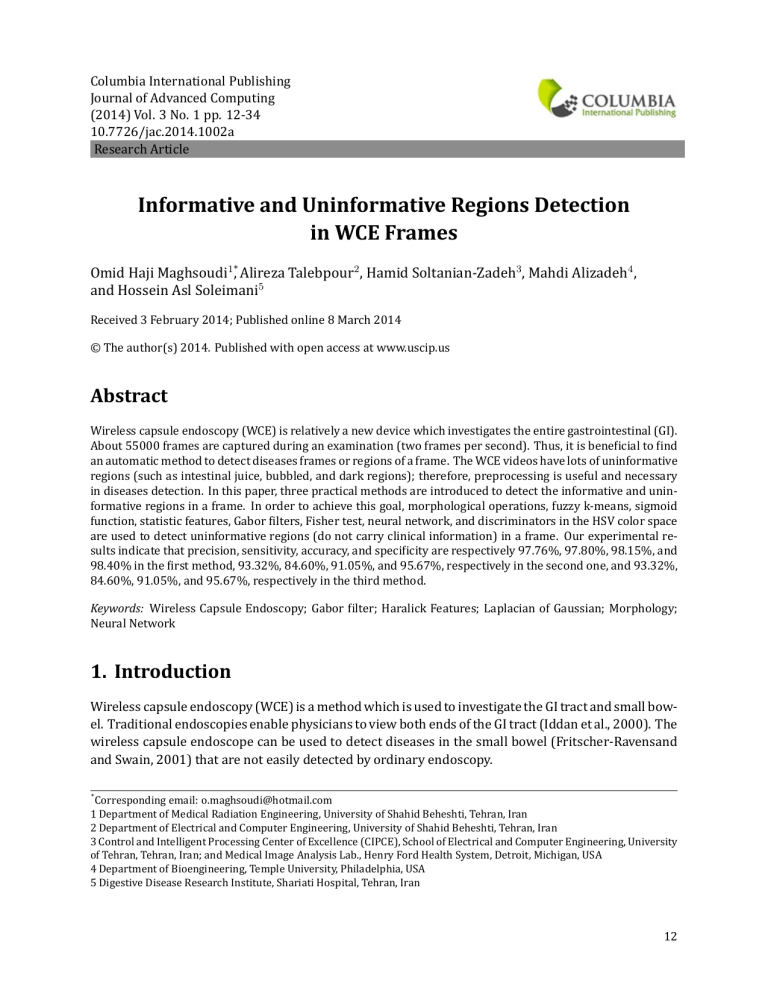

Columbia International Publishing
Journal of Advanced Computing (2014) Vol. 3 No. 1 pp. 12-34
DOI: 10.7726/jac.2014.1002a
Research Article

Omid Haji Maghsoudi1*, Alireza Talebpour2, Hamid Soltanian-Zadeh3, Mahdi Alizadeh4, and Hossein Asl Soleimani5

Received 3 February 2014; Published online 8 March 2014
© The author(s) 2014. Published with open access at www.uscip.us

Abstract

Wireless capsule endoscopy (WCE) is a relatively new device for investigating the entire gastrointestinal (GI) tract. About 55,000 frames are captured during an examination (two frames per second), so an automatic method to detect diseased frames, or diseased regions within a frame, is beneficial. WCE videos contain many uninformative regions (such as intestinal juice, bubbles, and dark regions); therefore, preprocessing is useful and necessary for disease detection. In this paper, three practical methods are introduced to detect the informative and uninformative regions in a frame. To achieve this goal, morphological operations, fuzzy k-means, a sigmoid function, statistical features, Gabor filters, the Fisher test, neural networks, and discriminators in the HSV color space are used to detect the uninformative regions (regions that do not carry clinical information) in a frame. Our experimental results indicate that precision, sensitivity, accuracy, and specificity are respectively 97.76%, 97.80%, 98.15%, and 98.40% in the first method; 93.32%, 84.60%, 91.05%, and 95.67% in the second; and 93.32%, 84.60%, 91.05%, and 95.67% in the third.

Keywords: Wireless Capsule Endoscopy; Gabor filter; Haralick Features; Laplacian of Gaussian; Morphology; Neural Network

1. Introduction

Wireless capsule endoscopy (WCE) is a method used to investigate the GI tract and the small bowel. Traditional endoscopy enables physicians to view both ends of the GI tract (Iddan et al., 2000).
The wireless capsule endoscope can detect diseases in the small bowel that are not easily detected by ordinary endoscopy (Fritscher-Ravens and Swain, 2001).

* Corresponding email: o.maghsoudi@hotmail.com
1 Department of Medical Radiation Engineering, University of Shahid Beheshti, Tehran, Iran
2 Department of Electrical and Computer Engineering, University of Shahid Beheshti, Tehran, Iran
3 Control and Intelligent Processing Center of Excellence (CIPCE), School of Electrical and Computer Engineering, University of Tehran, Tehran, Iran; and Medical Image Analysis Lab., Henry Ford Health System, Detroit, Michigan, USA
4 Department of Bioengineering, Temple University, Philadelphia, USA
5 Digestive Disease Research Institute, Shariati Hospital, Tehran, Iran

To reduce reviewing time and increase the accuracy of automatic abnormality (disease) detection, it is beneficial to find the regions of a frame that do not carry clinical information (uninformative regions). Eliminating the uninformative regions (dark or bubbled regions) of a frame can improve the detection of abnormalities. Moreover, a threshold set by physicians can reduce the number of frames to be reviewed, depending on the study; for example, a frame in which more than 70% of the area is uninformative can be labeled an uninformative frame and omitted from the video. This last advantage (choosing a threshold to omit frames) can help physicians find results more efficiently than a method that omits frames using a fixed procedure or fixed parameters; it can also help WCE manufacturers find the best threshold for their own software.
In addition to these benefits for physicians and manufacturers, a flexible threshold can help find special cases: for example, extraneous matter is treated as an uninformative region when detecting abnormalities, although detecting extraneous matter may be helpful in other studies (Oh et al., 2007). The methods proposed by Oh et al. (2007), Vilarino et al. (2006a), Vilarino et al. (2006b), Bejakovic et al. (2009), and Bashar et al. (2012) highlight the importance of detecting uninformative regions in WCE or colonoscopy videos. These studies examined methods to reduce visualization time, whereas the main purpose of this study is to devise a method to detect uninformative regions within a frame. Oh et al. (2007) devised an automatic method to reduce the number of uninformative (out-of-focus) frames in traditional endoscopy (colonoscopy) videos. Vilarino et al. (2006a, 2006b) proposed a method to decrease the number of frames using Gabor filters that characterize the bubble-like shape (Vilarino et al., 2006a). The proposed method reduced visualization time by removing frames full of juice or bubbles. They reported that their algorithm reduced the number of frames by 20%, but they did not report any measures for separate regions within a frame (their study focused on whole frames). In other words, their method omitted frames but did not distinguish between informative and uninformative regions within a frame. Vilarino et al. (2006b) studied ROC (receiver operating characteristic) curves with eight classifiers: the linear discriminant classifier (LDC), quadratic discriminant classifier (QDC), logistic classifier (LOGLC), nearest neighbour (K-NN) with K = 1, 5, and 10, decision trees (DT), and the Parzen classifier. Thirty-four features were extracted from nine consecutive frames.
The features were the mean (1 feature × 9 frames = 9 features), the hole size of the frames (9 features), the global contrast of each frame (9 features), the correlation among these features (6 features), and the variance among the frames. Their experimental results indicated that the proposed methods could reduce visualization time. Our methods, in contrast, can distinguish informative and uninformative regions within a frame. Although the methods proposed by Vilarino et al. (2006a, 2006b) performed well at omitting frames containing juice, they reported no notable results for air bubble detection.

Bejakovic et al. (2009) reported the average sensitivity and accuracy of extraneous matter and bubble detection for different methods: 76.50% and 87.30% using the MPEG-7 dominant color descriptor, 68.60% and 77.70% using the edge histogram descriptor, 65.10% and 88.90% using the homogeneous texture descriptor, and 61.30% and 77.70% using Haralick statistics. Bashar et al. (2012) introduced a two-step method to detect informative frames: the first step isolated highly contaminated non-bubbled (HCN) frames, and the second identified bubbled frames. They used local color moments and the HSV color histogram to characterize HCN frames, and a support vector machine (SVM) then classified the frames. In the second step, a texture-based Gauss-Laguerre transform (GLT) was used to isolate the bubble structures. Finally, informative frames were detected using a threshold on the segmented regions. Features were selected by an automatic procedure based on analyzing the consistency of the energy-contrast map.
Combinations of their proposed color and texture features achieved average detection accuracies of 86.42% and 84.45%. Like the previous studies, their work was limited to frame reduction. In this paper, three methods are introduced to distinguish informative from uninformative regions within a frame; the aim is not to reduce visualization time, although the methods can also be used to reduce frames (we study this for comparison with the previous methods). Moreover, as mentioned above, the methods can increase the accuracy of other automatic methods, such as the detection of different organs (Haji-Maghsoudi et al., 2012; Mackiewicz et al., 2008), bleeding regions (Li and Meng, 2009; Pan et al., 2010; Haji-Maghsoudi and Soltanian-Zadeh, 2013), and tumor regions (Haji-Maghsoudi and Soltanian-Zadeh, 2013; Kumar et al., 2012; Li and Meng, 2012; Haji-Maghsoudi et al., 2012). Pan et al. (2011) introduced a method based on color similarity measurements to find bleeding regions in a WCE frame. They also introduced another bleeding detection method using a probabilistic neural network (Pan et al., 2010). Li and Meng (2009) presented a method that uses the chrominance moment as a color feature to detect bleeding regions in a WCE frame. Junzhou et al. (2011) presented a method to detect bleeding regions based on contourlet features. Karargyris and Bourbakis (2009) presented a method using Gabor filters, color and texture features, and a neural network to detect ulcer regions in WCE frames. Karargyris and Bourbakis (2011) also developed a method to detect ulcer and polyp frames in WCE videos. Kumar et al. (2012) introduced a method to detect frames containing Crohn's disease. Haji-Maghsoudi et al. (2012) proposed a method to segment abnormal regions in a frame based on intensity values. In addition, Haji-Maghsoudi and Soltanian-Zadeh (2013) presented a method using LFP to detect frames containing diseased regions.
In addition to the research mentioned above, Mackiewicz et al. (2008), Coimbra and Silva Cunha (2006), and Szczypinski et al. (2012) combined geometry, color, and texture features in WCE frame analysis. Mackiewicz et al. (2008) proposed a method to discriminate between esophagus, stomach, small intestine, and colon tissue in WCE videos using a combination of geometry, color, and texture features. Compressed hue-saturation histograms were used to extract color features; LBP was used to extract color and texture features; each frame was divided into sub-images to define the regions of interest for feature extraction; and motion features were extracted using adaptive rood pattern search. The video was then segmented into meaningful parts using a support vector classifier and a multivariate Gaussian classifier built within the framework of a hidden Markov model. Haji-Maghsoudi et al. (2012) presented two methods to distinguish different organs in WCE videos based on texture and color features; these organ detection methods may be improved using the methods presented in this study.

As mentioned above, previous studies proposed methods for reducing visualization time to help physicians review WCE videos more easily. Although visualization time is an important issue for this device, omitting uninformative regions within a frame can help physicians in case studies, manufacturers in improving their software, and researchers in improving the results of their automatic methods; we therefore propose methods to achieve this goal. Three novel methods are proposed to detect bubble and juice regions in WCE frames.
Section 2 explains the methods and functions that are optimized and used in this study, Section 3 presents our experimental results, and Section 4 discusses the advantages and disadvantages of our methods.

2. The Proposed Algorithms

In this section, we describe the steps used in the three methods.

2.1 First Method: Using Morphological Operations

In the first method, the following steps are used:

1. First, a gray-scale image is generated from the RGB frame, and morphological operations are applied to it. Mathematical morphology (MM) comprises techniques and theories for the analysis and processing of geometrical structures, based on topology and random functions. MM operations were originally developed for binary images and were later extended to gray-scale functions and images (Haralick et al., 1987). Their subsequent generalization to complete lattices is widely accepted today as the theoretical foundation of MM. In this paper, dilation and closing operations are applied to the gray-scale image: four dilations and four closings with structuring elements of 13, 11, 9, and 7 pixels. To smooth the image after the MM operations, median filters (Lim, 1990) with window sizes of 25×25 and 35×35 pixels are used. Figure 1 shows the effect of this filter on sample frames.

2. Fuzzy k-means (FKM) clustering with five clusters is applied to find the lighter regions in a frame (as demonstrated in Figure 1). The FKM algorithm iterates until it reaches absolute classes (each point must belong to a class). The applications of FKM were described by Dring et al. (2006). Note that most bubbled regions are relatively light, and the morphological operations in the previous step increase this lightness further (they emphasize round objects such as bubbles). Five clusters are generated to distinguish among the borders (the darkest class, i.e., black pixels), dark regions (which do not carry important information for the next processes), tissue with medium intensities, light regions (normal or diseased regions usually fall into these last two groups), and the lightest regions (usually bubbled regions) of the frame after the MM operations. After clustering, the average intensity of the pixels in each cluster is calculated (the corresponding regions are extracted from the morphologically processed image with a logical AND operation). Then, a neural network (Leondes, 1998) with one hidden layer and an output layer divides the images into two groups: images containing no round objects, and images containing bubbles, juice, or other round objects.

Fig. 1. Column (a) shows the original frames, (b) the effect of the morphological operations on the frames in (a), (c) the median filter applied to (b), (d) fuzzy k-means with five clusters applied to (c), and (e) the sigmoid function applied to (c) after the first neural network.

Fig. 2. The effect of the sigmoid function with different parameters. Column (a) shows the original images and (b) the input image of the sigmoid function derived from (a). Columns (c), (d), and (e) show the results of applying the sigmoid function with Cutoff = 0.95, 0.75, and 0.55, respectively, and Gain = 50.

3. Next, a sigmoid function is used to segment the region of interest for the next processes, based on the output of the first neural network. The sigmoid function is a continuous non-linear function; its name derives from its S shape (Ramesh et al., 1995). With f(x) as the output and G as the gain:

$$ f(x) = \frac{1}{1 + e^{-Gx}} \tag{1} $$

Here, a modified sigmoid with additional parameters is used, where I(i, j) is the input image (without any further processing), J(i, j) is the output image, Gain changes the slope, and Cutoff shifts the data:

$$ J(i, j) = \frac{1}{1 + e^{\mathrm{Gain} \times (\mathrm{Cutoff} - I(i, j))}} \tag{2} $$

The sigmoid function maps the input values to between zero and one; the output image is then rescaled to the gray-scale range [0, 255]. The use of the sigmoid function for disease segmentation in WCE frames was also illustrated by Haji-Maghsoudi et al. (2012). Depending on the class assigned in step 2, the sigmoid function is applied with the following parameters:

• Frames with round objects: Gain = 50, Cutoff = 0.55
• Frames without round objects: Gain = 50, Cutoff = 0.95

Figure 1 demonstrates the effects of these processes, and Figure 2 shows how changing the parameters alters the segmented regions. The image is then divided into 256 sub-images of 32×32 pixels in order to achieve more accurate results.

4. The Gabor filter (G function) is a sinusoidal plane wave of a particular frequency and orientation, modulated by a Gaussian envelope, which is convolved with the image. This filter has good localization properties in both the spatial and frequency domains and has been used for texture segmentation by Jain et al. (1997), Clausi and Jernigan (2000), and Bovik et al. (1990).
The impulse response of the 2-D Gabor filter is

$$ G(x, y) = \frac{1}{2\pi\sigma_x\sigma_y} \exp\left[-\left(\frac{X^2}{2\sigma_x^2} + \frac{Y^2}{2\sigma_y^2}\right)\right] e^{i(2\pi f X + \phi)} \tag{3} $$

$$ X = x\cos\theta + y\sin\theta \tag{4} $$

$$ Y = -x\sin\theta + y\cos\theta \tag{5} $$

where θ is the rotation angle of the impulse response, x and y are the coordinates, σx and σy are the standard deviations of the Gaussian envelope in the x and y directions, respectively, and f and ϕ are the frequency and phase of the sinusoid, respectively. A filter bank of 24 Gabor filters is created from the following parameter combinations: f = 0.25, 1, and 3; θ = 0, 45, 90, and 135 degrees; ϕ = 0; x and y ranges = 10; (σx, σy) = (1, 2) and (1, 1). The Gabor filter parameters were selected experimentally, guided by the method presented by Bovik et al. (1990).

Fig. 3. Some final frames of the first method. Rows (a) and (c) are the original images without any processing, and rows (b) and (d) are the output images after all processes are applied to the original images.

5. After applying the Gabor filter bank, the 14 Haralick features are extracted from the gray-level co-occurrence matrix (GLCM): contrast, correlation, entropy, energy, difference variance, difference entropy, information measure of correlation 1, information measure of correlation 2, inverse difference, sum average, sum variance, sum of squares, sum entropy, and maximum correlation coefficient (Haralick et al., 1973). Other statistical features are also extracted from the GLCM: autocorrelation, cluster prominence, cluster shade, dissimilarity, homogeneity, maximum probability, inverse difference normalized, and inverse difference moment normalized (Soh and Tsatsoulis, 1999; Clausi, 2002).
Three further features, the mean, skewness, and kurtosis, are extracted from the gray-scale image. In this paper, "GLCM features" refers to these 25 features (14 Haralick features and 11 other statistical features). Therefore, the 25 features of each of the 24 Gabor filters produce 600 features per sub-image, and another 100 features are obtained by computing the GLCM features of the gray-scale frame at four angles (0, 45, 90, and 135 degrees). As a result, an n×700 feature matrix is created, where n is the number of sub-images (at most 256).

6. The Fisher test (Gu et al., 2012) is used to evaluate the features and choose 100 features for each sub-image (the features are normalized between 0 and 1 before the Fisher test). A second neural network is then trained on the selected features, so that each sub-image can be classified as informative or uninformative.

7. The steps above recognize the bubbled regions of a frame at a resolution of 32×32 pixels. Next, thresholds on hue, saturation, and value are applied (Naik and Murthy, 2003) to omit the dark regions. In the final step, a median filter (this time with a window size of 15) is applied to smooth the segmented regions. The gray-scale image is converted to binary, and a logical AND is then used to omit the dark regions in the RGB frame. This step is illustrated in Figure 10. The HSV ranges are:

• Hue ≥ 50.4, Saturation ≥ 0.75, Value ≤ 0.5
• Hue ≥ 90, any Saturation, any Value
• Any Hue, any Saturation, Value ≤ 0.25

The HSV discriminator ranges are studied in the Results section.
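Equation (2) can be illustrated with a minimal pure-Python sketch. It assumes intensities already normalized to [0, 1], an image stored as a list of rows, and a final rescale obtained by multiplying by 255 (the text says only that the output is rescaled to [0, 255]); the names `sigmoid_stretch` and `SIGMOID_PARAMS` are hypothetical.

```python
import math


def sigmoid_stretch(image, gain=50.0, cutoff=0.55):
    """Apply Eq. (2), J = 1 / (1 + exp(gain * (cutoff - I))), then scale to [0, 255].

    `image` is a list of rows holding intensities normalized to [0, 1].
    """
    out = []
    for row in image:
        out.append([
            round(255 * (1.0 / (1.0 + math.exp(gain * (cutoff - value)))))
            for value in row
        ])
    return out


# Parameters reported for the two output classes of the first neural network.
SIGMOID_PARAMS = {
    "round_objects": {"gain": 50.0, "cutoff": 0.55},
    "no_round_objects": {"gain": 50.0, "cutoff": 0.95},
}

print(sigmoid_stretch([[0.2, 0.55, 0.9]], **SIGMOID_PARAMS["round_objects"]))
# [[0, 128, 255]]
```

With Gain = 50 the curve is almost a step at Cutoff: a pixel exactly at Cutoff maps to mid-gray, while with Cutoff = 0.95 a pixel of intensity 0.55 is pushed to black, so the higher Cutoff keeps only the very brightest regions.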
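The 24-filter bank built from Eqs. (3) to (5) can be sketched as follows. This rests on stated assumptions: "x and y ranges = 10" is read as a sampling grid from -10 to 10 (21×21 taps), θ is given in degrees, f is used exactly as listed (the text does not state its units), and only kernel construction is shown, not the convolution with each sub-image. The function names are hypothetical.

```python
import cmath
import itertools
import math


def gabor_kernel(f, theta_deg, sigma_x, sigma_y, phi=0.0, half_size=10):
    """Complex 2-D Gabor kernel from Eqs. (3)-(5); rows index y, columns index x."""
    theta = math.radians(theta_deg)
    norm = 1.0 / (2.0 * math.pi * sigma_x * sigma_y)
    kernel = []
    for y in range(-half_size, half_size + 1):
        row = []
        for x in range(-half_size, half_size + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)   # Eq. (4)
            yr = -x * math.sin(theta) + y * math.cos(theta)  # Eq. (5)
            envelope = math.exp(-(xr ** 2 / (2 * sigma_x ** 2) + yr ** 2 / (2 * sigma_y ** 2)))
            carrier = cmath.exp(1j * (2 * math.pi * f * xr + phi))
            row.append(norm * envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank():
    """3 frequencies x 4 orientations x 2 (sigma_x, sigma_y) pairs = 24 kernels."""
    freqs = (0.25, 1.0, 3.0)
    thetas = (0, 45, 90, 135)
    sigmas = ((1.0, 2.0), (1.0, 1.0))
    return [
        gabor_kernel(f, t, sx, sy)
        for f, t, (sx, sy) in itertools.product(freqs, thetas, sigmas)
    ]
```

Convolving each of the 24 kernels with a sub-image and computing the 25 GLCM features of every response would give the 24 × 25 = 600 features per sub-image described in step 5.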
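A GLCM and a few of the features named in step 5 (contrast, energy, homogeneity, and entropy) can be computed in plain Python as below. The quantization to 8 gray levels, the distance of 1 pixel, the (row, column) offsets chosen for 0, 45, 90, and 135 degrees, and the homogeneity definition sum p/(1 + |i - j|) are assumptions for illustration; the remaining features of the 25-feature set are omitted, and the names are hypothetical.

```python
import math

# (row, column) offsets at distance 1 for the four angles (an assumed convention).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(image, angle=0, levels=8):
    """Normalized gray-level co-occurrence matrix of an 8-bit image for one offset."""
    dr, dc = OFFSETS[angle]
    q = [[v * levels // 256 for v in row] for row in image]  # quantize 0..255 to `levels`
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(q), len(q[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[q[r][c]][q[r2][c2]] += 1
    total = sum(map(sum, counts))
    return [[n / total for n in row] for row in counts]


def glcm_features(p):
    """Contrast, energy (angular second moment), homogeneity, and entropy of a GLCM."""
    feats = {"contrast": 0.0, "energy": 0.0, "homogeneity": 0.0, "entropy": 0.0}
    for i, row in enumerate(p):
        for j, pij in enumerate(row):
            feats["contrast"] += (i - j) ** 2 * pij
            feats["energy"] += pij ** 2
            feats["homogeneity"] += pij / (1 + abs(i - j))
            if pij > 0:
                feats["entropy"] -= pij * math.log2(pij)
    return feats
```

Running `glcm_features(glcm(frame, angle))` for the four angles is the pattern behind the 100 frame-level features (25 features × 4 angles).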
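The feature selection of step 6 can be sketched with the classical per-feature Fisher score: between-class scatter divided by within-class scatter, computed after min-max normalization to [0, 1], keeping the top k features (k = 100 in the text). The exact variant of the Fisher test used by the authors is not spelled out here, so this per-feature formulation is an assumption, and the function names are hypothetical.

```python
def min_max_normalize(rows):
    """Scale every feature (column) to [0, 1]; constant columns become 0."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [
        [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
        for row in rows
    ]


def fisher_scores(rows, labels):
    """Per-feature Fisher score: sum_k n_k (mu_k - mu)^2 / sum_k sum_i (x_i - mu_k)^2."""
    classes = sorted(set(labels))
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        overall = sum(column) / len(column)
        between = within = 0.0
        for k in classes:
            values = [v for v, y in zip(column, labels) if y == k]
            mean_k = sum(values) / len(values)
            between += len(values) * (mean_k - overall) ** 2
            within += sum((v - mean_k) ** 2 for v in values)
        if within > 0:
            scores.append(between / within)
        else:
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def select_features(rows, labels, k=100):
    """Indices of the k highest-scoring features after min-max normalization."""
    scores = fisher_scores(min_max_normalize(rows), labels)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]
```

Here `rows` would be the n×700 feature matrix and `labels` the informative/uninformative label of each sub-image; the selected columns then form the input of the second neural network.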
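The three HSV omission rules of step 7 can be checked per pixel with Python's standard `colorsys` module. The sketch assumes hue is expressed in degrees (as the thresholds 50.4 and 90 suggest), saturation and value lie in [0, 1], and a pixel is discarded when it satisfies every condition of at least one rule; the names `RULES`, `is_uninformative`, and `informative_mask` are hypothetical.

```python
import colorsys

# Omission rules from step 7: hue in degrees, saturation and value in [0, 1] (assumed).
RULES = (
    lambda h, s, v: h >= 50.4 and s >= 0.75 and v <= 0.5,
    lambda h, s, v: h >= 90.0,
    lambda h, s, v: v <= 0.25,
)


def is_uninformative(r, g, b):
    """True if an 8-bit RGB pixel meets every condition of at least one rule."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return any(rule(h * 360.0, s, v) for rule in RULES)


def informative_mask(image):
    """1 for kept (informative) pixels, 0 for omitted ones; image is rows of (r, g, b)."""
    return [[0 if is_uninformative(*px) else 1 for px in row] for row in image]
```

Because hue is circular, red pixels whose hue lies just below 360 degrees also satisfy the second rule as written; the text does not say how wrap-around is handled.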
To justify the first algorithm, the following factors should be considered: the MM operations are designed to emphasize round objects in a frame; the median filter is used to smooth the frame, not to reduce noise; fuzzy k-means classifies the pixels of a frame into five clusters; the sigmoid function is an effective way to highlight the selected region after clustering; texture features are extracted from the sub-images and used as neural network inputs, while the Fisher score test selects the best features; and the HSV discriminator plays a significant role in omitting dark parts of a frame. Because the HSV discriminator and the sub-images determine the resolution at which the neural network detects regions, a median filter is used to smooth the output image.

Fig. 4. The left chart is the first proposed algorithm, and the right one shows the second algorithm.

2.2 Second Method: Using the LOG Filter

In the second algorithm, the Laplacian of Gaussian (LOG) filter is used (this is the first time LOG has been used for bubble-shape recognition). The Gaussian can be used in edge detection. First, the Gaussian function is smooth and localized in both the spatial and frequency domains, providing a good compromise between avoiding false edges and minimizing errors in edge position (Marr and Hildreth, 1980). In fact, Torre and Poggio (1986) described the Gaussian as the only real-valued function that minimizes the product of the spatial-domain and frequency-domain spreads. Because of its differentiating and smoothing behavior, the Laplacian of Gaussian essentially acts as a band-pass filter. Second, the Gaussian is separable, which makes computation very efficient. Moreover, LOG was recently used to detect blobs in microscopic images, which shows its usefulness for detecting round objects (Akakin and Sarma, 2013).
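A LOG kernel can be sampled directly from its closed form, LoG(x, y) = -(1 / (π σ⁴)) [1 - (x² + y²) / (2σ²)] exp(-(x² + y²) / (2σ²)). The sketch below assumes σ is in pixels (the text does not state the units of sigma = 0.09), truncates the kernel at about 3σ, and subtracts the mean so that flat regions give zero response; a bright round spot then produces a strong negative response at its center. The function names are hypothetical.

```python
import math


def log_kernel(sigma, half_size=None):
    """Zero-mean Laplacian-of-Gaussian kernel sampled on an integer grid (sigma in pixels)."""
    if half_size is None:
        half_size = max(1, math.ceil(3 * sigma))  # truncate at about 3 sigma
    kernel = []
    for y in range(-half_size, half_size + 1):
        row = []
        for x in range(-half_size, half_size + 1):
            q = (x * x + y * y) / (2.0 * sigma * sigma)
            row.append(-(1.0 - q) * math.exp(-q) / (math.pi * sigma ** 4))
        kernel.append(row)
    mean = sum(map(sum, kernel)) / len(kernel) ** 2
    return [[value - mean for value in row] for row in kernel]


def response_at(image, kernel, r, c):
    """Kernel response centred on pixel (r, c); pixels outside the image count as zero.

    The LOG kernel is symmetric, so correlation and convolution coincide here.
    """
    h = len(kernel) // 2
    total = 0.0
    for dr in range(-h, h + 1):
        for dc in range(-h, h + 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr < len(image) and 0 <= cc < len(image[0]):
                total += kernel[dr + h][dc + h] * image[rr][cc]
    return total
```

Zeroing the kernel mean is a common design choice for sampled LOG filters: truncation otherwise leaves a small DC component, which would make the response depend on overall brightness rather than on blob shape.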
The second algorithm consists of the following steps:

1. The HSV discriminator is applied with the same ranges as in the first method, and the median filter is then applied with the same window size as in the first method. The HSV discriminator comes first here because it reduces the time needed by the subsequent processes. In the first method, it could not be used as the first step because it would affect the features extracted with the Gabor filters, which are not used here (Gabor filters extract texture and shape features, and the discriminator can alter shapes).

Fig. 5. Row (a) shows the original frames, row (b) shows the effect of LOG with sigma = 0.09 on (a), and row (c) shows LOG with sigma = 0.3.

Fig. 6. Some final frames after applying the second method. Row (a) shows the results of the different processes on a sample frame (from left to right: original, HSV discrimination and median filter, LOG, after the neural network (3 images), and HSV discrimination and median filter again). Row (b) shows eight original images whose output images are shown in row (c).

2. The gray-scale histogram is equalized. Zimmerman et al. (1988) described the advantages of histogram equalization in image processing, and Cromartie and Pizer (1991) demonstrated the effectiveness of adaptive histogram equalization for edge detection.

3. The LOG filter is applied with sigma = 0.09. Figure 5 shows the effect of this filter on frames.

4. As in the first method, 32×32 sub-images are created. Unlike the first algorithm, however, the preprocessing steps (morphological operations, FKM, and the sigmoid function, which focus the first method on bubbled regions) and the Gabor filters are not used here. Instead, the GLCM features are extracted for each sub-image: 25 features from the original frame and 25 features after applying LOG.

5. A neural network is then trained on these fifty features.

The scheme of the second algorithm is illustrated in Figure 4. Its components are used for the same reasons given for the first algorithm (LOG replaces MM, fuzzy k-means, and the sigmoid function), except for two additional changes: histogram equalization and LOG. Histogram equalization enhances the gray-scale frames and helps LOG find edges better; LOG is the main detector of bubble parts in a frame.

2.3 Third Method: Using the Chan-Vese Active Contour

Chan and Vese proposed models based on the original Mumford-Shah model to segment inhomogeneous objects (Truc et al., 2011; Li et al., 2011; Chan and Vese, 2001; Samson et al., 2000; Zhang et al., 2010). They formulated the optimization over discontinuities as a curve evolution problem, using the level set method and solving Poisson partial differential equations. Although these methods represent the objects of interest well, the process is very complicated and requires a good initialization (Truc et al., 2011). The Chan-Vese model is a region-based active contour; it therefore does not use the image gradient and performs better on images with weak object boundaries. Moreover, this model is less sensitive to the initial contour, although choosing an initial contour near the object reduces the computational cost (Li et al., 2007).
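The region-based idea behind the Chan-Vese model can be made concrete by evaluating its energy for a candidate binary segmentation: a length penalty plus the squared deviation of each region from its mean intensity (c1 inside, c2 outside). This sketch only scores a given mask; it omits the area term, approximates contour length by counting label changes between 4-neighbours, and does not implement the level-set evolution used by the authors. All names and default weights are hypothetical.

```python
def region_means(image, mask):
    """Mean intensity inside (mask == 1) and outside (mask == 0) the contour: c1, c2."""
    inside, outside = [], []
    for img_row, mask_row in zip(image, mask):
        for value, m in zip(img_row, mask_row):
            (inside if m else outside).append(value)
    c1 = sum(inside) / len(inside) if inside else 0.0
    c2 = sum(outside) / len(outside) if outside else 0.0
    return c1, c2


def boundary_length(mask):
    """Approximate contour length: number of 4-neighbour pairs with different labels."""
    rows, cols = len(mask), len(mask[0])
    length = 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols and mask[r][c] != mask[r][c + 1]:
                length += 1
            if r + 1 < rows and mask[r][c] != mask[r + 1][c]:
                length += 1
    return length


def chan_vese_energy(image, mask, mu=1.0, lambda1=1.0, lambda2=1.0):
    """mu * Length(C) + lambda1 * sum_in (I - c1)^2 + lambda2 * sum_out (I - c2)^2."""
    c1, c2 = region_means(image, mask)
    fit_in = fit_out = 0.0
    for img_row, mask_row in zip(image, mask):
        for value, m in zip(img_row, mask_row):
            if m:
                fit_in += (value - c1) ** 2
            else:
                fit_out += (value - c2) ** 2
    return mu * boundary_length(mask) + lambda1 * fit_in + lambda2 * fit_out
```

A mask that matches a bright region scores lower than a shifted one; the level-set evolution moves the contour in the direction that lowers this energy.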
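Because an initial contour near the object lowers the computational cost, the third method seeds the contour from black spots in the negative image, using the median point of the spot coordinates. A minimal sketch, assuming an 8-bit gray-scale frame, a hypothetical threshold of 20 on the negative image (the text does not give the value), and a coordinate-wise median; returning `None` stands for a frame without suspicious spots. The function names are hypothetical.

```python
import statistics


def black_spots(gray, threshold=20):
    """(row, col) of pixels at or below `threshold` in the negative image 255 - gray."""
    return [
        (r, c)
        for r, row in enumerate(gray)
        for c, value in enumerate(row)
        if 255 - value <= threshold
    ]


def initial_seed(gray, threshold=20, min_spots=1):
    """Coordinate-wise median of the black spots, or None if too few spots are found."""
    spots = black_spots(gray, threshold)
    if len(spots) < min_spots:
        return None  # frame not flagged as suspicious for bubbles
    rows = [r for r, _ in spots]
    cols = [c for _, c in spots]
    return statistics.median_low(rows), statistics.median_low(cols)
```

`median_low` keeps the seed on an actual pixel index; a plain median could fall between two pixels when the number of spots is even.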
The third algorithm consists of the following steps:

Fig. 7. The schematic of the third method.

1. A threshold is defined to find the frames that have black spots, which are suspicious for being bubbled regions. As illustrated in Figure 8, black spots are more prevalent in frames containing bubbled regions than in frames without any uninformative regions.

Fig. 8. Black spots are shown in negative images.

2. If black spots are found, the Chan-Vese active contour is used to determine the bubbled regions in the frame; this process is demonstrated in Figure 9. The initial seeds for the Chan-Vese active contour are found from the black spots in the negative image, and the effect of using black spots as initial seeds is also demonstrated in Figure 9. In this paper, the initial seed of the contour is defined as the median point of the vector generated from the black spots in a frame.

3. The HSV discriminator is used to omit dark regions.

The overview of this algorithm is illustrated in Figure 7. The number of dark pixels was counted for all frames; as Table 1 shows, this number is higher in frames containing bubbled regions than in frames without them.

Fig. 9. The effect of the initial seed on the Chan-Vese active contour. The left image shows the result for a sample frame without using the black spots, and the right one shows the result of using the black spots as the initial seed.

Table 1. Quantitative comparison of dark pixels in the presence and absence of bubbles.

3. Experiments and Results

The Given Imaging PillCam SB videos are collected from the Given Imaging website (givenimageing.com). Each video is digitized into 40 frames (the recording time is 20 seconds and the device captures two frames per second). The frame size is 576×576; it is reduced to 512×512 by omitting the borders. In the proposed methods, each frame is divided into 32×32-pixel sub-images, so 256 sub-images are generated from a frame. In total, 60 videos are selected, from which 2400 frames are extracted (984 of them contain bubbled regions).

What do "air bubble" and "juice" mean here? In Figure 1, row 6 shows an example of a frame containing air bubble regions and row 2 shows an example of a frame containing juice. If the bubbled regions in a frame cover more than 5000 pixels, the frame counts as containing air bubble regions. This type of bubble is more difficult to detect because it is more similar to the informative regions (especially in the second and third methods). If the bubbled regions cover fewer than 5000 pixels, the frame counts as containing juice in this study (juice thus means intestinal juice and small air bubbles). A specialist (Dr. Soleimani) distinguishes between the two region types (informative and uninformative) in the WCE frames.

A feed-forward back-propagation multilayer neural network is used in the first and second methods, with three hidden layers (for the second network only). In the second neural network of the first method, the input layer takes the one hundred selected features, the first hidden layer has 39 neurons, the second 15, and the third 6, and the output layer has two neurons to classify the regions into two classes (informative and uninformative).
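The frame geometry and the air-bubble/juice rule described above can be sketched as follows. The centered crop (removing 32 border pixels on each side) is an assumption, since the text says only that borders are omitted; the "none" label for frames with no bubbled pixels and all function names are likewise hypothetical.

```python
def crop_center(frame, size=512):
    """Drop equal borders so that a 576 x 576 frame becomes size x size."""
    top = (len(frame) - size) // 2
    left = (len(frame[0]) - size) // 2
    return [row[left:left + size] for row in frame[top:top + size]]


def split_into_blocks(frame, block=32):
    """Row-major list of block x block sub-images (256 for a 512 x 512 frame)."""
    return [
        [row[c:c + block] for row in frame[r:r + block]]
        for r in range(0, len(frame), block)
        for c in range(0, len(frame[0]), block)
    ]


def bubble_label(bubble_mask, min_pixels=5000):
    """'air bubble' above min_pixels bubbled pixels, 'juice' otherwise, 'none' if no bubbles."""
    count = sum(map(sum, bubble_mask))
    if count == 0:
        return "none"
    return "air bubble" if count > min_pixels else "juice"
```

Since 512 / 32 = 16, the tiling yields 16 × 16 = 256 sub-images per frame, matching the maximum n of the n×700 feature matrix.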
To define the number of neurons in the hidden layers, six values between 20 and 60 for the first hidden layer (called X), five values between 0.2X and 0.6X for the second layer (called Y), and three values between 0.2Y and 0.6Y for the third layer are selected using random sub-sampling cross-validation (the factors 0.2 and 0.6 keep the search within a plausible range and reduce computation). Finally, the numbers of neurons are chosen based on the best neural network performance. The best performance among the networks is 0.016 in the first method. The first network of the first method is a simple network with one hidden layer of 5 neurons.

Table 2. Accuracy, sensitivity, specificity, and precision of the methods (all uninformative parts).
Table 3. Accuracy, sensitivity, specificity, and precision of the methods (air-bubbled and dark parts).

Accuracy, precision, sensitivity, and specificity are measured as follows:

$$ \text{Sensitivity} = \frac{TP}{TP + FN} \tag{6} $$

$$ \text{Specificity} = \frac{TN}{TN + FP} \tag{7} $$

$$ \text{Accuracy} = \frac{TP + TN}{TP + FN + TN + FP} \tag{8} $$

$$ \text{Precision} = \frac{TP}{TP + FP} \tag{9} $$

where TP, FN, TN, and FP denote, respectively, the number of pixels in unimportant regions that are correctly labeled, the number of pixels in unimportant regions that are incorrectly labeled as important, the number of pixels in important regions that are correctly labeled as important, and the number of pixels in important regions that are incorrectly labeled as unimportant (bubble, juice, and dark regions) (Altman and Bland, 1994). The accuracy, precision, sensitivity, and specificity computed for the methods are given in Table 2.

As mentioned in Section 2, the HSV discriminator ranges can influence these measures.
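Equations (6) to (9) can be computed directly from a predicted mask and a ground-truth mask. In this sketch, 1 marks an uninformative (bubble, juice, or dark) pixel, matching the definition of TP above; the masks are lists of rows, and the function names are hypothetical.

```python
def confusion_counts(predicted, truth):
    """Pixel-level TP, FN, TN, FP with 1 = uninformative (the positive class)."""
    tp = fn = tn = fp = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if t and p:
                tp += 1
            elif t:
                fn += 1
            elif not p:
                tn += 1
            else:
                fp += 1
    return tp, fn, tn, fp


def measures(predicted, truth):
    """Sensitivity, specificity, accuracy, and precision as in Eqs. (6)-(9)."""
    tp, fn, tn, fp = confusion_counts(predicted, truth)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "precision": tp / (tp + fp),
    }
```

For example, a prediction of [[1, 1, 0, 0, 0]] against ground truth [[1, 1, 1, 0, 0]] gives TP = 2, FN = 1, TN = 2, FP = 0, so sensitivity is 2/3 while specificity and precision are both 1.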
Here, the effects of some of the HSV discriminators listed in Table 4 are studied; these effects are illustrated in Figure 10. The chosen discriminator is divided into three ranges, (7), (8), and (9). The first range (7) is the main discriminator for omitting intestinal juice in the GI tract, the second (8) is used to omit extraneous matter with very different color values, and the third (9) is used to omit dark regions by controlling the intensity value. As Figure 10 shows, hue determines the color of the different objects in a frame. Normal tissue usually has a hue value lower than 0.1. The second, third, and fourth rows of Figure 10 (especially the fourth row) show that most of the informative tissue remains after applying a discriminator that removes pixels with hue higher than 0.1. Therefore, the search for the best range starts by setting Hue = 0.1. To optimize the ranges, a pace (step size) is selected and the procedure in Figure 11 is followed: the best value is found first for the hue channel, then for the saturation channel, and finally for the value channel. As discussed, the set point and pace for hue are 0.1 and 0.01, respectively.
Table 4. The HSV discriminators.
Table 5. The tested values for selecting the best sigmoid function cutoff value and the corresponding results.
Fig. 10. The first row shows the original frames; the parts remaining after applying discriminators one to seven are shown in the second to eighth rows, respectively. The last row shows the parts remaining after applying the chosen discriminator to the original frames.
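A pixel-wise HSV discriminator of this kind can be sketched in Python. The hue bound of 0.1 comes from the text; the dark-region bound `VALUE_MIN = 0.2` is a hypothetical placeholder, because the actual ranges in Table 4 are not reproduced here, and the saturation channel and the second (extraneous-matter) range are omitted for brevity. Pixels are converted with the standard-library `colorsys.rgb_to_hsv`, which expects RGB components in [0, 1] and returns hue, saturation, and value in [0, 1].

```python
import colorsys

import numpy as np

HUE_MAX = 0.1    # hue bound quoted in the text
VALUE_MIN = 0.2  # HYPOTHETICAL dark-region bound (Table 4 values not reproduced)


def rgb_to_hsv_image(rgb):
    """Convert an (..., 3) RGB array with values in [0, 1] to HSV, pixel by pixel."""
    flat = np.asarray(rgb, dtype=float).reshape(-1, 3)
    hsv = np.array([colorsys.rgb_to_hsv(r, g, b) for r, g, b in flat])
    return hsv.reshape(np.shape(rgb))


def uninformative_mask(rgb, hue_max=HUE_MAX, value_min=VALUE_MIN):
    """True where a pixel looks like juice (hue above hue_max) or is too dark."""
    hsv = rgb_to_hsv_image(rgb)
    hue, value = hsv[..., 0], hsv[..., 2]
    juice_like = hue > hue_max  # first range: yellowish/greenish content
    dark = value < value_min    # third range: low-intensity regions
    return juice_like | dark


# One reddish tissue pixel, one yellow-green juice pixel, one dark pixel.
img = np.array([[[0.8, 0.3, 0.2], [0.5, 0.6, 0.1], [0.05, 0.02, 0.02]]])
print(uninformative_mask(img))  # [[False  True  True]]
```

Hue is circular, so reddish tissue with a hue close to 1.0 would also exceed the 0.1 bound in this simplified sketch; a practical discriminator would need a wrap-around hue range.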
The same algorithm is applied to determine the saturation and value parameters; their set points are 0.6 and their paces are 0.1. The results of these processes are shown in Figure 12. The algorithm for finding the best HSV discriminator is applied to select the first and third ranges, with the results illustrated in Figure 12. The second range is used to omit some extraneous matter; in this study it is neither important nor effective. Moreover, the same algorithm is applied to select the median filter window sizes, and 25 and 35 pixels are chosen.
The sigmoid function parameters (Cutoff and Gain) are again selected using cross validation, and the chosen parameters give the best performance. According to equation (3), the Gain is not a determining factor compared with the Cutoff value; a few tests show that the Gain (when higher than one) can be held constant, so Gain = 50 is selected and then the effect of the Cutoff value is studied. The algorithm illustrated in Figure 11 is applied to select the best Cutoff. The pace is 0.1, and two set points are defined using random sub-sampling cross validation. One point is important when measuring the selection of uninformative regions: detecting the uninformative regions (sensitivity) matters more. Therefore, both sensitivity and specificity are calculated, sensitivity is weighted by 3 and specificity by 1, and the weighted average determines the best Cutoff; the procedure is illustrated in Table 6.
Fig. 11. The proposed procedure for finding the best values of the parameters.
Fig. 12. The results during the procedure of selecting the best HSV discriminator. The black, green, and blue points illustrate the results for hue, saturation, and value in the first range, based on the method in Figure 11. The red points show the results while finding the best value for the third range.
The next parameter is the sigma used in the second method (the LoG parameter). Again using the parameter-optimization algorithm (Figure 11), two set points (0.08 and 0.2) are selected and the pace is 0.02. The best sigma value is 0.09, but most of the tested values mark the bubbled regions very well and the differences are very small (less than 0.002 change in sensitivity). Note that if sigma is higher than 0.5 the output image is completely dark (suspected regions are not segmented), and if sigma is lower than 0.05 it is difficult to find bubbled regions; so good results can be obtained when sigma is higher than 0.05 and lower than 0.5.
The last parameter is the median filter, which was used twice in both methods. In the first method, it was used to smooth the frame after the morphological operations and then to smooth the result after applying the HSV discriminators. In the second method, it was applied after the HSV discriminator and at the end of the process, after the neural network. In the first method, the first median filter has a 35×35 window and the second a 15×15 window. In the second method, both median filters use 15×15 windows. To select the best window size, the same algorithm was used (as illustrated in Figure 11): random sub-sampling cross validation determines two set points lower than 100, and the pace is 10. The parameters were optimized in sequence to obtain the best result.
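The Cutoff selection can be sketched in plain Python. Equation (3) is not reproduced in this section, so the logistic form `1 / (1 + exp(gain * (cutoff - x)))` used below is an assumption, as is this reading of the Figure 11 procedure: starting from a set point, the parameter moves by one pace at a time in each direction while the score improves, and the better result of the two set points is kept. The weights 3 (sensitivity) and 1 (specificity) and the Gain of 50 come from the text; all function names are hypothetical.

```python
import math


def sigmoid_contrast(x, cutoff, gain=50.0):
    # ASSUMED form of Eq. (3): a logistic curve centred at `cutoff`,
    # with steepness `gain`, applied to intensities x in [0, 1].
    return 1.0 / (1.0 + math.exp(gain * (cutoff - x)))


def weighted_score(sens, spec, w_sens=3.0, w_spec=1.0):
    """Weighted average with sensitivity counted three times (weights from the text)."""
    return (w_sens * sens + w_spec * spec) / (w_sens + w_spec)


def step_search(score_fn, set_point, pace, lo, hi):
    """Hill-climb from set_point in steps of `pace` while the score improves."""
    best_x, best_s = set_point, score_fn(set_point)
    for direction in (1, -1):
        x = best_x
        while True:
            nxt = round(x + direction * pace, 10)  # rounding avoids float drift
            if not lo <= nxt <= hi:
                break
            s = score_fn(nxt)
            if s <= best_s:
                break
            best_x, best_s, x = nxt, s, nxt
    return best_x, best_s


def search_from_set_points(score_fn, set_points, pace, lo, hi):
    """Run step_search from every set point and keep the best (value, score)."""
    results = (step_search(score_fn, p, pace, lo, hi) for p in set_points)
    return max(results, key=lambda r: r[1])


# Toy score peaking at Cutoff = 0.4; in practice score_fn would compute
# weighted_score(sensitivity, specificity) on held-out sub-images.
best_cutoff, best_score = search_from_set_points(
    lambda c: -(c - 0.4) ** 2, (0.2, 0.7), pace=0.1, lo=0.0, hi=1.0)
print(best_cutoff)  # 0.4
```

The same routine could, under the same assumptions, be reused for the LoG sigma (set points 0.08 and 0.2, pace 0.02) or for the median window size (pace 10).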
In the first method, the first median filter was optimized first; the sigmoid function was the second parameter (sensitivity is more important); the last median filter was optimized without the HSV discriminator, on the neural network output frame (both sensitivity and specificity are important); and the HSV ranges were the last parameter (both sensitivity and specificity are important). Note also that the window size of the first median filter was selected by random sub-sampling cross validation between 10 and 50, although the sigmoid parameters were the determining factor for the results. The last median filter does not change the measures, but it affects edges and improves visualization by smoothing the sub-images; these effects are shown in the first row of Figure 6, seventh and eighth columns.
One question remains unanswered: why are the MM operations applied twice? Each time the MM operations are applied, the bubbled parts are magnified. The first pass emphasizes and magnifies the small bubbles, and the second pass emphasizes the round objects produced by the first pass. The second pass ensures that all bubbled parts are selected, because after the first pass some small bubbles are still not large enough for the subsequent detection steps.
Table 6. The tested values for selecting the best sigmoid function cutoff value and the corresponding results.
To compare our study with previous studies, which address frame reduction rather than the detection of bubbled regions within a frame, 100 frames are tested to examine the accuracy of the methods for frame reduction. A threshold is therefore set to classify the frames using the methods: if the bubbled regions in a frame cover more than 90% of the total pixels (about 235930 of the 512 × 512 = 262144 pixels), the frame is considered totally uninformative. The results are illustrated in Figure 13. For the frame-reduction data set, 50 frames are selected for each class (informative and uninformative frames).
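The frame-reduction rule reduces to a pixel count. The following is a minimal NumPy sketch, assuming that the bubbled (uninformative) pixels detected in each frame are available as a boolean mask; the function names are hypothetical.

```python
import numpy as np

UNINFORMATIVE_FRACTION = 0.9  # threshold quoted in the text


def is_uninformative_frame(mask, fraction=UNINFORMATIVE_FRACTION):
    """True when more than `fraction` of the frame's pixels are flagged uninformative."""
    mask = np.asarray(mask, dtype=bool)
    return bool(np.count_nonzero(mask) > fraction * mask.size)


def reduce_video(frames, masks, fraction=UNINFORMATIVE_FRACTION):
    """Keep only the frames that are not totally uninformative."""
    return [f for f, m in zip(frames, masks) if not is_uninformative_frame(m, fraction)]


# For a 512 x 512 frame the cut-off is 0.9 * 262144 = 235929.6 pixels,
# so 235930 or more flagged pixels mark the frame as uninformative.
full = np.ones((512, 512), dtype=bool)
empty = np.zeros((512, 512), dtype=bool)
print(reduce_video(["frame_a", "frame_b"], [full, empty]))  # ['frame_b']
```

Expressing the threshold as a fraction of `mask.size` keeps the rule valid if a different crop size is used.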
Fig. 13. The results of frame reduction. (a) shows the frame classes that the methods should obtain, and (b), (c), and (d) show the frames classified by the first, second, and third methods, respectively.
4. Conclusion
In this paper, three methods were presented for automatic bubble and juice detection. The proposed methods focus on detecting uninformative regions within a frame. In previous studies (Vilarino et al., 2006a, 2006b; Li and Meng, 2012; Haji Maghsoudi and Soltanian-Zadeh, 2013), methods were proposed to reduce the number of frames in a captured video. The obtained precision, sensitivity, accuracy, and specificity for segmenting the intestinal uninformative regions are 97.76%, 97.80%, 98.15%, and 98.40%, respectively, for the first method, 93.32%, 84.60%, 91.05%, and 95.67% for the second method, and 93.32%, 84.60%, 91.05%, and 95.67% for the third method. As Figure 14 shows, the second method has lower accuracy and precision, and the differences are more obvious for air bubble detection. This is because the LoG filter cannot distinguish between informative tissue and air-bubbled tissue. Although the second method has lower accuracy and precision, it is faster than the first. The third method gives lower results than the first two methods. It contains only three steps and is devised without a neural network or other classifier, which makes it faster than the others. As mentioned in the introduction, other works focused on time reduction, not on improving frames for subsequent processing. Moreover, these studies did not address air-bubbled parts, which are more similar to normal tissue in a frame.
The only exception was (Haji Maghsoudi and Soltanian-Zadeh, 2013), which proposed methods to distinguish between disease regions and extraneous matter; it reported relatively low accuracy and precision for detecting extraneous matter (between 60% and 80%). To compare our methods with those in the previous literature, we evaluate the methods using a threshold (90%) to classify frames as informative or uninformative, so that uninformative frames can be omitted after processing. The experimental results are illustrated in Figure 13. Given their accuracy in detecting uninformative and informative regions, the methods are able to omit frames from a video. As expected, the first method is the most reliable for frame reduction: it makes only one mistake, on the uninformative frames.
The first problem in our work was the varying ranges of bubbles and juice, which affect the morphological operations used to enhance bubble shapes in the first method and the LoG filter in the second method. The second issue was the number of features obtained from the Gabor wavelet filter bank and the GLCM features extracted in the first method, so the Fisher score test was used to reduce the number of features. An MLP was used as the classifier; a support vector machine (SVM) is another possible choice. In addition, results were calculated for different uninformative types, such as all uninformative regions and air-bubbled regions, as shown in Figure 14, which also illustrates the effect of the HSV discriminators in the first method. The major problem was determining the various parameters. An algorithm (illustrated in Figure 11) was used to optimize them and find the best values. Random sub-sampling cross validation helped to find the best value in some cases (such as the neural network hidden layers, sigmoid function, and HSV discriminator). Knowledge of each function's behavior was also used in other cases (such as the HSV discriminator, sigmoid function, and LoG). These algorithms can work on different digestive organs. Future research will investigate the automatic detection of diseases and tumor regions in the processed frames; based on these results, we expect to be able to detect diseases accurately.
Fig. 14. The blue (total uninformative parts) and green (only air bubbled and dark parts) bars show the results of the first method, while the red (total uninformative parts) and yellow (only air bubbled and dark parts) bars show the results of the second method.
References
Akakin, H. C., Sarma, S. E., 2013. A Generalized Laplacian of Gaussian Filter for Blob Detection and Its Applications, IEEE Transactions on Cybernetics, vol. 99, 1-15.
Altman, D. G., Bland, J. M., 1994. Diagnostic tests. 1: Sensitivity and specificity, BMJ, 308, 1552.
Bashar, M. K., Kitasaka, T., Suenaga, Y., Mekada, Y., Mori, K., 2012. Automatic detection of informative frames from wireless capsule endoscopy images, Elsevier Medical Image Analysis, 14, 449-470.
Bejakovic, S., Kumar, R., Dassopoulos, T., Mullin, G., Hager, G., 2009. Analysis of Crohn's Disease Lesions in Capsule Endoscopy Images, IEEE International Conference on Robotics and Automation, 2793-2798.
Bovik, A. C., Clark, M., Geisler, W. S., 1990. Multichannel Texture Analysis Using Localized Spatial Filters, IEEE Transactions on Pattern Analysis and Machine Intelligence, 12, 55-73.
Chan, T., Vese, L., 2001. Active contours without edges, IEEE Trans. Image Process., 10, 266-277.
Clausi, D. A., 2002. An analysis of co-occurrence texture statistics as a function of grey level quantization, Can. J. Remote Sensing, 28, 45-62.
Clausi, D. A., Jernigan, M. E., 2000. Designing Gabor filters for optimal texture separability, Pattern Recognition, 33, 1835-1849.
Coimbra, M., Silva Cunha, J. P., 2006. MPEG-7 visual descriptors - Contributions for automated feature extraction in capsule endoscopy, IEEE Trans. Circuits and Systems for Video Technology, 16, 628-637.
Cromartie, R., Pizer, S. M., 1991. Edge-Affected Context for Adaptive Contrast Enhancement, IPMI 1991, 374485.
December 2011. Given Imaging. Available: http://www.givenimageing.com.
Döring, C., Lesot, M. J., Kruse, R., 2006. Data analysis with fuzzy clustering methods, Computational Statistics and Data Analysis, 51, 192-214.
Fritscher-Ravensand, F., Swain, P., 2002. The wireless capsule: New light in the darkness, Dig. Dis., 20, 127-133.
Gu, Q., Li, Z., Han, J., 2012. Generalized Fisher Score for Feature Selection, in proceedings of CoRR.
Haji Maghsoudi, O., Soltanian-Zadeh, H., 2013. Detection of Abnormalities in Wireless Capsule Endoscopy Frames using Local Fuzzy Patterns, in Proceedings of the 20th International Conference on Biomedical Engineering (ICBME).
Haji-Maghsoudi, O., Talebpour, A., Soltanian-Zadeh, H., Haji-Maghsoodi, N., 2012. Automatic Organs Detection in WCE, 16th CSI International Symposium on Artificial Intelligence & Signal Processing (AISP), Shiraz, Iran, 116-121.
Haji-Maghsoudi, O., Talebpour, A., Soltanian-Zadeh, H., Haji-Maghsoodi, N., 2012. Segmentation of Crohn's, Lymphangiectasia, Xanthoma, Lymphoid hyperplasia and Stenosis diseases in WCE, in proceedings of the 24th European Medical Informatics Conference (MIE), Pisa, Italy, 180, 143-147.
Haralick, R. M., Shanmugam, K., Dinstein, I., 1973. Textural Features for Image Classification, IEEE Trans. on Systems, Man and Cybernetics, 3, 610-621.
Haralick, R. M., Sternberg, R. S., Zhuang, X., 1987. Image Analysis using Mathematical Morphology, IEEE Trans. on Pattern Analysis and Machine Intelligence, 9, 532-550.
Iddan, G., Meron, G., Glukhovsky, A., Swain, P., 2000. Wireless capsule endoscopy, Nature, 405, 417-418.
Jain, K., Ratha, N., Lakshmanan, S., 1997. Object detection using Gabor filters, Pattern Recognition, 30, 295-309.
Junzhou, C., Run, H., Li, Z., Qiang, P., Tao, G., 2011. Contourlet Based Feature Extraction and Classification for Wireless Capsule Endoscopic Images, 4th International Conference on Biomedical Engineering and Informatics (BMEI), 1, 219-223.
Karargyris, A., Bourbakis, N., 2009. Identification of ulcers in Wireless Capsule Endoscopy videos, IEEE International Symposium on Biomedical Imaging: From Nano to Macro, 554-557.
Karargyris, A., Bourbakis, N., 2011. Detection of Small Bowel Polyps and Ulcers in Wireless Capsule Endoscopy Videos, IEEE Transactions on Biomedical Engineering, 58, 2777-2786.
Kumar, R., Zhao, Q., Seshamani, S., Mullin, G., Hager, G., Dassopoulos, T., 2012. Assessment of Crohn's Disease Lesions in Wireless Capsule Endoscopy Images, IEEE Transactions on Biomedical Engineering, 59, 355-362.
Leondes, C. T., 1998. Algorithms and Architectures Neural Network Systems Techniques and applications.
Li, B., Meng, M. Q. H., 2009. Computer-Aided Detection of Bleeding Regions for Capsule Endoscopy Images, IEEE Transactions on Biomedical Engineering, 56, 1032-1039.
Li, B., Meng, M. Q. H., 2012. Tumor Recognition in Wireless Capsule Endoscopy Images using Textural Features and SVM-based Feature Selection, IEEE Transactions on Information Technology in Biomedicine, 16, 323-329.
Li, C., Huang, R., Ding, Z., Gatenby, J. C., Metaxas, D. N., Gore, J. C., 2011. A Level Set Method for Image Segmentation in the Presence of Intensity Inhomogeneities with Application to MRI, IEEE Trans. on Image Processing, 20, 2007-2016.
Li, C., Kao, C. Y., Gore, J. C., Ding, Z., 2007. Implicit Active Contours Driven by Local Binary Fitting Energy, Conf. Computer Vision and Pattern Recognition, IEEE, 12, 1-7.
Lim, J. S., 1990. Two-dimensional signal and image processing, Englewood Cliffs, NJ, Prentice Hall.
Mackiewicz, M., Berens, J., Fisher, M., 2008. Wireless Capsule Endoscopy Color Video Segmentation, IEEE Transactions on Medical Imaging, 27, 1769-1781.
March 2012. http://murphylab.web.cmu.edu/publications/boland/.
Marr, D., Hildreth, E., 1980. Theory of edge detection, Proc. R. Soc. Lond. B, 187-217.
Naik, S. K., Murthy, C. A., 2003. Hue-preserving color image enhancement without gamut problem, IEEE Trans. on Image Processing, 12, 1591-1598.
Oh, J. H., Hwang, S., Lee, J., Tavanapong, W., Wong, J., Goren, P. C., 2007. Informative frame classification for endoscopy video, Medical Image Analysis, 11, 110-127.
Olsen, A. R., Gecan, J. S., Ziobro, G. C., Bryce, J. R., 2001. Regulatory Action Criteria for Filth and Other Extraneous Materials V. Strategy for Evaluating Hazardous and Nonhazardous Filth, Regulatory Toxicology and Pharmacology, 33, 363-392.
Pan, G., Xu, F., Chen, J., 2011. A Novel Algorithm for Color Similarity Measurement and the Application for Bleeding Detection in WCE, Journal of Image Graphics and Signal Processing, 3, 1-7.
Pan, G., Yan, G., Qiu, X., Cui, J., 2010. Bleeding Detection in Wireless Capsule Endoscopy Based on Probabilistic Neural Network, Journal of Medical Systems, 35, 1477-1484.
Ramesh, J., Rangachar, K., Brian, G., 1995. Machine vision, McGraw Hill, New York.
Samson, C., Blanc-Feraud, L., Aubert, G., Zerubia, J., 2000. A variational model for image classification and restoration, IEEE Trans. Pattern Anal. Mach. Intell., 22, 460-472.
Soh, L., Tsatsoulis, C., 1999. Texture Analysis of SAR Sea Ice Imagery Using Gray Level Co-Occurrence Matrices, IEEE Transactions on Geosciences and Remote Sensing, 37, 780-795.
Szczypinski, P., Klepaczko, A., Pazurek, M., Daniel, P., 2012. Texture and color based image segmentation and pathology detection in capsule endoscopy videos, Computer Methods and Programs in Biomedicine, 113(1), 396-411.
Torre, V., Poggio, T. A., 1986. On edge detection, IEEE Trans. Pattern Anal. Mach. Intell., 8, 147-163.
Truc, P. T. H., Kim, T. S., Lee, Y. K., 2011. Homogeneity- and Density Distance-driven Active Contours for Medical Image Segmentation, Journal of Computers in Biology and Medicine, 41, 292-301.
Vilarino, F., Spyridonos, P., Pujol, O., Vitria, J., Radeva, P., 2006a. Automatic Detection of Intestinal Juices in Wireless Capsule Video Endoscopy, The 18th International Conference on Pattern Recognition (ICPR'06), 4, 719-722.
Vilarino, F., Kuncheva, L., 2006b. ROC curves and video analysis optimization in intestinal capsule endoscopy, Pattern Recognition Letters, 27, 875-881.
Zhang, K., Zhang, L., Song, H., Zhou, W., 2010. Active contours with selective local or global segmentation: A new formulation and level set method, Elsevier, Journal of Image and Vision Computing, 28, 668-676.
Zimmerman, J., Pizer, S., Staab, E., Perry, E., McCartney, W., Brenton, B., 1988. Evaluation of the effectiveness of adaptive histogram equalization for contrast enhancement, IEEE Transactions on Medical Imaging, 7, 304-312.