Primary versus secondary musical parameters and the classification

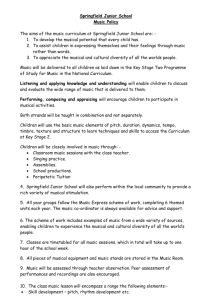
