Prof. Jian Zhang - Purdue University

advertisement

An Introduction to Statistical Learning Theory

Jian Zhang

Department of Statistics

Purdue University

April, 2010

1

Theory of Learning

Goal: To study theoretical properties of learning algorithm from a

statistical viewpoint.

The theory should be able model real/artificial data so that we can

better understand and predict them.

Statistical Learning Theory (SLT) is just one approach to understanding/studying learning systems.

SLT results are often given in the form the generalization error

bounds, although other types of results are possible.

2

Basic Concepts

Setup:

•

•

•

•

IID observations (x1, y1), . . . , (xn, yn) ∼ PX,Y

yi’s are binary: yi ∈ {0, 1} or {±1}

Dn = {(x1, y1), . . . , (xn, yn)} is also known as the training data

PX,Y is some unknown distribution

A learning algorithm A is a procedure which takes the training data

and produces a predictor ĥn = A(Dn) as the output. Typically the

learning algorithm will search over a space of functions H which is

known as the hypothesis space. So it can also be written as

ĥn = A(Dn , H).

3

Generalization of a Learner

For any predictor/classifier h : X #→ {0, 1}

•

Its performance can be measured by a loss function !(y, p)

•

Most popular loss for classification is the 0/1 loss:

!(y, p) = 1{y %= p}

•

Generalization error (aka. risk):

R(h) := EX,Y [!(Y, h(X))] = P (Y %= h(X)).

Generalization error measures how well the classifier can predict on

average for future observations. Note that it cannot be calculated

in practice as PX,Y is unknown.

4

Bayes Risk and Bayes Classifier

Bayes risk R∗ is defined as the minimum risk over all measurable

functions:

R∗ =

inf

R(h)

h measurable

and Bayes classifier h∗ is the one which attains the Bayes risk:

R∗ = R(h∗ ).

It is easy to show that h∗(x) takes the following form:

!

1, P (Y = 1|X = x) > 0.5

h∗(x) =

0, otherwise.

5

Risk Consistency

Given IID observations Dn = {(x1, y1), . . . , (xn, yn)}, the learning algorithm outputs a classifier ĥn = A(Dn ):

•

•

ĥn is a function of Dn;

R(ĥn) = EX,Y [1{Y %= ĥn(X)}] is also a random variable of Dn

A learning procedure is called risk consistent if

EDn [R(ĥn)] → R∗

as n → ∞. In other words, as more and more training data is provided, the risk of the output classifier will converge to the minimum

possible risk.

6

Empirical Risk Minimization

In general R(h) is not computable since PX,Y is unknown. In practice,

we use empirical risk minimization within a function class H:

n

1"

!(yi, h(xi))

ĥn = arg min R̂n(h) = arg min

h∈H

h∈H n

i=1

where

•

•

•

•

H is some specified function class;

R̂n(h) is the same as R(h) except we replace PX,Y by the empirical

distribution

By law of large numbers, R̂n (h) → R(h) for any h

Uniform convergence needs further condition

7

Overfitting

Overfitting refers to the situation where we have a small empirical

risk but still a relatively large true risk. It could happen especially

when

•

•

sample size n is small

hypothesis space H is large

Recall that empirical risk R̂n(h) is a random variable whose mean

is R(h). Pure empirical risk minimization may lead to the fitting of

noise eventually – overfitting.

8

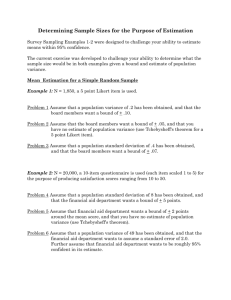

Overfitting: An Example

Consider the following example. Let !(y, p) = (y − p)2 and we obtain

the predictor ĥ by ERM:

n

1"

(yi − h(xi))2.

ĥ = arg min

h∈H n

i=1

Polynomial with degree1

Polynomial with degree2

1.5

1.5

1

1

0.5

0.5

0

0

−0.5

−0.5

−1

−1

−1.5

−1.5

0

2

4

6

0

Polynomial with degree3

1.5

1

1

0.5

0.5

0

0

−0.5

−0.5

−1

−1

−1.5

−1.5

2

4

4

6

Polynomial with degree5

1.5

0

2

6

9

0

2

4

6

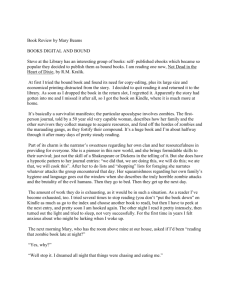

Overfitting vs. Model Complexity

The following figure shows the tradeoff between model complexity

and empirical risk:

True/Empirical Risk VS. Model Complexity

Risk

Empirical Risk

True Risk

Best Model in Theory

Model Complexity

10

Approximation Error vs. Estimation Error

Suppose that the learning algorithm chooses the predictor from the

hypothesis space H, and re-define

h∗ = arg inf R(h),

h∈H

i.e. h∗ is the best predictor among H. Then the excess risk of the

output ĥn of the learning algorithm can be decomposed as follows:

#

$ #

$

R(ĥn ) − R∗ = R(h∗ ) − R∗ + R(ĥn ) − R(h∗ )

%

&'

( %

&'

(

approximation error

11

estimation error

Approximation Error vs. Estimation Error

#

$ #

$

R(ĥn ) − R∗ = R(h∗ ) − R∗ + R(ĥn ) − R(h∗ )

%

&'

( %

&'

(

approximation error

estimation error

Such a decomposition reflects a trade-off similar to the bias-variance

tradeoff:

•

•

approximation error: deterministic and is caused by the restriction of using H;

estimation error: caused by the usage of a finite sample that

cannot completely represent the underlying distribution.

The approximation error behaves like a bias square term, and the

estimation error behaves like the variance. Basically if H is large

then we have a small approximation error but a relatively large

estimation error and vice versa.

12

Generalization Error Bound

Consistency only states that as n → ∞, EDn [R̂n(ĥn)] converges to the

minimum risk. However, sometimes only knowing this is not enough.

We are also interested in the following:

•

•

•

How fast is the convergence?

R̂n(ĥn) is a function of Dn, and thus a random variable. What is

the distribution of this random variable?

In particular, what is the behavior of the tail of the distribution?

A tail bound on the risk can often give us more information including

consistency and rate of convergence.

13

Generalization Error Bound

Recall that the excess risk can be decomposed into approximation

error and estimation error, i.e.

#

$ #

$

∗

∗

R(ĥn ) − R = inf R(h) − R + R(ĥn ) − inf R(h) .

% h∈H &'

( %

&'h∈H

(

approximation error

estimation error

We would like to bound the estimation error. Specifically, if we use

ERM to obtain our predictor ĥn = arg minh∈H R̂n(h), and assume that

inf h∈H R(h) = R(h∗ ) for some h∗ ∈ H, then we have

R(ĥn ) − inf R(h) = R(ĥn ) − R(h∗ )

h∈H

≤ R(ĥn ) − R(h∗ ) + R̂n(h∗) − R̂n (ĥn)

= (R(ĥn)) − R̂n (ĥn)) −)(R(h∗ ) − R̂n (h∗))

)

)

≤ 2 sup )R(h) − R̂n (h)) .

h∈H

14

PAC Learning

Thus if we can obtain uniform bound of suph∈H |R(h) − R̂n(h)| then

the approximation error can be bounded. Thus again justifies the

usage of the ERM method.

The probably approximately correct (PAC) learning model typically

states as follows: we say that ĥn is "-accurate with probability 1 − δ,

if

*

+

P R(ĥn ) − inf R(h∗ ) > " < δ.

h∈H

In other words, we have R(ĥn ) − inf h∈H R(h) ≤ " with probability at

least (1 − δ).

15

PAC Learning: Example I

Consider the special case H = {h}, i.e. we only have a single function. Furthermore, we assume that it can achieve 0 trainining error

over Dn, i.e. R̂n(h) = 0. Then what is the probability that its generalization error R(h) ≥ "? We have

#

$

P R̂n(h) = 0, R(h) ≥ " = (1 − R(h))n

≤ (1 − ")n

≤ exp(−n").

Setting the RHS to δ and solve for " we have " =

probability (1 − δ),

*

+

1

1

P R̂n(h) = 0, R(h) < log

.

n

δ

16

1

n

log 1δ . Thus with

PAC Learning: Example I

Note that we can also utilzie the Hoeffding’s inequality to obtain

P (|R̂n(h) − R(h)| ≥ ") ≤ 2 exp(−2n"2), which leads to

.

,

1

2

log

≤ δ.

P |R̂n(h) − R(h)| ≥

2n

δ

This is more general but not as tight as the previous one since it

does not utilize the fact R̂n(h) = 0.

Example 1 essentially says that for each fixed function h, there is a

set S of samples (whose measure P (S) ≥ 1−δ) for which |R̂n(h)−R(h)|

is bounded. However, such S sets could be different for different

functions. To handle this issue we need to obtain the uniform deviations since:

R̂n (ĥn) − Rn (ĥn) ≤ sup(R̂n (h) − R(h)).

h∈H

17

PAC Learning: Example II

Consider the case H = {h1, . . . , hm}, a finite class of functions. Let

/

0

Bk := (x1, y1) . . . , (xn, yn) : R(hk ) − R̂n(hk ) ≥ " , k = 1, . . . , m.

Each Bk is the set of all bad samples for hk , i.e. the samples for which

the bound fails for hk . In other words, it contains all misleading

samples.

If we want to measure the proability of the samples which are bad

for any hk (k = 1, . . . , m), we could apply the Bonferroni inequality

to simply obtain:

m

"

P (B1 ∪ . . . ∪ Bm) ≤

P (Bk ).

k=1

18

PAC Learning: Example II

Thus we have

#

$

P ∃h ∈ H : R(h) − R̂n(h) ≥ "

=

P

,

m /

0

1

R(hk ) − R̂n(hk ) ≥ "

k=1

union bound

≤

≤

m

"

k=1

#

$

P R(hk ) − R̂n(hk ) ≥ "

m exp(−2n"2).

Hence for H = {h1, . . . , hm}, with probability at least (1 − δ),

2

log m + log 1δ

.

∀h ∈ H, R(h) − R̂n(h) ≤

2n

Since this is a uniform upper bound, it can be applied to ĥn ∈ H.

19

.

PAC Learning: Example II

From the above PAC learning examples we can see that

•

It requires assumptions on data generation, i.e. samples are iid.

The error bounds are valid wrt repeated samples of training data.

√

• For a fixed function we roughly have R(h) − R̂n (h) ≈ 1/

n.

3

• If |H| = m then suph∈H (R(h) − R̂n (h)) ≈

log m/n. The term log m

can be thought as the complexity of the hypothesis space H.

•

There are several things which can be improved:

•

•

•

Hoeffding’s inequality does not utlize the variance information.

The union bound could be as bad as if all the functions in H were

independent.

The supremum over H might be too conservative.

20

From Finite to (Uncountably) Infinite: Basic Idea

If H has infinite number of functions, we want to group functions

into clusters in such a way that functions in each cluster behave

similarly.

•

•

•

Since we aim to bound the risk, the grouping criteria should be

related to function’s output.

Need to related function’s output on a finite sample to its output

on the whole population.

If the number of clusters is finite, we can then apply the union

bound to obtain the generalization error bound.

21

Growth Function

Given a sample Dn = {(x1, y1), . . . , (xn, yn )}, and define S = {x1, . . . , xn}.

Consider the set

HS = Hx1,...,xn = {(h(x1), . . . , h(xn) : h ∈ H} .

The size of this set is the total number of possible ways that S =

{x1, . . . , xn} can be classified. For binary classification the cardinality

of this set is always finite, no matter how large H is.

Definition (Growth Function). The growth function is the maximum

number of ways into which n points can be classified by the function

class:

GH(n) = sup |HS | .

x1 ,...,xn

22

Growth Function

Growth function can be thought as a measure of the “size” for the

class of functions H.

Several facts about the growth function:

•

•

When H is finite, we always have GH(n) ≤ |H| = m.

Since h(x) ∈ {0, 1}, we have GH(n) ≤ 2n. If GH (n) = 2n, then there

is a set of n points such that the class of functions H can generate

any possible classification result on these points.

23

Symmetrization

We define Zi = (Xi, Yi). For notational simplicty, we will use

n

1"

Pf = E[f (X, Y )], Pn f =

f (xi, yi).

n i=1

Here f (X, Y ) can be thought as !(Y, h(X)). The key idea is to upper

bound the true risk by an estimate from an independent sample,

which is often known as the “ghost” sample. We use Z10 , . . . , Zn0 to

denote the ghost sample and

n

1"

0

f (x0i, yi0 ).

Pn f =

n i=1

Then we could project the functions in H onto this double sample

and apply the union bound with the help of the growth function

GH (.) of H.

24

Symmetrization

3

Lemma (Symmetrization). For any t > 0 such that t ≥ 2/n, we

have

,

.

+

*

)

) 0

)

)

P sup |Pf − Pn f | ≥ t ≤ 2P sup )Pnf − Pn f ) ≥ t/2 .

f ∈F

h∈H

25

Generalization Bound for Infinite Function Class

Theorem (Vapnik-Chervonenkis). For any δ > 0, with probability at

least 1 − δ,

2

2 log GH(2n) + 2 log 2δ

∀h ∈ H, R(h) ≤ R̂n(h) + 2

.

n

Proof: Let F = {f : f (x, y) = !(y, h(x)), h ∈ H}.

First note that GH(n) = GF (n).

26

Generalization Bound for Infinite Function Class

P

*

,

.

+

sup R(h) − R̂n(h)) ≥ " = P sup(Pf − Pnf ) ≥ "

h∈H

f ∈F

,

sup(P0nf − Pnf ) ≥ "/2

≤ 2P

= 2P

.

,

f ∈F

.

sup (P0nf − Pnf ) ≥ "/2

f ∈FD ,D0

n n

≤ 2GF (2n)P ((P0nf − Pnf ) ≥ "/2)

≤ 2GF (2n) exp(−n"2/8).

The last inequality is by the Hoeffding’s inequality since P (Pn0 f −

Pnf ≥ t) ≤ exp(−nt2/2). Setting δ = 2GF (2n) exp(−n"2/8) we have the

claimed result.

27

Consistency and Rate of Convergence

It is often easy to obtain consistency and rate of convergence result

from the generalization error bound.

4´

3

√

2

• Consistency: use the fact E[Z] ≤

E[Z ] =

P (Z > t)dt and

plug in the bound.

•

Rate of convergence: often can be read off the error bound.

28

Data Dependant Error Bound

Definition. Let µ be a proability measure on X and assume that

X1, . . . , Xn are independent random variables according to µ. Let F

be a class of functions mapping from X to R. Define the random

variable

5

6

n

)

"

1

)

R̂n(F) := E sup

σif (Xi) )X1, . . . , Xn ,

f ∈F n i=1

where σ1, . . . , σn are independent uniform {±1}-valued random variables. R̂n(F) is called the empirical Rademacher averages of F. Note

that it depends on the sample and can be actually computed. Essentially it measures the correlation between a random noise (labeling)

and functions in the function class F, in the supremum sense. The

Rademacher averages of F is

Rn(F) = E[R̂n(F)].

29

Data-Dependent Error Bound

Theorem. Let F be a set of binary-valued {0, 1} functions. For all

δ > 0, with proability at least 1 − δ,

log(1/δ)

∀f ∈ F, Pf ≤ Pnf + 2Rn(F) +

,

2n

and also with probability at least 1 − δ,

log(2/δ)

,

∀f ∈ F, Pf ≤ Pn f + 2R̂n(F) + C

n

√

√

where C = 2 + 1/ 2.

30