
Towards Autonomic Grid Data Management with Virtualized Distributed File Systems

Ming Zhao, Jing Xu, Renato Figueiredo

Advanced Computing and Information Systems

Electrical and Computer Engineering

University of Florida

{ming, jxu, renato}@acis.ufl.edu

Advanced Computing and Information Systems laboratory

Motivation

• Grid data provisioning
    • Wide-area latency/bandwidth, dynamism of resources …
    • How to provide data with application-tailored optimizations?

[Figure: Grid jobs on compute servers access remote data servers with different needs: highly reliable access to simulation data, aggressive prefetching of genome sequence data, and sparse file access to virtual machine data]

Motivation

• Grid data management
    • Dynamic data access, resource sharing, changing resource availability …
    • How to manage data provisioning in dynamically changing environments?

[Figure: same diagram as on the previous slide]

Overview

• Goal:
    • Efficient data management in heterogeneous, dynamic and large-scale Grid environments
• Challenges:
    • Application-tailored data provisioning
    • Data management in dynamically changing environment
• Contribution: Autonomic Grid data management
    • Virtualized Grid-wide file systems for user-transparent Grid data access with application-tailored enhancements
    • Autonomic data management services for self-managing, goal-driven control over Grid file system sessions

Outline

• Background
    • Grid virtual file system and data management
• Architecture
    • Autonomic data management services
• Evaluation
    • Experiments and analysis
• Summary
    • Related work, conclusion and future work

Grid Virtual File Systems

• Grid Virtual File System (GVFS)
    • Distributed file system virtualization through user-level NFS proxies
    • Enhancements for Grid environment (disk caching, secure tunneling)
    • Dynamic, independent, application-tailored GVFS sessions

[Figure: GVFS Session I and GVFS Session II across the Grid; jobs on compute servers C1 and C2 reach data on servers S1 and S2 through chains of proxies with disk caches ($) and secure tunneling]

[Cluster Computing, Vol 9/1, 2006]

Data Management Services

WSRF-based management services for GVFS sessions

• Data Scheduler Service (DSS)
    • Scheduling and customization of GVFS sessions
• Data Replication Service (DRS)
    • Management of application data sets and their replicas
• Server-side File System Service (FSS)
    • Management of server-side GVFS proxies
• Client-side File System Service (FSS)
    • Management of client-side mount points, GVFS proxies and disk caches

[Figure: DSS and DRS coordinate the FSS instances on servers S1, S2 and clients C1, C2; each FSS manages the local proxies of GVFS Session I and GVFS Session II]

[HPDC’2005]

Autonomic Services

Each service runs its own Monitor, Analyze, Plan, Execute loop:

• Autonomic Data Scheduler Service (DSS)
    • Monitor: Storage
    • Analyze: Performance
    • Plan: Configuration
    • Execute: Reconfigure
• Autonomic Data Replication Service (DRS)
    • Monitor: Storage
    • Analyze: Reliability
    • Plan: Replication
    • Execute: Replicate
• Autonomic Server-side File System Service
    • Monitor: Response
    • Analyze: Performance
    • Plan: Load balance
    • Execute: Reassign
• Autonomic Client-side File System Service
    • Monitor: Response
    • Analyze: Performance
    • Plan: Prim-server
    • Execute: Redirect

[Figure: the four autonomic services attached to the DSS, DRS and FSS instances that manage the proxies of GVFS Session I and GVFS Session II between servers S1, S2 and clients C1, C2]

[Russell, IBM Systems Journal, 2003]
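The Monitor, Analyze, Plan, Execute cycle named on this slide can be sketched generically as below. This is only an illustration: the `AutonomicLoop` class, its callables, and the sample response times are hypothetical and are not taken from the GVFS services. The example wires the loop the way the client-side FSS is described (monitor response, plan a primary server, execute a redirect).

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class AutonomicLoop:
    """One control loop: monitor -> analyze -> plan -> execute (hypothetical sketch)."""
    monitor: Callable[[], Any]
    analyze: Callable[[Any], Any]
    plan: Callable[[Any], Optional[Any]]
    execute: Callable[[Any], None]

    def step(self) -> Optional[Any]:
        observation = self.monitor()            # collect sensor data
        assessment = self.analyze(observation)  # interpret it
        action = self.plan(assessment)          # decide on a change, or None
        if action is not None:
            self.execute(action)                # apply the change
        return action


# Assumed example: pick the server with the lowest observed response time.
state = {"primary": "S1"}
response_times = {"S1": 0.08, "S2": 0.04}  # made-up values, in seconds

loop = AutonomicLoop(
    monitor=lambda: dict(response_times),
    analyze=lambda obs: min(obs, key=obs.get),
    plan=lambda best: best if best != state["primary"] else None,
    execute=lambda target: state.update(primary=target),
)

first = loop.step()   # plans and executes a redirect
second = loop.step()  # nothing left to change
```

Keeping the four stages as separate callables mirrors the slide's separation of concerns: each service can swap in its own sensor and effector without touching the loop itself.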

Autonomic Data Scheduler Service

• Manages concurrent GVFS sessions on shared resources
    • Considers both application performance and resource utilization policy
    • Session i’s utility: $U_i = \mathrm{Performance}_i \times \mathrm{Priority}_i$
    • Configures sessions to maximize $\sum_i U_i$
• E.g. Disk cache management
    • Hit rate is important in wide-area environment
    • Allocates client-side storage among sessions
        • Monitors storage usage via client-side FSS
        • Configures the sessions’ caches to maximize global utility
        • Applies configurations via client-side FSS

[Figure: DSS control loop (Monitor: Storage, Analyze: Performance, Plan: Configuration, Execute: Reconfigure) acting through the FSS on client C1, whose jobs use proxies with disk caches ($) to reach servers S1 and S2]
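A minimal sketch of the disk cache example, under stated assumptions: the slide gives the utility as performance times priority but does not specify how performance is measured or how the maximum is found. Here performance is taken to be the cache hit rate, the hit-rate curve is a hypothetical concave function of cache size, and the allocation is a simple greedy search. All names and numbers are illustrative.

```python
import math


def hit_rate(cache_gb, working_set_gb):
    # Hypothetical concave hit-rate curve; a real system would measure this.
    return 1.0 - math.exp(-cache_gb / working_set_gb)


def allocate_cache(sessions, total_gb, step_gb=1.0):
    """Greedily hand out cache in fixed steps to the session whose
    utility (priority * hit rate) gains the most from the next step.

    sessions maps a session name to (priority, working_set_gb).
    """
    alloc = {name: 0.0 for name in sessions}
    remaining = total_gb

    def gain(name):
        priority, ws = sessions[name]
        current = hit_rate(alloc[name], ws)
        return priority * (hit_rate(alloc[name] + step_gb, ws) - current)

    while remaining >= step_gb:
        best = max(sessions, key=gain)
        alloc[best] += step_gb
        remaining -= step_gb
    return alloc


def global_utility(sessions, alloc):
    # Sum over sessions of U_i = Performance_i * Priority_i
    return sum(p * hit_rate(alloc[n], ws) for n, (p, ws) in sessions.items())


# Two sessions with the same working set but different priorities (made up).
sessions = {"sim": (2.0, 4.0), "genome": (1.0, 4.0)}
alloc = allocate_cache(sessions, total_gb=8.0)
even_split = {"sim": 4.0, "genome": 4.0}
```

With a concave hit-rate curve, the greedy step-by-step allocation is at least as good as any other allocation in whole steps, which is why the higher-priority session ends up with the larger cache here.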

Autonomic Data Replication Service

• Replication degree and placement
    • Increases reliability against server-side failures (crash, network partitioning)
        • The reliability of a session’s data set d: $\mathrm{Reliability}_d > R_{\min}$
    • Reduces replication overhead
        • The cost of creating replicas for data set d: $\mathrm{Cost}_d < C_{\max}$
    • Replaces replicas based on utility
        • $U_d = \mathrm{Value}_d \times \mathrm{Reliability}_d$, maximizes $\sum_d U_d$
• Replica regeneration
    • Monitors server status via server-side FSS
    • Reconfigures sessions’ replicas after a failure
    • Generates replicas via server-side FSSs

[Figure: DRS control loop (Monitor: Storage, Analyze: Reliability, Plan: Replication, Execute: Replicate) creating a replica between the FSS-managed proxies on servers S1 and S2 for client C1]
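The two constraints on this slide can be illustrated with a small sketch. The slide does not define how reliability is computed; the code assumes servers fail independently, so a data set is lost only if every replica's server is down. The server names, availabilities, costs and the greedy selection order are all hypothetical.

```python
def reliability(availabilities):
    """Probability that at least one replica is reachable,
    assuming independent server failures (an assumption of this sketch)."""
    p_all_fail = 1.0
    for a in availabilities:
        p_all_fail *= (1.0 - a)
    return 1.0 - p_all_fail


def choose_replicas(servers, r_min, c_max):
    """Add replicas on the most available servers until Reliability_d > R_min,
    while keeping Cost_d < C_max. Returns (names, reliability, cost),
    or None if the constraints cannot both be met.

    servers is a list of (name, availability, cost) tuples.
    """
    chosen, avail, cost = [], [], 0.0
    for name, a, c in sorted(servers, key=lambda s: s[1], reverse=True):
        if avail and reliability(avail) > r_min:
            break  # reliability target already met
        if cost + c < c_max:
            chosen.append(name)
            avail.append(a)
            cost += c
    if not avail or reliability(avail) <= r_min:
        return None
    return chosen, reliability(avail), cost


# Made-up servers: (name, availability, replica creation cost)
servers = [("S1", 0.9, 1.0), ("S2", 0.8, 1.0), ("S3", 0.7, 1.0)]
result = choose_replicas(servers, r_min=0.97, c_max=3.0)
impossible = choose_replicas(servers, r_min=0.999, c_max=3.0)
```

In this example one replica on S1 gives 0.9, which misses the target, while S1 plus S2 gives about 0.98, so the sketch stops at a replication degree of two.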

Autonomic File System Service

• Fail-over against server failures
    • Minimizes impact on the application
    • Non-interrupt session redirection
        • Monitors server response time
        • Detects failure when request times out
        • Redirects session to backup server
        • Regenerates replicas via DSS/DRS
• Primary server selection
    • Maximizes performance against dynamic environment
        • Monitors connections with effective, low-overhead mechanism: small random writes to a hidden file on the mounted partition
        • Predicts performance with simple effective forecasting algorithm
        • Chooses the best connection and switches transparently

[Figure: client-side FSS control loop (Monitor: Response, Analyze: Performance, Plan: Configuration, Execute: Redirect) switching the job on client C1 between the proxies of servers S1 and S2]
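The fail-over and primary server selection steps above can be sketched as follows. The slide does not name the forecasting algorithm, so an exponentially weighted moving average is used here purely as a stand-in; the `ConnectionMonitor` class, the timeout value and the sample response times are hypothetical. In a real deployment the samples would come from timing the small probe writes described on the slide.

```python
class ConnectionMonitor:
    """Tracks per-server response times and picks a primary server.

    Forecasting uses an exponentially weighted moving average, which is an
    assumption of this sketch, not the algorithm from the talk.
    """

    def __init__(self, servers, alpha=0.5, timeout=1.0):
        self.alpha = alpha
        self.timeout = timeout
        self.forecast = {s: None for s in servers}
        self.failed = set()

    def record(self, server, response_time):
        # A missing or late response is treated as a server failure.
        if response_time is None or response_time > self.timeout:
            self.failed.add(server)
            return
        self.failed.discard(server)
        prev = self.forecast[server]
        if prev is None:
            self.forecast[server] = response_time
        else:
            self.forecast[server] = (
                self.alpha * response_time + (1 - self.alpha) * prev
            )

    def best_server(self):
        # Choose the live server with the lowest predicted response time.
        live = {
            s: f for s, f in self.forecast.items()
            if s not in self.failed and f is not None
        }
        return min(live, key=live.get) if live else None


mon = ConnectionMonitor(["S1", "S2"])
mon.record("S1", 0.04)
mon.record("S2", 0.06)
choice_initial = mon.best_server()

mon.record("S1", 0.09)            # S1 slows down; forecast rises to 0.065
choice_after_slowdown = mon.best_server()

mon.record("S2", None)            # S2 probe times out
choice_after_failure = mon.best_server()
```

Smoothing the samples keeps a single slow probe from triggering a switch, while a timeout removes the server from consideration immediately, matching the two behaviors listed on the slide.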

Experimental Setup

• File system clients and servers
    • VMware-based virtual machines
• Wide-area networks
    • NIST Net emulated links
• I/O part of Grid applications
    • IOzone file system benchmark
• Grid data management
    • User-level NFS v3 based GVFS proxies
    • WSRF-Lite based management services

Autonomic Session Redirection

• Scenario (I)
    • Data transfer under network fluctuation
    • E.g. caused by parallel TCP transfers
• Setup
    • Client connected to two servers with independently emulated WAN links
    • Randomly applied latencies with values from a real wide-area measurement

[Figure: network RTT (ms) versus number of parallel TCP connections]
[Figure: response time (sec) versus time (second) for Server 1, Server 2, and autonomic session redirection]

• Autonomic session redirection achieves the best performance by adapting to the changing network condition

Autonomic Session Redirection

• Scenario (II)
    • Data transfer under server load variation
• Setup
    • Randomly generated background load applied on the data servers

[Figure: response time (sec) versus time (second) for Server 1, Server 2, and autonomic session redirection]

• Autonomic session redirection achieves the best performance by adapting to the changing server load

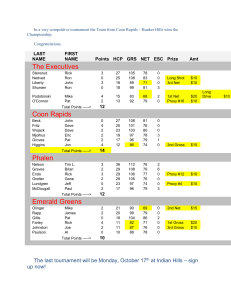

Autonomic Data Replication

• Scenario
    • Data transfer and replication under dynamic server failures
• Setup
    • Replica degree: 2 (1 primary + 1 backup)
    • Failures randomly injected on the servers
    • Consecutive executions of the benchmark
• Case I: Independent sessions (different inputs)

[Figure: timeline (time in s, from 0 to 2642) of four consecutive benchmark runs, marking the events "backup server failed", "primary server failed", "redirected to backup", "new replica created", and the completion of the 1st through 4th runs]

• Autonomic data replication provides transparent error detection/recovery

Autonomic Data Replication

• Scenario
    • Data transfer and replication under dynamic server failures
• Setup
    • Replica degree: 2 (1 primary + 1 backup)
    • Failures randomly injected on the servers
    • Consecutive executions of the benchmark
• Case II: Dependent sessions: same input, cache reuse

[Figure: timeline (time in s) of ten consecutive benchmark runs, marking the events "backup server failed", "primary server failed", "redirected to backup", "new replica created", and the completion of the 1st through 10th runs]

• Client-side disk cache further helps to minimize the impact of failures

Related Work

• Data management in Grid environment
    • GridFTP, GASS, Condor, Legion
• Middleware control over Grid data transfers
    • Batch-Aware Distributed File System [NSDI’04]
    • Self-optimizing, fault-tolerant bulk data transfer framework based on Condor and Stork [ISPDC’03]
• Replication and storage management
    • Wide-area data replication based on Globus RFT
    • IBM autonomic storage manager

Summary

• Problem:
    • Efficient data management in heterogeneous, dynamic and large-scale Grid environments
• Solution:
    • Autonomic data management system based on GVFS and self-managing, goal-driven services
• Future work:
    • Autonomic session checkpointing and migration
    • Decentralized coordinating per-domain DSS/DRS

References

[1] M. Zhao, J. Zhang and R. Figueiredo, “Distributed File System Virtualization Techniques Supporting On-Demand Virtual Machine Environments for Grid Computing”, Cluster Computing, 2006.
[2] M. Zhao, V. Chadha and R. Figueiredo, “Supporting Application-Tailored Grid File System Sessions with WSRF-Based Services”, HPDC-2005.
[3] J. Xu, S. Adabala and J. A.B. Fortes, “Towards Autonomic Virtual Applications in the In-VIGO System”, ICAC-2005.
[4] L. W. Russell et al., “Clockwork: A New Movement in Autonomic Systems”, IBM Systems Journal, 42(1), 2003.
[5] J. Bent et al., “Explicit Control in a Batch-Aware Distributed File System”, NSDI-2004.
[6] T. Kosar et al., “A Framework for Self-optimizing, Fault-tolerant, High Performance Bulk Data Transfers in a Heterogeneous Grid Environment”, ISPDC-2003.
[7] A. Chervenak et al., “Wide Area Data Replication for Scientific Collaborations”, Grid-2005.
[8] Y. Zhong, S. G. Dropsho, C. Ding, “Miss Rate Prediction across All Program Inputs”, PACT-2003.

WSRF::Lite: An Implementation of Web Services Resource Framework
http://www.sve.man.ac.uk/Research/AtoZ/ILCT

GVFS: Virtualized distributed file system for Grid environment
http://www.acis.ufl.edu/~ming/gvfs

In-VIGO: Virtualization middleware for computational Grids
http://www.acis.ufl.edu/invigo

Acknowledgments

• In-VIGO team
    • http://invigo.acis.ufl.edu
• NSF Middleware Initiative
• NSF Research Resources
• IBM Shared University Research
• Intel ISTG R&D Council

Questions?