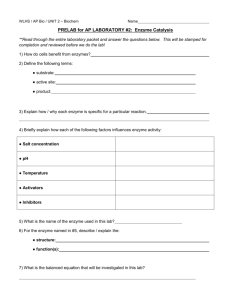

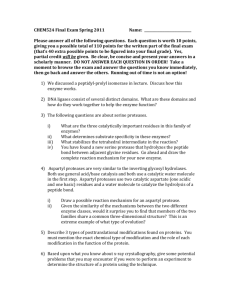

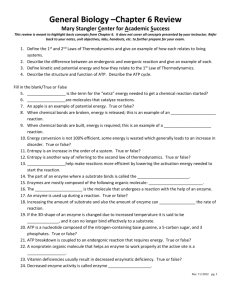

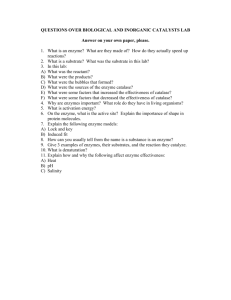

Chapter 1: Biochemical Reactions
