Common Writing Assignment

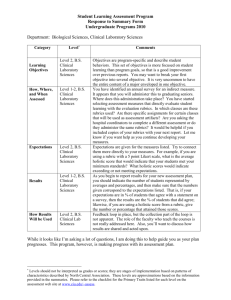

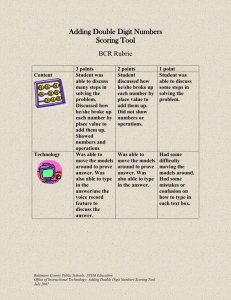

http://www.ccc.commnet.edu/slat/cwa_final.htm

REPORT ON ASSESSMENT OF WRITING
through a Common Writing Assignment
2001–2002

INTRODUCTION

In Capital Community College's design for Student Learning Assessment, individual programs are responsible for refining and assessing their goals according to cycles that fit with program review, certification, or internal examination schedules. At the same time, the broader curricula of General Education and Developmental Education require ongoing assessment, and the plan is to implement one large cross-curricular assessment each year for these areas. Since both General Education and Developmental Education share the goal of effective writing, the first year of implementation was devoted to sampling student essays to determine how well our students are meeting that goal.

METHOD

Instrument: course-embedded essay

Twelve teachers in diverse fields ranging from literature to marketing to math gave a common writing assignment to their students in fifteen different classes. Students in all classes received the same assignment sheet (see Assignment), which asked them to read an article selected by their teacher and compose a response to it. They were asked to devote their first paragraph to introducing and summarizing the information in the article and then to spend the rest of the paper presenting their thoughts about the topic. They were urged to support their ideas with references to the article, to other sources of information, or to their own experiences. Regardless of the range of topics and varying difficulty in the readings, the common conceptual task was to find a balance between objective and subjective discourse, a balance which had been identified as troublesome for students in a preliminary writing scan.

Students were given a week and a half to prepare the paper, and no formal revision guidance was offered. Students prepared one copy of the paper to be read by their own teachers as part of required class work.
They made a second, anonymous copy (temporarily identified by Banner student ID number) to submit to the pool from which samples would be randomly selected for the assessment scoring.

Scoring: holistic and analytic

One hundred samples representing all fifteen classes were scored on a scale of 1-4 by members of the Assessment Implementation Team in two scoring sessions, one in November and one in May. Readers read each paper twice: once for holistic scores and then again for analytic scores according to a rubric (see Rubric for detail). Each scoring session began with a little over an hour of norming. For the May scoring session, norming samples included two anchor papers from the November scoring. After norming, each paper was read by two readers. If any paper received holistic scores differing by more than one point on the four-point scale, it was read by a third reader. No reader was able to see the previous readers' scores.

Data management

For each sample, readers' scores were averaged in each category and entered on a spreadsheet showing students' academic histories for analysis. By this point, student Banner ID numbers had been replaced by assessment key code numbers in an irreversible translation, assuring full anonymity for participants. Further information on data management is available on the Reporting Design page.

Validity and reliability

As a writing task that was part of the work of ongoing courses, the essay had high content validity. As performance indicators of effective writing, the sampled essays were authentic texts produced by students rather than responses to abstract measures intended to predict performance. The scoring scale and analytic rubric were based on previous readings of student papers that had led to consensus among Capital Community College staff about the key elements of writing.
Further, the assignment had high consequential validity in its stimulation of writing and discussion of standards across the curriculum.

In holistic scoring, inter-reader reliability (agreement of two readers within one point on a four-point scale) was 84%. Sixteen papers required a third reader. Analytic scoring on the rubric's four criteria showed greater variation on some criteria, less on others. An additional possible indication of reliability was offered by the observation that of the six students who wrote essays in two different classes, four received identical scores in all categories, and one received scores differing by only half a point.

RESULTS

Process lessons

As a pilot project in the assessment of General and Developmental Education goals, the Common Writing Assignment presented many opportunities for learning about:

- logistics of course-embedded measures
- collegial establishment of standards and criteria
- scoring challenges, reliability and validity
- data collection and management
- reporting, interpretation, and application of results

Participating students were asked how they felt about the assignment, and participating teachers were given an evaluation questionnaire about the project. Half of the students reported positive responses to the assignment (either reading, writing, or both). Twenty-nine made no comment or neutral comments, and twenty-one reported negative responses. Seven of the twelve teachers who embedded the assignment in their courses responded to an evaluation of the project. Teachers' greatest agreement was that reading and grading the essays was worth their time and that courses in their fields should incorporate more writing. Their reports of students' mixed levels of enthusiasm for the project roughly match the students' self-reports.

Many of this year's lessons can be applied to the next cross-curricular assessment of goals. Further details of the process are collected on the Common Writing Assignment Process page.
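The two-reader scoring rule and the agreement figure reported under Validity and reliability can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function names (`needs_third_reader`, `averaged_score`, `agreement_rate`) and the sample scores are hypothetical and are not taken from the project's data or tools. It also assumes that when a third reader scored a paper, all three scores were simply averaged, which the report does not spell out.

```python
from statistics import mean


def needs_third_reader(score_a, score_b, tolerance=1):
    """True when two holistic scores differ by more than `tolerance` points."""
    return abs(score_a - score_b) > tolerance


def averaged_score(scores):
    """Average every reader's score for one paper.

    Assumption: a third reader's score, if present, is averaged in
    with the first two.
    """
    return mean(scores)


def agreement_rate(score_pairs, tolerance=1):
    """Fraction of papers whose first two readers agreed within `tolerance`."""
    agreed = sum(
        1 for a, b in score_pairs if not needs_third_reader(a, b, tolerance)
    )
    return agreed / len(score_pairs)


# Hypothetical holistic scores from two readers on the 1-4 scale (not project data).
pairs = [(3, 3), (2, 3), (1, 3), (4, 3), (2, 4)]

# Three of these five pairs fall within one point of each other.
print(agreement_rate(pairs))

# A paper sent to a third reader, with all three scores averaged.
print(averaged_score([1, 3, 2]))
```

Applied to the figures in this report, 100 sampled papers with 16 sent to a third reader leave 84 pairs in agreement, which is consistent with the reported 84%.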
Findings

Writing problems clustered around use of standard written English and inadequate development of points. Strengths were evident in the areas of purpose and structure: students found appropriate avenues into the task and were usually able to frame their compositions, using introductions and conclusions with some confidence.

Data supported the following conclusions:

- A trend line showing the relationship between holistic CWA scores and Grade Point Averages indicates that students who write effectively (according to our readers) are doing better in school than students who struggle with writing.
- CWA scores show a rough bell-shaped distribution with the median peak at 2.5 on the 4-point scale.
- Analytic scores based on the rubric range from highest to lowest as follows: purpose & audience, organization, development, language.
- In holistic scoring, students who enter at the developmental level (based on writing Accuplacer scores) are less likely than other students to reach the level of proficiency (3 or above).
- In the analytic categories, students who enter at the developmental level score consistently lower in every category, with the greatest divergence showing in the category of language.
- Students who receive a B- or better in either the upper-level developmental writing course or English composition are likely to score close to or at the level of holistic proficiency on the CWA.
- Self-reports from students about the amount of writing they'd done in other courses (besides writing classes) indicate that those who had written essays in other classes across the curriculum score higher on the CWA and on GPA than those who had not practiced writing in other classes. However, because this data is based on self-reporting, it may be unreliable and opens an area for further study.
- Students who reported clear feelings about participating in the CWA, either positive or negative, scored better than those who were neutral or silent.

Further details of findings are presented on the Graphs page (password protected).

CONCLUSIONS AND RECOMMENDATIONS

Overview and Suggested Goals

The most global findings for 2001–2002 indicate that proficient writing is related to success in school and that 30% of our students are writing at the level of proficiency (holistic score 3.0 or above). The mean holistic score for the entire cohort is 2.3. The Student Learning Assessment Team suggests that units throughout the college use the findings to design interventions leading toward achievement of the following goals by the fall of 2005:

- To raise the holistic mean by 0.5, from 2.3 to 2.8
- To increase the percentage of students writing proficiently by one third, from 30% to 40%

The Team further suggests that the Common Writing Assignment be repeated in 2005–2006 to assess progress toward these goals.

Recommendations

A. That the Humanities Department's Committee on Writing Standards (COWS) initiate and coordinate dialogue and professional development activities for the purpose of improving student writing across the college. Further, that COWS annually assess student writing in developmental courses, College Success, English Composition, and courses across the curriculum, reporting findings to the Student Learning Assessment Team, the Academic Dean, and faculty and staff across the college (see Specific Findings & Interventions #1, 2, & 5 below).

B. That departments, curriculum planners, academic advisors, counselors, and enrollment services staff collaborate in designing and enforcing policies that support early and continuous student practice in writing (see Specific Findings and Interventions #3, 4, & 5 below).

Specific Findings Suggesting Interventions in Curriculum, Pedagogy, and Professional Development

1. The main obstacles to proficiency lie in the areas of development (support of ideas with evidence, examples, elaboration of topics, etc.) and language (effective use of sentence structures, word choices, and mechanics of standard written English). Therefore:
   a. In order to reach the suggested goals, curricular planning and professional development activities should explore methods of increasing students' skills in the categories of development and language, as defined in the scoring rubric.
   b. The rubric explaining the four analytic categories of writing proficiency should be distributed to students and staff throughout the college in order to open discussion of Capital Community College writing standards.

2. Students who enter the college at the developmental level show a pattern of writing scores parallel to but lower than those of non-developmental students. Since developmental students comprise at least one third of the total cohort, the college cannot reach the goals listed above without raising the scores of the developmental group, a group for which the skills in the category of language are a particular challenge. Therefore:
   Interventions designed for developmental students should explore methods of building students' skills in all categories, with particular attention to methods of increasing mastery of standard written English.

3. Grading in required CCC writing classes roughly matches the broader pattern of CWA scores, providing assurance that various college approaches to writing pedagogy are sharing roughly similar goals and assessment criteria. In addition, students who successfully complete English 101 are likely to be writing at least at the essential level on the CWA. Higher GPAs are associated with CWA scores at and above this level. Therefore:
   The college has a policy that students should complete English 101 within their first 15 credits. Academic advisors, counselors, and support staff should advise students of the relationship of this policy to their overall success in school, and the policy should be strictly followed in the registration process.

4. Students who report having written essays in classes other than English 006 and 101 demonstrate greater levels of writing skill and are more likely to have reached the proficient level. Therefore:
   a. The college should continue to develop learning communities that pair writing courses (e.g., English 006, 107, and 101) with introductory courses in the disciplines. The purpose is to support writing across the disciplines and engage more faculty in assigning, assessing, and improving student writing.
   b. The college should pilot W-designated writing-intensive classes in business, science, social science, and other fields. It should support these pilot courses with professional development activities, limited enrollments, and assigned tutors to provide supplemental instruction in writing.

5. Showing growth over time, scores indicate steady increases in students writing at the proficient level in three sequential categories of writing-intensive classes: English 006, English 101, and writing beyond English 101. However, across these three stages, a significant number of sampled students continued to write below the essential level. Therefore:
   a. The college should develop goals and practices for increasing the percentage of students writing at or above the essential level upon successful completion of English 101.
   b. The college should build on this year's baselines showing increases in percentages of students writing at or above the proficient level, setting benchmarks at each stage for additional growth by 2005.

IMPLICATIONS FOR FUTURE ASSESSMENTS

The design and implementation of the Common Writing Assignment were guided by the following values:

a. Distillation of criteria to reveal the big, pervasive questions
   The goal to be assessed was one that mattered to many educators and students and one that subsumed a variety of more specific objectives. The criteria for the scoring rubric were arrived at collegially, addressing the large goal and, in the process, clarifying it.

b. Content or face validity
   The measurement tool directly measured performance on an authentic writing task.

c. Balance of local thinking and information from national models
   The Student Learning Assessment Team adapted national models to local needs and took guidance from reports of writing assessment experiences elsewhere, particularly concerning the challenges of sampling and scoring authentic texts.

d. Open-ended content and structure
   The assignment gave teachers and students room for choices. The uniformity of the writing task provided comparability but also encouraged diversity of student approaches and strategies. Wide participation from staff was invited for the embedding in courses and the scoring of samples.

e. Consequential validity
   The consequences of the implementation were supportive of learning. For participating students and teachers, the project encouraged writing across the curriculum. For the assessment practitioners at the College, the implementation provoked discovery and further inquiry about the purposes and mechanisms of assessment. For other staff, it elicited constructive discussion of writing and standards.

f. Genuinely useful results
   The data collection and management design carefully avoided the accumulation of data that was not directly useful to the improvement of student learning. It limited its focus to patterns of writing in anonymous aggregates of students, focusing on fields that had potential to guide interventions for program improvement.

These values, along with the choices that they prompted, provide groundwork for the design of future assessments.
They are already guiding the preparation of the next General and Developmental Education assessment, which will focus on quantitative reasoning. The data management design, which extracts information on academic history and then assures student anonymity in reporting, is replicable and adaptable to many other types of student learning assessment. In addition to providing useful findings about student learning, the Common Writing Assignment has yielded confidence in the assessment process and momentum towards the development of an ongoing culture of inquiry at Capital Community College.

SUMMARY

For a three-page condensation of key points about the CWA, see the Summary Report.