Molecular Communication in a Liquid: Bounds on the Capacity of

advertisement

Molecular Communication in a Liquid:

Bounds on the Capacity of the Additive

Inverse Gaussian Channel with Average and

Peak Delay Constraints

流動介質中的粒༬通訊:在傳輸端有平均及最大延遲的限制下

相加性反高斯雜訊通道容量界線

Molecular Communication in a Liquid: Bounds on the Capacity of

the Additive Inverse Gaussian Noise Channel

with Average and Peak Delay Constraints

研 究 生:庭萱

指導教授:Ǒ詩台方 教授

Student : Ting-Hsuan Lee

Advisor : Prof. Stefan M. Moser

ῗ 立 交 通 大 學

電 信 工 程 學 系 碩 士 ჟ

碩 士 ࣧ ᅎ

⑸

ḋ

Submitted to Institution of Communication Engineering

College of Electrical Engineering and Computer Science

National Chiao Tung University

In partial Fulfillment of the Requirements

for the Degree of

Mater of Science

in

Communication Engineering

June 2013

Hsinchu, Taiwan, Republic of China

中ဝ

ῗ一࿇二年六月

Information Theory

Laboratory

Dept. of Electrical and Computer Engineering

National Chiao Tung University

Master Thesis

Molecular Communication in a

Liquid: Bounds on the Capacity

of the Additive Inverse Gaussian

Noise Channel with Average

and Peak Delay Constraints

Lee Ting-Hsuan

Advisor:

Prof. Dr. Stefan M. Moser

National Chiao Tung University, Taiwan

Graduation

Committee:

Prof. Dr. Chia Chi Huang

Yuan Ze University, Taiwan

Prof. Dr. Hsie Chia Chang

National Chiao Tung University, Taiwan

流動介質中的粒༬通訊:在傳輸端有平均及最大延遲的

限制下 相加性反高斯雜訊通道容量界線

研究生:庭萱

! !

指導教授:Ǒ詩台方 教授

ῗ立交通大學電信工程研究所碩士ჟ

中ᅎ摘要

在本篇ࣧᅎ中,ሹ們探討一個相當新的通道模ሗ,此通道是藉ҹ

原༬在液體中的交換來傳輸信號。而ሹ們假設原༬在傳輸過程中,是

在一維的空間做移動。像ሹ們將奈米級的儀ዸ放入᰼管中,而此儀ዸ

在人體內和Ꭾ他儀ዸ交換訊息༫是一個很典ሗ的通訊應用。在此應用

中,ሹ們不再使用電磁波來傳輸ሹ們的信號,相反地,ሹ們將訊息放

在原༬從傳輸端釋放的時間點上。一旦原༬被釋放在液體中,將અ在

液體中進ᥳ布朗運動,進而造成ሹ們無法預估原༬到達傳輸端的時間,

換句話說,布朗運動造成接收時間的不確定性,而此不確定性༫是ሹ

們的雜訊,而ሹ們用反高斯(Inverse Gaussian)分布來描述此雜訊。此

篇的研究重點是相加性雜訊通道,在有平均以及最大延遲的限制下,

基本的通道容量趨勢。

ሹ們深入研究此模ሗ,並且分析ʃ新的通道容量的上界以及下限,

而這些界線是逐漸靠近的,也༫是說,如果ሹ們允許平均以及最大延

遲放寬到無限大,亦或是介質的流體ቴ度趨近無限大,ሹ們可以得到

K確的通道容量。

Molecular Communication in a Liquid:

Bounds on the Capacity of the Additive

Inverse Gaussian Noise Channel with

Average and Peak Delay Constraints

Student: Lee Ting-Hsuan

Advisor: Prof. Stefan M. Moser

Institute of Communication Engineering

National Chiao Tung University

Abstract

In this thesis a very recent and new channel model is investigated that describes

communication based on the exchange of chemical molecules in a liquid medium

with constant drift. The molecules travel from the transmitter to the receiver at

two ends of a one-dimensional axis. A typical application of such communication

are nano-devices inside a blood vessel communicating with each other. In this case,

we no longer transmit our signal via electromagnetic waves, but we encode our

information into the emission time of the molecules. Once a molecule is emitted in

the fluid medium, it will be affected by Brownian motion, which causes uncertainty

of the molecule’s arrival time at the receiver. We characterize this noise with an

inverse Gaussian distribution. Here we focus solely on an additive noise channel to

describe the fundamental channel capacity behavior with average and peak delay

constraints.

This new model is investigated and new analytical upper and lower bounds

on the capacity are presented. The bounds are asymptotically tight, i.e., if the

average-delay and peak-delay constraints are loosened to infinity, the corresponding

asymptotic capacities are derived precisely.

Acknowledgments

First, I want to thank to my advisor, Prof. Stefan M. Moser. Thanks to him,

I am able to have such interesting topic as my master thesis. In addition, I have

learnt a lot of things from him such as how to analysis problems, some methematical

techniques, how to do a good presentation and how to write a good paper and slide.

All of these are reallly important to me.

I am really lucky to get in the IT-lab, this lab is like second home to me. In the

lab, I can do what I want to do and all members give me full support. Thanks to

the IT-lab members, without their help, it is impossible that I can finish everything

in time.

Finally, I want to thanks to my family. When I was upset about the thesis or had

bad mood, they encouraged me which is the reason that I am able to perservered

with thesis.

Lee Ting-Hsuan

III

Contents

1 Introduction

1.1 General Molecular Communication Channel Model . . . . . . . . . .

1.2 Mathematical Model . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.3 Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1

1

2

4

2 Mathematical Preliminaries

2.1 Properties of the Inverse Gaussian Distribution . . . . . . . . . . . .

2.2 Related Lemmas and Propositions . . . . . . . . . . . . . . . . . . .

7

7

10

3 Known Bounds to the Capacity of the AIGN Channel with Only

an Average Delay Constraint

13

4 Main Result

17

5 Derivations

5.1 Proof of Upper-Bound of Capacity . . .

5.1.1 For 0 < α < 0.5 . . . . . . . . . .

5.1.2 For 0.5 ≤ α ≤ 1 . . . . . . . . . .

5.2 Proof of Lower-Bound of Capacity . . .

5.2.1 For 0 < α < 0.5 . . . . . . . . . .

5.2.2 For 0.5 ≤ α ≤ 1 . . . . . . . . . .

5.3 Asymptotic Capacity of AIGN Channel

5.3.1 When T Large . . . . . . . . . . .

5.3.2 v Large . . . . . . . . . . . . . .

6 Discussion and Conclusion

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

25

25

25

29

30

30

34

35

36

37

41

List of Figures

43

Bibliography

45

IV

Chapter 1

Introduction

1.1

General Molecular Communication Channel Model

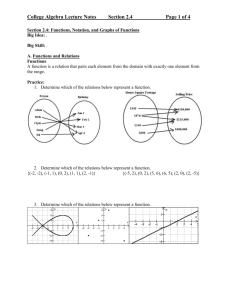

Usually, information carrying signals are transmitted as electromagnetic waves in

the air or in wires. Recently, people are more and more interested in communication

within nanoscale networks. But when we want to transmit our signal via these tiny

devices, we face new problems, for example, the antenna size are restricted or the

energy that could be stored is very little. We solve these problems by providing

a different type of communication instead. This thesis focuses on a channel which

operates in a fluid medium with a constant drift velocity. One application example

is blood vessel, which has a blood drift. The nanoscale device could be any medical

inspection device that is inserted in our body. The transmitter is a point source

with many molecules to be emitted. The receiver waits on the other side for the

molecules’ arrival. The information is encoded in the emission time of the molecules,

X, which takes value in a finite set.

Wiener Process

v

Transmitter

Receiver

w

w=0

w=d

Figure 1.1: Wiener process of molecular communication channel.

1

Chapter 1

Introduction

Once the nanoscale molecules are emitted into the fluid medium, beside the

constant drift, they are affected by Brownian motion, which causes uncertainty of

the arrival time at the receiver. We describe this type of channel noise with an

inverse Gaussian distribution. The typical situation is shown in Figure 1.1, where w

is the position parameter, d is the receiver’s position on w axis, and v > 0 is the drift

velocity. The transmitter is placed at the origin of the w axis. It emits a molecule

into a fluid with positive drift velocity v. The information is put on the releasing

time. In order to know this information, the receiver ideally subtracts the average

traveling time, vd , from the arrival time. Note that once a molecule arrives at the

receiver, it is absorbed and never returns to the fluid. Moreover, every molecule is

independent of each other.

This molecular communication channel model was proposed by Srinivas, Adve

and Eckford [1].

1.2

Mathematical Model

Let W (x) be the position of a molecule at time x that travels via a Brownian

motion medium. Let 0 ≤ x1 < x2 < · · · < xk be a sequence of time indices ordered

from small to large. Then, W (x) is a Wiener process if the position increment

Ri = W (xi−1 ) − W (xi ) are independent random variables with

!

"

Ri ∼ N v(xi − xi−1 ), σ 2 (xi − xi−1 )

(1.1)

where σ 2 = D

2 with D being the diffusion coefficient, which depends on the temperature and the stickiness of the fluid and the size of the particles. Assuming that the

molecule is released at time x = 0 at position W (0) = 0, the position at time x̃ is

!

"

W (x̃) ∼ N v x̃, σ 2 x̃ . The probability density function (PDF) of W is given by:

#

(w − v x̃)2

exp −

fW (w; x̃) = √

2σ 2 x̃

2πσ 2 x̃

1

$

.

(1.2)

In our communication system, instead of looking at the position of the molecule

at a certain time, we turn our focus on its arriving time at the receiver of a fixed

distance d.

We release the molecule at time x from the origin, W (x) = 0 and x ≥ 0. After

traveling for a random time N , the molecule then arrives at the receiver for the first

time at time Y ,

Y = x + N.

(1.3)

Hence, our channel model is characterized by an additive noise in the form of the

random propagation time N . This is the only uncertainty we have in the system.

When we assume a positive drift velocity v > 0, the distribution of the traveling

time N is well known to be an inverse Gaussian (IG) distribution. As a result, we

2

1.2 Mathematical Model

Chapter 1

released

arrived

0

d

position

x

Y

time

N

Figure 1.2: The relation between the molecule’s time and position.

call this channel the additive inverse Gaussian noise (AIGN) channel. Since the

PDF of N is

(

)

*

λ(n−µ)2

λ

exp

−

n > 0,

3

2

2πn

2µ n

(1.4)

fN (n) =

0

n ≤ 0,

we get the conditional probability density of output Y given the channel input X = x

as

(

)

*

λ(y−x−µ)2

λ

exp

−

y > x,

3

2

2π(y−x)

2µ (y−x)

fY |X (y|x) =

(1.5)

0

y ≤ x.

There are two important parameters for the inverse Gaussian distribution: the average traveling time

µ=

d

distance between transmitter and receiver

=

,

v

drift velocity

(1.6)

and a parameter

d2

(1.7)

σ2

that describes the impact of the noise. Usually we write N ∼ IG(µ, λ). By calculation, we get

λ=

d

,

v

µ3

dσ 2

Var(N ) =

= 3 .

λ

v

E[N ] = µ =

(1.8)

(1.9)

If the drift velocity v increases, the variance decreases, in other words, the distribution is more centered. If the drift velocity is slowed down, we will have a more

spread-out noise distribution. Without loss of generality, we normalize the propagation distance to d = 1.

For practical reasons, we constrain the transmitter to have a peak delay constraint T and a average delay constraint m at the transmitter, i.e., the input X are

3

Chapter 1

Introduction

subject to the two constraints:

Pr[X > T] = 0,

(1.10)

E[X] ≤ m.

(1.11)

We denote the ratio between the allowed average delay and the allowed peak

delay by α

m

(1.12)

α!

T

where 0 < α ≤ 1. Note that for α = 1 the average-delay constraint is inactive in the

sense that it has no influence on the capacity and is automatically satisfied whenever

the peak delay constraint is satisfied. Thus, α = 1 corresponds to the case with only

a peak-delay constraint. Similarly, α & 1 corresponds to a dominant average-delay

constraint and only a very weak peak-delay constraint.

1.3

Capacity

Since we introduced a new type of channel, the AIGN channel, we are interested

in how much information it can carry. In [2], Shannon showed that for memoryless

channels with continuous input and output alphabets and an corresponding conditional PDF describing the channel, and under input constraints Pr[X > T] = 0 and

E[X] ≤ αT, the channel capacity is given by

C(T, αT) !

sup

I(X; Y )

(1.13)

fX (x) : Pr[X>T]=0, E[X]≤αT

where the supremum is taken over all input probability distributions f (·) on X that

satisfy (1.10) and (1.11). By I(X; Y ) we denote the mutual information between X

and Y . For the AIGN channel, we have

I(X; Y )

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

=

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

=

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

=

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

=

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

=

sup

fX (x) : Pr[X>T]=0, E[X]≤αT

4

+

+

+

h(Y ) − h(Y |X)

,

h(Y ) − h(X + N |X)

h(Y ) − h(N |X)

h(Y ) − h(N )

h(Y ) − hIG(µ,λ) ,

,

(1.14)

,

(1.15)

(1.16)

(1.17)

(1.18)

1.3 Capacity

Chapter 1

where (1.17) holds because N and X are independent. The mean constraint (1.11)

of the input signal translates to an average constraint for Y :

E[Y ] = E[X + N ]

(1.19)

= E[X] + E[N ]

(1.20)

= E[X] + µ

(1.21)

≤ αT + µ.

(1.22)

5

Chapter 1

Introduction

6

Chapter 2

Mathematical Preliminaries

In this chapter, we will introduce some mathematical properties of the inverse Gaussian random variable and other useful lemmas for future use in this thesis.

2.1

Properties of the Inverse Gaussian Distribution

In [1], the differential entropy of an inverse Gaussian random variable was given in

a complicated form that is unyielding for analytical analysis. So we try to modify

the original expression and derive a cleaner form for mathematical derivation.

Proposition 2.1 (Differential Entropy of the Inverse Gaussian Distribution).

# $ #

$

1

2πµ3 3

2λ

2λ

1

hIG(µ,λ) = log

+ exp

Ei −

+

(2.1)

2

λ

2

µ

µ

2

where Ei(·) is the exponential integral function defined as

- ∞ −t

- −x t

e

e

Ei(−x) ! −

dt =

dt, x > 0.

t

x

−∞ t

(2.2)

In MATLAB, the exponential integral function is implement as expint(x)= − Ei(−x).

Proof: see [3].

Next, when we want to make an IG random variable add with another IG random

variable and end up also in IG distributed, there is a specific way to reach it. Only

certain type of IGs will add up to be IG distributed.

Proposition 2.2 (Additivity of the IG distribution). Let M be a linear combination

of random variables Mi :

l

.

M=

ci Mi , ci > 0,

(2.3)

i=0

where

Mi ∼ IG(µi , λi ),

7

i = 1, . . . , l.

(2.4)

Chapter 2

Mathematical Preliminaries

Here we assume that Mi are not necessarily independent, but summed up under the

constraint that

λi

= κ, for all i.

(2.5)

ci µ2i

Then

22

1

.

.

M ∼ IG

c i µi , κ

c i µi

i

(2.6)

i

Proof: The proof can be found in [4, Sec. 2.4, p. 13].

Remark 2.3. If we simply add two inverse Gaussian random variables, as long as

they are in the same fluid, which means they have the same v and σ 2 , the result is

still inverse Gaussian.

Consider a Wiener process X(t) beginning with X(0) = x0 with positive drift v

and variance σ 2 . Choose a and b so that x0 < a < b and consider the first passage

time T1 from x0 to a and T2 from a to b. Then T1 and T2 are independent inverse

Gaussian variables with parameters

µ1 =

a − x0

,

v

λ1 =

(a − x0 )2

σ2

(2.7)

b−a

,

v

λ2 =

(b − a)2

.

σ2

(2.8)

and

µ2 =

Now consider T3 = T1 + T2 , therefore, c1 = c2 = 1 and

v2

λi

= 2 = constant,

µi

σ

(2.9)

T3 is also an inverse Gaussian variable. That is

#

$

v 2 (µ1 + µ2 )2

T3 ∼ IG µ1 + µ2 ,

.

σ2

Since µ1 + µ2 =

b−x0

v ,

T3 ∼ IG

#

b − x0 (b − x0 )2

,

v

σ2

$

.

(2.10)

(2.11)

The last observation also follows directly from the realization that T3 is the first

passage time from x0 to b [4].

Proposition 2.4 (Scaling). If N ∼ IG(µ, λ), then for any k > 0

kN ∼ IG(kµ, kλ).

Proof: The proof can be found in [4, Sec. 2.4, p. 13].

8

(2.12)

2.1 Properties of the Inverse Gaussian Distribution

Chapter 2

Proposition 2.5. If N is a random variable distributed as IG(µ, λ). Then

E[N ] = µ;

5 6

1

1

1

E

= + ;

N

µ λ

7 8

µ3

E N 2 = µ2 + ;

λ

6

5

3

3

1

1

= 2+ 2+

;

E

N2

µ

λ

µλ

µ3

Var (N ) =

;

λ

# $

2

1

1

+ ;

=

Var

N

µλ λ2

9

# $

λ

2λ µλ ν− 1

ν

E[N ] =

e µ 2 Kν− 1

,

2

π

µ

(2.13)

(2.14)

(2.15)

(2.16)

(2.17)

(2.18)

ν ∈ R.

(2.19)

where Kγ (·) is the order-γ modified Bessel function of the second kind.

Remark 2.6. From formula [5, (8.486.16)], we get

K−ν (z) = Kν (z)

(2.20)

we can also write (2.19) as

7

E N

−ν

8

=

9

2λ µλ −ν− 1

2K

e µ

ν+ 21

π

# $

λ

.

µ

(2.21)

Proof: The proofs are based on [4, (2.6)], [6, Proposition 2.15], [4, (8.36)] and

[5, 3.471 9.].

Proposition 2.7. If N ∼ IG(µ, λ), then

#

2λ

E[log N ] = e Ei −

µ

6

5

µ

N

µ

+

=2+ .

E

µ

N

λ

2λ

µ

$

+ log µ;

(2.22)

(2.23)

Proof: A proof is shown in [7].

Proposition 2.8. If Ni are IID ∼ IG(µ, λ), then the sample mean from that distribution will be

n

1.

Ni = N̄ ∼ IG(µ, nλ),

n

for i = 1, . . . , n.

i=1

Proof: A proof can be found in [4, Sec. 5.1, p. 56].

9

(2.24)

Chapter 2

Mathematical Preliminaries

Lemma 2.9. Under the three constraints

E[log X] = α1 ,

(2.25)

E[X] = α2 ,

8

E X −1 = α3 ,

(2.26)

7

(2.27)

where α1 , α2 and α3 are some fixed values, the maximum entropy distribution is the

inverse Gaussian distribution.

Proof: From [8, Chap. 12] we know that if we have the three constraints

above, the optimal distribution to maximize the entropy will have the form

f (x) = eλ0 +λ1 log x+λ2 x+

= xλ 1 e

λ

λ0 +λ2 x+ x3

λ3

x

,

(2.28)

(2.29)

which is exactly the form of the inverse Gaussian.

2.2

Related Lemmas and Propositions

In this section, we will show some lemmas and properties that will be used in our

proof of bounds.

+

Proposition 2.10. Consider a memoryless channel with input alphabet X = R0

and output alphabet Y = R, where, conditional on the input x ∈ X , the distribution

on the output Y is denoted by the probability measure fY |X (·|x). Then, for any

distribution fY (·) on Y, the channal capacity under a peak-delay constraint T and

an average-delay constraint αT is upper bound by

7 !

"8

C(T, αT) ≤ EQ∗ D fY |X (·|X)||fY (·) ,

(2.30)

where Q∗ is the capacity-achieving distribution satisfying Q∗ (X > T) = 0 and

EQ∗ [X] ≤ αT. Here, D(·||·) denotes relative entropy [8, ch. 2].

Proof: For more details see [9]

There are two challenges in using (2.30). The first is in finding a clever choice of

the law R that will lead to a good upper bound. The second is in upper-bounding

the supremum on the right-hand side of (2.30). To handle this challenge we shall

resort to some further bounding.

Next, we will list some propositions related to the Q-function.

Definition 2.11. The Q-function is defined by

- ∞

1

2

Q (x) ! √

et /2 dt.

2π x

10

(2.31)

2.2 Related Lemmas and Propositions

Chapter 2

Note that Q (x) is the probability that a standard Gaussian random variable

will exceed the value x and is therefore monotonically decreasing with an increasing

argument.

Proposition 2.12 (Properties for the Q-function). Bounds for Q-function

x2

1

√

e− 2

2πx

#

x

1 + x2

$

< Q (x) < √

1 x2

Q (x) ≤ e− 2 ,

2

x2

1

e− 2 ,

2πx

x > 0;

(2.32)

x ≥ 0.

(2.33)

and

Q (x) + Q (−x) = 1.

(2.34)

Proof: see [10]

Remark 2.13. Let Φ(·) denote the cumulative distribution function (CDF) of the

standard normal distribution:

- x

1

2

Φ(x) = √

e−t /2 dt.

(2.35)

2π −∞

Then

Q (x) = Φ(−x),

(2.36)

5

Q (x)

Lower bound of (2.32)

Upper bound of (2.32)

Upper bound of (2.33)

4.5

4

3.5

3

2.5

2

1.5

1

0.5

0

0

0.5

1

1.5

x

11

2

2.5

3

Chapter 2

Mathematical Preliminaries

Proposition 2.14 (Upper and Lower Bound for Exponential Integral Function).

We have

#

#

$

$

1 −x

2

1

−x

e ln 1 +

< − Ei(−x) < e ln 1 +

, x > 0,

(2.37)

2

x

x

or

−e

−x

#

1

ln 1 +

x

$

#

$

2

1 −x

< Ei(−x) < − e ln 1 +

,

2

x

12

x > 0.

(2.38)

Chapter 3

Known Bounds to the Capacity

of the AIGN Channel with Only

an Average Delay Constraint

We can always bound the capacity with peak and delay constraints by the capacity

with only an average delay constraint since adding more constraints will make the

capacity smaller. For the case with only an average delay constraint, an upper bound

of capacity has been derived in [1]. The entropy maximizing distribution f ∗ (y) with

1

a mean constraint E[Y ] ≤ m + µ is the exponential distribution with parameter m+µ

[8, (12.21)]:

f ∗ (y) =

1

− y

e m+µ ,

m+µ

y ≥ 0.

(3.1)

The entropy of such a distribution is

h∗ (Y ) = 1 + ln(m + µ).

(3.2)

This can be used to derive a upper bound on the capacity of the AIGN channel:

C(m) !

I(X; Y )

sup

(3.3)

fX (x) : E[X]≤m

=

sup

fX (x) : E[X]≤m

=

sup

fX (x) : E[X]≤m

+

h(Y ) − hIG(µ,λ)

h(Y ) − hIG(µ,λ)

,

(3.4)

(3.5)

= 1 + ln(m + µ) − hIG(µ,λ) .

(3.6)

Hence,

1

λ(m + µ)2 3

− exp

C(m) ≤ log

2

2πµ3

2

13

#

2λ

µ

$

#

2λ

Ei −

µ

$

+

1

2

(3.7)

Known Bounds to the Capacity of the AIGN Channel with Only an

Chapter 3

Average Delay Constraint

In [3], the choice of the input X ∼ Exp

bound:

!1"

m

get the asympototically tight lower

#

$

m

µ

λ

3

λ 1

e

3 2λ

2λ

µ

C(m) ≥ log

+

− + kλ + log + log

− e Ei −

λ

m µ

2

µ 2

2π 2

µ

1

2

1

9

9

1 λ

λm

2λ

K1

+ k 2 λ2

− log 1 + e µ

2

m

2 + k λm

m

1 9

22

9

1 µλ +kλ

λm

λ

e

K1 2

+ k 2 λ2

.

(3.8)

+

2m

1 + k 2 λm

m

where K1 is type one bessel function.

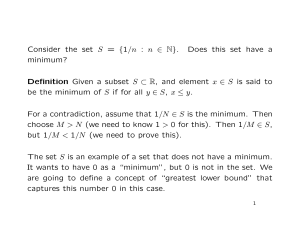

We can see in Fig. 3.3 that for the capacity with only a average delay constraint

is aympototically tight in both m and v.

3.5

3

I(X; Y ) (nats)

2.5

2

1.5

1

Upper bound (3.7)

0.5

Lower bound (3.8)

0

0

1

2

3

4

5

6

7

8

9

10

m

Figure 3.3: Known bounds for the choice: µ = 0.5, λ = 1 respect to m.

14

Chapter 3

3.5

Upper bound (3.7)

Lower bound (3.8)

3

I(X; Y ) (nats)

2.5

2

1.5

1

0.5

0

0

1

2

3

4

5

6

7

8

9

10

v

Figure 3.4: Known bounds for the choice: m = 1, λ = 1 respect to v.

15

Known Bounds to the Capacity of the AIGN Channel with Only an

Chapter 3

Average Delay Constraint

16

Chapter 4

Main Result

To state our results we distinguish between two cases, 0 < α < 0.5 and 0.5 ≤ α < 1.

For the first case, the difference between upper-bound and lower-bound tends to zero

as the allowed peak delay tends to infinity, thus, revealing the asymptotic capacity

at high power.

Theorem 4.1 (Bounds for 0 < α < 0.5). For 0 < α < 0.5, C(T, αT) is lower-bounded

)

∗

−β ∗

C(T, αT) ≥ log T − log β + log 1 − e

*

#

2λ

1

2πeµ3 3 2λ

µ

− log

− e Ei −

2

λ

2

µ

λ β∗

− +

(αT + µ) +

µ

T

$

:

2λ

#

λ

β∗

−

2µ2

T

$

(4.1)

for

T≥

2β ∗ µ2

.

λ

(4.2)

And upper-bounded by

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− 2(1 − Q− + Q+ ) log(1 − Q− + Q+ )

+

!

",

+ αβ ∗

+ max 0, (Q− − Q+ ) log µ(Q− + Q+ ) + αT(Q− − Q+ )

#

$

αβ ∗ (T +δ)

−

∗

−

+

−

+

− log αβ + log(αT + µ) + log 1 − e αT+µ−αT(Q −Q )−µ(Q +Q )

$

#

2πeµ3 3 2λ

2λ

1

µ

− e Ei −

(4.3)

− log

2

λ

2

µ

for

1

T>

α

1

αβ ∗ e−αβ

1−e

"

!

δ

−β ∗ min 1, α

µ 0

17

2

∗

−µ

(4.4)

Chapter 4

Main Result

where δ0 is the minimum value of δ, and

1: 1:

9 22

λ

δ

µ

Q− ! Q

−

µ

µ

δ

1: 1:

9 22

λ

λ

δ

µ

Q+ ! e2 µ Q

+

µ

µ

δ

(4.5)

(4.6)

Here, δ > µ is free parameter, and β ∗ is the unique solution to

1

e−β

−

β ∗ 1 − e−β ∗

∗

α=

(4.7)

A suboptimal but useful choice of the free parameter in (4.3) is

δ = log(T + 1) + 10µ.

(4.8)

Fig. 4.5 and Fig. 4.6 show the bounds of Theorem 4.1 for α = 0.3 as a function

of T and v, respectly.

Corollary 4.2 (Asymptotics for the case of 0 < α < 0.5). If 0 < α < 0.5, then

+

,

*

)

∗

lim C(T, αT) − log T = αβ ∗ − log β ∗ + log 1 − e−β

T↑∞

$

#

2λ

1

2πeµ3 3 2λ

µ

− e Ei −

(4.9)

− log

2

λ

2

µ

and

)

+

*

,

1

3

λ

∗

lim C(v) − log v = log T − log β ∗ + log 1 − e−β + αβ ∗ + log

. (4.10)

v↑∞

2

2

2πe

Theorem 4.3 (Bounds for 0.5 ≤ α ≤ 1). If 0.5 ≤ α ≤ 1, then C(T, αT) is lowerbounded by

#

$

2λ

2πeµ3 3 2λ

1

µ

− e Ei −

(4.11)

C(T, αT) ≥ log T − log

2

λ

2

µ

and upper-bounded by

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− (1 − Q− + Q+ ) log(1 − Q− + Q+ )

;

!

"<

+ (Q− − Q+ ) max 0, log µ(Q− + Q+ ) + αT(Q− − Q+ ) + log(T + δ)

#

$

1

2λ

2πeµ3 3 2λ

− log

− e µ Ei −

.

(4.12)

2

λ

2

µ

for

Q−

Q+

T > 1 − min δ

(4.13)

where

and

are given in the (4.5) and (4.6), respectively.

Here, δ > µ is free parameter, and β ∗ is the unique solution to (4.7). A suboptimal

but useful choice of the free parameter in (4.12)

δ = log(T + 1) + 10µ

18

(4.14)

Chapter 4

Corollary 4.4 (Asymptotics for the case of 0.5 ≤ α ≤ 1). If 0.5 ≤ α ≤ 1, then

#

$

+

,

2λ

1

2πeµ3 3 2λ

µ

lim C(T, αT) − log T = − log

− e Ei −

(4.15)

T↑∞

2

λ

2

µ

and

+

,

3

1

λ

lim C(v) − log v = log T + log

v↑∞

2

2

2πe

(4.16)

Fig. 4.7 and Fig. 4.8 show the bounds of Theorem (4.3) for α = 0.7. In addition,

Fig. 4.11 and Fig. 4.12 show how the bounds in (4.3) and (4.12) performance with

different α. Fig. 4.9 and Fig. 4.10 show that the difference of the choice of different

δ, the blue line is the numerical optimal δ. Furthermore, the Fig. 4.13 shows the

continuity of the capacity.

α = 0.3

4.5

4

I(X; Y ) (nats)

3.5

3

2.5

2

1.5

Known upper bound (3.7)

1

Upper bound (4.3)

0.5

Lower bound (4.1)

0

0

10

20

30

40

T

50

60

70

80

90

100

Figure 4.5: Comparison between the upper and lower bound in (4.3) and (4.1) and

known upper bound (3.7) for the choice: µ = 0.5, λ = 0.25, and α = 0.3.

19

Chapter 4

Main Result

α = 0.3

4

3.5

I(X; Y ) (nats)

3

2.5

2

1.5

1

Known Upper bound (3.7)

Upper bound (4.3)

0.5

Lower bound (4.1)

0

0

1

2

3

4

5

6

7

8

9

10

v

Figure 4.6: Upper bound in (4.3) and (4.1) compare to the (3.7) for the choice:

T = 10 λ = 0.25, δ = log(T + 1) + 10µ and α = 0.3.

6

5

I(X; Y ) (nats)

4

3

2

1

0

Known upper bound (3.7)

Upper bound (4.12)

Lower bound (4.11)

−1

−2

−3

0

10

20

30

40

50

60

70

80

90

100

T

Figure 4.7: Bounds in (4.12), (4.11) and (3.7) for the choice: µ = 0.5, λ = 0.25, and

α = 0.7.

20

Chapter 4

5

4.5

I(X; Y ) (nats)

4

3.5

3

2.5

2

1.5

Known upper bound (3.7)

Upper bound (4.12)

Lower bound (4.11)

1

0.5

0

1

2

3

4

5

6

7

8

9

10

v

Figure 4.8: Bounds in (4.12), (4.11) and (3.7) for the choice: T = 10, λ = 0.25,

2

and α = 0.7.

δ = v+1

6

5

I(X; Y ) (nats)

4

3

2

1

0

Known bound (3.7)

Upper bound (sub-optimal δ)

−1

Upper bound (4.12)

−2

Lower bound (4.11)

−3

0

10

20

30

40

50

60

70

80

90

100

T

Figure 4.9: Bounds in (4.12), (4.11) with and (3.7) optimal and sub-optimal δ =

log(T + 1) + 10µ for the choice: µ = 0.5, λ = 0.25 and α = 0.7.

21

Chapter 4

Main Result

5

4.5

I(X; Y ) (nats)

4

3.5

3

2.5

2

Known bound (3.7)

Upper bound (sub-optimal δ)

1.5

Upper bound (4.12)

1

Lower bound (4.11)

0.5

0

1

2

3

4

5

6

7

8

9

10

v

Figure 4.10: Bounds in (4.12), (4.11) with and (3.7) optimal and sub-optimal δ =

2

v+1 for the choice: T = 10, λ = 0.25 and α = 0.7.

3

I(X; Y ) (nats)

2.5

2

1.5

1

α = 0.3

α = 0.4

0.5

α = 0.7

0

0

1

2

3

4

5

6

7

8

9

10

T

Figure 4.11: Upper bounds, (4.3) and (4.12), with different α for the choice: µ = 0.5

and λ = 0.25.

22

Chapter 4

4.5

I(X; Y ) (nats)

4

3.5

3

2.5

α = 0.3

α = 0.4

α = 0.7

2

1.5

0

1

2

3

4

5

6

7

8

9

10

v

Figure 4.12: Upper bounds, (4.3) and (4.12), with different α for the choice: T = 10

and λ = 0.25.

7

6

I(X; Y ) (nats)

5

4

3

2

upper bound

1

lower bound

0

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

α

Figure 4.13: Upper bounds and lower bounds with different α for the choice: T =

1000, µ = 0.5, and λ = 0.25.

23

Chapter 4

Main Result

24

Chapter 5

Derivations

5.1

5.1.1

Proof of Upper-Bound of Capacity

For 0 < α < 0.5

The derivation of the upper bounds (4.3) is based on Proposition (2.10) with the

following choice of output distribution:

−βy

β(1−p)

if 0 ≤ y ≤ T + δ,

# −β(1+ δ ) $ e T

T

fY (y) = T 1−e

(5.1)

νpe−ν(y−T−δ)

if y > T + δ,

where β, p, ν and δ are free parameters with following constraints:

β > 0,

(5.2)

δ ≥ 0,

(5.3)

ν > 0,

(5.4)

0 ≤ p ≤ 1.

(5.5)

The capacity is upper bounded as follow:

7 !

"8

C(T, αT) ≤ EQ∗ D fY |X (·|X)||fY (·)

(5.6)

T+δ

β

# β(1 −!p) " $ e− T y dy

f

(y|x)

log

≤ −h(N ) − EQ∗

Y

|X

δ

0

T 1 − e−β 1+ T

5- ∞

)

* 6

fY |X (y|x) log pνe−ν(y−T−δ) dy .

(5.7)

− EQ∗

T+δ

Since

fY |X (y|x) =

:

#

$

λ

λ(y − x − µ)2

exp −

I{y ≥ x},

2π(y − x)3

2µ2 (y − x)

25

(5.8)

Chapter 5

Derivations

plugging fY |X into (5.7) then we can get

C(T, αT) ≤ −h(N ) − log p − log ν − ν(T(1 − α) + δ + µ)

+ EQ∗ [c1 (X)]

1

2

δ

1 − e−β(1+ T )

pν

· log

+ log T + log

+ ν(T + δ)

β

1−p

$

#

β

− ν EQ∗ [Xc1 (X) + c2 (X)] ,

+

T

(5.9)

where

-

T +δ−x

9

2

λ −λ(t−µ)

e 2µ2 t dt,

2πt3

0

- T +δ−x 9

2

λ −λ(t−µ)

e 2µ2 t dt.

c2 (x) =

2πt

0

c1 (x) =

To further bound c1 (x) and c2 (x), we let w = ut , τ (x) =

c1 (x) =

-

T +δ−x

0

-

1

τ (x)

9

9

µ

T+δ−x

(5.10)

(5.11)

and ρ =

2

λ −λ(t−µ)

e 2µ2 t dt

2πt3

ρ −ρ (w−1)2

2w

dw

e

2πw3

0

1

1:

22

F

1

√

− τ (x)

=1−Q

ρ

τ (x)

1

1:

22

F

1

√

2ρ

+ τ (x)

ρ

+e Q

τ (x)

- T +δ−x 9

2

λ −λ(t−µ)

e 2µ2 t dt

c2 (x) =

2πt

0

9

- 1

τ (x)

ρ −ρ (w−1)2

2w

dw

µw

e

=

2πw3

0

G

1

1:

22

F

1

√

− τ (x)

=µ 1−Q

ρ

τ (x)

1

1:

22H

F

1

√

2ρ

+ τ (x)

ρ

.

− µe Q

τ (x)

=

λ

µ

(5.12)

(5.13)

(5.14)

(5.15)

(5.16)

(5.17)

Since 0 ≤ x ≤ T, so τ (x) must satisfy

0 ≤ τ (x) ≤

µ

.

δ

(5.18)

Noted that because of (5.18), c1 (x) and c2 (x) are both monotonically decreasing in

τ (x); furthermore, c1 (x) and c2 (x) goes to zero as τ (x) goes to zero. Hence, we can

26

5.1 Proof of Upper-Bound of Capacity

Chapter 5

the bound of c1 (x) and c2 (x) by using (5.18)

1: 1:

1: 1:

9 22

9 22

λ

λ

δ

µ

λ

δ

µ

2

−

+

+ e µQ

0≤1−Q

µ

µ

δ

µ

µ

δ

≤ c1 (x) ≤ 1

(5.19)

1: 1:

1: 1:

9 22H

9 22

λ

λ

δ

µ

λ

δ

µ

2

−

+

− µe µ Q

0≤µ 1−Q

µ

µ

δ

µ

µ

δ

G

≤ c2 (x) ≤ µ.

(5.20)

To simplify the notations, we use the shorthand given in (4.5) and (4.6):

0 ≤ 1 − Q− + Q+ ≤ c1 (x) ≤ 1

−

+

0 ≤ µ(1 − Q − Q ) ≤ c2 (x) ≤ µ

(5.21)

(5.22)

Next, we choose for the free parameters p and ν:

p ! 1 − EQ∗ [c1 (X)]

1 − EQ∗ [c1 (X)]

ν!

µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

(5.23)

(5.24)

From (5.21), we know that

0 ≤ 1 − EQ∗ [c1 (X)] ≤ 1

(5.25)

αT + µ − EQ∗ [Xc1 (X) + c2 (X)] ≥ 0,

(5.26)

and

so that 0 ≤ p ≤ 1 and ν ≥ 0 as required. Plugging p and ν into the (5.9) then we

get the following bound:

$

#

(T + δ)(1 − EQ∗ [c1 (X)])

C(T, αT) ≤ −h(N ) + (1 − EQ∗ [c1 (X)]) 1 −

µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

!

"

− 2 (1 − EQ∗ [c1 (X)]) log 1 − EQ∗ [c1 (X)]

!

"

+ (1 − EQ∗ [c1 (X)]) log µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

#

#

" $$

!

−β 1+ Tδ

+ EQ∗ [c1 (X)] log T − log β + log 1 − e

β

EQ∗ [Xc1 (X) + c2 (X)] − EQ∗ [c1 (X)] log EQ∗ [c1 (X)]

T

then we choose β as:

+

β=

TEQ∗ [c1 (X)]

αβ ∗

EQ∗ [Xc1 (X) + c2 (X)]

where β ∗ is non-zero solution to α =

1

β∗

−

∗

e−β

∗

1−e−β

(5.27)

(5.28)

for 0 < α < 0.5. Furthermore,

!

"

− log β = − log(αT) − log β ∗ − log EQ∗ [c1 (X)] + log EQ∗ [Xc1 (X) + c2 (X)] (5.29)

!

"

≤ − log(αT) − log β ∗ − log EQ∗ [c1 (X)] + log αT + µ ,

(5.30)

27

Chapter 5

Derivations

and

#

log 1 − e−β

!

1+ Tδ

"$

1

−(T +δ) E

= log 1 − e

#

−

≤ log 1 − e

EQ∗ [c1 (X)]

Q∗ [Xc1 (X)+c2

αβ ∗

(X)]

2

αβ ∗ (T+δ)

αT+µ−αT(Q− −Q+ )−µ(Q− +Q+ )

$

(5.31)

(5.32)

Hence, for 0 < α < 0.5

#

$

(T + δ)(1 − EQ∗ [c1 (X)])

C(T, αT) ≤ −h(N ) + (1 − EQ∗ [c1 (X)]) 1 −

αT(Q− − Q+ ) + µ(Q− + Q+ )

!

"

− 2 (1 − EQ∗ [c1 (X)]) log 1 − EQ∗ [c1 (X)]

!

"

+ (1 − EQ∗ [c1 (X)]) log µ(Q− + Q+ ) + αT(Q− − Q+ )

1

+ EQ∗ [c1 (X)] αβ ∗ − log α − log β ∗ + log(αT + µ)

#

−

+ log 1 − e

αβ ∗ (T+δ)

αT+µ−αT(Q− −Q+ )−µ(Q− +Q+ )

− 2EQ∗ [c1 (X)] log EQ∗ [c1 (X)]

It can be shown that for t >

$2

(5.33)

√1

e+1

z(t) = 1 − t − 2(1 − t) log(1 − t) − 2t log t

(5.34)

is monotonically decreasing. In our proof, we choose t = EQ∗ [c1 (X)], as long as

1

1

1 − Q− + Q+ ≥ √e+1

, EQ∗ [c1 (X)] always larger than √e+1

. In addition, for δ ≥ µ

this requirement always be satisfied.

In addition,

(T + δ)(1 − EQ∗ [c1 (X)])

> 0.

(5.35)

αT(Q− − Q+ ) + µ(Q− + Q+ )

Hence, we can get the upper bound for 0 < α < 0.5:

"

!

C(T, αT) ≤ −h(N ) + Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− 2(1 − Q− + Q+ ) log(1 − Q− + Q+ )

;

!

"<

+ (Q− − Q+ ) max 0, log µ(Q− + Q+ ) + αT(Q− − Q+ )

1

+ EQ∗ [c1 (X)] αβ ∗ − log αβ ∗ + log(αT + µ)

#

+ log 1 − e

−

αβ ∗ (T +δ)

αT+µ−αT(Q− −Q+ )−µ(Q− +Q+ )

To further bound the capacity, we have to let

1

2

∗

αβ ∗ e−αβ

1

" −µ ,

!

T>

α

δ

−β ∗ min 1, α

µ 0

1−e

28

$2

(5.36)

(5.37)

5.1 Proof of Upper-Bound of Capacity

Chapter 5

where δ0 is the minimun value of δ, then it can be shown that

#

$

αβ ∗ (T +δ)

−

∗

∗

− −Q+ )−µ(Q− +Q+ )

αT+µ−αT(Q

> 0. (5.38)

αβ − log αβ + log(αT + µ) + log 1 − e

Hence, in (5.36), the EQ∗ [c1 (X)] need to be upper-bounded, finally we get the upper

bound of capacity when 0 < α < 0.5:

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− 2(1 − Q− + Q+ ) log(1 − Q− + Q+ )

;

!

"<

+ (Q− − Q+ ) max 0, log µ(Q− + Q+ ) + αT(Q− − Q+ ) + αβ ∗

#

$

αβ ∗ (T +δ)

−

∗

− −Q+ )−µ(Q− +Q+ )

αT+µ−αT(Q

− log αβ + log(αT + µ) + log 1 − e

#

$

1

2πeµ3 3 2λ

2λ

− log

− e µ Ei −

.

(5.39)

2

λ

2

µ

5.1.2

For 0.5 ≤ α ≤ 1

For proving (4.12), we use different output distribution:

I

1−p

if 0 ≤ y ≤ T + δ,

fY (y) = T+δ

−ν(y−T−δ)

νpe

if y > T + δ,

(5.40)

where p, ν and δ are free parameters with following constraints:

δ ≥ 0,

(5.41)

ν > 0,

(5.42)

0 ≤ p ≤ 1.

(5.43)

From similar derivation in section 5.1.1, choosing

p ! 1 − EQ∗ [c1 (X)] ,

1 − EQ∗ [c1 (X)]

ν!

.

µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

(5.44)

(5.45)

We get:

#

(T + δ)(1 − EQ∗ [c1 (X)])

C(T, αT) ≤ −h(N ) + (1 − EQ∗ [c1 (X)]) 1 −

µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

!

"

− 2 (1 − EQ∗ [c1 (X)]) log 1 − EQ∗ [c1 (X)]

!

"

+ (1 − EQ∗ [c1 (X)]) log µ + αT − EQ∗ [Xc1 (X) + c2 (X)]

− EQ∗ [c1 (X)] log EQ∗ [c1 (X)] + EQ∗ [c1 (X)] log(T + δ)

$

(5.46)

√

It can be shown that for t > 14 ( 5 − 1)2

z(t) = 1 − t − 2(1 − t) log(1 − t) − t log t

29

(5.47)

Chapter 5

Derivations

is monotonically decreasing. Same as before, we choose t = EQ∗ [c1 (X)], as long

√

√

as 1 − Q− + Q+ ≥ 14 ( 5 − 1)2 , the EQ∗ [c1 (X)] always larger than 14 ( 5 − 1)2 . In

addition, for δ ≥ µ this requirement always be satisfied.

Hence, we get the upper bound for 0.5 ≤ α ≤ 1:

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− (1 − Q− + Q+ ) log(1 − Q− + Q+ )

;

!

"<

+ (Q− − Q+ ) max 0, log µ(Q− + Q+ ) + αT(Q− − Q+ ) + log(T + δ)

#

$

2λ

2πeµ3 3 2λ

1

− log

− e µ Ei −

.

(5.48)

2

λ

2

µ

where

T ≥ 1 − min δ

1 √

1 − Q− + Q+ ≥ ( 5 − 1)2

4

5.2

5.2.1

(5.49)

(5.50)

Proof of Lower-Bound of Capacity

For 0 < α < 0.5

One can always find a lower bound on capacity by dropping the maximization and

choosing an arbitrary input distribution fX (x) in (1.13). To get a tight bound,

this choice of fX (x) should yield a mutual information that is reasonably close

to capacity. From [3], we know the lower-bound is tight when the input is an

exponential distribution; however, since we have a peak constraint, we only can

choose the cut exponential as our input distribution, fX (x), and fN (n) denotes the

channel distribution,

−β

β

e T x I{0 ≤ x ≤ T},

−β

T(1 − e )

9

$

#

−λ(n − µ)2

λ

I{n ≥ 0}

exp

fN (n) =

2πn3

2µ2 n

fX (x) =

(5.51)

(5.52)

where β > 0 is free parameter. Therefore, the channel output fY (y) is

fY (y) = (fX ∗ fN )(y)

- ∞

fN (x)fX (y − x)dx

=

−∞

#

$

- ∞9

−λ(x − µ)2

λ

exp

=

I{x ≥ 0}

2πx3

2µ2 x

−∞

−β

β

e T (y−x) I{0 ≤ y − x ≤ T} dx.

·

−β

T(1 − e )

30

(5.53)

(5.54)

(5.55)

5.2 Proof of Lower-Bound of Capacity

Chapter 5

To calculate the convolution, we separate it to two cases, y − T ≤ 0 and y − T > 0.

and follow a similar calculation as given in [11].

For the first case, y ≤ T, we have:

fY (y) =

Let a2 =

λ

2

and b2 =

9

-

λ

λ

β

−βy

eµ T

−β

2π T(1 − e )

λ

2µ2

J

y

3

x− 2 e

!

−x

0

"

λ

− 2x

KL

I1 (y)

− βT . We assume b2 ≥ 0, so

T≥

λ

− βT

2µ2

dx

M

2βµ2

.

λ

(5.56)

(5.57)

Then I1 (y) is

I1 (y) =

=

-

y

-0

y

3

x− 2 e

!

λ

− βT

2µ2

−x

a2

3

"

λ

− 2x

dx

(5.58)

2

x− 2 e− x −xb dx.

(5.59)

0

1

Let t = x− 2 ,

I1 (y) = 2

-

∞

√1

y

#

$

b2

exp −a t − 2 dt

t

2 2

(5.60)

from the Abramowitz and Stegun, 7.4.33 [12]:

5

#

$6

√ N

a

π

√

2ab

1 − erf b y + √

e

I1 (y) =

2a

y

$6O

5

#

a

√

−2ab

1 − erf −b y + √

+e

y

" 1:

9 I % !

#

$ : 2

2λ λ2 − βT

λ

2π

λ

β

2µ

=

e

−

Q

2y

+

2

λ

2µ

T

y

% !

: 2P

" 1 :

#

$

− 2λ λ2 − βT

λ

β

λ

2µ

.

+e

Q − 2y

−

+

2

2µ

T

y

(5.61)

(5.62)

Similary, for the case that y − T > 0

fY (y) =

9

λ

β

λ

−βy

eµ T

−β

2π T(1 − e )

31

-

y

3

x− 2 e

!

−x

λ

− βT

2µ2

y−T

J

KL

I2 (y)

"

λ

− 2x

dx

M

(5.63)

Chapter 5

Derivations

where

I2 (y) =

=

-

y

x

-y−T

y

!

− 23 −x

e

3

λ

− βT

2µ2

a2

"

λ

− 2x

dx

(5.64)

2

x− 2 e− x −xb dx

y−T

&

1

y−T

#

(5.65)

$

b2

=2

exp −a t − 2 dt

(5.66)

t

√1

y

1#

#

$

$ 2

- ∞

∞

b2

b2

2 2

2 2

exp −a t − 2 dt −

exp −a t − 2 dt

(5.67)

=2

t

t

√1

√1

y

5

#

5

#

$6 y−T

$6O

√ N

a

a

π

√

√

2ab

−2ab

1 − erf b y + √

1 − erf −b y + √

=

e

+e

2a

y

y

5

#

$6

√ N

F

a

π

e2ab 1 − erf b y − T + √

+

2a

y−T

5

#

$6O

F

a

−2ab

1 − erf −b y − T + √

(5.68)

+e

y−T

%

:

:

2

" 1

!

9 I

#

$

2λ λ2 − βT

λ

2π

λ

β

2µ

e

−

Q

2y

+

=

λ

2µ2

T

y

% !

" 1 :

#

$ : 2P

− 2λ λ2 − βT

λ

β

λ

2µ

+e

Q − 2y

−

+

2µ2

T

y

%

:

I

2

1

"

!

9

#

$ :

2λ λ2 − βT

λ

β

2π

λ

2µ

e

−

Q

2(y − T)

−

+

λ

2µ2

T

y−T

% !

:

2P

" 1 :

#

$

− 2λ λ2 − βT

λ

β

λ

2µ

(5.69)

+e

−

Q − 2(y − T)

+

2µ2

T

y−T

-

2 2

= I1 (y) − I˜2 (y)

(5.70)

where I˜2 (y) > 0 for all y.

Hence, we have output distribution fY (y):

(

λ

− βT y

β

λ

µ

I1 (y)

−β ) e

2π

T(1−e

fY (y) = (

β

λ

− y

β

λ

e µ T I (y)

2π

T(1−e−β )

2

y≤T

(5.71)

y > T.

This now yields the following lower bound on capacity:

C ! max I(X; Y )

fX (x)

Q

≥ I(X; Y )Q

(5.72)

(5.73)

X∼f (x)

Q

= (h(Y ) − h(Y |X))QX∼f (x)

Q

= h(Y )QX∼f (x) − h(N )

Q

= EY [− log fY (Y )] Q

− h(N )

X∼f (x)

32

(5.74)

(5.75)

(5.76)

5.2 Proof of Lower-Bound of Capacity

Chapter 5

= Pr[Y > T]E[− log fY (Y )|Y > T] + Pr[Y ≤ T]E[− log fY (Y )|Y ≤ T]

− h(N )

!

(5.77)

"

= −h(N ) + Pr[Y > T] + Pr[Y ≤ T]

2

1

9

* λ

)

λ

+ log T − log β + log 1 − e−β −

· − log

2π

µ

#

$

β

+ Pr[Y ≤ T]

E[Y |Y ≤ T] − E[log I1 (Y )|Y ≤ T]

T

#

Q

R

S$

β

Q

˜

+ Pr[Y > T]

E[Y |Y > T] − E log (I1 (Y ) − I2 (Y ))QY > T

T

9

* λ

)

λ

+ log T − log β + log 1 − e−β −

≥ −h(N ) + − log

2π

µ

β

+ E[Y ] − E[log I1 (Y )]

T 9

λ β

λ

≥ − log

+ log T − log β + log (1 − e−β ) − + (αT + µ)

2π

µ

T

− E[log I1 (Y )] − h(N )

(5.78)

(5.79)

(5.80)

We further bound the log term,

1:

$ : 2

λ

β

λ

e

Q

2y

−

+

I1 (y) =

2

2µ

T

y

% !

1: #

"G

$ : 2HP

− 2λ λ2 − βT

λ

β

λ

2µ

(5.81)

+e

1−Q

2y

−

−

2µ2

T

y

%

I

1: 1: #

9

$ 9 22

2

2µ2 β

2π − µλ2 − 2λβ

λ

y

µ

T

e

1−

−

=

1−Q

λ

µ

µ

λT

y

22P

1: 1: #

&

$

9

2µ2 β

2λ

2µ2 β

λ

y

µ

+ e µ 1− λT Q

, (5.82)

1−

+

µ

µ

λT

y

9

2π

λ

I

%

2λ

!

λ

− βT

2µ2

"

#

because the function

1: 1: #

$ 9 22

2µ2 β

λ

y

µ

1−

−

y )→1 − Q

µ

µ

λT

y

1: 1: #

&

$ 9 22

2β

2µ2 β

2λ

λ

y

µ

2µ

1−

λT Q

+eµ

1−

+

µ

µ

λT

y

(5.83)

is monotonically increasing, so we upper-bounded it by y goes infinity, then (5.83)

is bouned by 1, i.e.

I1 (y) ≤

9

2π −

e

λ

33

%

λ2

− 2λβ

T

µ2

(5.84)

Chapter 5

Derivations

which does not depend on y anymore, so we can get rid of the expectation directly.

Finally, we get the lower bound of capacity:

: #

$

)

* λ β

λ

β

−β

C(T, αT) ≥ log T − log β + log 1 − e

− + (αT + µ) + 2λ

−

µ

T

2µ2

T

#

$

3

1

2πeµ

3 2λ

2λ

− log

− e µ Ei −

(5.85)

2

λ

2

µ

: #

$

* λ β∗

)

λ

β∗

∗

−β ∗

− +

(αT + µ) + 2λ

−

= log T − log β + log 1 − e

µ

T

2µ2

T

#

$

3

1

2πeµ

3 2λ

2λ

− log

− e µ Ei −

(5.86)

2

λ

2

µ

Here, we choose β as solution of β ∗ which satisfied α =

5.2.2

1

β∗

−

∗

eβ

∗

1−eβ

for 0 < α < 0.5.

For 0.5 ≤ α ≤ 1

For 0.5 ≤ α ≤ 1, we choose uniform distribution as our input distribution:

fX (x) =

1

· I{0 ≤ x ≤ T}

T

(5.87)

since E[X] = 0.5, the average delay constraint is always satisfied.

Hence,

fY (y) = (fX ∗ fN )(y)

- ∞

fN (x)fX (y − x) dx

=

−∞

9

2

1 ∞

λ −λ(x−µ)

2x

2µ

e

I{x ≥ 0}I{0 ≤ y − x ≤ T} dx

=

T −∞ 2πx3

Similary, we separate it to two cases, y − T ≤ 0 and y − T > 0.

For the first case, y − T ≤ 0:

- 9

2

λ −λ(x−µ)

1 y

2µ2 x

e

dx

fY (y) =

T 0

2πx3

(5.88)

(5.89)

(5.90)

(5.91)

Note that the integration is like the c1 (x) we use in the upper bound, the only

different thing is the range of the integration. So, we have:

- y:

λ(w−1)2

λ

1 µ

− 2µw

e

dw

(5.92)

fY (y) =

T 0

2πµw3

G

1: #9

9 $2

λ

y

µ

1

1−Q

−

=

T

µ

µ

y

1: #9

9 $2 H

λ

λ

y

µ

2µ

+e Q

+

(5.93)

µ

µ

y

! I3 (y)

(5.94)

34

5.3 Asymptotic Capacity of AIGN Channel

Chapter 5

For the second case, y − T > 0:

9

2

1 y

λ −λ(x−µ)

2µ2 x

dx

e

fY (y) =

T y−T 2πx3

G- 9

H

- y−T 9

2

y

−λ(x−µ)2

λ −λ(x−µ)

λ

1

2

2

=

e 2µ x dx −

e 2µ x dx

T 0

2πx3

2πx3

0

(5.95)

(5.96)

= I3 (y) − I˜3 (y)

(5.97)

where I˜3 > 0 for all y. Hence, we have output distribution fY (y):

I

y≤T

I3 (y)

fY (y) =

I3 (y) − I˜3 (y) y > T

(5.98)

This now yields the following lower bound on capacity:

C ! max I(X; Y )

fX (x)

Q

≥ I(X; Y )Q

(5.99)

(5.100)

X∼f (x)

Q

= (h(Y ) − h(Y |X))QX∼f (x)

Q

= h(Y )QX∼f (x) − h(N )

Q

− h(N )

= −EY [log fY (Y )] Q

X∼f (x)

(5.101)

(5.102)

(5.103)

= Pr[Y > T]E[− log fY (Y )|Y > T] + Pr[Y ≤ T]E[− log fY (Y )|Y ≤ T]

− h(N )

= −h(N ) − Pr[Y ≤ T]E[log I3 (Y )|Y ≤ T]

Q

R

S

Q

− Pr[Y > T]E log (I3 (Y ) − I˜3 (Y ))QY > T

≥ −h(N ) − E[log I3 (Y )]

(5.104)

(5.105)

(5.106)

We futher bound I3 (y):

G

1: #9

1: #9

9 $2

9 $2 H

λ

1

λ

y

µ

λ

y

µ

2µ

1−Q

−

+e Q

+

(5.107)

I3 (y) =

T

µ

µ

y

µ

µ

y

1

≤ .

(5.108)

T

Equation (5.108) is because (5.107) is monotonically decreasing in y.

Hence, we get the lower bound for 0.5 ≤ α ≤ 1:

#

$

2λ

1

2πeµ3 3 2λ

µ

C(T, αT) ≥ log T − log

− e Ei −

.

2

λ

2

µ

5.3

(5.109)

Asymptotic Capacity of AIGN Channel

In this section, we try to figure out how the capacity behaves when the drift velocity

v or the peak-delay constraint T tend to infinity.

35

Chapter 5

5.3.1

Derivations

When T Large

From chapter 4, we have for 0 < α < 0.5:

∗

)

C(T, αT) ≥ log T − log β + log 1 − e

−β ∗

*

#

2λ

1

2πeµ3 3 2λ

− e µ Ei −

− log

2

λ

2

µ

λ β∗

− +

(αT + µ) +

µ

T

$

:

2λ

#

λ

β∗

−

2µ2

T

$

(5.110)

when T goes to infinity, we get

)

*

∗

C(T) ≥ log T + αβ ∗ − log β ∗ + log 1 − e−β

$

#

1

2πeµ3 3 2λ

2λ

− e µ Ei −

+ o(1)

− log

2

λ

2

µ

(5.111)

and the capacity upper-bounded by

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− 2(1 − Q− + Q+ ) log(1 − Q− + Q+ )

!

!

""+

+ (Q− − Q+ ) log µ(Q− + Q+ ) + αT(Q− − Q+ )

+ αβ ∗

#

$

∗

αβ (T +δ)

−

∗

− −Q+ )−µ(Q− +Q+ )

αT+µ−αT(Q

− log αβ + log(αT + µ) + log 1 − e

#

$

2λ

1

2πeµ3 3 2λ

− e µ Ei −

− log

(5.112)

2

λ

2

µ

where

1: 1:

9 22

λ

δ

µ

−

Q− ! Q

µ

µ

δ

1: 1:

9 22

λ

λ

δ

µ

2µ

+

Q !e Q

+

µ

µ

δ

(5.113)

(5.114)

As T goes to infinity, the capacity become:

)

*

∗

C(T, αT) ≤ log T + αβ ∗ − log β ∗ + log 1 − e−β + o(1)

#

$

2πeµ3 3 2λ

1

2λ

− e µ Ei −

− log

2

λ

2

µ

(5.115)

Here, (5.115) is because when T goes to infinity, Q− and Q+ will go to zero.

From (5.111) and (5.115). Note that the upper bound and lower bound coincide,

which gives us

)

+

,

*

∗

lim C(T, αT) − log T = αβ ∗ − log β ∗ + log 1 − e−β

T↑∞

#

$

2λ

1

2πeµ3 3 2λ

− log

− e µ Ei −

(5.116)

2

λ

2

µ

36

5.3 Asymptotic Capacity of AIGN Channel

For 0.5 ≤ α ≤ 1:

Chapter 5

#

$

1

2πeµ3 3 2λ

2λ

µ

C(T, αT) ≥ log T − log

− e Ei −

2

λ

2

µ

(5.117)

when T goes to infinity, we get the same equation:

$

#

1

2πeµ3 3 2λ

2λ

− e µ Ei −

C(T, αT) ≥ log T − log

2

λ

2

µ

(5.118)

and the upper bound, from (4.12):

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− (1 − Q− + Q+ ) log(1 − Q− + Q+ )

;

!

"<

+ (Q− − Q+ ) max 0, log µ(Q− + Q+ ) + αT(Q− − Q+ ) + log(T + δ)

#

$

2λ

2πeµ3 3 2λ

1

µ

− log

− e Ei −

.

(5.119)

2

λ

2

µ

where Q− and Q+ are same as (5.113) and (5.114).

We choose δ = log T, then as T goes to infinity, Q− and Q+ will go to zero. Therefore,

we get asymptotically upper bound:

#

$

2λ

1

2πeµ3 3 2λ

µ

C(T, αT) ≤ log T − log

− e Ei −

+ o(1)

(5.120)

2

λ

2

µ

We can see (5.118) and (5.120) are coincide as T goes to infinity. Hence we get the

asympototic capacity when T goes to infinity for 0.5 ≤ α ≤ 1:

#

$

+

,

2λ

2πeµ3 3 2λ

1

− e µ Ei −

(5.121)

lim C(T, αT) − log T = − log

T↑∞

2

λ

2

µ

5.3.2

v Large

We rewrite the bound (4.3) by using v = µ1 , for 0 < α < 0.5:

#

$ : # 2

*

)

∗

λv

β

1

∗

C(v) ≥ log T − log β ∗ + log 1 − e−β − λv +

αT +

+ 2λ

T

v

2

1

2πe 3

− log 3 − e2λv Ei(−2λv)

2

λv

2

#

$ : # 2

)

*

∗

v λ

β

1

∗

≥ log T − log β ∗ + log 1 − e−β − vλ +

αT +

+ 2λ

T

v

2

#

$

1

λ

3

1

3

+ log v + log

+ log 1 +

2

2

2πe 4

2vλ

β∗

−

T

$

(5.122)

$

β∗

−

T

(5.123)

where (5.123) is simply plugging in the lower bound of Ei(·) from Proposition 2.14.

Its asymptotic lower bound is:

)

*

3

1

λ

∗

+ o(1). (5.124)

C(v) ≥ log T − log β ∗ + log 1 − e−β + αβ ∗ + log v + log

2

2

2πe

37

Chapter 5

Derivations

Similary, we rewrite (4.12):

"

!

C(v) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− 2(1 − Q− + Q+ ) log(1 − Q− + Q+ )

N

#

$O

1 −

−

+

+

−

+

(Q + Q ) + αT(Q − Q )

+ (Q − Q ) max 0, log

+ αβ ∗

v

1

2

αβ ∗ (T +δ)

−

1

1

1

−

+

−

+

− log αβ ∗ + log(αT + ) + log 1 − e αT+ v −αT(Q −Q )− v (Q +Q )

v

1

2πe 3

(5.125)

− log 3 − e2λv Ei(−2λv)

2

λv

2

where

22

1

Q− ! Q

vδ

1

1

9 22

√

√

1

+

2λv

Q !e Q

vλ

vδ +

vδ

1

We choose δ =

zero. For Q+ :

e

√1 ,

v

2λv

Q

√

1

√

λv

δv −

9

(5.126)

(5.127)

then when v goes to infinity, it is obvious that Q− will go to

1

√

vλ

1

√

vδ +

9

1

vδ

22

1 − vλ

≤ e 2

2

& "2

!√

1

vδ+ vδ

(5.128)

which will also goes to zero as v goes to infinity. Therefore, we get asymptotically

upper bound:

*

)

3

1

λ

∗

+ o(1). (5.129)

C(v) ≤ log T − log β ∗ + log 1 − e−β + αβ ∗ + log v + log

2

2

2πe

We can see (5.124) and (5.129) are coincide as v goes to infinity. Hence we get the

asympototic capacity for v goes to infinity:

+

*

,

)

3

λ

1

∗

(5.130)

lim C(v) − log v = log T − log β ∗ + log 1 − e−β + αβ ∗ + log

v↑∞

2

2

2πe

For 0.5 ≤ α ≤ 1:

1

2πe 3

log 3 − e2vλ Ei(−2λv)

2

λv

2

#

$

3

1

λ

3

1

+ log 1 +

≥ log T + log v + log

2

2

2πe 4

2vλ

C(v) ≥ log T −

(5.131)

(5.132)

where (5.132) is simply plugging in the lower bound of Ei(·) from Proposition 2.14.

Its asymptotic lower bound is:

C(v) ≥ log T +

3

1

λ

log v + log

+ o(1)

2

2

2πe

38

(5.133)

5.3 Asymptotic Capacity of AIGN Channel

Chapter 5

and the upper bound, from (4.12):

"

!

C(T, αT) ≤ Q− − Q+ − 2 Q− − Q+ log (Q− − Q+ )

− (1 − Q− + Q+ ) log(1 − Q− + Q+ )

N

#

$O

1 −

−

+

+

−

+

(Q + Q ) + αT(Q − Q )

+ (Q − Q ) max 0, log

+ log(T + δ)

v

2πe 3

1

− log 3 − e2λv Ei(−2λv) .

(5.134)

2

λv

2

where Q− and Q+ are defined by (5.126) and (5.127).

Similary, we choose δ = √1v , as v goes to infinity, Q− and Q+ will go to zero.

Therefore, we get asymptotically upper-bound for 0.5 ≤ α ≤ 1:

C(v) ≤ log T +

3

1

λ

log v + log

+ o(1)

2

2

2πe

(5.135)

We can see (5.133) and (5.135) are coincide as v goes to infinity. Hence we get the

asympototic capacity when v goes to infinity for 0.5 ≤ α ≤ 1:

,

+

1

λ

3

lim C(v) − log v = log T + log

v↑∞

2

2

2πe

39

(5.136)

Chapter 5

Derivations

40

Chapter 6

Discussion and Conclusion

This thesis provide new upper bound and lower bound on the capacity of the AIGN

channel in the situation of both a peak delay constraint and average delay constraint.

We have derived the upper bound and lower bound of capacity, and they are very

tight when either T or v goes to infinity. We also have found that as α increased,

our upper bound become the difference between known upper bound is increased.

For the first point, it is because for fixed peak delay constraint, the average delay

constraint become weaker and weaker as α growing;however, as α increased, the peak

constraint become stronger, and that’s the main reason for the second phenomenon.

Moreover, when the fluid velocity, v, is extremely small, from the Fig. 4.6 and

Fig. 4.8, the noise caused by Brownian motion actually helps the transmission.

With the help of [11], we were able to compute the exact output distribution

of an exponential input. This lower bound (4.1) was much tighter than the known

bound with respect to both v and T. It turned out that together with the known

upper bound, this lower bound allowed us to derive the asymptotic capacity at high

T and high v.

For future research, we propose the following problems related to the additive

inverse Gaussian noise channel:

• Derivation of the channel capacity when T and v is small.

• Proof for α ≥ 0.5, the capacity dose not depend on the α.

41

Chapter 6

Discussion and Conclusion

42

List of Figures

1.1

1.2

Wiener process of molecular communication channel. . . . . . . . . .

The relation between the molecule’s time and position. . . . . . . . .

1

3

3.3

3.4

Known bounds for the choice: µ = 0.5, λ = 1 respect to m. . . . . .

Known bounds for the choice: m = 1, λ = 1 respect to v. . . . . . .

14

15

4.5

Comparison between the upper and lower bound in (4.3) and (4.1)

and known upper bound (3.7) for the choice: µ = 0.5, λ = 0.25, and

α = 0.3. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Upper bound in (4.3) and (4.1) compare to the (3.7) for the choice:

T = 10 λ = 0.25, δ = log(T + 1) + 10µ and α = 0.3. . . . . . . . . . .

Bounds in (4.12), (4.11) and (3.7) for the choice: µ = 0.5, λ = 0.25,

and α = 0.7. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Bounds in (4.12), (4.11) and (3.7) for the choice: T = 10, λ = 0.25,

2

δ = v+1

and α = 0.7. . . . . . . . . . . . . . . . . . . . . . . . . . . .

Bounds in (4.12), (4.11) with and (3.7) optimal and sub-optimal δ =

log(T + 1) + 10µ for the choice: µ = 0.5, λ = 0.25 and α = 0.7. . . .

Bounds in (4.12), (4.11) with and (3.7) optimal and sub-optimal δ =

2

v+1 for the choice: T = 10, λ = 0.25 and α = 0.7. . . . . . . . . . . .

Upper bounds, (4.3) and (4.12), with different α for the choice: µ =

0.5 and λ = 0.25. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Upper bounds, (4.3) and (4.12), with different α for the choice: T = 10

and λ = 0.25. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Upper bounds and lower bounds with different α for the choice: T =

1000, µ = 0.5, and λ = 0.25. . . . . . . . . . . . . . . . . . . . . . . .

4.6

4.7

4.8

4.9

4.10

4.11

4.12

4.13

43

19

20

20

21

21

22

22

23

23

Bibliography

[1] K. V. Srinivas, R. S. Adve, and A. W. Eckford, “Molecular communication in

fluid media: The additive inverse Gaussian noise channel,” December 2010,

arXiv:1012.0081v2 [cs.IT]. [Online]. Available: http://arxiv.org/abs/1012.0081

v2

[2] C. E. Shannon, “A mathematical theory of communication,” Bell System Technical Journal, vol. 27, pp. 379–423 and 623–656, July and October 1948.

[3] H.-T. Chang and S. M. Moser, “Bounds on the capacity of the additive inverse

Gaussian noise channel,” in Proceedings IEEE International Symposium on Information Theory (ISIT), Cambridge, MA, USA, July 1–6, 2012, pp. 304–308.

[4] R. S. Chhikara and J. L. Folks, The Inverse Gaussian Distribution — Theory,

Methodology, and Applications. New York: Marcel Dekker, Inc., 1989.

[5] I. S. Gradshteyn and I. M. Ryzhik, Table of Integrals, Series, and Products,

6th ed., A. Jeffrey, Ed. San Diego: Academic Press, 2000.

[6] V. Seshadri, The Inverse Gaussian Distribution — A Case Study in Exponential

Families. Oxford: Clarendon Press, 1993.

[7] T. Kawamura and K. Iwase, “Characterizations of the distributions of power

inverse Gaussian and others based on the entropy maximization principle,”

Journal of the Japan Statistical Society, vol. 33, no. 1, pp. 95–104, January

2003.

[8] T. M. Cover and J. A. Thomas, Elements of Information Theory, 2nd ed. New

York: John Wiley & Sons, 2006.

[9] A. Lapidoth and S. M. Moser, “Capacity bounds via duality with

applications to multi-antenna systems on flat fading channels,” June 25,

2002, submitted to IEEE Transactions on Information Theory, available at

<http://www.isi.ee.ethz.ch/∼moser>. [Online]. Available: http://www.isi

.ee.ethz.ch/∼moser

[10] S. M. Moser, Information Theory (Lecture Notes), version 1, fall semester

2011/2012, Information Theory Lab, Department of Electrical Engineering,

45

Bibliography

National Chiao Tung University (NCTU), September 2011. [Online]. Available:

http://moser.cm.nctu.edu.tw/scripts.html

[11] W. Schwarz, “On the convolution of inverse Gaussian and exponential random variables,” Communications in Statistics — Theory and Methods, vol. 31,

no. 12, pp. 2113–2121, 2002.

[12] M. Abramowitz and I. Stegun, Handbook of Mathematical Functions With Formulas, Graphs, and Mathematical Tables, 10th ed. New York: Dover Publications, 1965.

46