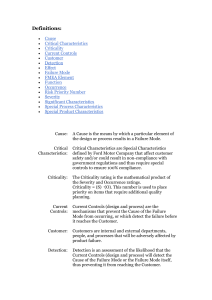

Failure mode and effects analysis of software-based
