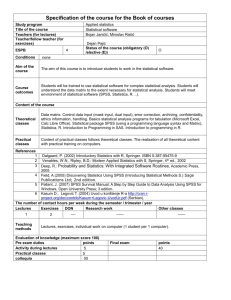

INTRODUCTION TO SPSS FOR WINDOWS

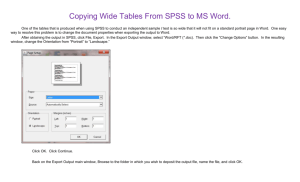

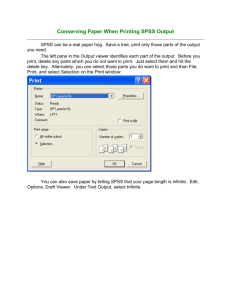

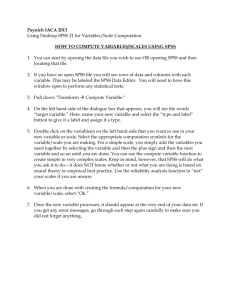

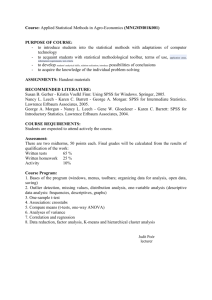

advertisement