Fluensee Draft Proposal

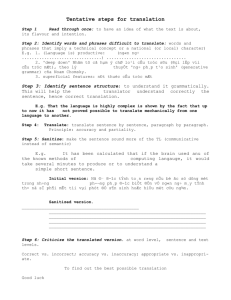

By Jo Agnitti, Faisal Anwar, Hui Soo Chae, Pranav Garg

This proposal describes Fluensee, a tool for real-time, environment-driven translation of foreign text. Fluensee is intended to take advantage of key features of today's mobile devices: their combination of extreme mobility with computing and networking power, and their built-in camera and imaging capability. The purpose of Fluensee is to help users interpret the words and phrases in their environments. Potential users include tourists and students visiting a foreign country, or even young schoolchildren learning to interpret the world around them for the first time. Fluensee aims to make translating and interpreting text a fast, always-available process by providing a tool that quickly interprets the words in any picture.

Background and Motivation

Fluensee is envisioned as a powerful companion for anyone trying to learn a new language or navigate a foreign environment. Imagine, for example, an American tourist visiting Japan. While he may have brushed up on "survival" Japanese on the flight to Tokyo, he will still need considerable help and effort to follow all of the signs and instructions he encounters in his new environment. Fluensee can support quicker learning of Japanese while also lessening the burden of interpreting those signs and instructions. The translation process itself will be simple and quick: on seeing a phrase to interpret, the user captures an image of it with the mobile device's camera, follows a short series of links, and then reads the translated text superimposed on the image.

There are several key features that will set Fluensee apart from other language assistance tools that exist today.
In particular, we anticipate that the following design principles, applied collectively, will make Fluensee a unique language learning application:
1) Embed context into the translation process by using the user's previous actions, information from the phone's GPS, and other sources to inform the interpretation of phrases.
2) Take advantage of Fluensee's mobile platform to make language learning an on-demand process, conducted within the environment where understanding the language is needed.
3) Provide the ability to translate longer phrases or texts quickly and seamlessly.
4) Connect the translation process to other learning activities. While a user may initially want to translate a phrase, he should also be able to learn more about concepts within that phrase or look up related information about a topic it mentions.
5) Embody a multi-sensory approach to language learning that not only helps with visual translation but also provides pronunciation support.

There is considerable research on the potential of mobile applications to support learning in general, and the language learning literature can also help us identify the possible impact of Fluensee and inform a design that maximizes this impact. Below, we review some of this literature in detail.

An important review of the pedagogical potential of mobile applications is provided by Naismith et al. (2004), who envision mobile learning as "a rich, collaborative and conversational experience, whether in classrooms, homes or the streets of a city." The report goes on to identify how mobile learning applications can be effective when viewed through the lens of several different pedagogical paradigms. The following paradigms are particularly applicable to the goals of Fluensee (Naismith et al., 2004, p. 3):
1) Situated learning: a model stating that "learning can be enhanced by ensuring that it takes place in an authentic context."
2) Informal and lifelong learning: a model that emphasizes "activities that support learning outside a dedicated learning environment or formal curriculum."

Sharples (2000, p. 178) connects situated and lifelong learning by arguing that lifelong learning requires situated environments where "there is a break in the flow of routine daily performance and the learner reflects on the current situation, resolves to address a problem, to share an idea, or to gain an understanding." Sharples also outlines several important features of any mobile learning environment and maps out a theory of lifelong learning assisted by technology. In particular, Sharples (2000, p. 180) defines learning (and personal learning in particular) as the "artful deployment of the environment, including its tools and resources, to solve problems and acquire new knowledge". Fluensee seeks to build on this theory by providing learning tools that turn any foreign environment into a learning opportunity that can be navigated and enriched with a handheld mobile device.

There is also literature on specific implementations of mobile devices as learning tools. Lai et al. (2007) discuss one mobile learning tool intended to support "experiential" learning (which relies primarily on making use of the environment). In addition to showing a positive effect of the tool on student learning outcomes, Lai et al. point to further literature on the potential value of mobile tools for education. Their review focuses on the power of mobile technologies to provide immediate answers and feedback to questions, and on the possibility of engaging learners "regardless of location and time" (Lai et al., 2007, p. 328; Lehner & Nosekabel, 2002; Curtis et al., 2002).
Functional Description of Fluensee Tool

Fluensee is an application that would help travelers understand basic text, such as road signs, by translating it from a foreign language into their native language. It assumes that the user takes a picture and then wants to find out what the text in the image means in his or her native language. The Fluensee app would have three major jobs:
1) Take a picture. If the picture is blurred or taken at an angle so that the text cannot be extracted, the app should prompt the user to retake it.
2) Extract the text from the picture seamlessly.
3) Use the Google translation API to automatically detect the language of the road sign and translate the text from that language into the user's native language.

Possible Architectures

1) Mobile + Server-Side Computing
a) Take a picture with the iPhone and enter the desired language (initially, perhaps only a couple of languages would be supported).
b) Send the picture over TCP to a server running OCR (text extraction) software.
c) The server's OCR software extracts the text and uses Google Translate to translate it into the user's native language.
d) The server sends the translated text back to the iPhone.
*** We will need some type of API or document format that can store both the picture and the translated text (in case we want to superimpose translations on the actual image).
2) Mobile Only
a) Take a picture with the iPhone and enter the desired language (initially, perhaps only a couple of languages would be supported).
b) Use native OCR software on the phone to extract the text.
c) Use the Google Translate API to translate the text into the desired language.
d) Display the translated text.

Note: Things to ponder
1) We need to find out whether we can use OCR software such as Tesseract or ABBYY's Mobile OCR SDK on the iPhone.
a) If so, what are the benefits of using them? On-device OCR would not need a network connection, but since we would still use the Google Translate API for translation, a network connection would be required anyway.
b) Related questions: would server-side computing be more helpful in terms of performance, and what would its downsides be?
c) If the user is relying on the server-mobile architecture, are we reasonably confident that he or she will be able to get online to translate the text? What if network access is different in the foreign country?
2) What would be an acceptable time for a user to wait for the translated text after taking the picture?
3) Should the user simply wait in the meantime, or should a spinning progress indicator be shown during processing?

The following screens sketch the application's screen flow and give a sense of what it should include:
1) A 57×57-pixel Fluensee icon displayed on the home screen.
2) An option to take a picture or browse the photo gallery for existing pictures:
a) Take a picture.
b) Photo gallery.
3) Enter the source and target languages to be used with the Google Translate API.
4) When the user taps Return or Go, the application shows the translated text.

Market Research

Of the 250 million cell phone users in the US, smartphone owners make up 10% (about 25 million). Research in Motion, HTC, and Palm lead the smartphone industry in market share, and Apple holds fourth place with 12% of smartphone sales in the US (1.3% worldwide). Among owners of major smartphone brands, iPhone users reported the highest satisfaction scores. The largest group of iPhone users is 25-34 years old (33%), while the smallest (4%) is 65 years old and older. Generator Research, a market research firm, predicts that Apple will own 40% of the global smartphone market by 2013. The firm links this growth in share to the advanced mobile services available through the iPhone and the App Store, drawing parallels with how Apple developed its digital music platform through the iPod and the iTunes Music Store.
ABI Research predicts that, also by 2013, 31% of all phones sold will be smartphones. According to a Nielsen report, 82% of iPhone users access the Internet through their phones, making them 5 times more likely to access the Internet than the average mobile consumer. There are more than 15,000 apps in the App Store, and iPhone users have so far downloaded 500 million of them, an average of around 4.76 million downloads every day. As of February 15, 2009, there are 1,500 educational apps in the iTunes App Store, and new ones are added every day. Following the success of Apple's App Store, other smartphone companies, for example Google (Android), Research in Motion (BlackBerry), Microsoft (Windows Mobile), and now Nokia, have developed similar virtual storefronts. App developers are challenged to create competitive and interesting apps that will lure consumers to these stores, and meaningful educational apps will help sustain Apple's success in the app store market.

Relevance to the Edlab Mission

There are several ways in which this project will advance the mission of the Edlab, which is to conceptualize and implement a fundamentally different educational sector. Mobile tools and pervasive computing are clearly a powerful new wave pushing against the old ways of the classroom. They will undoubtedly change the ways students learn by bringing networked resources to the palms of their hands whenever and wherever they may be learning. Many articles and resources already point to this shift (e.g., http://www.nytimes.com/2009/02/16/technology/16phone.html). This project addresses at least two important learning paradigms (situated learning and informal/lifelong learning) with tools that have traditionally not been used in the classroom. In this regard, we approach language learning in a new and innovative way, both within the classroom and outside it.
Fluensee is a substantial endeavor that will build the capacity of our group to create other innovative applications in the mobile device realm.

References

Curtis, M., Luchini, K., Bobrowsky, W., Quintana, C., & Soloway, E. (Eds.). (2002). Handheld use in K-12: A descriptive account. Los Alamitos, CA: IEEE Computer Society Press.
Lai, C.-H., Yang, J. C., Chen, F. C., Ho, C. W., & Chan, T. W. (2007). Affordances of mobile technologies for experiential learning: The interplay of technology and pedagogical practices. Journal of Computer Assisted Learning, 23(4), 326-337.
Lehner, F., & Nosekabel, H. (Eds.). (2002). The role of mobile devices in e-learning – first experience with a e-learning environment. Los Alamitos, CA: IEEE Computer Society Press.
Naismith, L., Lonsdale, P., Vavoula, G., & Sharples, M. (2004). Literature review in mobile technologies and learning. Bristol, UK: Futurelab.
Sharples, M. (2000). The design of personal mobile technologies for lifelong learning. Computers & Education, 34, 177-193.
Thornton, P. (2005). Using mobile phones in English education in Japan. Journal of Computer Assisted Learning, 21(3), 217-228.