8 - Roy Rosenzweig Center for History and New Media

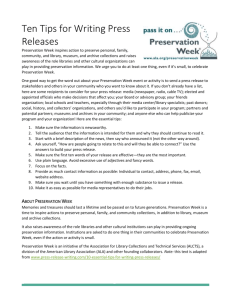
