Naval Systems Engineering Technical Review Handbook

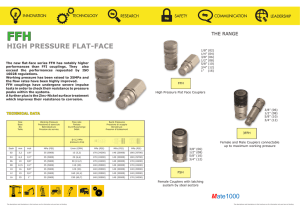
