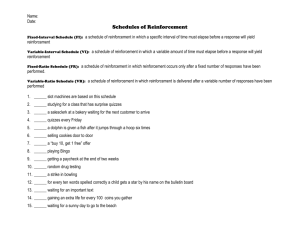

Neurocontroller Design with Rule Extracted by Using Genetic Based Machine Learning and Reinforcement Learning System

Hung-Ching Lu and Ta-Hsiung Hung
Department of Electrical Engineering, Tatung University
No. 40, Sec. 3, Chung Shan North Road, Taipei, 104 Taiwan, Republic of China
TEL: (886)-2-25925252 ext. 3470 re-ext 801; FAX: (886)-2-25941371

Abstract: In this paper, we integrate genetic based machine learning, reinforcement learning, and neural network techniques to develop a fully evolved neural network controller that needs no human operator, no supervisor, and no reference model. The primary extracted rules are called micro rules. The extracted rules are further refined by applying additional genetic search and reinforcement to reduce the number of micro rules. These secondary extracted rules are called macro rules. The process results in a smaller set of macro rules, which can be used to train a feedforward multilayer perceptron neurocontroller.

Key-Words: Genetic algorithm, Machine learning, Reinforcement learning, Neural network

1. Introduction
Many automatic control system designs have been used in industrial and military applications [1]. The basic objective of a controller is to provide the appropriate input to a plant to obtain the desired output. However, as the complexity of the system increases, the controller becomes very difficult to design and loses efficiency. An object is convenient to control when its input-output relations are known. For this reason, how to acquire control rules for an arbitrary system is an important question. We are interested in a control technique that needs no a priori knowledge of the plant and can generate the rules of the controller automatically. Here, we establish such a system, with control rules extracted by a genetic algorithm and reinforcement.
Because the knowledge already exists in the rule base, the job of the neural network is not to discover the required control strategies; rather, its job is to generalize a control law by implementing the existing rule base. The training rules are extracted by a genetic and reinforcement based machine learning system, which we call the GRBML system. In general, a genetic assisted reinforcement based machine learning system is a massively parallel rule-based system that continuously interacts with a surrounding environment (the plant) [2]. Such a system uses a genetic algorithm (GA) [3] to recombine and search for rules, and a global reward/punish algorithm to reinforce them. The learning mechanism uses an adaptive knowledge-based system that receives messages from the environment and transmits consequent actions to it. Combining genetic based machine learning with the reinforcement algorithm strengthens better rules and deletes worse ones. A system that employs this hybrid genetic search and reinforcement strategy does not require a yes/no supervisor or a reference model. The rules produced first are called micro rules. Refining the micro rules by genetic search and reinforcement reduces their number, and the resulting rules are called macro rules. Macro rules can be used to train a feedforward multilayer perceptron neural network controller. Alternatively, micro and macro rules can be used directly in a look-up table controller. Both controllers are presented to show their robustness against noise disturbance and plant parameter variations.

2. The Basic Concepts

Genetic Based Machine Learning System
Here, we use the standard version of error backpropagation to train a multilayer feedforward neural network with sigmoid activation functions. In this case, the binary rules and the associated input-output pairs are used directly as inputs and targets for the neural network [4].
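
As a rough illustration of using binary rules directly as inputs and targets, the following Python sketch turns rule strings into training pairs and fits a small sigmoid network by plain backpropagation. The two rules are the ones drawn in the rule memory of Fig.1. Everything else is an assumption made for illustration: the expansion of the `#` wildcard into both bit values, the helper names `expand`, `rules_to_pairs` and `MLP`, the hidden layer size, the learning rate, and the epoch count are not taken from the paper.

```python
import math
import random

def expand(condition):
    """Expand a ternary condition such as '10#' into every binary string it matches."""
    patterns = [""]
    for symbol in condition:
        choices = "01" if symbol == "#" else symbol
        patterns = [p + c for p in patterns for c in choices]
    return patterns

def rules_to_pairs(rules):
    """Turn (condition, action) rules into (input, target) lists of 0.0/1.0 values."""
    pairs = []
    for condition, action in rules:
        target = [float(b) for b in action]
        for bits in expand(condition):
            pairs.append(([float(b) for b in bits], target))
    return pairs

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class MLP:
    """One-hidden-layer sigmoid network trained by plain error backpropagation."""

    def __init__(self, n_in, n_hidden, n_out, rng):
        # Each weight row carries one extra entry that acts as the bias.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                   for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                   for _ in range(n_out)]

    def forward(self, x):
        xb = x + [1.0]
        hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = hidden + [1.0]
        out = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return hidden, out

    def train_step(self, x, target, lr):
        hidden, out = self.forward(x)
        xb, hb = x + [1.0], hidden + [1.0]
        # Output deltas: error times the sigmoid derivative.
        d_out = [(t - o) * o * (1.0 - o) for t, o in zip(target, out)]
        # Hidden deltas: output deltas propagated back through w2 (before w2 changes).
        d_hid = [h * (1.0 - h) * sum(d * self.w2[k][j] for k, d in enumerate(d_out))
                 for j, h in enumerate(hidden)]
        for k, d in enumerate(d_out):
            for j, v in enumerate(hb):
                self.w2[k][j] += lr * d * v
        for j, d in enumerate(d_hid):
            for i, v in enumerate(xb):
                self.w1[j][i] += lr * d * v

    def predict_bits(self, x):
        """Threshold the sigmoid outputs at 0.5 to recover a binary action."""
        return [1 if o >= 0.5 else 0 for o in self.forward(x)[1]]

rules = [("10#", "111"), ("00#", "000")]  # rule strings as drawn in Fig.1
pairs = rules_to_pairs(rules)
net = MLP(n_in=3, n_hidden=4, n_out=3, rng=random.Random(0))
for _ in range(3000):  # illustrative epoch count
    for x, t in pairs:
        net.train_step(x, t, lr=0.5)
```

After training, `net.predict_bits([1.0, 0.0, 1.0])` can be compared with the action of the rule whose condition matches that message. Expanding wildcards before training is only one possible encoding; a three-valued input encoding would be another.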
A classifier system is a machine learning system that learns syntactically simple string rules to guide its performance in an arbitrary environment. In general, a GBML system [5] consists of two main parts, an architecture and a learning algorithm, and can be divided into six components (see Fig.1).
(1) Detector unit: Senses the state of the environment and transforms it into a binary message.
(2) Classifier unit: Consists of simple binary structured rules that can be evolved and have the following form: IF (condition) THEN (action). The classifier action is taken if the associated condition matches the state of the environment.
(3) Credit unit: Every classifier is assigned a quantity called strength. The credit unit performs the credit assignment task and adjusts the strengths of the classifiers as a function of their performance during the learning process.
(4) GA: An exploration/optimization mechanism. By selecting individual classifiers with high strength to produce offspring, the GA complements the action of the credit algorithm.
(5) Effector unit: The trigger of the GBML system. It translates the binary-valued action of the classifier that best matches the environment state into a real-valued output, which in turn controls the environment. In effect, it acts like a D/A converter.
(6) Message list: Environmental messages are posted to a finite-length message list, where they may activate string rules called classifiers. When activated, a classifier posts a message to the message list. These messages may then invoke other classifiers, or they may cause an action to be taken through the system's action triggers, called effectors. In this way classifiers combine environmental cues and internal thoughts to determine what the system should do and think next.

Apportionment of Credit
Many GBML systems attempt to rank individual classifiers according to each classifier's role in achieving reward from the environment.
Although there are a number of ways of doing this, the most prevalent method incorporates what Holland has called a bucket brigade algorithm. This service economy contains two main components: an auction and a clearinghouse. When classifiers are matched, they do not directly post their messages. Instead, having its condition matched qualifies a classifier to participate in an activation auction. Each classifier maintains a record of its net worth, called its strength, and each matched classifier makes a bid proportional to its strength. In this way, rules that are highly fit are given preference over other rules. The auction permits appropriate classifiers to be selected to post their messages. Once a classifier is selected for activation, it must clear its payment through the clearinghouse. A matched and activated classifier sends its bid to those classifiers responsible for sending the messages that matched the bidding classifier's condition. The bid payment is divided in some manner among the matching classifiers. This division of payoff among contributing classifiers helps to ensure the formation of an appropriately sized subpopulation of rules. Thus different types of rules can cover different types of behavioral requirements without undue interspecies competition.

Reinforcement Learning
Reinforcement learning is the on-line learning of an input-output mapping through a process of trial and error designed to maximize a scalar performance index called a reinforcement signal. Although it cannot be claimed that this principle provides a complete model of biological behavior, its simplicity and common-sense approach have made it an influential learning rule. Indeed, we may rephrase Thorndike's law of effect to offer the following sensible definition of reinforcement learning [6-8]. If an action taken by a learning system is followed by a satisfactory state of affairs, then the tendency of the system to produce that particular action is strengthened or reinforced.
Otherwise, the tendency of the system to produce that action is weakened.

Genetic Algorithm
A genetic algorithm (GA) is an exploratory search and optimization procedure based on natural evolution and population genetics [9]. A GA uses probabilistic rules to make decisions. Typically, the GA starts with little or no knowledge of the correct solution and depends entirely on responses from an interacting environment and on its evolution operators to arrive at good solutions. A GA generally consists of three fundamental operators: reproduction, crossover, and mutation. A GA requires the optimization problem to be stated in the form of a cost function; its flow chart is shown in Fig.2.

3. Genetic Reinforcement Based Machine Learning System
The GRBML system improves on the GBML system by adding a reinforcement algorithm and a rule insertion system. The learning process in GRBML is off-line and is implemented by a rule extraction method. Rules are extracted by initializing the plant to a random initial state and then invoking the above reinforcement scheme and the GA until a sequence of rules drives the environment to the desired state.

Micro Rules Extraction
The GRBML micro rules extraction scheme can be described as follows:
(1) Initialize all parameters of GRBML with values supplied by the user, randomly generate a preset number of classifiers in the working population, and assign each of them an equal strength.
(2) Apply the plant dynamics to compute the state of the plant at the next discrete time step.
(3) Post the environmental message in binary format to GRBML.
(4) Apply the insertion operation at every time step during the first few reinforcement phases for every initial plant state, or after the GA has just been invoked.
(5) The effectors release the action of the classifier with the highest strength among the few classifiers "closest" to the environment message of the plant in the next time step.
(6) If the number of time steps since the last reinforcement was applied equals the reinforcement period, trigger a new reinforcement operation by proceeding to the next step; otherwise go back to step 2.
(7) Evaluate the fitness function of the sequence of classifiers selected by the effectors during the last reinforcement period.
(8) If the fitness of the above sequence meets a preset threshold, copy all the classifiers in that sequence to the retrieving population file. Then, if the required number of successful learning phases has been reached, stop the whole rule extraction process; otherwise reinitialize the environment to some random initial state and go to step 2.
(9) If it is time to run the GA, call the GA procedure.
(10) If the plant's state leaves a predefined region of the state space, re-initialize it to the previous initial state.
(11) If the number of reinforcements for a single initial plant state exceeds its upper bound, re-initialize the plant to a new random state.
(12) Go to step 2.

Macro Rules Retrievals and Rule Based Neural Controller
Although retrievals using the micro rules may provide acceptable results, the performance can be improved by further processing of the existing micro rules. The rules obtained by this processing are called macro rules (see Fig.3). We use the extracted rules to train a multilayer neural network. In general, backpropagation (BP) is used to train a multilayer feedforward neural network with sigmoid activation units; the flowchart is shown in Fig.4.

4. Conclusion
A novel machine learning system, the GRBML system, is introduced for rule extraction in temporal control problems. The system uses a hybrid of reinforcement learning and GA search. Based on the rules this system extracts, feedforward neural networks can be designed that successfully control highly nonlinear and noisy systems. The rules extracted by the system can be utilized in three ways. First, they can be used directly for retrievals in a table look-up fashion. Second, with further processing, the rules can be refined into a small number of macro rules and then also used in a table look-up method. Last, the macro rules themselves can be used to train feedforward neural networks. The neurocontroller (the macro rule-based neural controller) outperformed both the micro and the macro rule-based table look-up controllers in most of the above applications. Moreover, the neurocontrollers show more robustness when controlling non-nominal or noise corrupted plants.

Acknowledgment: This work was supported by the National Science Council of the Republic of China under contract NSC-91-2213-E036-010.

References
[1] W. T. Miller, R. S. Sutton, and P. J. Werbos, Neural Networks for Control. Cambridge, MA: MIT Press, 1990.
[2] J. H. Holland, "A mathematical framework for studying learning in classifier systems," Physica, vol. 22, pp. 307-311, 1986.
[3] D. E. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning. Reading, MA: Addison-Wesley, 1989.
[4] A. U. Levin and K. S. Narendra, "Control of nonlinear dynamical systems using neural networks: Controllability and stabilization," IEEE Trans. Neural Networks, vol. 4, pp. 192-206, 1993.
[5] A. Abdulaziz and M. Farsi, "Non-linear system identification and control based on neural and self-tuning control," Int. J. Contr., vol. 7, no. 6, pp. 297-307, 1993.
[6] M. D. Waltz and K. S. Fu, "A heuristic approach to reinforcement learning control systems," IEEE Trans. Automat. Contr., vol. 10, pp. 390-398, 1965.
[7] E. L. Thorndike, Animal Intelligence. Darien, CT: Hafner, 1991.
[8] R. S. Sutton, A. G. Barto, and R. J. Williams, "Reinforcement learning is direct adaptive optimal control," Proceedings of the American Control Conference, pp. 2143-2146, Boston, MA, 1991.
[9] K. Krishnakumar and D. E. Goldberg, "Control system optimization using genetic algorithm," J. Guidance, Contr. and Dynam., vol. 15, no. 3, pp. 735-740, 1992.

Fig.1 The learning GBML system interacts with its environment (blocks: Environment, Detectors, Message List, Rule Memory holding rules such as 10# : 111 and 00# : 000, Apportionment of Credit, Genetic Algorithm, Effectors; signals: Information, Payoff, Action)

Fig.2 The flow chart of GA (initialize the population G(0), evaluate the fitness, crossover, mutation, new population G(k+1), K=K+1, repeat until the goal is satisfied)

Fig.3 Synthesis of the macro rules (flowchart; recoverable labels include new initial random state, plant dynamics, criterion check, reinforcement algorithm, call GA, draft rules)

Fig.4 Neural network used by macro rule training (macro rules, train a feedforward neural network with back propagation, simulations and performance evaluations on a validation set; if the design is not acceptable, vary network size and/or learning parameters)