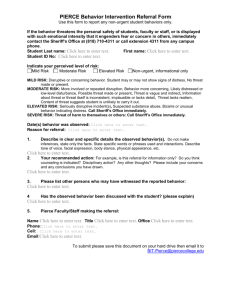

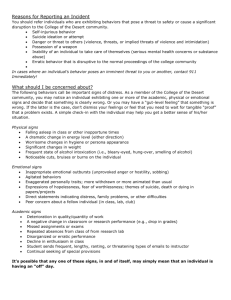

DOC - Columbus State University

advertisement

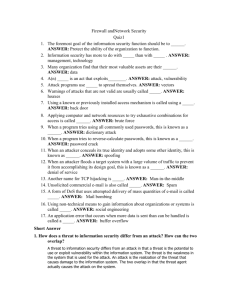

Using Threat Analysis and Legal Liability to Harden Software Applications
Going Beyond the Code

Charles Andrews
Student
Columbus State University
Columbus, GA USA
andrews_charles@colstate.edu

Since application security emerged as a major challenge facing modern computer systems, many papers have proposed solutions such as code review, binary analysis, and security training for developers. Today some of the most serious problems facing application security have to do with not understanding how applications can, intentionally and unintentionally, be used to steal, cheat, and invade privacy. In many cases no flaw in the software code is required in order to stage these attacks. The problem lies in a failure of the developers to think holistically about the real threats facing the use of the application. Instead of focusing purely on technical problems such as SQL injection, cross-site scripting, or buffer overflows, developers should also think about how the application might be used to compromise the security of the user, or of the company hosting the application, in ways that can be mitigated more easily by design than by additional application code. Threat analysis tools and techniques should be added to the application development process to ensure that developers look creatively at how their applications might be misused, misconfigured, and otherwise turned to the will of the attacker. Finally, we must take a close look at the possibility of financially penalizing software companies for security flaws, shifting the cost from consumers to producers and giving producers a financial incentive to build better software.

Keywords: application; security; threat analysis; legal; liability

I. INTRODUCTION

In Ross Anderson's book "Security Engineering", Chapter 23, titled "The Bleeding Edge", identifies areas of application security that we are only beginning to understand and address.
Primarily, Anderson implies that in Web 2.0 and gaming applications we have an entirely new class of security issues related to cheating, privacy violations, and identity theft. Many of these vulnerabilities come not from poor coding, but from an inability of the developers to think about the application from an attacker's perspective. The applications are manipulated and twisted to the will of the attacker using, in many cases, publicly available interfaces and features. Unfortunately, Anderson offers very little in the way of solutions other than those already offered by the general community, but he does a very good job of outlining the current state of the problem.

Insofar as gaming is concerned, Anderson implies that companies such as Blizzard have found a very good model in "World of Warcraft". This model provides part of the game as an online service, which generates a constant revenue stream and also allows the company to detect cheaters and prevent unauthorized duplication of its software (since the software is basically useless without the service).

With regard to web-based applications such as eBay, Google, Facebook, and Friendster, Anderson identifies what companies have done to address specific privacy concerns, but points out that this is a persistent problem and that the focus today is more on mitigation than prevention. Companies still seem to be more interested in releasing a steady stream of new features than in spending time identifying ways in which these new features might be exploited.

This paper will discuss the current state of the problem as well as suggest some possible solutions. The "Related Work" section outlines some other papers that have addressed these issues. In the "Problem Statement" section we will examine the main problem proposed by Anderson and condense it down to something that can be addressed in the "Proposed Solution" section that follows.
Finally, the "Conclusion" section will identify possible weaknesses in the proposed solution and areas for further study.

II. RELATED WORK

In the paper "Threat Modeling: Diving Into the Deep End", several Ford Motor Company employees outline how they implemented a threat modeling program based on Microsoft's own published processes and tools [1]. Ford was interested in looking at the costs, benefits, and outcomes resulting from implementing threat analysis and modeling in its organization. By including customers in the process, threat modeling was found to make the handling of security threats more methodical and less subject to personal prejudices and opinions. Ford was able to identify and quantify threats and to make business decisions based on the information obtained in the threat modeling sessions.

On the subject of legal liability, Ken McGee wrote an article called "Suing Your Software" in which he argues that, for a variety of reasons, the age of software publishers being shielded from legal liability for their software is nearing an end [2]. Mr. McGee gives some history on other liability cases involving corporations and explains why software companies have, until now, enjoyed some freedom from lawsuits regarding their software. As security incidents increase and customers begin to face lawsuits themselves over data loss and privacy concerns, it may be time for the companies that produce buggy software to pay for their role in the incident.

Both of the works referenced relate to the original work in the sense that they address some of the same security concerns that Ross Anderson presented. However, it should be noted that these papers propose (or predict) solutions and provide details on how those solutions addressed their security concerns.

III.
PROBLEM STATEMENT

In essence, the problem is that applications, and the endless stream of added features that come with them, always face security threats arising from poor coding, poor design, or a general failure to think of all the ways that the intended behavior and purpose of the application can be exploited by attackers. To date, most of the effort has gone into encouraging secure development methodologies for the technical tasks around writing application code. Examples include application scanners, application firewalls, penetration testing, static code analysis, and developer training. What is needed (in addition to those practices) is a way to ensure that companies and developers take the time to fully understand how their applications might be used for nefarious purposes.

The burden of insecure software also falls largely on the customer and/or user. Applying updates and patches to production systems is a very risky endeavor, and not applying them can be equally risky (or worse). Given the large number of active applications in any given business, the task of keeping an inventory of installed software, receiving information on updates, scheduling downtime, applying patches, and testing that applications still function can easily be a full-time job for information technology personnel.

IV. PROPOSED SOLUTION

The proposed solution comes in several phases. First, companies need to incorporate threat modeling into the development process, preferably at the design phase. Threat modeling will allow them not only to address the security of the code but also to think of all the ways their software could be used to compromise user privacy, attack other systems, or even alter the intended functionality of their applications. Threat modeling can address many of the issues in Anderson's chapter, including dealing with cheaters in video games as well as in applications such as Facebook, eBay, and Google.
If companies would take the time to investigate more aggressively how their products could be abused, it might not take a public relations embarrassment to get these issues addressed. In short, threat modeling identifies the assets, threats, and costs associated with potential vulnerabilities so that developers can focus on the areas of the application where effort will yield the most security for the least cost.

There are plenty of free tools for threat modeling, including Microsoft's Threat Analysis and Modeling Tool [4] and the CORAS Risk Assessment Platform [5]. Both of these tools allow the user to identify assets, vulnerabilities, data flow, call flow, and attack surface, among other metrics. Once all the relevant data has been entered, developers can get a DREAD (damage, reproducibility, exploitability, affected users, discoverability) rating for each identified threat. Using these ratings, developers can prioritize threats and address them in a sensible order.

For example, there is the instance of Facebook allowing search features to access private data, something most users may not have been aware was happening. Most users were not even aware of the new feature or of how to disable it. As a result, there was a huge exposure of personal data before users rebelled and forced Facebook to change its default to an opt-in approach [3]. Moving to an opt-in approach makes the default setting more secure, resulting in less surface area for attackers. In this case, it seems obvious that if Facebook had simply thought more about the threat posed by users not reading about the new feature and not making the appropriate change to their profiles, it would have defaulted to a more secure setting.

The overall purpose of threat modeling is to force the developers to get out of their usual defensive mode and spend some time pretending to be an attacker.
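As a rough illustration of the DREAD-based prioritization described in this section, the following Python sketch rates each threat from 1 to 10 on the five DREAD categories, averages the ratings, and sorts the threats from highest to lowest score. The threat names and ratings are invented for illustration, and the simple average is an assumption of this sketch; it is not necessarily how the Microsoft or CORAS tools compute their ratings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    """One identified threat with its five DREAD ratings (1 = low, 10 = high)."""
    name: str
    damage: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def dread_score(self) -> float:
        """Average the five ratings; the plain mean is an assumption of this sketch."""
        ratings = (self.damage, self.reproducibility, self.exploitability,
                   self.affected_users, self.discoverability)
        return sum(ratings) / len(ratings)


def prioritize(threats):
    """Return the threats ordered from highest to lowest DREAD score."""
    return sorted(threats, key=lambda t: t.dread_score(), reverse=True)


# Hypothetical threats and ratings, invented purely for illustration.
threats = [
    Threat("Verbose error page leaks server version", 3, 8, 4, 2, 6),
    Threat("Search feature exposes private profile data", 7, 10, 9, 10, 8),
    Threat("Game client accepts forged score updates", 6, 7, 5, 5, 4),
]

for threat in prioritize(threats):
    print(f"{threat.dread_score():4.1f}  {threat.name}")
```

A ranked list like this gives a team a starting order for mitigation work; in practice the ratings themselves would come out of the threat modeling sessions rather than from a single developer's guess.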
As the developers of the application, they are in the best position to understand its weaknesses and how the application could be used to compromise users or the company hosting the application. This type of "role playing" can yield huge benefits, as the developers can identify otherwise unknown attacks so that the software can be designed to resist them. Threat modeling can yield actionable, quantitative data which can be used to prioritize further changes, and it should be performed at several points in the development process since applications are bound to suffer from scope creep.

In addition, application developers need to have a financial incentive to make software more secure. As it stands, most of the costs of dealing with poorly written software are passed to the customer and/or user.

Lastly, companies must have a financial incentive to protect application data. Obviously, no company wants to have a security incident, but with the pressure on companies to constantly release new features before the competition, poor security can be viewed as an acceptable business risk, since most license agreements shield software developers from damages relating to use. The proposed solution is to force software developers to pay for the vulnerabilities in their code. As stated by security expert Bruce Schneier: "Information security isn't a technological problem. It's an economic problem" [6]. History has shown that the public relations damage from security vulnerabilities does not appear to be a deterrent, given the large number of vulnerabilities in software such as Microsoft Windows, Internet Explorer, Adobe Reader, and even Apple OS X. Despite vendors' best efforts, including Microsoft's Trustworthy Computing initiative, there seem to be more security issues than ever [7].
While companies have done a better job of patching them, the fact remains that patching the multitude of applications and operating systems within an organization is a cost that really should be incurred by those who created the problem, not by customers. Only when those costs are charged directly to the companies producing the software will the quality of software improve.

V. CONCLUSION

Of course, implementing threat modeling and legal liability for software flaws will not eliminate all security issues. The threat modeling process will only be as thorough and as good as those performing the threat analysis. Since a lot of what goes into a threat analysis is simply creative thinking about avenues for attack, there are bound to be oversights if those performing the analysis are not as creative or crafty as the attackers. Certainly, if threat analysis and modeling are incorporated into the development process, there need to be clear rules on when these sessions will occur and how many new features have to be added to the product before a new threat modeling session is warranted.

It is also imperative that developer buy-in is secured before embarking on any threat modeling process. If developers feel this is simply another burden placed on them in addition to other security measures such as peer code review, security training, source code and binary analysis, and third-party scanning tools such as HP's WebInspect [9] or IBM's AppScan tool [8], they are unlikely to embrace it. Developers need to understand that the purpose of threat modeling is not simply to stack on more work but to ensure that their time is spent focusing on the most critical threats. If it is made clear that threat modeling will save them from potential embarrassment as well as make the best use of their time, they should find it an easier pill to swallow.

As for legal liability, there are likely to be unintended consequences of such an approach.
For starters, if developers could be held liable for damages, it may stifle innovation as developers decide it might not be worth the risk to release potentially vulnerable code. Also, businesses have a long history of passing costs to their customers. If businesses are forced to pay for security issues, they will likely raise the prices of their products to compensate. The result could be higher product prices, but if that also yields more secure code, the trade-off might be a wash.

Another unintended consequence of making developers liable for security vulnerabilities is the effect this might have on open source software and freeware. If software were released under the GPLv2, for example, would section 12 (which disclaims the developers' liability for damages) be voided as a result of the new legislation [10]? If this were to occur, would it kill the open source and free software marketplaces? It is difficult to believe people would spend valuable time working on software that might get them into serious legal trouble later. And if open source and/or freeware applications were excluded, it would create a very clear differentiator between open source and commercial software. The mere fact that commercial software came with a "warranty" of sorts would give it a clear advantage in the eyes of consumers, which could also potentially collapse the open source market.

In conclusion, there are clearly some details that need to be addressed before these solutions are put into practice. As in the case of Ford Motor Company and Microsoft Corporation (mentioned in "Related Work"), some companies are already implementing threat modeling as standard practice, and it is hoped that these practices will be adopted by other software companies. However, software security liability clearly needs to be investigated further to understand the implications of such a strategy and to ensure it has the intended effect.

REFERENCES

[1] J.A. Ingalsbe, L.
Kunimatsu, T. Baeten. "Threat modeling: diving into the deep end", IEEE Software, Jan/Feb 2008, Vol. 25, Issue 1, pp. 28-34.
[2] K. McGee. "Suing your software", MIT Sloan Management Review, Vol. 48, No. 2, Winter 2007.
[3] Wired.com. http://www.wired.com/threatlevel/2007/06/facebookprivat/, Retrieved 2009-11-15.
[4] Microsoft Corporation. http://www.microsoft.com/downloads/details.aspx?FamilyId=598880789DAF-4E96-B7D1-944703479451&displaylang=en, Retrieved 2009-11-15.
[5] Geeknet, Inc. http://sourceforge.net/projects/coras/, Retrieved 2009-11-15.
[6] B. Schneier. http://www.schneier.com/blog/archives/2004/11/computer_securi.html, Retrieved 2009-11-16.
[7] Microsoft Corporation. http://www.microsoft.com/mscorp/twc/default.mspx, Retrieved 2009-11-16.
[8] International Business Machines, Inc. http://www01.ibm.com/software/rational/offerings/websecurity/, Retrieved 2009-11-16.
[9] Hewlett Packard Corporation. https://h10078.www1.hp.com/cda/hpms/display/main/hpms_content.jsp?zn=bt b&cp=1-11-201-200%5E9570_4000_100__, Retrieved 2009-11-17.
[10] Free Software Foundation. http://www.gnu.org/licenses/gpl-2.0.html, Retrieved 2009-11-17.