AIED-99-Believable-layer

Integrating a Believable Layer into Traditional ITS

Sassine Abou-Jaoude

Claude Frasson

Computer Science Department

Université de Montréal

C.P. 6128, Succ. Centre-Ville

Montréal, Quebec Canada

{jaoude, frasson}@iro.umontreal.ca

Abstract. Adding believability and humanism to software systems is a subject that many researchers in Artificial Intelligence are working on. Given our interest in Intelligent Tutoring Systems (ITS), we propose adding a believable layer to traditional ITS. This layer would act as a user interface, mediating between the human user and the system. In order to achieve acceptable believability levels, we based our work on an emotional agent platform that was introduced in previous work. Our ultimate aim is to study the effect, from a user's perspective, of adding humanism to software systems that deal with tutoring.

1. Introduction

Our work mainly revolves around research and development on Intelligent Tutoring Systems and how to make these systems more efficient. Work that aims at increasing the efficiency of ITS is mainly conducted along the following lines:

Student Model. Building a more representative student model [1] that projects a thorough image of the user, so that the system's adaptation better reflects the user's actual status.

Curriculum. Constructing a more efficient curriculum [2] module that works as a skeleton for teaching subjects.

Tutorial strategies. Working on different cooperative tutorial strategies [3], through the improvement of existing ones, the introduction of new ones, such as the troublemaker [4], and the possibility of switching strategies in real-time interactions [5].

It was a natural progressive step, for us, to start exploring the integration of

humanism and believability in ITS, since we highly believe, that it will increase the

system's performance. For this, we suggest the addition of a believable layer in traditional

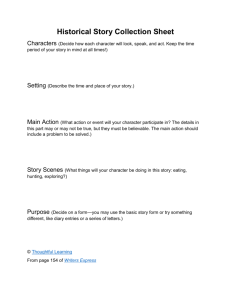

systems in order to create, what we call a believable ITS (see fig. 1).

Figure 1: Introduction of the believable layer. [Diagram labels: USER, Believability Layer, Traditional ITS (Intelligent Tutoring System), Believable ITS, traditional communication route.]

This layer will play a mediating role between the user, an entity that is strongly influenced by humanism in its normal behavior, and the system, which is normally not capable of interpreting humanism on its own.

Ultimately, we would say that our believable agent platform is complete if a user being tested by a system that he cannot see directly is unable to tell whether it is a human or a machine (i.e., the platform would pass a form of the Turing test).

Adding believability means introducing human aspects into the software agent, such as personality, emotions, and randomness in behavior.

The nature of randomness itself makes it a relatively achievable goal. One way to achieve randomness is to use well-known software procedures that produce random output mapped onto the sample behavioral space of the agent. Moreover, since no rules or standards govern randomness, any proposal suggested at different levels may be considered an additional source of randomness in the system. For character and emotions, the integration is not as evident. They have been the subject of much research, and the dividing line between these two entities is not clear either.

Therefore, most researchers have approached the emotional aspect and the personality traits as one entity. Among these research groups, we would like to mention the contributions of the following people: C. Elliot and colleagues, for their work on the Affective Reasoner [6], a platform for emotions in multimedia; J. Bates and colleagues at Carnegie Mellon University, for their work on agents with personalities and emotions [7] and the role of the latter in ensuring believability; Barbara Hayes-Roth and her team at Stanford, for their work on the virtual theater [8] and agent improvisation; and Pattie Maes and her team at MIT [9], for their work on virtual worlds and the emotional experience in simulated worlds.

As for us, we believe, for reasons presented in the next section (section 2), that emotions might be the major entity in creating believability, and that integrating a believable layer in ITS would be almost achieved by adding emotional platforms to the main agents in this ITS. Section 3 introduces our emotional agent platform, the emotional status E, and the computational model that allows the agent to react emotionally in real time to the different events that might take place in its micro-world (in our case the ITS). Section 4 is a case study in which the theory proposed in section 3 is applied to a student model in a competitive learning environment (CLE). Finally, section 5 concludes by presenting our future work and the main questions that we are planning to address in our future experiments.

2. Believable layer vs. Emotions

We use the term "emotions" in its widest sense. An emotion is not simply a feeling that an agent X might have toward an agent Y (e.g., hate, like, etc.). It is, rather, an encompassing state in which an entity (human or agent, in our case) finds itself and which influences its behavior. Instead of limiting our choice to the classical definition of emotions, we widened it to include many types, based mainly on previous work by Ortony and Elliot [10, 11], such as well-being (e.g., joy, distress), prospect-based (e.g., hope, fear), and attraction (e.g., liking, disliking).

We also introduced in previous work [12] the notion of stable emotions as a means of defining personality traits, and hence personality.

Although the interpretation of emotions differs among cultures, backgrounds, and even individuals, what is certain is that humans are the most reliable source we know for this interpretation. This human ability has always given human teachers an advantage over their machine counterparts [12].

In ITS, emotions enhance believability because they permit the system to simulate different aspects that the user had previously encountered only in a human teacher. Among these aspects we mention the following:

1) The engagement of the user, which is achieved when the system takes on different personalities that the user would be interested in discovering.

2) The fostering of enthusiasm for the domain, which is achieved through the system's capacity to show its own interest in the subject through the variations of its emotions.

3) The capacity to show positive emotions in response to the user's positive performances.

We believe that the wide definition and the flexible classification we gave to emotions allow us to treat believable as analogous to emotional. To us, creating a believable layer is in fact building an emotional agent platform. Yet we are fully aware that the ultimate measure of the soundness of our choice will be provided by the final results of the system.

In ITS in particular, adding a believable layer (as fig. 1 shows) in fact comes down to adding emotional layers to the agents that might exist in the simulated micro-world of the ITS (e.g., a tutor, a troublemaker, a companion, a student model, etc.). The existence of these actors depends mainly on the tutorial strategy of the system.

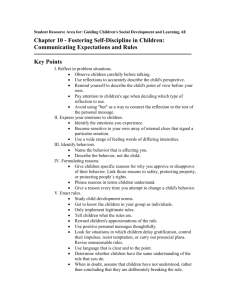

Figure 2: Student model with layers added chronologically. [Layer 1: knowledge level and cognitive model, the traditional layer that involves the knowledge level, introduced in 1996 [1]. Layer 2: learning profile, the layer that involves the learning preferences, introduced in 1998 [5]. Layer 3: emotions and believability, the believable layer that includes emotions, personality, and randomness in behavior, introduced here.]

The student model is one of the entities that exist in many strategies; therefore, for illustration purposes, figure 2 shows a diagram of our student model and its chronological evolution. As figure 2 shows, the final layer is the emotional layer. This layer will be the means of producing believability in the system as a whole.

3. The emotional agent platform

Lately our research has concentrated on creating a computational system for emotions, a system that is used to create, calculate, and constantly update the emotional status of the agent while it interacts with its environment. This section presents the general computational model for an emotional platform, and the next section (section 4) presents a case study in which the theory is implemented in a particular scenario where an emotional agent reacts in a competitive learning environment.

3.1 The emotional couple

The basic entity in our system is the emotional couple ei. An emotional couple is a pair of emotions that belong to the same group [11] but contradict each other. If Ei1 and Ei2 are two emotions that satisfy this condition, then we can write:

ei = [Ei1/Ei2] is an emotional couple made of these two emotions.

As an example, the two emotions Joy and Distress belong to the same group (appraisal of a situation) and have contradictory, mutually exclusive interpretations (Joy is being pleased about an event, while Distress is being displeased). Joy and Distress therefore make an emotional couple e1 = [Joy/Distress].

The value of an emotional couple is a real number that varies between -1 and +1 inclusive. A value of +1 means that the left emotion in the couple is being experienced to a maximum. A value of -1 means that the emotion on the right side of the couple is being experienced to a maximum. A value of zero means that the agent is indifferent with respect to this group of emotions. Most of the time, the value of the couple lies somewhere between these limit values.

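Formally we would have:

$$e_i = [E_{i1}/E_{i2}] \in [-1, 1]$$

where, for all $i$:

$$e_i = +1 \Rightarrow E_{i1} = +1 \text{ and } E_{i2} = -1, \qquad e_i = -1 \Rightarrow E_{i1} = -1 \text{ and } E_{i2} = +1, \qquad e_i = 0 \Rightarrow E_{i1} = E_{i2} = 0$$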
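To make this convention concrete, the following minimal Python sketch represents one emotional couple. The class name `EmotionalCouple`, the helper `clamp`, and the method names are illustrative assumptions, not part of the platform described here. In particular, reading the two component emotions as Ei1 = ei and Ei2 = -ei for intermediate values is an assumption, chosen only because it agrees with the three cases stated formally (+1, -1, and 0).

```python
from dataclasses import dataclass


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a couple value inside the closed interval [-1, +1]."""
    return max(low, min(high, value))


@dataclass
class EmotionalCouple:
    """One couple e_i = [left/right], for example [Joy/Distress]."""

    left: str           # emotion experienced to a maximum at +1
    right: str          # emotion experienced to a maximum at -1
    value: float = 0.0  # 0 means the agent is indifferent

    def __post_init__(self) -> None:
        # Out-of-range values are clipped rather than rejected (assumption).
        self.value = clamp(self.value)

    def dominant(self) -> str:
        """Name the emotion currently experienced, or 'indifferent' at 0."""
        if self.value > 0:
            return self.left
        if self.value < 0:
            return self.right
        return "indifferent"

    def components(self) -> tuple[float, float]:
        """Return (E_i1, E_i2), assuming E_i1 = e_i and E_i2 = -e_i."""
        return (self.value, -self.value)


e1 = EmotionalCouple("Joy", "Distress", 0.4)
print(e1.dominant(), e1.components())
```

Storing a single signed number per couple, rather than two separate intensities, mirrors the definition above: the two emotions of a couple can never be experienced at the same time.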

3.2 The emotional status

The emotional status E is the set of all the emotional couples that an agent has. The emotional status is a function of the time t; however, its variation with t is discrete rather than continuous, since it changes only when an event occurs:

$$E = [e_1, e_2, \dots, e_i, \dots, e_n], \quad \text{where } i, n \in \mathbb{N}$$

The new emotional status E' is computed every time an event takes place. It is a function of the previous status E and of the set of particularities P(s) that the context possesses (see section 4). These particularities are external factors that influence the emotional status yet belong to entities other than the emotional layer.

As an example, the performance of the user in an ITS is an external factor that influences the emotional status but is not a part of it. We define P(s) as the set of these factors pi; therefore we have:

$$P(s) = [p_1, p_2, \dots, p_i, \dots, p_m], \quad \text{where } i, m \in \mathbb{N}$$

Finally we can write:

$$E' = f\{E, P(s)\}$$

3.3 The computational matrix

In order to compute every element ei' of E', we create a computational matrix M. M has elements aij that determine how much weight each constituent carries in the emotional couple ei'. The choice of the values of aij is a critical issue, since it is the core of the whole emotional paradigm. The next subsection (section 3.4) presents the experimental procedure by which these weights in the matrix M are determined. For the moment, we know that M has the following dimensions:

$$|M| = |E| \times |E + P(s)| = n \times (n + m)$$

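And it has the following shape, with one row per new couple ei' of E' and one column per element of E and of P(s):

$$
M =
\begin{array}{c|ccc|ccc}
 & e_1 & \cdots & e_n & p_1 & \cdots & p_m \\
\hline
e_1' & a_{11} & \cdots & a_{1n} & a_{1,n+1} & \cdots & a_{1,n+m} \\
\vdots & \vdots & a_{ij} & \vdots & a_{i,n+1} & \ddots & \vdots \\
e_n' & a_{n1} & \cdots & a_{nn} & a_{n,n+1} & \cdots & a_{n,n+m}
\end{array}
$$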

Now we can calculate E' as the set of the ei', where:

$$e_i' = \sum_{j=1}^{n} a_{ij}\, e_j + \sum_{j=n+1}^{n+m} a_{ij}\, p_{j-n}$$
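The update rule can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the function name `update_status` and the list-of-lists layout of M are hypothetical, and the weights in the usage example are made-up numbers, not experimental results. The sketch does not clip the result to [-1, +1]; whether and how that should be done is not specified here.

```python
from typing import List, Sequence


def update_status(
    M: Sequence[Sequence[float]],
    E: Sequence[float],
    P: Sequence[float],
) -> List[float]:
    """Return E', where each e_i' is the weighted sum of row i of M
    over the previous couples E followed by the particularities P."""
    inputs = list(E) + list(P)  # column order of M: e_1..e_n, p_1..p_m
    if len(M) != len(E):
        raise ValueError("M needs one row per emotional couple")
    for row in M:
        if len(row) != len(inputs):
            raise ValueError("each row of M needs n + m weights")
    return [sum(a * x for a, x in zip(row, inputs)) for row in M]


# Hypothetical example with n = 2 couples and m = 1 particularity.
M = [
    [0.5, 0.0, 0.5],    # weights producing e_1'
    [0.0, 1.0, -0.25],  # weights producing e_2'
]
E = [0.2, -0.4]
P = [1.0]
print(update_status(M, E, P))
```

Concatenating E and P into one input vector matches the column layout of M, so a single pass over each row covers both sums of the formula.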

Figure 3: Block diagram of the backtrack test that allows the determination of the weights aij in M. [Diagram steps: provide a thorough explanation of ei, pi, and the values allowed; test the user, tolerating misunderstanding in order to produce a level of humanism and indecision (pass or fail); provide the initial emotional status E; events; user provides E'; register E'; repeat with E' or quit.]

3.4 The weight factor and the procedure

Once P(s) has been determined, we proceed to determine the weights aij experimentally. The experiment is a backtrack procedure (see fig. 3 for details). Normally E and M are known and we calculate E'. At this level, however, M is the target. Human users help determine M. In detail, users are given explanations about the system's entities (ei, pi, and permissible values), and then they are tested to see whether they have assimilated their meanings. In this test, as figure 3 suggests, we tolerate a certain level of misunderstanding in order to simulate human randomness and indecision. Starting with E, the user is asked to provide the new E' following a certain event, according to the user's best judgement. Note that having humans make the choices is exactly what we want in our system, since we aim at creating systems that imitate humans. Repeating this procedure (see fig. 3) allows us to create enough equations to solve for the aij, a system of n x (n+m) equations in the n x (n+m) unknown entries of M.
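As a rough sketch of the solving step, the Python code below recovers M from recorded answers by Gauss-Jordan elimination with partial pivoting. It rests on simplifying assumptions that are not part of the procedure described here: exactly n+m recorded events, linearly independent input vectors, and answers taken at face value. The function name `solve_weights` and the data layout are hypothetical. Since some misunderstanding is tolerated in the answers, a least-squares fit over more events may be more appropriate in practice; it is not shown.

```python
from typing import List, Sequence


def solve_weights(
    inputs: Sequence[Sequence[float]],
    outputs: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Recover M (n rows of n+m weights) from n+m recorded events.

    inputs[k]  = [e_1..e_n, p_1..p_m] before event k
    outputs[k] = [e_1'..e_n'] provided by the user after event k
    """
    size = len(inputs)  # must equal n + m
    if len(outputs) != size or any(len(x) != size for x in inputs):
        raise ValueError("need exactly n+m events, each with n+m inputs")
    n = len(outputs[0])
    # Augmented matrix [X | Y]; reducing X to the identity leaves M^T in Y.
    aug = [
        [float(v) for v in x] + [float(v) for v in y]
        for x, y in zip(inputs, outputs)
    ]
    for col in range(size):
        # Partial pivoting: use the largest remaining entry in this column.
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("events are not linearly independent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(size):
            if r != col and aug[r][col] != 0.0:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    # Solution row j holds column j of M, so transpose while copying out.
    return [[aug[j][size + i] for j in range(size)] for i in range(n)]


# Hypothetical data: n = 2 couples, m = 1 particularity, 3 recorded events.
events_in = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.2, -0.4, 1.0]]
events_out = [[0.5, 0.0], [0.0, 1.0], [0.6, -0.65]]
print(solve_weights(events_in, events_out))
```

Each recorded event contributes one equation per emotional couple, which is why n+m independent events are enough to fix all n x (n+m) weights in this idealized setting.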

4. Case Study: An emotional student model in a competitive learning environment (CLE)

To test all this, we proceeded to add an emotional layer to the traditional student model (see figure 2) in a simulated learning environment. There are three main actors in the system: the emotional agent in question, the troublemaker (a special actor who sometimes misleads the student for pedagogical purposes [5]) in the role of a classmate, and the tutor. The scenario is as follows: the tutor asks both students a question of value V. The troublemaker provides the tutor with an answer Rpt, of which the emotional agent has no knowledge. The troublemaker then proposes an answer Rpe to the emotional agent. At this point, the emotional agent has to provide his answer Ret to the tutor. Once this exchange is finished, the emotional agent has access to Rpt, and his emotional status is then recalculated. Figure 3 below shows the environment of the emotional agent, with the different variables that enter into the calculation of E.

Figure 3: Simulation of an emotional layer added to the user model in a CLE. [Diagram labels: TUTOR, asking a question with value V in {1, 2, 3}; TROUBLEMAKER (1- performance Pp), giving its answer Rpt to the tutor and its answer Rpe to the emotional agent; EMOTIONAL AGENT (1- emotional status E, 2- deception degree dd, 3- perceived performance (Pp)e, 4- performance Pe), giving its answer Ret to the tutor.]

The particularities (i.e., the pi) of the system that affect the calculation of the emotional status should be determined experimentally based on human judgements, the way M was. In our system we identified three main factors that might affect E: the performance of the emotional agent Pe, the performance of the troublemaker as perceived by the emotional agent (Pp)e, and the level of disappointment, also known as the deception degree dd.

4.1 Value of question vs. Value of answer

To simulate the fact that different goals of the agent might have different priorities, we propose adding a weight V to each question asked by the tutor. The answers have a value of -1 for a wrong answer and +1 for a correct one; therefore:

$$V \in \{1, 2, 3\} \quad \text{and} \quad R_{ij} \in \{-1, +1\}, \text{ where } +1 = \text{correct answer and } -1 = \text{wrong answer}$$

4.2 The performance of the agent and the perceived performance

Pe is the performance of the believable agent himself. This performance is computed in real time, taking into consideration its previous value, the value of the question, and the value of the answer:

$$P_e' = \left(1 - \frac{V}{8}\right) P_e + \frac{V}{8}\, R_{et}$$

The factor 8 is also an experimental value, determined from a sample of human users and rounded in the formula for Pe'.
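A short worked example may help to see how this formula behaves over successive questions. The Python sketch below is illustrative only: the function name `update_performance`, the input checks, and the starting value Pe = 0 are assumptions.

```python
def update_performance(p_e: float, v: int, r_et: int) -> float:
    """Return P_e' = (1 - V/8) * P_e + (V/8) * R_et."""
    if v not in (1, 2, 3):
        raise ValueError("V must be 1, 2 or 3")
    if r_et not in (-1, 1):
        raise ValueError("R_et must be -1 (wrong) or +1 (correct)")
    weight = v / 8  # share of the new answer in the updated performance
    return (1 - weight) * p_e + weight * r_et


p = 0.0                              # assumed starting performance
p = update_performance(p, 2, +1)     # correct answer, question of value 2
print(p)                             # 0.25
p = update_performance(p, 3, -1)     # wrong answer, question of value 3
print(p)                             # -0.21875
```

Because V/8 is at most 3/8, each new answer moves Pe only part of the way toward -1 or +1, so Pe stays within [-1, +1] as long as it starts there.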

(Pp)e is the performance of the troublemaker as perceived by the believable agent. Naturally, the emotions of the agent play a significant role in the calculation of this entity. We propose the following:

(Pp)'e = f { (Pp)e , Pp , E(t) }

Experimentally, we also managed to approximate this function by the following:

1

1

(Pp)'e = [ (Pp)e + Pp + e13 ] (see table 2, for e13)

4

4

4.4 The deception degree

We define the deception degree dd as the degree of disappointment that the agent experiences in his interaction with the troublemaker. The value of dd also varies between -1 and +1. After explaining the factor and its extreme values (i.e., -1 and +1), we provided the users with different combinations of Rpt, Rpe, Ret, and C and asked them to provide values for dd based on their own judgement.

Rpt

Rpe

Ret

C*

dd

-1

0

1

0

1

0

1

0

1

-0.4

-0.5

-0.2

**

0.4

-1

+1

-1

-1

+1

+1

Rpt

Rpe

Ret

C

dd

-1

0

1

0

1

0

1

0

1

-0.7

-1

-0.4

-1

+1

+1

-1

+1

0.8

1

+1

0.3

0.5

1

* C is a factor that tells whether the agent chose the troublemaker's answer (C = 1) or not (C = 0).

** A gray square means impossibility of the situation.

Table 1: Experimental values of the deception factor

4.5 The emotional status

In our model we have defined 13 emotional couples, shown in table 2. These emotional couples are based on the work of Ortony and Elliot [10, 11]. The emotional status E is therefore the set of values of those 13 emotional couples.

| Couple | Emotions |
|--------|----------|
| e1 | Joy/Distress |
| e2 | Happy-for/Resentment |
| e3 | Sorry-for/Gloating |
| e4 | Hope/Fear |
| e5 | Satisfaction/Disappointment |
| e6 | Relief/Fears-Confirmed |
| e7 | Pride/Shame |
| e8 | Admiration/Reproach |
| e9 | Liking/Disliking |
| e10 | Gratitude/Anger |
| e11 | Gratification/Remorse |
| e12 | Love/Hate |
| e13 | Jealousy/-Jealousy |

Table 2: Emotional couples used in our case study

4.6 Solving for M

Knowing E and P(s), we proceeded experimentally to solve for M. In this case M has 13 x 16 = 208 entries, so we had 208 variables. This requires a minimum of 208 equations of the form

$$e_i' = \sum_{j=1}^{13} a_{ij}\, e_j + a_{i,14}\, P_e + a_{i,15}\, (P_p)_e + a_{i,16}\, dd$$
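As a quick check of these counts, assume (this is one reading of section 3.4, not a stated result) that each event answered by a user yields one value for each of the 13 couples, and hence 13 equations:

$$n (n + m) = 13 \times (13 + 3) = 208, \qquad \frac{208}{13} = 16$$

Under that assumption, at least 16 answered events with linearly independent inputs would be needed to determine M.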

4.7 Results

The results for the matrix M and its final shape were still under development when we first wrote this article. These results are now ready and will be presented in the conference presentation and in future work.

5. Conclusion (The believable tutor)

Another major experiment that we are working on is the creation of a mini ITS based on the classical tutor-tutee strategy. Two models of the tutor will be explored: one in which the tutor is an agent with neither emotional nor believability aspects, and another in which a believable layer is added to the tutor. Our aim is to compare the performance of the ITS under both models. We have already started working on this application, and preliminary results lead us to believe that believable tutors influence users' performance depending on the users' knowledge levels.

Figure 4. Traditional vs. Believable ITS performances and the pre-estimated crossing between the two. [Plot: system performance against knowledge level (starter, novice, intermediate, expert) for the believable ITS and the regular ITS, annotated with four questions: 1- Does this crossing occur? 2- If yes, where? 3- Effect of learning profiles? 4- Effect of the curriculum?]

In detail (see figure 4), a believable tutor would be very successful with users who have a low to average knowledge level, but less appealing to experts.

Many questions remain to be answered once the system is implemented. We will be interested in answering questions such as:

1- Early applications tend to indicate that the believable layer in an ITS would reduce the performance of an expert, who is more interested in direct application than in emotional systems. Does a crossing between the two ITS (believable and regular) exist?

2- If it does, where does it occur? In other words, at which level of knowledge is it better to switch to the regular ITS?

3- The knowledge level affects the crossing point of the two systems. Do the learning preferences or learning profile of the user affect it too? If yes, then how?

4- Does the choice of the curriculum affect the crossing point?

Acknowledgments

We thank the TLNCE (TeleLearning Network Centers of Excellence in Canada)

who have supported this project.

References

[1] Lefebvre, B., Nkambou, R., Gauthier, G. and Lajoie, S. "The Student Model in the SAFARI Environment for the Development of Intelligent Tutoring System", Premier Congrès d'Électromécanique et Ingénierie des Systèmes, Mexico, 1996.

[2] Rouane, K. and Nkambou, R. "La nouvelle structure du curriculum". Rapport semestriel-008, Research Group: SAFARI, DIRO, University of Montreal, pp. 25-35, 1997.

[3] Aïmeur, E., Alexe, C., and Frasson, C. "Tutoring Strategies in SAFARI Project", Departmental Publication # 975, Department of Computer Science, University of Montreal, 1995.

[4] Frasson, C. and Aïmeur, E. "A Comparison of Three Learning Strategies in Intelligent Tutoring Systems", Journal of Educational Computing Research, vol 14, 1996.

[5] Abou-Jaoude, S. and Frasson, C. "An Agent for Selecting a Learning Strategy". Nouvelles Technologies de l'Information et de la Communication dans les Formations d'Ingénieurs et dans l'Industrie, NTICF'98, Rouen, France, 1998.

[6] Elliot, C. "I Picked Up Catapia and Other Stories: A Multimedia Approach to Expressivity for 'Emotionally Intelligent' Agents." In Proceedings of the First International Conference on Autonomous Agents, Marina del Rey, 1997.

[7] Bates, J. "The Role of Emotions in Believable Agents." Technical Report CMU-CS-94-136, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, 1994.

[8] Rousseau, D. and Hayes-Roth, B. "Interacting with Personality-Rich Characters". Stanford Knowledge Systems Laboratory Report KSL-97-06, 1997.

[9] Maes, P. "Artificial Life meets Entertainment: Life Like Autonomous Agents." Communications of the ACM, 38, 11, 108-114, 1995.

[10] Ortony, A., Clore, G. and Collins, A. "The Cognitive Structure of Emotions". Cambridge University Press, 1988.

[11] Elliot, C. "Affective Reasoner personality models for automated tutoring systems." In Proceedings of the 8th World Conference on Artificial Intelligence in Education, AI-ED 97, Kobe, Japan, 1997.

[12] Abou-Jaoude, S. and Frasson, C. "Emotion Computing in Competitive Learning Environments". Proceedings of the Workshop on Pedagogical Agents, Fourth International Conference ITS 98, Texas, 1998.
