Evaluation of Counter-Terrorism Data Mining Programmes

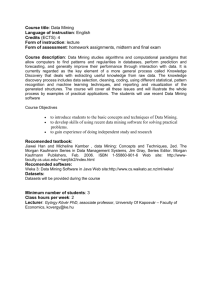
