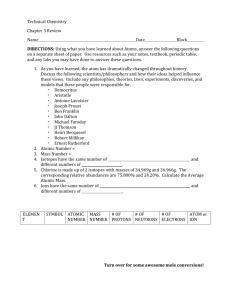

Anthropology