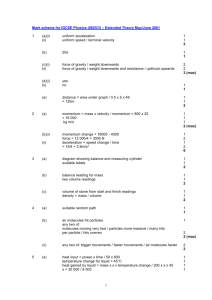

Ultrawave Theory
