An Overview of Statistical Data Processing

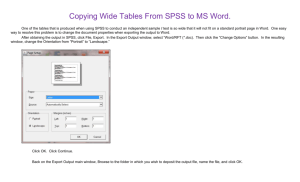

advertisement

Educational Psychology 626

Statistical Data Processing

Course Guide

An Overview of Statistical Data Processing

Statistical data processing is made fairly easily with the use of modern computer

software. While general purpose programs such as Excel and Word have some statistical

capabilities, they are generally limited. However, there are a number of popular

programs that are dedicated to statistical analysis. These programs vary in their ease of

use, comprehensiveness and cost. Two of the most popular programs are SPSS and SAS.

These are the programs that you will find in most universities and social science research

facilities.

Essentially all statistical programs work the same. First, data must be entered on

a data file. These data are then read and analyzed by a series of statements written in the

statistical program “language” and contained in a syntax file. Finally, the results of the

analysis are written to an output file for viewing or printing. Thus, a typical statistical

analysis requires the management of three files: data, syntax and output. These three files

work interactively to help you accomplish your analysis. In most cases, these files are

written as “text” files with no special formatting. (NOTE: In many applications, the

Windows/Mac “pull down menus” have replaced the need for a syntax file.)

Program or

Syntax file

Data file

Results or

Output file

SPSS or SAS may be run on a PC or on the campus Alpha (UNIX) system. On

the PC, both SAS and SPSS have Windows based versions. Running these programs on

the campus UNIX based system from a PC requires a “terminal” program. There are a

number of “freeware” terminal programs available for use with campus networked

computers and home PCs. Consult the IM&T web at

http://www.uwm.edu/IMT/purchase/soft-dept.html

for more information.

One word of warning. Statistical programming is very unforgiving of errors both

in data entry and in writing program syntax. Prepare to spend considerable time

“debugging” your data and program syntax. It may be a bit frustrating at first, but you’ll

2

get the hang of it soon. Initially, it takes great care to make sure everything will work as

you intend.

3

Introduction to UNIX and creating text files (in pico)

I. UNIX

A. Accessing your UNIX (alpha) account:

If working from home, you’ll need a terminal program (many are freeware, like “CRT”

– see the UWM website for IM&T services) and inquiries should be directed to IM&T

on campus if you run into difficulties. The examples shown below assume you are

using a PC with a terminal program (“CRT” is the program shown in the figures).

When you are working in the UNIX environment, even though you are using a PC with

a terminal “window” the mouse functions will not work. All commands are entered as

text and functions like saving files and editing documents require keystrokes. This takes

a while to get used to this if your only exposure has been to a Windows/Mac

environment, but it is not difficult to learn.

If you are working from a campus computer you should find a yellow folder, called

Alpha, on your computer desktop. Double click on the folder to see a bunch of little

computer icons with the word Alpha and then a letter after the word Alpha. This letter

is important because it represents the first letter of your login name. This letter (i.e., p)

and the word Alpha represent the location of your alpha account. So, to choose your

alpha account, use the first letter of your login name. For example, if your login name

is “psmith” making the first letter of your login name “p” , your alpha account is

alphap. When you double click on your alpha account, a UNIX window will open.

You will be asked to type in your login and password . Type those in and press Enter.

You are now logged in. You will see a screen similar to this:

4

You are told when your last login was and a bunch of other unimportant information.

The important information is in BOLD text. First, the following will be displayed:

You have mail.

Please don't type anything, trying to determine your terminal type

This tells you whether or not you have mail and also not to type anything. Do exactly

that. Do not type anything. After a few seconds the second part will be displayed:

Terminal recognized as vt100 (Base vt100)

TERM = (vt100)

Once you see this message, press the Enter key and the following will be displayed:

5

This is the alpha “ez” menu. Options on this menu are selected by typing the number to

left of the option and pressing the ‘enter’ key. Notice that you can access UWM student

SASI information, class lists and other useful information.

B. Getting to the UNIX prompt:

From the ‘ez’ menu, type 62 and then press Enter. You will see text like this in the

terminal window:

Suspend this menu and to go Unix

=================================

You are about to set aside this menu system so that you can type in

your own

Unix commands. When you are through typing your own Unix commands

issue the

Unix command

exit

to return to this menu system.

If you only want to issue one or two Unix commands you might find it

more

convenient to issue them at the menu system prompt. You can do so by

proceeding the name of the command with an exclamation point, e.g.,

!ls

Note: if you go ahead with this option, and then decide that you want

6

to

logout from ALPHA, you will need to first type exit to get back to

this menu

system. Then you can choose the menu option "Logout! Disconnect!

Quit!"

Do you wish to continue? (y/n)[y]:

Again, the BOLD text is what we are interested in. It is simply asking you if you want

to continue, so type ‘y’ and press Enter.

You are now in the UNIX shell. In this shell you’ll see the UNIX prompt that looks

like that below. You might get additional text before you get to the prompt below.

Simply type clear to get rid of this text:

(alpha) 1:

The number after the (alpha) indicates how many commands you’ve entered. The

number will increment by one each time a new command is entered. The ‘clear’

command is one example This command will clear the screen of all the text on the

screen that we are not interested in. Type in “clear” at the prompt and press Enter.

Your screen should now look like this:

(alpha) 2:

Notice that we have issued one command, so now we are on command 2, which is

indicate by the number behind the alpha. Other UNIX commands work like the ‘clear’

command. You enter text at the UNIX prompt and press enter to execute the

command. When the UNIX prompt reappears, the command has been executed and

you can issue another command.

If you ever want to get back to the initial “ez” menu screen (the one with all the

numbers and options), type exit at the UNIX prompt and then press Enter.

C. Naming files in UNIX

The names that you come up with for your UNIX files need to work with the UNIX

file system. The way you name files in UNIX is pretty flexible, but there are specific

rules you have to follow. In practice, there are also some good habits to get into when

making your own files.

7

Although some UNIX systems might vary, the general rules for file naming are as

follows.

You can name a file in UNIX using up to fourteen characters in any combination from

the following sets:

1. {a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s, t, u, v, w, x, y, z, A, B, C, D, E, F,

G, H, I, J, K, L, M, N, O, P, Q, R, S, T, U, V, W, X, Y, Z}

2. {0, 1, 2, 3, 4, 5, 6, 7, 8, 9}

3. {period (.), underscore(_), comma(,)}

You can not name a file period (.) or period period (..).

Directories are files in UNIX, so directory names follow these same rules also.

You need to follow the official rules for file naming in UNIX, but here are some good

practices:

1. Never name a file with the same name as a UNIX command.

2. Use file extensions that are widely recognized when possible. For example, if you

create a text file, use the character string .txt as the last part of the file name.

3. Make all the letters of all your files (and directory names) lower case. Unix cares

about case. That is, the file Alpha.txt is different from alpha.txt. In most cases, the

capital letter is only going to cause you problems with trying to remember if the

filename or directory name has a capital letter or not.

4. Start or end a file name with a letter or number. If you start it with a dot (.), it will

be a hidden file.

5. Make your names short, but not cryptic. Use correctly-spelled nouns when

possible. For example, store your inventory in inventory.dat and not invtry.dat

II. Pico (a text editior in UNIX similar to Word in Windows)

A. Creating a text file using Pico:

Pico is a text editor similar to Word except all editing and all functions are done with

the keyboard, not the mouse. We will use it to create and edit text files (like the data

and syntax files described earlier). To get into Pico, type

pico

at the UNIX prompt. The PICO editor will then be displayed:

8

On the bottom of the window you should see the commands you can use in Pico.

Each command consists of holding down the Ctrl key and a letter. For example, ^X

tells the user to hold down the Ctrl key and the X key. ^X is the command for EXIT.

Now type something (anything). When you’re done, use the EXIT command (hold

down the Ctrl key and the X key). Pico will ask you if you want to save the file:

Save modified buffer?

Press y (for yes). Pico will then ask you for a filename. Type in a filename (any name

you want to give to your file) and press Enter. The file you just created is a plain text

file, which is also known as an ASCII file.

You should now be back to the UNIX prompt. Type

ls

at the prompt. The ‘ls’ command in UNIX lists all of the files that you have saved on

your UNIX account. The file you just created should be listed.

To look at or edit the file you just created, type

pico filename

where filename stands for whatever you named your file. This will open your file in

pico. Make some changes to your file and exit (^X). If you’ve made any changes,

pico will ask you if you want to save the file. If you answer yes, it will ask you again

for a filename. If you want it to keep the same filename, and replace the old file with

9

this edited file, simply press Enter. If you want it to save the edited file under a new

filename, backspace and enter the new filename.

D. Common UNIX Commands

There are hundreds of UNIX commands that can be entered at the UNIX prompt to

accomplish various tasks. You should be able to get by with just a few:

ls = list all files in your account

cat filename = show contents of the file filename (use the actual file name – not

“filename”)

mv oldfile newfile = changes file name from oldfile to newfile

rm filename = removes (deletes) the file filename

pico = involks pico editor

logout = logs you out of UNIX

exit = exits the UNIX shell to “ez” (if that’s where you started).

Of course, when you are doing statistics on the UNIX system, there are a few other

commands associated with SPSS or SAS that you’ll need to know. These are detailed in

the Doing Statistics on UNIX handout.

To exit your account, go back to the ez menu screen by typing exit (then Enter) at the

UNIX prompt. When you get back to the ez menu screen, type bye and Enter.

Moving files from PC to Alpha (and Back)

In general, it’s possible to create files on the PC and send them electronically to the

UNIX machine and to retrieve files from UNIX to your PC. Most of these files will be

in ASCII format with no formatting. To do this, you’ll need an “FTP” program

installed on your PC.

10

This figure is typical of FTP programs. There are two windows: one shows the file

contents in your UNIX account (the right window above) and the other shows the files

on your PC. Moving files from one computer to another is usually as simple as

“dragging and dropping” the file from one window to another.

11

Data Structures: Planning Data Entry for Analysis

Before data can be analyzed, it must be “entered” in a form that is readable by

various statistical programs. In planning data entry, there are several issues to be

considered. These are reviewed below. A data set can be thought of as a subject by

variable “array” of data. This two dimensional data “matrix” generally has rows

associated with “subjects” and columns associated with “variables.” This general format

is followed regardless of the method of data entry (see below). Each variable should

have its own column and each subject should have its own row. Variables are often

given names. For example, a variable that describes a subjects SAT score might be

labeled “sat.” The mechanics of naming variables differs depending on the data entry

method chosen (see below). Subjects are often given ID numbers, frequently running

from 1 to N (although any unique number may be used, like student ID or even the

subject’s name). The first variable in a data matrix is often the subject ID. (NOTE:

“Subjects” are not always people. They may be rats or pigeons or even organizations).

Variable Types

Variables can be coded as numeric (with or without decimals) or text (alpha). While

most programs can deal with alpha/text variables for some analyses, it’s a good idea to

code data as numeric whenever possible. For example, a gender variable might be coded

“1” and “2” instead of entering “male” and “female” in the data. Other categorical

variables can be similarly coded. Keep in mind that these numeric labels generally have

no quantitative meaning, but are simply numbers used to abbreviate the category.

A variable from a questionnaire item with 5 responses ranging from “strongly agree” to

“strongly disagree” might be coded a 1=strongly agree; 2=agree; etc. Here, the number

has some quantitative meaning. Obviously, data like “age” or some test score can be

numerically entered as it stands.

In some cases, especially on questionnaires, the subject may choose multiple answers to a

particular question. In this case, variables should be created for each option presented to

the subject. For example if a question asks “ethnicity” and indicates that multiple

responses are allowed (e.g., African-American and Asian), each option should be

associated with a particular variable. In this case there would be a variable associated

with African-American identity (possibly coded “1” if checked and “0” if not checked),

another variable for Asian, etc.

Missing Data

Almost all data sets have some missing data (the subject left the “age” variable blank; the

subject didn’t have a particular test score; etc.). The data entry process should plan for

missing data. In general, some unique numeric (or text in the case of alpha variables)

should be used as a “missing data indicator” as opposed to leaving the field blank for that

subject. While some programs handle blank fields well, others do not. It is good data

12

entry practice to assign missing data code(s) to each variable. For example, the SAT

score missing indicator might be “9999”; for age it might be “999”; for gender it might be

“3”.

Code Book

Once you have determined the codes for the variables, the missing data indicators and the

variable names (see specific methods below), you should put together a “code book” that

details all of this information. Essentially, this is just a list of variable names, valid entry

characteristics and missing data codes. This is done so that you have a clear record of the

variables, their order and their meaning.

Data Entry Methods

In general, data may be entered using a word processor (like Word or Notepad), a

spreadsheet (Like Excel or Lotus) or a dedicated data entry program. Each method has

its relative advantages and disadvantages, but once you gain experience with each your

choice will probably be dictated by personal preference. The method you choose to enter

data should have the capability of converting your data to “ASCII” text. ASCII text

refers to a particular type of file structure that has no hidden formatting codes or other

information that can cause errors when the data are eventually read by a statistical

program. It’s also called “plain text” or “unicode” by many word

processors/spreadsheets and often has a .txt or .dat file extension automatically assigned.

It should be noted that some statistical programs can read data that have been entered into

a spreadsheet or word processor directly, but some (especially specialized programs)

cannot. If you are working in a UNIX environment (like the UWM “alpha” computers),

you’ll need to use a UNIX editor (like PICO) to enter your data. Data entry should

proceed like that described below for word processor. UNIX editors save data in

unformatted ASCII text so no conversion is necessary.

Word Processor (e.g., Word): When using a word processor, data may be entered using

either a “freefield” or “fixed” format. In freefield format data are entered for each subject

in a row with variables separated by a “delimiter” such as a space or comma. Here’s an

example:

13

Here data are delimited by a space. There are seven variables and 10 subjects in the data

set. Note especially that each subject has the same number of variables (seven) and the

variables are in the same order. (NOTE: One reason why using blanks as a missing data

indicator is a bad idea is that if subject “1” above had a missing variable, the line would

contain only six variables, possibly causing an error when the data is read by the

statistical program).

Fixed format differs only slightly from freefield. In fixed format, each variable maintains

“column integrity” in the data. For example, the ID variable is always in the first two

character positions, the second variable is always in the fourth character spot (after the ID

and a space), etc. The same data in fixed format would look like:

14

Note in fixed format, all numeric variables are “right justified,” which means that the

numbers all “line up” on the right side. In addition, each variable is always in the exact

same character position for each subject. While most statistical programs will read

freefield data, fixed format is more universal and makes the data easier to visually

inspect.

Regardless of the format used when entering data using a word processor, a

“nonproportional” font should be used. With proportional fonts, different numbers and

characters take up different amounts of space which makes keeping column integrity

during data entry a more tedious task. Courier type face is the most common of the

nonproportional fonts used in word processors.

Once data are entered, it needs to be saved as an ASCII (plain text) file. On most word

processors, this is a matter of choosing File>Save As and then selecting the ASCII or

plain text option. If you have saved your data in a word processing file, you should

choose a different name when saving the ASCII data. This will prevent you from

overwriting the word processing data and provide you with a convenient backup of your

data.

If you are entering data on a UNIX text editor (like PICO), data files are automatically

saved as ASCII text and nonproportional fonts are the default. Here’s what the data

would look like in PICO using “CRT” as the terminal program:

15

Spreadsheets: Spreadsheets (like Excel) are set up naturally with the row and column

array required for data entry. This makes data entry very convenient from a formatting

standpoint, but the data entry often requires a few more keystrokes as you move around

the array to enter data. An Excel sheet with data entered would look like:

16

Once data are entered, it can be saved as ASCII data by selecting File>Save As (choose

tab delimited or Unicode as the format). Excel files are saved with an .xls file extension.

Dedicated Programs: Some statistical programs (like SPSS) provide for data entry

directly. In SPSS, the data entry “window” looks very much like a spreadsheet and data

are entered in the same way. If you plan to use SPSS for your data analysis, there is no

reason to convert to ASCII: SPSS will read the data directly. The data in SPSS would

look like:

17

Note here the “tab” at the bottom indicating “variable view.” When selected, the user is

switched to a window that details variable information:

18

Note that this window allows the user to provide variable names, missing data codes and

specify the type of data being entered, among other things. Here, the assigned variable

names (e.g., v1, v2) are replaced:

19

The “data view” now looks like this with the variable names indicated in the column

headings:

20

Data entered in SPSS may be saved as ASCII text for use with other programs using the

methods described above for word processors and spreadsheets (File>Save As, then

select “fixed ASCII” or “tab delimited” as the file type). SPSS data files are saved with a

.sav file extension.

Importing Data

ASCII data may also be “imported” into the Excel or SPSS spreadsheet form once

entered by word processor or other method. If you are obtaining data from another

source, it is often safest to ask for the data in ASCII format. If you then need to add to

the data, or edit the data you may import the data into Excel or SPSS. In Excel, to import

the data you need to select “text” as the file type on clicking File>Open. In SPSS select

File>Read Text Data. Both programs will step you through reading the data in the proper

format.

21

Basic SPSS & SAS Syntax

I. STATISTICAL PROGRAMMING SYNTAX

By now, most everyone is used to working in a “windows” or graphical user

interface (GUI) environment to accomplish most tasks on the computer. Most of the time

this involves using “pull-down” menus, mouse clicks and so forth to accomplish the

desired result. Because of the complexity of statistical analysis and the variety of options

associated with a particular analysis, GUI based statistical programs can be somewhat

limiting: It’s often not possible to include all options and procedures in a series of simple

pull-down menus and dialog boxes. Consequently, it is useful in statistical programming

to know how to write “syntax.” Syntax implies a series of “statements” written in a text

file that “tells” the program what analysis you want done. Each statement is sentence like

(although often more cryptic with many abbreviations) that must follow a predefined

format.

Syntax statements can be divided conceptually into three categories: Those that

tell the program where to find the data and define the data characteristics; statements that

can be used to transform or manipulate the data; and statements that tell the program to

do specific analyses with the data. Together, these statements form a “program file” that,

when executed, perform the desired analysis. These statements are generally written in a

text editor (either in the statistical program itself or by using a separate text editor – e.g.,

Word, PICO) and saved as a text file.

The general structure of SPSS and SAS syntax are described below. Keep in

mind that the syntax structure of both SPSS and SAS are much more complex than what

is presented here. While you will be shown only the basics, it should provide a good

foundation for self-study or further instruction in advanced statistical coursework.

II. SPSS PROGRAM SYNTAX STRUCTURE

There are several program commands that you may use for each statistical run.

Some of these are required. Many of the optional commands are omitted from

this introduction. All commands should begin in column 1 (left justified). If any

of the commands extend beyond 72 columns (the limit in some applications), the

command may be continued on the next line after a one space indent. ALL SPSS

STATEMENTS END WITH A PERIOD ‘.’ – THIS IS THE MOST COMMON

SOURCE OF SYNTAX ERRORS IN SPSS.

A. Reading the Data:

To read ASCII data, you need to tell the program where to find the data (i.e., give

the file name) and indicate the variable names (and columns where the variables reside on

the file).

22

This process can begin with the TITLE command. The TITLE command is

optional and gives your analysis a title that is printed on each output page. It should be

used to identify the analysis for future reference.

Col. 1

|

TITLE 'give your run a title here'.

note: The title you use should be given between apostrophes and may extend up

to column 72. This title will appear at the top of each page of output.

The next command (or first command if you choose not to use the TITLE

command) is used to identify the data file you are using and provide the variable names

and location of variables in the file. This command has two basic forms depending on

the type of data file that you are using. The first form reads data from an ASCII file,

while the second form reads data from an SPSS system (.SAV) file. The first form

follows this general format:

Col. 1

|

DATA LIST FILE='{drive}:{file name}'/{var} {cols}.

where {drive}:{file name} is the disk drive and the name of the data file that

contains your data. Be sure to enclose the drive and file name in apostrophes.

(Note: if your data are not on a separate file but included later in the program,

omit the FILE='{drive}:{file name}' portion of this command.) {var} {cols}

implies a list of variable names each followed by the columns that the variable

occupies in the data matrix. For example:

DATA LIST FILE='c:mydata.prn'/var1 1-2 var2 4-6.

This command indicates that the data can be found on a file named "mydata.prn"

on a disk in drive "C" and that the variable "var1" will be in columns 1 and 2 on

each data record, and the variable "var2" will be in columns 4, 5, and 6 on each

data record. Of course, if your working in UNIX, you won’t have the drive letter

included in the file name, but otherwise, the syntax is identical.

If the data for each case are formatted on more than one record (i.e., more than

one line per case), variable names and formats for each record are separated by a

slash. For example, if data are on two records:

DATA LIST FILE='c:mydata.prn'/1 var1 1-2 var2 4-6/2 var3 2-4 var4 5-8.

In this case, "var3" and "var4" are found on the second line of data for each case

in columns 2-4 and 5-8 respectively.

23

If you’re reading data from an SPSS system file (.SAV) the command has a

different form:

Col. 1

|

GET FILE='{drive}:{file name}'.

In this case, the drive and file name imply an SPSS System file which already

contains information on variable names and locations (see the third example

below for how to convert an ASCII file to an SPSS system file).

SPSS DATA INPUT EXAMPLES:

1. From ASCII file, your first command might read:

DATA LIST FILE='C:DUMBDATA.TXT'/SEX 1 AGE 4-5

SCR1 8-10 SCR2 13-15 SCR3 18-19.

If you’re working on UNIX, this might look like this:

DATA LIST FILE='UNIXDATA.DAT'/SEX 1 AGE 4-5

SCR1 8-10 SCR2 13-15 SCR3 18-19.

If data are entered in “freefield” format (see “data structure” handout), the

variable list does not need to contain the column numbers. SPSS will expect to find the

variables one after another on each subject’s record. If using freefield format it is

extremely important that all data elements are present for each subject and that missing

value indicators, not blanks, are used to specify missing data. The example above would

look like this if done in freefield format:

DATA LIST FILE='C:DUMBDATA.TXT'/SEX AGE

SCR1 SCR2 SCR3.

2. From SPSS/PC system file, your first command might read:

GET FILE='C:DUMBDATA.SYS'.

Or

GET FILE='UNIXDATA.SYS'.

3. To convert an ASCII file to an SPSS system file use the following

format:

24

DATA LIST FILE='C:DUMBDATA.TXT'/SEX 1 AGE 4-5

SCR1 8-10 SCR2 13-15 SCR3 18-19.

SAVE OUTFILE='C:DUMBDATA.SAV'.

The "SAVE OUTFILE" command saves your data in SPSS system file

format. By default, SPSS uses the file extension ‘.SAV’ for system files,

but you don’t have to use this extension. This file also contains the

variable names and other information you provide regarding the format

and labels to be applied to the variables. If you save your data as an SPSS

system file, you can then use the "GET FILE" procedure for accessing

data (on system file DUMBDATA.SAV) and avoid all of the formatting

information required when using ASCII data. The "SAVE OUTFILE"

command will also save into the SPSS system file any computed or

transformed variables that precede the "SAVE OUTFILE" command (see

below).

Note that SPSS system files are readable only by SPSS and not other

programs. While it is possible to convert these files to other formats, all of

the variable information will not be converted.

B. Manipulating the data

The above statements are used to read the data scheduled for

analysis. In addition, there are several commands that can be used to manipulate

or modify the data for analysis. Below is a brief description of some of these

statements and how they are useful. Users should consult the SPSS User's Guide

for the specific form and syntax of each statement.

Col. 1

|

MISSING VALUES var1(x) var2(y).

In some cases, some of the data in the records may be missing. Missing

data codes for each variable can be identified on this command so that they can be

excluded from the analysis. The missing value indicator is included between

parentheses after each variable name. If all variables have the same missing value

indicator (say, 0), you may substitute ALL(0) after the MISSING VALUES

specification. When data are set up, considerable thought should be given to what

values to assign to missing data. One alternative is to use blanks - SPSS/PC

always interprets blank fields as missing.

RECODE -- the recode statement recodes data values. For example, if you have

a continuous variable in the range 0-100 and wish to recode the variable into four

categories, this command allows you to do so.

25

RECODE var01 (0 to 25 = 1)(26 to 50 = 2)(51 to 75 = 3)(76 to 100 = 4).

Or if you simply want to change the way a variable is coded (say letter grades to

numbers):

RECODE var01 (‘D’ = 1)(‘C’= 2)(‘B’ = 3)(‘A’ = 4).

Note that “alpha” variables (those expressed by text instead of numbers) are

placed in single quotes.

COMPUTE -- the compute command allows you to create new variables from

mathematical expressions involving old variables. For example, if you had

several subscores that you wished to combine into a total score, the COMPUTE

statement could be used to sum the subscores.

COMPUTE total=var01+var02+var03.

SELECT IF -- the select if statement may be used to do analysis on an identified

subset of your data (e.g., males only, those with test scores over 50, etc.)

SELECT IF (gender eq 1).

SELECT IF (test gt 50).

C.

Specific SPSS Analytical Procedures

SPSS analyses are specified through separate commands for each procedure you

want to perform (e.g., t-test, correlation, etc.). The command is specific to each statistical

procedure. It defines the precise statistical analysis that you desire. Examples of some of

these procedures are presented later.

D. The final SPSS command

Col. 1

|

FINISH.

This command is the last command in your syntax and tells the computer that you

are done with your SPSS analysis.

Some Specific Statistical Procedures

26

This section details a few specific procedures that can be specified in the

command syntax.

1.

CORRELATION

The CORRELATION procedure computes correlations between

variables. The format of the CORRELATION procedure is as follows:

Col. 1

|

CORRELATION {list of variable names}

/STATISTICS {list}

/OPTIONS {list}.

In this format, the procedure will produce correlations between all

possible combinations of variables listed on the CORRELATION

command. The STATISTICS and OPTIONS for these procedures are

listed by number and may be found in the SPSS/PC manual. An

alternative format of the procedure is:

CORRELATION {variable list 1} WITH {variable list 2}

In this format, correlations will be produced between variables to the left

of 'with' with those on the right (i.e., var1 with var3, var1 with var4, var2

with var3, var2 with var4).

2.

FREQUENCIES

The FREQUENCIES procedure requests frequency distributions

for variables. It has the following format:

Col. 1

|

FREQUENCIES variables={variable list}

/STATISTICS list.

This procedure produces frequency distributions for all variables

listed to the right of the 'variables=' in the procedure command. Various

formats (not shown, consult manual) may be used to produce the tables.

Available options and statistics are listed in the SPSS/PC manual.

3.

DESCRIPTIVES

Program DESCRIPTIVES computes descriptive statistics for

variables and is specified in the following format:

27

Col. 1

|

DESCRIPTIVES {variable list}

/OPTIONS list

/STATISTICS list.

Descriptive statistics will be produced for all variables listed to the

right of the DESCRIPTIVES command. Options and statistics may be

found in the manual.

4.

GENERAL

All SPSS procedures (there are many) follow the general format of

the procedures listed above. They are readily found in the corresponding

section of the SPSS/PC manual.

Complete Syntax – Example Programs

A. An SPSS program that contains the data on an UNIX file.

TITLE 'SPSS program with data on UNIX'.

DATA LIST file=’mydata.txt’/id 1-2 score1 4-5 score2 7-8 sex 10 (a).

COMPUTE total=score1+score2.

DESCRIPTIVES score1 score2 total/statistics=all.

FINISH.

B. An SPSS program using data from another file.

TITLE 'SPSS program that reads data from a file on a PC'.

DATA LIST FILE='c:study.txt'/id 1-2 score1 4-5 score2 7-8 sex 10 (a)/iq 2-4 group

7.

COMPUTE total=score1+score2.

DESCRIPTIVES score1 score2 total iq.

FINISH.

C. Saving a system file.

TITLE 'The same SPSS program that saves a system file'.

DATA LIST FILE='c:study.txt'/id 1-2 score1 4-5 score2 7-8 sex 10 (a)/iq 2-4 group

7.

COMPUTE total=score1+score2.

DESCRIPTIVES score1 score2 total iq.

SAVE OUTFILE='c:study.sav'.

FINISH.

28

D. An SPSS/PC program using a system file.

TITLE 'SPSS program using a system file'.

GET FILE='c:study.sav'.

DESCRIPTIVES score1 score2.

FINISH.

III. SAS Programming Syntax

SAS syntax works similarly to SPSS, but there are important differences. SAS

uses a series of “proc” (short for procedure) statements to accomplish the statistical tasks.

Each syntax line in SAS must end with a semi-colon “;” character.

A. Reading the Data

In SAS, when data are read the data file is given an “internal name.” This name is

used when doing analyses to refer to a particular data set. This way, you can have more

than one active data set for your analysis – say “data1” and “data2.” The general form

SAS uses to read data includes four basic lines:

data internalname;

infile ‘drive:filename’;

input var1 #-# var2 #-# var3 #-#;

run;

Following the ‘data’ command you need to supply an internal file name. Next is the

‘infile’ command, followed by the internal file name that you need to supply. The ‘infile’

command tell SAS where to find the (ASCII) data. You need to give the drive letter (PC

only) and any subdirectory information here. Here, of course, the drive letter implies the

data are on a PC. If you’re using UNIX, there would be no drive letter. Following the

‘input’ command is the ‘input’ command followed by a list of variable names and the

column numbers in which the variables can be found. The ‘run’ command causes the

syntax to be executed. Here’s an example:

data allcases;

infile 'c:/sas examples/hsb.dat';

input SEX 5 CONCPT 20-25 MOT 26-30;

run;

Here, the ‘data’ statement names the active SAS data file to be read. In this example, the

internal name given the data is “allcases.” The data are on drive c in the subdirectory

“sas examples” and the file name is “hsb.dat.” There are three variables to be read (sex,

29

concpt and mot) in the columns indicated on the input command line. Data may also be

read in freefield format by omitting the column numbers:

data allcases;

infile 'c:/sas examples/hsb.dat';

input SEX CONCPT MOT;

run;

It’s also possible to save data into another file. This is accomplished as follows:

data allcases; {new data file called ‘allcases’ to be input}

infile 'c:/sas examples/hsb.dat';

input SEX CONCPT MOT;

run;

data male;

{defines a new data file}

set allcases; {inputs data from ‘allcases’}

output;

{writes cases to ‘male’}

run;

From here, the user could reference the data set ‘allcases’ or ‘males’ in further procedures

and analysis. There are really two new commands here: ‘set’ inputs data from an active

SAS data file and ‘output’ writes the data to the file specified on the most recent ‘data’

statement (in this case, the file ‘male’).

A. Manipulating the Data

Unlike SPSS which has specific programming statements to handle missing

values, data transformations, case selections and other ‘data manipulation’ tasks, these

must be programmed by the user in SAS. This is generally done through a series of

algebraic and logical statements once the data have been read.

First, missing data in SAS is handled differently than in SPSS. A common

indicator for missing data in SAS is the ‘.’ (period) character. Anytime SAS finds a

period where it expects numeric data, it assumes the data is missing. When reading alpha

variables, the period is read as a true period, thus blanks may be substituted (as long as

freefield formatting is not used).

Other transformations (e.g., recoding data, creating new variables, selecting cases)

are done through algebraic and logical statements. Transformation possibilities are

endless with statistical data analysis, but an example of three common procedures using

SAS is presented below.

a. Computing new variables

Computing new variables in SAS involves creating algebraic expressions. Here

are some examples:

30

data male;

set male;

newvar=sex +1; {creates the variable ‘newvar’ which is the value of sex plus 1}

newmot = mot/2; {creates the variable ‘newmot’ which is half the value of

‘mot’}

sumvar=concpt + mot; {creates ‘sumvar’ which is ‘concpt’ and ‘mot’ added

together}

run;

The transformation possibilities with syntax are quite extensive. Consult one of the

references for more information.

b. Recoding Data

Assuming the data have been read, recoding a variable would take this form

(using the above example):

data male;

set male;

if mot < 30 then mot=1;

if mot => 30 and mot < 60 then mot =2;

if mot => 60 then mot=3;

run;

Here, the object of the program is to take a continuous variable (mot) and form three

categories based upon the values of mot. In the data file, the new variable values (1, 2 or

3) replace the original numeric values. Note the logical operators here (‘<’ = less than;

‘>’ = greater than; ‘=’ = equal to). In this case, the variable name remains the same. If

you wanted to keep the variable ‘mot’ as originally read and create a new variable

‘newmot’ that contains the three categories you’d like to create, the syntax would look

like this:

data male;

set male;

newmot=0;

if mot < 30 then newmot=1;

if mot => 30 and mot < 60 then newmot =2;

if mot => 60 then newmot=3;

run;

It is usually preferable to create a new variable rather than recode an old variable with the

original coding since you may want to use the old variable for some other purpose later.

31

c. Selecting Cases

If you want to select a subset of cases for analysis in SAS, you essentially need to

create a new data file that contains only those cases that you wish to analyze. For

example:

data allcases;

{read data file ‘allcases}

infile 'c:/sas examples/hsb.dat';

input id sex concpt mot;

run;

data males;

{specify new data file ‘males’}

set allcases;

{use existing data file ‘allcases’}

if sex=1 then output;

{write cases to ‘males’ if the sex variable equals 1}

data females;

{specify new data file ‘females’}

set allcases;

{use existing data file ‘allcases’}

if sex=2 then output;

{write cases to ‘females’ if the sex variable equals 2}

run;

Once the files ‘males’ and ‘females have been created, analyses can proceed with these

data sets.

d. Specific SAS Statistical Procedures

Specific statistical operations in SAS are accomplished through the use of “proc”

(short for procedure) statements. Each statistical analysis requires a specific “proc.” A

few examples are provided below.

A. Correlation

proc corr data=group1;

var mot math;

run;

B. Frequencies

proc freq data=one;

tables group sex;

run;

C. Descriptive Statistics

proc means data=one;

class sex;

var test1 test2 test3;

run;

32

Complete Syntax – Example Programs

A. A SAS program that contains the data on an UNIX file.

data example;

infile=’mydata.txt’;

input id 1-2 score1 4-5 score2 7-8 sex 10 (a);

run;

data example;

total=score1+score2;

run;

proc freq data=example;

tables sex;

run;

B. A SAS program using data from a PC file.

data example;

infile=’mydata.txt’;

input id 1-2 score1 4-5 score2 7-8 sex 10 (a);

run;

data example;

total=score1+score2;

run;

proc means data=example;

var score1 score2 total;

run;

C. Saving a modified file.

data example;

infile=’mydata.txt’;

input id 1-2 score1 4-5 score2 7-8 sex 10 (a);

run;

data example;

total=score1+score2;

run;

data=highscores;

set example;

if score1>100 then output;

run;

33

Running Statistical Programs (SPSS/SAS) on UNIX

Running a statistical program with SPSS or SAS on the UNIX system requires

that you create (or FTP) your data file and your syntax file on the UNIX system (see

Using the Campus UNIX System). Once you have created your files, the UNIX

commands for running an SPSS or SAS program are similar. Both programs are most

easily run in “batch” mode. Batch mode processing means that you program will be run

with no visible output shown on the screen. All output is written to a text file which you

can view or edit after the program runs.

The SPSS command looks like this:

spss –m <inputfile > outputfile

where ‘inputfile’ is the name of the file that contains your program syntax and

‘outputfile’ is the file name you specify to receive your output for later viewing. The

program listing and output are all contained on this file.

The SAS command looks like this:

sas inputfile

where ‘inputfile’ is the name of the file that contains your program syntax. The output

for SAS is written to a file with the same name as your ‘inputfile’ but has a ‘.lst’ file

extension. For example, if your syntax file is named ‘program1’ the output will be

written to a file called ‘program1.lst’. SAS also creates a program “log” file with an

‘.log’ extension. This file is useful for debugging programs. In this case, this file would

be named ‘program1.log’.

Both the SPSS and SAS commands are entered at the UNIX prompt.

SPSS EXAMPLE

Data may be entered on a PC and “FTPed” to the UNIX system or entered in

PICO. Regardless, you should examine the data in the PICO editor to make sure

everything looks like it should. In this case, the data are named ‘example1.dat’. The data

for this example are shown in the Figure below.

34

Likewise, the SPSS program syntax may be “FTPed” or entered directly in the

PICO editor. The SPSS program syntax for these dat might look like that shown in the

figure below. In this case, the program syntax file is named ‘spssexample1.prg’.

35

To run this program, the following would be entered at the UNIX command

prompt:

Alpha 14:spss –m <spssexample.prg > spssexmple1.out

In this case, the output for the program will be written to a file called ‘spssexample1.out’.

This file can be viewed and edited in PICO and, in the case, would look like that in the

figure below.

36

SAS EXAMPLE

Our SAS example will use the same data as the SPSS example above. Remember

that the file is named ‘example1.dat’. The SAS program file, in this case named

‘sasexample1.prg’ is shown in the PICO editor below. Again, this file can be FTPed or

entered directly into the editor.

37

The syntax for running the SAS program at the UNIX prompt would look like

this:

alpha 12: sas sasexample1.prg

When the program executes, two additional files will be created: ‘sasexample1.prg.log’

will include the program log which can be inspected for errors and general program

information; and ‘sasexample1.prg.lst’ which will contain the program output. This latter

file is shown in the figure below.

38

39

Running Statistical Programs in a Windows Environment

Both SPSS and SAS are available in a Windows version that can be run on a PC.

The Windows versions of these programs make running a program a little less awkward

since there are no UNIX commands to worry about. The programs are costly, however

which makes their use outside of a business or academic environment impractical for

many people. SPSS offers a “Grad Pack” version of SPSS for Windows that is available

at the UWM Bookstore. The cost is around $200 and it is not upgradeable. SPSS for

Windows is available on many campus computers for use by students. SAS for Windows

is also available in some computer labs on campus.

SPSS and SAS for Windows involve the same file structure that is used in UNIX.

You will still be using three files: data file, syntax file and an output file. (NOTE: SAS

has a fourth log file.)

SPSS for Windows

In general, SPSS for Windows uses a Graphical User Interface (GUI) to

accomplish many tasks. It has a “pull down menu” structure that allows the user to

accomplish many common analyses. However, the menu structure makes some tasks

awkward and others impossible to do without writing some syntax. Consequently, this

presentation will involve the use of SPSS syntax in the Windows environment. Students

may explore the pull down menu structure on their own.

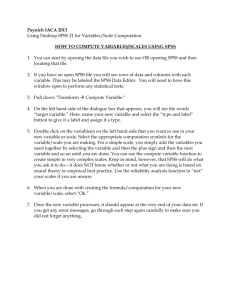

When SPSS is started, the user is presented with a blank data window that looks

like that in the figure below.

40

Data can be enter directly into this window (see Data Entry and Structure) or it can be

populated by reading and ASCII file from SPSS Syntax. In order to use SPSS syntax in

this environment, you must open a syntax window (File>New>Syntax if you’re writing a

new file or File>Open>Syntax for an existing syntax file). The figure below illustrates

what the GUI will look like with this additional syntax window open. The syntax

window works like a word processor that can be used to enter syntax. Note the arrow in

this figure that points to the SPSS “run” button. To run SPSS syntax, you need to first

highlight the syntax you wish to run, and then click the run button.

41

The syntax above is designed to read ASCII data as we did in the UNIX environment.

When this syntax is run, the data window is populated with data you have read and looks

like the figure below (NOTE: you may have to save the data before it is visible in this

data window – File>Save on the data window menu).

42

Note that the data have been read and are now in “spreadsheet” form. Note also the two

“tabs” at the bottom of the data window: Data View and Variable View. If you click on

variable view, you’ll see (and can edit) the characteristics of each variable. The variable

view for these data are shown in the figure below.

43

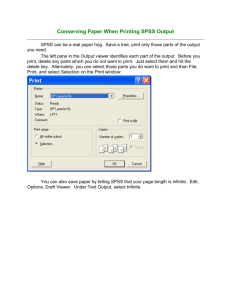

Anytime a statistical procedure is run, the output is written to an output file which is

contained in a third “window” in the SPSS GUI. For example, the figure below shows

some simple syntax for these data added to the syntax window. In this case, the

additional syntax specifies some descriptive statistics for a few of the variables. When

highlighted and run, this procedure writes output to the output window which is also

show in the figure below. The output window also works like a word processor, thus the

output can be edited, printed, saved or cut and pasted into another document.

SPSS data files can also be saved for future use with SPSS. Once data are entered

or read into the data window, they can be saved (File>Save or File> Save As) as an SPSS

system file. These files are saved with a ‘.sav’ file extension by default. Subsequent use

of these files requires the user to open these files in the data window (File>Open>Data)

which makes running the syntax to read the ASCII data unnecessary. SPSS syntax files

are save by default with an ‘.sps’ file extension and output files are saved with a ‘.spo’

file extension.

44

SAS for Windows

SAS for Windows works like SPSS for Windows but the GUI looks somewhat

different. When started, the SAS for Windows displays the GUI shown below. By

default, the program log (upper window in the figure) and syntax editor (lower window in

the figure) are shown. SAS syntax may be written or opened in the program editor.

Note also the two arrows in the figure below. Once a program is run, the output is

written to an output file, just like SPSS. The buttons at the bottom of the GUI are a

convenient way to view the contents of each window. Clicking on the buttons will

display the output, log and program editor windows respectively.

45

The Figure below shows the SAS GUI with syntax contained in the program editor. Note

the SAS “run” button indicated by the arrow at the top of the window. Click on this

button and the syntax in the editor will be run. If you highlight certain lines of syntax in

the editor, only this syntax will be run.

46

Once the syntax has been run, the output will be written to the output editor. The

figure below shows the output window visible are the above syntax has been run. Again,

the buttons at the bottom of the GUI can be used to display the log and syntax editor

respectively.

47

48

Appendix A SPSS Statistical procedures - Syntax examples varx = variable name)

(FREQUENCY DISTRIBUTIONS)

FREQUENCIES [VARIABLES=]varlist

[/FORMAT=[{DVALUE}] [{NOTABLE }]]

{AFREQ } {LIMIT(n)}

{DFREQ }

[/MISSING=INCLUDE]

[/BARCHART=[MIN(n)][MAX(n)][{FREQ(n) }]]

{PERCENT(n)}

[/PIECHART=[MIN(n)][MAX(n)][{FREQ }]

{PERCENT}

[{MISSING }]]

{NONMISSING}

[/HISTOGRAM=[MIN(n)][MAX(n)][{FREQ(n) }]

{PERCENT(n)}

[{NONORMAL}][INCREMENT(n)]]

{NORMAL }

[/NTILES=n]

[/PERCENTILES=value list]

[/STATISTICS=[DEFAULT][MEAN][STDDEV][SUM]

[MINIMUM][MAXIMUM][RANGE]

[SEMEAN][VARIANCE][SKEWNESS][SESKEW]

[MODE][KURTOSIS][SEKURT][MEDIAN]

[ALL][NONE]]

[/GROUPED=varlist [{(width)

}]]

{(boundary list)}

[/ORDER = [{ANALYSIS}]

[{VARIABLE}]

Simple Example:

Frequencies

Variables=var1 var2.

49

(DESCRIPTIVE STATISTICS)

DESCRIPTIVES [VARIABLES=] varname[(zname)] [varname...]

[/MISSING={VARIABLE**} [INCLUDE]]

{LISTWISE }

[/SAVE]

[/STATISTICS=[DEFAULT**][MEAN**][MIN**][SKEWNESS]]

[STDDEV** ][SEMEAN][MAX**][KURTOSIS]

[VARIANCE ][SUM ][RANGE][ALL]

[/SORT=[{MEAN }] [{(A)}]]

{SMEAN } {(D)}

{STDDEV }

{VARIANCE}

{KURTOSIS}

{SKEWNESS}

{RANGE }

{MIN }

{MAX }

{SUM }

{NAME }

Simple example:

Descriptives

Variables=var1 var2 var3/

Statistics=mean sddev min max.

50

(T TEST – INDEPENDENT GROUPS)

T-TEST GROUPS=varname({1,2**

})

{value

}

{value,value}

/VARIABLES=varlist

[/MISSING={ANALYSIS**}[INCLUDE]]

{LISTWISE }

[/CRITERIA=CI[{(0.95 )}]]

{(value)}

Simple Example:

t-test

groups=var1(1 2)/

variables=var2 var3.

(T-TEST – DEPEDNENT GROUP)

T-TEST PAIRS=varlist[WITH varlist

[(PAIRED)]]

[/varlist...]

[/MISSING={ANALYSIS**}[INCLUDE]]

{LISTWISE }

[/CRITERIA=CI[{(0.95 )}]]

{(value)}

Simple Example:

t-test

pairs=var1 with var2.

51

(CHI SQUARE TEST OF ASSOCIATION)

CROSSTABS [TABLES=]varlist BY varlist [BY...]

[/varlist...]

[/MISSING={TABLE**}]

{INCLUDE}

[/FORMAT={TABLES**}{AVALUE**}]

{NOTABLES}{DVALUE }

[/CELLS=[COUNT**][ROW ][EXPECTED][SRESID ]]

[COLUMN][RESID ][ASRESID]

[TOTAL ][ALL ][NONE ]

[/WRITE[={NONE** }]]

{CELLS }

[/STATISTICS=[CHISQ][LAMBDA][BTAU][GAMMA][ETA ]]

[PHI ][UC ][CTAU][D ][CORR]

[CC ][KAPPA ][RISK][MCNEMAR] [CMH [(value)]]

[ALL ][NONE]

[/METHOD={MC [CIN({99.0 })] [SAMPLES({10000})]}]††

{value}

{value}

{EXACT [TIMER({5 })]

}

{value}

[/BARCHART]

**Default if the subcommand is omitted.

††The METHOD subcommand is available only if the Exact Tests Option is installed.

Simple Example:

Crosstabs

Tables=var1 var2 by var3/

Statistic=chisq/

Cells=count row column total.

52

(FACTORIAL ANOVA)

UNIANOVA dependent varlist

[BY factor list [WITH covariate list]]

[/RANDOM=factor factor...]

[/REGWGT=varname]

[/METHOD=SSTYPE({1 })]

{2 }

{3**}

{4 }

[/INTERCEPT=[INCLUDE**] [EXCLUDE] ]

[/MISSING=[INCLUDE] [EXCLUDE**]]

[/CRITERIA=[EPS({1E-8**})][ALPHA({0.05**}) ]

{a }

{a }

[/PRINT = [DESCRIPTIVE] [HOMOGENEITY] [PARAMETER][ETASQ] [RSSCP]

[GEF] [LOF] [OPOWER] [TEST [([SSCP] [LMATRIX]]

[/PLOT=[SPREADLEVEL] [RESIDUALS]

[PROFILE (factor factor*factor factor*factor*factor ...) ]

[/TEST=effect VS {linear combination [DF(df)]}]

{value DF (df)

}

[/LMATRIX={["label"] effect list effect list ...;...}]

{["label"] effect list effect list ... }

{["label"] ALL list; ALL...

}

{["label"] ALL list

}

[/CONTRAST (factor name)={DEVIATION[(refcat)]** ‡ } ]

{SIMPLE [(refcat)]

}

{DIFFERENCE

}

{HELMERT

}

{REPEATED

}

{POLYNOMIAL [({1,2,3...})]}

{

{metric } }

{SPECIAL (matrix)

}

[/KMATRIX= {list of numbers } ]

{list of numbers;...}

[/POSTHOC =effect effect...([SNK] [TUKEY] [BTUKEY][DUNCAN]

[SCHEFFE] [DUNNETT(refcat)] [DUNNETTL(refcat)]

[DUNNETTR(refcat)] [BONFERRONI] [LSD] [SIDAK]

[GT2] [GABRIEL] [FREGW] [QREGW] [T2] [T3] [GH][C]

[WALLER ({100** })]]

{kratio}

[VS effect]

[/EMMEANS=TABLES({OVERALL

})

{factor

}

{factor*factor...

}

WITH (covariate=MEAN covariate=MEAN)

COMPARE ADJ (LSD)

53

(BONFERRONI)

(SIDAK)

[/SAVE=[tempvar [(name)]] [tempvar [(name)]]...]

[/OUTFILE=[{COVB (file)}]

{CORB (file)}

[/DESIGN={[INTERCEPT...] }]

{[effect effect...]}

** Default if subcommand or keyword is omitted.

Simple Example:

Unianova

Var1 by var2 var3/

Design=var2 var3 var2*var3.

54

(REPEATED MEASURES ANOVA)

GLM dependent varlist

[BY factor list [WITH covariate list]]

[/WSFACTOR=name levels[{DEVIATION[(refcat)]

}] name...

{SIMPLE [(refcat)]

}

{DIFFERENCE

}

{HELMERT

}

{REPEATED

}

{POLYNOMIAL [({1,2,3...})]**}

{

{metric } }

{SPECIAL (matrix)

}

[/MEASURE=newname newname...]

[/WSDESIGN=effect effect...]†

[/RANDOM=factor factor...]

[/REGWGT=varname]

[/METHOD=SSTYPE({1 })]

{2 }

{3**}

{4 }

[/INTERCEPT=[INCLUDE**] [EXCLUDE] ]

[/MISSING=[INCLUDE] [EXCLUDE**]]

[/CRITERIA=[EPS({1E-8**})][ALPHA({0.05**}) ]

{a }

{a }

[/PRINT = [DESCRIPTIVE] [HOMOGENEITY] [PARAMETER][ETASQ] [RSSCP]

[GEF] [LOF] [OPOWER] [TEST [([SSCP] [LMATRIX] [MMATRIX])] ]

[/PLOT=[SPREADLEVEL] [RESIDUALS]

[PROFILE (factor factor*factor factor*factor*factor ...) ]

[/TEST=effect VS {linear combination [DF(df)]}]

{value DF (df)

}

[/LMATRIX={["label"] effect list effect list ...;...}]

{["label"] effect list effect list ... }

{["label"] ALL list; ALL...

}

{["label"] ALL list

}

[/CONTRAST (factor name)={DEVIATION[(refcat)]** ‡ } ]

{SIMPLE [(refcat)]

}

{DIFFERENCE

}

{HELMERT

}

{REPEATED

}

{POLYNOMIAL [({1,2,3...})]}

{

{metric } }

{SPECIAL (matrix)

}

[/MMATRIX= {["label"] depvar value depvar value ...;["label"]...} ]

{["label"] depvar value depvar value ...

}

{["label"] ALL list; ["label"] ...

}

{["label"] ALL list

}

55

[/KMATRIX= {list of numbers } ]

{list of numbers;...}

[/POSTHOC =effect effect...([SNK] [TUKEY] [BTUKEY][DUNCAN]

[SCHEFFE] [DUNNETT(refcat)] [DUNNETTL(refcat)]

[DUNNETTR(refcat)] [BONFERRONI] [LSD] [SIDAK]

[GT2] [GABRIEL] [FREGW] [QREGW] [T2] [T3] [GH][C]

[WALLER ({100** })]]

{kratio}

[VS effect]

[/EMMEANS=TABLES({OVERALL

}) ]

{factor

}

{factor*factor...

}

{wsfactor

}

{wsfactor*wsfactor ... }

{factor*...wsfactor*...}

WITH (covariate=MEAN covariate=MEAN)

COMPARE ADJ (LSD)

(BONFERRONI)

(SIDAK)

[/SAVE=[tempvar [(list of names)]] [tempvar [(list of names)]]...]

[/OUTFILE=[{COVB (file)}]

{CORB (file)}

[/DESIGN={[INTERCEPT...] }]

{[effect effect...]}

† WSDESIGN uses the same specification as DESIGN,

with only within-subjects factors.

‡ DEVIATION is the default for between-subjects factors

while POLYNOMIAL is the default for within-subjects factors.

** Default if subcommand or keyword is omitted.

Simple Example:

Glm

Var1 var2 var3 by var4 var5/

Wsfactor=factor1 3/

Wsdesign=factor1/

Design=var4 var5 var4*var5.

56

(CORRELATIONS)

CORRELATIONS [VARIABLES=] varlist [WITH varlist]

[/varlist...]

[/MISSING={PAIRWISE**} [INCLUDE]]

{LISTWISE }

[/PRINT={TWOTAIL**} {SIG**}]

{ONETAIL } {NOSIG}

[/MATRIX=OUT(file)]

[/STATISTICS=[DESCRIPTIVES][XPROD][ALL]]

**Default if the subcommand is omitted.

Default for /MATRIX OUT is the working data file.

Simple Example:

correlations

variables= var1 var2 var3.

57

(REGRESSION)

REGRESSION [MATRIX=[IN(file)] [OUT(file)]]

[/VARIABLES={varlist }]

{(COLLECT)**} Command Syntax

{ALL

}

[/DESCRIPTIVES=[DEFAULTS][MEAN][STDDEV][CORR][COV]

[VARIANCE][XPROD][SIG][N][BADCORR]

[ALL][NONE**]]

[/SELECT={varname relation value}

[/MISSING=[{LISTWISE**

}] [INCLUDE]]

{PAIRWISE

}

{MEANSUBSTITUTION}

[/REGWGT=varname]

[/STATISTICS=[DEFAULTS**][R**][COEFF**]

[ANOVA**][OUTS**]

[ZPP][CHA][CI][F][BCOV]

[SES][XTX][COLLIN]

[TOL][SELECTION][ALL]]

[/CRITERIA=[DEFAULTS**][TOLERANCE({0.0001**})]

{value }

[PIN({0.05**})][POUT({0.10**})]

{value }

{value }

[FIN({3.84 })][FOUT({2.71 })]

{value}

{value}

[CIN({ 95**})]][MAXSTEPS(n)]

{value}

[/{NOORIGIN**}]

{ORIGIN }

/DEPENDENT=varlist

[/METHOD=]{STEPWISE [varlist]

}

{FORWARD [varlist]

}

{BACKWARD [varlist]

}

{ENTER [varlist]

}

{REMOVE varlist

}

{TEST(varlist)(varlist)...}

[/RESIDUALS=[DEFAULTS][ID(varname)]

[DURBIN][{SEPARATE}]

{POOLED }

[HISTOGRAM({ZRESID })]

{tempvarlist}

[OUTLIERS({ZRESID })]

{tempvarlist}

[NORMPROB({ZRESID })]

{tempvarlist}

[/CASEWISE=[DEFAULTS][{OUTLIERS({ 3 })}]

58

{

{value} }

{ALL

}

[/SCATTERPLOT=(varname,varname)]

[/PARTIALPLOT={ALL }]

{varlist}

[OUTFILE={COVB (filename)}] [MODEL (filename)]

{CORB (filename)}

[/SAVE=tempvar[(name)][tempvar[(name)]...][FITS]]

Temporary variables for residuals analysis are:

PRED,ADJPRED,SRESID,MAHAL,RESID,ZPRED,SDRESID,COOK,

DRESID,ZRESID,SEPRED,LEVER,DFBETA,SDBETA,DFFIT,SDFFIT,

COVRATIO,MCIN,ICIN.

SAVE FITS saves DFFIT,SDFIT,DFBETA,SDBETA,COVRATIO.

**Default if the subcommand is omitted.

Simple Example:

regression

/dependent var1

/method=enter var2 var3.

59

Appendix B SAS Statistical procedures - Syntax examples varx = variable name)

(FREQUENCY DISTRIBUTIONS)

proc freq

data=info

page;

by x;

tables y*z

/chisq

expected

exact

sparse

nocum

nopercent

nocol

norow;

The backslash MUST be used (once) to select any of the options of the tables statement.

The data= line is required and specifies the data set to be analyzed.

The optional by statement produces separate analyses for each value of the by variable(s).

The tables statement produces a table of values for analysis. The form y*z produces a 2

way table with the values of y defining table rows and the values of z defining table

columns. A 1 way table is obtained by specifying a single variable name. Multiple tables

may be requested within a single tables statement. The short form (q1--q3)*a requests 3

tables and is equivalent to q1*a q2*a q3*a. Note the double hyphen required here.

Options for the tables statement follow the /.

The chisq option to the tables statement performs the standard Pearson chi-square test on

the table(s) requested.

The expected option prints the expected number of observations in each cell under the

null hypothesis.

The exact option requests Fisher's exact test for the table(s). This is automatically

computed for 2 x 2 tables.

The sparse option produces a full table, even if the table has many cells containing no

observations.

The nocum option suppresses printing of cumulative frequencies and percentages in the

table.

60

The nopercent option suppresses printing of the cell percentages and cumulative

percentages.

The nocol and norow options suppress the printing of column and row percentages.

Simple Example:

proc freq data=example;

tables=var1 var2;

run;

61

(DESCRIPTIVE STATISTICS)

proc means

data=first

alpha=0.10

vardef=n

mean std t prt n lclm uclm;

var x y;

by x;

The required data=first statement selects the data set used in the analysis.

The alpha= option sets the significance level for any confidence intervals computed

(defaults to alpha=0.05 if not specified).

The vardef=n option sets the divisor for the sample variance to be the sample size n

(default is to use the degrees of freedom n-1 and is the same as vardef=df). NOTE: do

not use the vardef option if you also use the t and/or prt options. THE T AND PRT

VALUES GENERATED WHEN VARDEF=N ARE INCORRECT.

The optional last line gives a list of the statistics to be computed. The mean and std

options give the sample mean and sample standard deviation for each variable in the

analysis.

The t option computes the value of the t-statitistic for a test of zero mean for each

variable.

The prt option computes the p-value for a test of the null hypothesis of zero mean for

each variable.

The n option prints the sample size.

The lclm and uclm options produce the lower and upper endpoints of a 100(1-alpha)%

confidence interval for the mean.

If no statistics are specified, n mean std min and max are printed for each variable. Min

and max are the sample minimum value and maximum value.

The var option can be used to limit the output to a specific list of variables in the data set.

If it is not used, the requested statistics will be computed for all variables in the data set.

The optional by statement generates separate analyses for each level of the by variable(s).

Simple Example:

proc means data=example mean;

var var1 var2 var3;

run;

62

(T TEST – INDEPENDENT GROUPS)

proc ttest data=second;

class type;

var x y;

THE CLASS SPECIFICATION IS REQUIRED. The class statement specifies a

variable (here type) in the data set which takes exactly two values. The values of this

class variable define the two populations to be compared.

The optional var statement restricts comparisons to the variables listed; if not used, all

variables in the data set (execpt the class variable) are compared pairwise.

Simple Example:

proc ttest data=example;

class var1;

var var2 var3;

run;

(T-TEST – DEPEDNENT GROUP)

In SAS, the dependent group t-test is performed by PROC MEANS after the creation of a

“gain” variable from the two dependent

measures.

Simple Example:

gain=var1-var2;

proc means data=example mean stderr t prt;

var gain;

run;

63

(CHI SQUARE TEST OF ASSOCIATION)

See PROC FREQ above.

Simple Example:

proc freq data=example;

tables=var1*var2 / expected chisq;

run;

(FACTORIAL ANOVA)

PROC ANOVA handles only balanced ANOVA designs.

PROC ANOVA

CLASS

MODEL

MEANS

DATA=datasetname;

factorvars;

responsevar = factorvars;

factorvars / BON

/* See below

/* Bonferroni t-tests,

g=r(r-1)/2

T or LSD

/* Unprotected t-tests

TUKEY

/* Tukey studentized range

SCHEFFE

/* Scheffe contrasts

ALPHA=pvalue /* default: 5%

CLDIFF

/* Confidence limits

LINES

/* Non-significant subsets

;

/* use LINES or CLDIFF

Simple Example:

proc anova data=example;

class var1 var2;

model var3=var1 var2 var1*var2; (or var1|var2;)

run;

PROC GLM handles both balanced and unbalanced designs but uses more computing

resources.

PROC GLM options;

CLASS variables;

MODEL dependents = independents / options;

ABSORB variables;

BY variables;

FREQ variables;

64

*/

*/

*/

*/

*/

*/

*/

*/

*/

ID variables;

WEIGHT variables;

CONTRAST 'label' effect values ... / options;

ESTIMATE 'label' effect values ... / options;

LSMEANS effects / options;

MANOVA H = effects E = effect M = equations MNAMES = names PREFIX =

name / options;

MEANS effects / options;

OUTPUT OUT=SASdataset keyword=names ...;

RANDOM factorname levels(levelvalues) transformation[, ... ] / options;

REPEATED factorname levels(levelvalues) transformation[,...] / options;

TEST H = effects E = effect / options;

Simple Example:

proc glm data=example;

class var1 var2;

model var3=var1 var2 var1*var2; (or var1|var2;)

run;

(REPEATED MEASURES ANOVA)

PROC GLM ;

MODEL dv1 dv2 dv3 dv4 dv5 = /NOUNI;

REPEATED reptdfac /PRINTE ;

RUN ;

Simple Example:

proc anova data=example;

model var1 var2 var3=var4|var5 / nouni;

repeated factor1 3 (1 2 3);

run;

65

(CORRELATIONS)

PROC CORR options;

VAR variables;

WITH variables;

PARTIAL variables;

WEIGHT variables;

FREQ variables;

BY variables;

PROC CORR Options:

DATA=SASdaataset -- Names SAS dataset to be used

OUTH=SASdaataset -- Names SAS dataset containing Hoeffding statistics

OUTK=SASdaataset -- Names SAS dataset containing Kendall correlations

OUTP=SASdaataset -- Names SAS dataset containing Pearson correlations

OUTS=SASdaataset -- Names SAS dataset containing Spearman correlations.

HOEFFDING -- requests Hoeffding's D statistic

KENDALL -- requests Kendall's tau-b coefficients

PEARSON -- requests Pearson correlation coefficients

SPEARMAN -- requests Spearman coefficients

NOMISS -- missing values to any variable to be dropped from all calculations

VARDEF=divisor -- specifies the divisor used in calculation of variances

SINGULAR=p -- criterion for determining singularity

ALPHA -- Cronbach's alpha

COV -- covariances

CSSCP -- corrected sums of squares and cross products

NOCORR -- Pearson correlations not calculated or printed

SSCP -- sums of squares and cross products

BEST=n -- prints n correlations with largest absolute values

NOPRINT -- Suppresses printed output

NOPROB -- suppresses printing of significance probabilities

NOSIMPLE -- suppresses printing of simple descriptive statistics

rank -- print correlations in order from highest to lowest

Simple Example:

proc corr data=example;

var var1 var2 var3;

run;

66

(REGRESSION)

PROC REG options;

MODEL dependents=regressors / options;

VAR variables;

FREQ variable;

WEIGHT variable;

ID variable;

OUTPUT OUT=SASdataset keyword=names...;

PLOT yvariable*xvariable = symbol ...;

RESTRICT linear_equation,...;

TEST linear_equation,...;

MTEST linear_equation,...;

BY variables;

The PROC REG statement is always accompanied by one or more MODEL statements to

specify regression models. One OUTPUT statement may follow each MODEL statement.

Several RESTRICT, TEST, and MTEST statements may follow each MODEL.

WEIGHT, FREQ, and ID statements are optionally specified once for the entire PROC

step. The purposes of the statements are:

The MODEL statement specifies the dependent and independent variables in the

regression model.

The OUTPUT statement requests an output data set and names the variables to

contain predicted values, residuals, and other output values.

The ID statement names a variable to identify observations in the printout.

The WEIGHT and FREQ statements declare variables to weight observations.

The BY statement specifies variables to define subgroups for the analysis. The

analysis is repeated for each value of the BY variable.

Simple Example:

proc reg data=example;

model var1=var2 var3;

run;

67