Clusters - University of the Basque Country

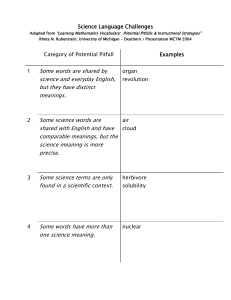
