Digital Communication and Computation


Final Report on

Hidden Markov Models

Computer Science and Information Engineering, 4th year, B86506009, 鄭家駿

I. Background of Speech Recognition

1. A Mathematical Formulation

A = a1, a2, …, am

The acoustic evidence (data): a sequence of symbols taken from some fixed alphabet, on the basis of which the recognizer will decide which words were spoken.

W = w1, w2, …, wn

A string of n words, each belonging to a fixed and known vocabulary V.

If P(W|A) denotes the probability that the words W were spoken, given that the evidence A was observed, then the recognizer should decide in favor of a word string Ŵ satisfying

$$\hat{W} = \arg\max_{W} P(W \mid A)$$

That is, the recognizer will pick the most likely word string given the observed

acoustic evidence.

By Bayes' rule,

$$P(W \mid A) = \frac{P(W)\,P(A \mid W)}{P(A)}$$

The maximization is carried out with A fixed, so P(A) does not affect the choice of W and

$$\hat{W} = \arg\max_{W} P(W)\,P(A \mid W) \tag{1}$$

where P(W) is the probability that the word string W will be uttered, and P(A|W) is the probability that the acoustic evidence A will be observed when the speaker says W.
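The decision rule can be illustrated with a minimal Python sketch. The candidate word strings and all probabilities below are made-up numbers chosen only for illustration; a real recognizer would obtain P(W) from a language model and P(A|W) from an acoustic model.

```python
# Toy illustration of W_hat = argmax_W P(W) * P(A|W).
# The candidates and their probabilities are hypothetical.
candidates = {
    "recognize speech":   {"prior": 0.6, "likelihood": 0.020},
    "wreck a nice beach": {"prior": 0.1, "likelihood": 0.050},
    "recognized peach":   {"prior": 0.3, "likelihood": 0.010},
}


def score(entry):
    """P(W) * P(A|W); P(A) is the same for every W and is left out."""
    return entry["prior"] * entry["likelihood"]


def decode(cands):
    """Return the word string with the highest P(W) * P(A|W)."""
    return max(cands, key=lambda w: score(cands[w]))


best = decode(candidates)
print(best)
```

Here the string with the highest acoustic likelihood is not the one selected, because its prior probability is low: both factors of the product matter.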

2. Acoustic Processing

This is the first step in speech recognition. That is, one needs to decide on a front

end that will transform the pressure waveform into the symbols ai with which the

recognizer will deal.

3. Acoustic Modeling

To evaluate (1) directly, we would need the value of P(A|W) for every possible pairing of A and W. Because the number of such pairings is too large to handle, we need a statistical acoustic model of the speaker’s interaction with the acoustic processor. The model must account for the way the speaker pronounces W, the ambience, the microphone placement and characteristics, and the acoustic processing performed by the front end. The usual choice is the hidden Markov model (HMM).

4. Language Modeling

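In (1), we also need to know P(W), the a priori probability that the speaker wishes to utter W. We decompose P(W) as

$$P(W) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})$$

In practice, the histories w1, …, wi-1 take too many distinct values for these probabilities to be estimated. Therefore, we map each history to an equivalence class Φ(w1, …, wi-1) and condition on the class instead of the entire history:

$$P(W) = \prod_{i=1}^{n} P(w_i \mid \Phi(w_1, \ldots, w_{i-1}))$$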
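As a minimal sketch of the equivalence-class idea, the Python code below takes Φ to be the last word of the history, which gives a bigram model. This choice of Φ, the table `bigram`, the start marker `<s>` and all probabilities are assumptions made for illustration; the report does not fix a particular Φ.

```python
# Hypothetical bigram probabilities P(w_i | previous word).
# "<s>" is an assumed marker for the empty history.
bigram = {
    ("<s>", "we"): 0.5,
    ("we", "recognize"): 0.2,
    ("recognize", "speech"): 0.4,
}


def phi(history):
    """Equivalence class of a history: here simply its last word."""
    return history[-1] if history else "<s>"


def sentence_prob(words, table):
    """P(W) = product over i of P(w_i | phi(w_1..w_{i-1})).

    Pairs missing from the table are given probability 0.
    """
    prob = 1.0
    for i, word in enumerate(words):
        prob *= table.get((phi(words[:i]), word), 0.0)
    return prob


p_sentence = sentence_prob(["we", "recognize", "speech"], bigram)
print(p_sentence)
```

With this Φ, the number of parameters grows with the square of the vocabulary size instead of exponentially with the length of the history.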

II. Hidden Markov Chains

1. HMM Concepts

We define

(1) An output alphabet Y = {0, 1, …, b-1}

(2) A state space I = {1, 2, …, c} with a unique starting state s0

(3) A probability distribution of transitions from state s to state s’: p(s’|s)

(4) An output probability distribution associated with transitions from state s to state s’: q(y|s, s’)

Then the probability of observing an HMM output string y1, y2, …, yk is given by

$$P(y_1, y_2, \ldots, y_k) = \sum_{s_1, \ldots, s_k} \prod_{i=1}^{k} p(s_i \mid s_{i-1})\, q(y_i \mid s_{i-1}, s_i) \tag{2}$$
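Equation (2) can be evaluated literally by enumerating every state sequence, as in the following Python sketch. It uses the joint probabilities p(y, s’|s) = p(s’|s) q(y|s, s’) listed for Fig. 4 of this report and assumes that state 1 is the starting state s0 (an inference from the γ1 values of the trace, not something the text states). The names `P`, `STATES` and `brute_force_prob` are illustrative.

```python
from itertools import product

# Joint probabilities p(y, s'|s), keyed as (s, y, s').
# Values are those listed for Fig. 4; state 1 is assumed to be s0.
P = {
    (1, 0, 1): 0.2, (1, 0, 2): 0.1, (1, 0, 3): 0.3, (1, 1, 2): 0.4,
    (2, 0, 3): 0.4, (2, 1, 3): 0.6,
    (3, 1, 1): 0.5, (3, 1, 2): 0.5,
}
STATES = (1, 2, 3)


def brute_force_prob(outputs, s0=1):
    """Sum over all state sequences s1..sk of the product in equation (2)."""
    total = 0.0
    for path in product(STATES, repeat=len(outputs)):
        prob, prev = 1.0, s0
        for y, s in zip(outputs, path):
            # A transition absent from the table has probability 0.
            prob *= P.get((prev, y, s), 0.0)
            prev = s
        total += prob
    return total


p_0110 = brute_force_prob([0, 1, 1, 0])
print(p_0110)
```

For the output sequence 0110 this evaluates to about 0.054. The enumeration visits c^k state sequences, which is why a recursive computation is preferable for longer outputs.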

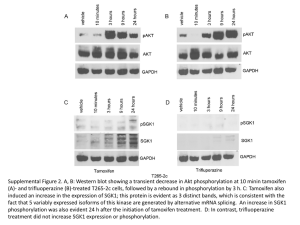

Figure 1 is an example of an HMM with b = 2 and c = 3 in which outputs are attached to transitions.

[Fig. 1: state diagram of the example HMM with states 1, 2, 3 and output labels 0 and 1 on the transitions]

2. The Trellis

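Figure 2 shows the trellis stages for yi = 0 and yi = 1 corresponding to the HMM of Figure 1. Each stage connects the states 1, 2, 3 at one time step to the states 1, 2, 3 at the next, using only the transitions that can produce the given output.

[Fig. 2: trellis stages for y = 0 and y = 1 over the states 1, 2, 3]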

Figure 3 shows the trellis corresponding to the output sequence 0110. The required

probability P(0110) is equal to the sum of the probabilities of all complete paths

through the trellis that start in the obligatory starting state.

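[Fig. 3: trellis for the output sequence 0110, starting in state S0, with the states 1, 2, 3 at each level and the outputs 0, 1, 1, 0 labelling the four stages]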

The probability P(y1, y2, …, yk) can be obtained recursively. Define

$$\alpha_i(s) = P(y_1, y_2, \ldots, y_i,\ s_i = s) \tag{3}$$

the probability that the output sequence is y1, y2, …, yi and the state reached after the ith output is s. With the boundary conditions α0(s) = 1 for s = s0 and α0(s) = 0 otherwise, and setting

$$p(y_i, s \mid s') = q(y_i \mid s', s)\, p(s \mid s') \tag{4}$$

we get the recursion

$$\alpha_i(s) = \sum_{s'} p(y_i, s \mid s')\, \alpha_{i-1}(s') \tag{5}$$

By definition, the desired probability is

$$P(y_1, y_2, \ldots, y_k) = \sum_{s} \alpha_k(s) \tag{6}$$
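The recursion can be sketched in a few lines of Python. The table below holds the joint probabilities p(y, s’|s) listed for Fig. 4 of this report, with state 1 assumed to be s0; the function name `forward` is illustrative.

```python
# Joint probabilities p(y, s'|s), keyed as (s, y, s'), as listed for Fig. 4.
P = {
    (1, 0, 1): 0.2, (1, 0, 2): 0.1, (1, 0, 3): 0.3, (1, 1, 2): 0.4,
    (2, 0, 3): 0.4, (2, 1, 3): 0.6,
    (3, 1, 1): 0.5, (3, 1, 2): 0.5,
}
STATES = (1, 2, 3)


def forward(outputs, s0=1):
    """Return (alpha after the last output, probability of the outputs)."""
    # Boundary condition: alpha_0(s) = 1 for s = s0 and 0 otherwise.
    alpha = {s: 1.0 if s == s0 else 0.0 for s in STATES}
    for y in outputs:
        # Recursion (5): sum over the predecessor states s'.
        alpha = {
            s: sum(P.get((prev, y, s), 0.0) * alpha[prev] for prev in STATES)
            for s in STATES
        }
    # Equation (6): sum over the final states.
    return alpha, sum(alpha.values())


alpha_last, prob = forward([0, 1, 1, 0])
print(alpha_last, prob)
```

For the output sequence 0110 the final values are approximately α(1) = 0.006, α(2) = 0.003 and α(3) = 0.045, which sum to about 0.054. The cost is proportional to k times the number of transitions, instead of growing exponentially with k as direct enumeration of paths does.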

3. Search for the Likeliest State Transition Sequence

Given an observed output sequence y1, y2, …, yk, which state sequence s1, s2, …, sk is most likely to have generated it? Note that

P(s1, s2,…,sk |y1, y2,…,yk, s0) = P(s1, s2,…,sk, y1, y2,…,yk|s0)/ P(y1, y2,…,yk|s0).

Since the denominator does not depend on the state sequence, it suffices to maximize the numerator. Because the process is Markov, for all i,

P(s1, s2,…,sk , y1, y2,…,yk|s0) = P(s1,…,si , y1,…,yi|s0) P(si+1,…,sk , yi+1,…,yk|si)

Therefore, we can find the maximally likely sequence s1,…,si, si+1,…,sk by finding

first for each state s on trellis level i the most likely state sequence s1(s),…,si-1(s)

leading into state s, then finding the most likely sequence si+1(s),…,sk(s) leading

out of state s, and finally finding the state s on level i for which the complete

sequence s1(s),…,si-1(s), si = s, si+1(s),…,sk(s) has the highest probability. This leads

to the Viterbi algorithm.

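(1) Define the recursive formula

$$\begin{aligned}
\gamma_i(s_i) &= \max_{s_1, \ldots, s_{i-1}} P(s_1, \ldots, s_i,\ y_1, \ldots, y_i \mid s_0) \\
&= \max_{s_{i-1}} p(y_i, s_i \mid s_{i-1}) \max_{s_1, \ldots, s_{i-2}} P(s_1, \ldots, s_{i-1},\ y_1, \ldots, y_{i-1} \mid s_0) \\
&= \max_{s_{i-1}} p(y_i, s_i \mid s_{i-1})\, \gamma_{i-1}(s_{i-1})
\end{aligned} \tag{7}$$

(2) Set γ0(s0) = 1 and γ0(s) = 0 for s ≠ s0.

(3) Use (7) to compute γ1(s) for all states of the trellis’s first column:

γ1(s) = p(y1, s|s0) γ0(s0) = p(y1, s|s0)

(4) Compute γ2(s) for all states of the trellis’s second column, and purge every transition from a state s1 into s for which γ2(s) > p(y2, s|s1) γ1(s1). If more than one transition into s remains, arbitrarily select one to keep and purge the others.

(5) In general, compute γi(s) for all states of the trellis’s ith column and purge all but one transition into each state.

(6) Find the state s in the trellis’s kth column for which γk(s) is maximal:

$$\max_{s_1, \ldots, s_k} P(s_1, \ldots, s_k,\ y_1, \ldots, y_k \mid s_0) = \max_{s} \gamma_k(s) \tag{8}$$

The surviving transitions traced back from this state to s0 give the most likely state sequence.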

Figures 4 to 8 trace the algorithm on the example of Figure 1 for the output sequence 0110.

[Fig. 4: state diagram with states 1, 2, 3 and the following transition labels p(y, s’|s)]

p(0,1|1) = 0.2, p(0,2|1) = 0.1, p(0,3|1) = 0.3, p(1,2|1) = 0.4

p(0,3|2) = 0.4, p(1,3|2) = 0.6

p(1,1|3) = 0.5, p(1,2|3) = 0.5

[Fig. 5: trellis for the outputs 0, 1, 1, 0 after the first stage, starting in S0, with γ1(1) = 0.2, γ1(2) = 0.1, γ1(3) = 0.3]

[Fig. 6: trellis after the second stage, with γ1(1) = 0.2, γ1(2) = 0.1, γ1(3) = 0.3 and γ2(1) = 0.15, γ2(2) = 0.15, γ2(3) = 0.06]

[Fig. 7: trellis after the third stage, with γ2(1) = 0.15, γ2(2) = 0.15, γ2(3) = 0.06 and γ3(1) = 0.03, γ3(2) = 0.06, γ3(3) = 0.09]

[Fig. 8: trellis after the fourth stage, with γ3(1) = 0.03, γ3(2) = 0.06, γ3(3) = 0.09 and γ4(1) = 0.006, γ4(2) = 0.003, γ4(3) = 0.024]

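The largest value in the last column is γ4(3) = 0.024. Tracing the surviving transitions back from state 3 gives the most likely state sequence s0, s1, …, s4 = 1, 3, 1, 2, 3, whose probability is 0.3 × 0.5 × 0.4 × 0.4 = 0.024.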
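The trace can be reproduced with a short Python sketch of steps (1) to (6). It uses the joint probabilities listed for Fig. 4 and assumes that state 1 is s0; the names `viterbi`, `back` and `pointers` are illustrative, and ties are broken by state order, which is one possible reading of "arbitrarily select one".

```python
# Joint probabilities p(y, s'|s), keyed as (s, y, s'), as listed for Fig. 4.
P = {
    (1, 0, 1): 0.2, (1, 0, 2): 0.1, (1, 0, 3): 0.3, (1, 1, 2): 0.4,
    (2, 0, 3): 0.4, (2, 1, 3): 0.6,
    (3, 1, 1): 0.5, (3, 1, 2): 0.5,
}
STATES = (1, 2, 3)


def viterbi(outputs, s0=1):
    """Return (most likely state sequence including s0, its probability)."""
    gamma = {s: 1.0 if s == s0 else 0.0 for s in STATES}  # step (2)
    back = []  # back[i][s]: surviving predecessor of s in column i + 1
    for y in outputs:
        new_gamma, pointers = {}, {}
        for s in STATES:
            # Recursion (7): keep only the best transition into s.
            best_prev = max(STATES, key=lambda r: P.get((r, y, s), 0.0) * gamma[r])
            new_gamma[s] = P.get((best_prev, y, s), 0.0) * gamma[best_prev]
            pointers[s] = best_prev
        gamma = new_gamma
        back.append(pointers)
    # Step (6): best final state, then follow the surviving transitions back.
    state = max(STATES, key=lambda s: gamma[s])
    best_prob = gamma[state]
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path, best_prob


path, best_prob = viterbi([0, 1, 1, 0])
print(path, best_prob)
```

The returned path starts with s0 and therefore has k + 1 entries for k outputs. Storing one back pointer per state and column is what "purging all but one transition" amounts to in code.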

4. Estimation of Statistical Parameters of HMMs

In most cases, the parameters of an HMM are not available. We want to use HMMs to model data generation, and the most we can expect is to be given a sample of such data. We wish to find the parameter specification that allows the HMM to account best for the observed data as well as for unseen future data.

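Consider the following example, writing q(y|t) for q(y|s, s’), where t is the transition from s to s’.

[Figure: example HMM with states S1, S2, S3 and transitions t1, t2, t3, t4, t5]

p(t1) + p(t2) = 1; p(t4) + p(t5) = 1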

We will estimate the probabilities p(ti) and q(y|t), where y = 0, 1 and i = 1, …, 6.

Let c*(t) be the normalized number of times the process went through transition t, and c*(y,t) the normalized number of times the process went through t and as a consequence produced the output y. Then the natural estimates would be

$$q(y \mid t) = \frac{c^*(y,t)}{c^*(t)}$$

$$p(t_i) = \frac{c^*(t_i)}{c^*(t_1) + c^*(t_2)} \quad \text{for } i = 1, 2$$

$$p(t_3) = 1$$

$$p(t_i) = \frac{c^*(t_i)}{c^*(t_4) + c^*(t_5)} \quad \text{for } i = 4, 5$$
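The ratio estimates above can be sketched in Python as follows. The counts are hypothetical numbers chosen for illustration, and the grouping of transitions by the state they leave follows the constraints p(t1) + p(t2) = 1, p(t3) = 1 and p(t4) + p(t5) = 1; the names `counts`, `out_counts`, `groups` and `estimate` are illustrative.

```python
# Hypothetical counts: how often each transition was taken ...
counts = {"t1": 6.0, "t2": 2.0, "t3": 5.0, "t4": 3.0, "t5": 1.0}
# ... and how often it produced output 0 or 1 (each row sums to counts[t]).
out_counts = {
    "t1": {0: 4.0, 1: 2.0},
    "t2": {0: 1.0, 1: 1.0},
    "t3": {0: 5.0, 1: 0.0},
    "t4": {0: 0.0, 1: 3.0},
    "t5": {0: 1.0, 1: 0.0},
}
# Transitions that leave the same state compete with each other.
groups = [("t1", "t2"), ("t3",), ("t4", "t5")]


def estimate(counts, out_counts, groups):
    """Return (p, q): p[t] is the transition probability, q[(y, t)] the output probability."""
    p = {}
    for group in groups:
        total = sum(counts[t] for t in group)
        for t in group:
            p[t] = counts[t] / total
    q = {
        (y, t): n / counts[t]
        for t, per_output in out_counts.items()
        for y, n in per_output.items()
    }
    return p, q


p_est, q_est = estimate(counts, out_counts, groups)
print(p_est)
print(q_est)
```

The difficulty addressed in the rest of this section is that the state sequence is hidden, so counts like these cannot be observed directly and have to be replaced by quantities computed from the outputs alone.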

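Suppose that y1, …, yk were observed and define

L(t), R(t): the source and target states of the transition t

P*{t^i = t}: the probability that y1, …, yk were observed and transition t was taken when leaving the ith stage of the trellis, i = 0, 1, …, k-1 (the superscript i marks the stage and is not a transition index)

αi(s) = P{si = s}: the probability that y1, …, yi were observed and the state reached at the ith stage was s

βi(s) = P{rest|si = s}: the probability that yi+1, …, yk were observed when the state at the ith stage was s

Then c*(t) and c*(y,t) can be written as

$$c^*(t) = \sum_{i=0}^{k-1} P^*\{t^i = t\} \tag{9}$$

$$c^*(y,t) = \sum_{i=0}^{k-1} P^*\{t^i = t\}\,\delta(y_{i+1}, y) \tag{10}$$

where δ(yi+1, y) = 1 for yi+1 = y and δ(yi+1, y) = 0 otherwise.

Observe that transition t can be taken only after the HMM has reached the state L(t), and that the rest of the action will start in state R(t); thus

$$P^*\{t^i = t\} = \alpha_i(L(t))\, p(t)\, q(y_{i+1} \mid t)\, \beta_{i+1}(R(t)) \tag{11}$$

The following recursions also hold:

$$\alpha_i(s) = \sum_{\substack{t:\,R(t)=s \\ t \text{ non-null}}} \alpha_{i-1}(L(t))\, p(t)\, q(y_i \mid t) + \sum_{\substack{t:\,R(t)=s \\ t \text{ null}}} \alpha_i(L(t))\, p(t) \tag{12}$$

$$\beta_i(s) = \sum_{\substack{t:\,L(t)=s \\ t \text{ non-null}}} p(t)\, q(y_{i+1} \mid t)\, \beta_{i+1}(R(t)) + \sum_{\substack{t:\,L(t)=s \\ t \text{ null}}} p(t)\, \beta_i(R(t)) \tag{13}$$

In each recursion the first sum covers the transitions that produce an output, and the second covers null transitions, which produce no output and therefore stay at the same stage. The second sum vanishes when the HMM has no null transitions.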

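Equations (9) to (13) can be used to estimate the parameters of an HMM iteratively: starting from an initial guess of p(t) and q(y|t), compute α and β, obtain c*(t) and c*(y,t), re-estimate p(t) and q(y|t) from them, and repeat.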
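One pass of this estimation procedure can be sketched in Python for an HMM without null transitions. The parameters below are derived from the joint probabilities listed for Fig. 4 (p(y, s’|s) = p(t) q(y|t)), state 1 is assumed to be s0, and the boundary condition βk(s) = 1 is an assumption that the text does not state explicitly. All function names are illustrative.

```python
# Transitions t = (L(t), R(t)) with p(t) and q(y|t); no null transitions.
p = {(1, 1): 0.2, (1, 2): 0.5, (1, 3): 0.3, (2, 3): 1.0, (3, 1): 0.5, (3, 2): 0.5}
q = {
    (1, 1): {0: 1.0, 1: 0.0}, (1, 2): {0: 0.2, 1: 0.8}, (1, 3): {0: 1.0, 1: 0.0},
    (2, 3): {0: 0.4, 1: 0.6}, (3, 1): {0: 0.0, 1: 1.0}, (3, 2): {0: 0.0, 1: 1.0},
}
STATES = (1, 2, 3)


def forward(y, p, q, s0=1):
    """alpha[i][s] for i = 0..k: recursion (12) without the null-transition sum."""
    alpha = [{s: 1.0 if s == s0 else 0.0 for s in STATES}]
    for yi in y:
        prev = alpha[-1]
        alpha.append({s: sum(prev[l] * p[(l, r)] * q[(l, r)][yi]
                             for (l, r) in p if r == s) for s in STATES})
    return alpha


def backward(y, p, q):
    """beta[i][s] for i = 0..k: recursion (13), assuming beta_k(s) = 1."""
    beta = [{s: 1.0 for s in STATES}]
    for yi in reversed(y):
        nxt = beta[0]
        beta.insert(0, {s: sum(p[(l, r)] * q[(l, r)][yi] * nxt[r]
                               for (l, r) in p if l == s) for s in STATES})
    return beta


def likelihood(y, p, q):
    """P(y1..yk) as the sum of the last alpha column."""
    return sum(forward(y, p, q)[-1].values())


def reestimate(y, p, q):
    """One re-estimation pass based on equations (9) to (11)."""
    alpha, beta = forward(y, p, q), backward(y, p, q)
    c = {t: 0.0 for t in p}
    c_out = {t: {0: 0.0, 1: 0.0} for t in p}
    for i, y_next in enumerate(y):  # i = 0..k-1, y_next is y_{i+1}
        for (l, r) in p:
            t = (l, r)
            post = alpha[i][l] * p[t] * q[t][y_next] * beta[i + 1][r]  # (11)
            c[t] += post                                               # (9)
            c_out[t][y_next] += post                                   # (10)
    new_p, new_q = {}, {}
    for t in p:
        leaving = sum(c[u] for u in p if u[0] == t[0])
        # Keep the old value when a state or transition was never used.
        new_p[t] = c[t] / leaving if leaving > 0 else p[t]
        new_q[t] = ({v: c_out[t][v] / c[t] for v in (0, 1)}
                    if c[t] > 0 else dict(q[t]))
    return new_p, new_q


y = [0, 1, 1, 0]
new_p, new_q = reestimate(y, p, q)
print(likelihood(y, p, q), likelihood(y, new_p, new_q))
```

On the single training sequence 0110 the likelihood rises from about 0.054 to roughly 0.147 after one pass. The common factor P(y1, …, yk) cancels in the ratios, so the counts do not need to be divided by it.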

III. Additional Considerations

1. Apart from the second sums in (12) and (13), the previous discussion omitted null transitions, that is, transitions that produce no output. When they are taken into account, the other formulas must be changed accordingly.

2. In practice, the values computed by (12) and (13) should be normalized at each stage, because they become very small and lose numerical precision as i increases (for α) or decreases (for β).

3. The front end of a speech recognizer includes a microphone whose output is an electric signal, a means of sampling that signal, and a manner of processing the resulting sequence of samples. Features are extracted from the samples and compared with a stored database of prototypes to find the best match.

4. Reference: F. Jelinek, Statistical Methods for Speech Recognition