PhD Three Month Report

1 Introduction

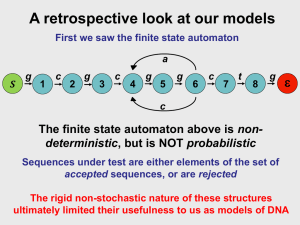

Automatic Speech Recognition (ASR) is mainly concerned with automatic conversion of an

acoustic speech signal into a text transcription of that speech. Many approaches to automatic speech

recognition have been studied in the past, including approaches based on Artificial Intelligence (AI),

pattern template matching techniques and Artificial Neural Networks (ANN). Currently, the use of

Hidden Markov Models (HMMs) is the most popular statistical approach to automatic speech

recognition. At present, all state-of-the-art commercial and most laboratory speech recognition

systems are based on the HMM approach.

The goal of the first three months was to gain a comprehensive view of HMM-based speech recognition techniques, from conventional HMMs (Young 1995) to segment HMMs (Holmes, Russell 1999) and the more recently developed trajectory HMMs (Tokuda et al 2000). Closely related areas, for example machine learning, were also explored during this period. The aim of this report is to summarize the background reading and literature survey done so far. More importantly, a research question is proposed based on the speech recognition literature that has been reviewed. Finally, a plan and timetable for the next six months are presented at the end of the report.

2 Literature Review

2.1 HMM-based speech recognition system overview

The performance of automatic speech recognition systems has advanced greatly since the 1980s as a result of the use of Hidden Markov Models (HMMs). HMMs treat an utterance as a time-varying sequence of segments. Variations between different realizations of the segments, caused for example by different speaking styles or different speakers, are normally modelled using multiple-component Gaussian mixtures. Figure 1 below shows a typical phoneme-level HMM-based large vocabulary continuous speech recognition system. Its main components are front-end processing, an acoustic model, a language model and a Viterbi decoder.

[Figure 1 diagram: Speech waveform → Front-end processing → acoustic vector sequence Y = {y1, y2, …, yT} → Viterbi decoder → optimal word sequence w1, w2, …, wN. The acoustic model (phoneme-level HMM store) and the pronunciation dictionary supply P(Y|W); the language model supplies P(W).]

Figure 1 Schematic diagram of a phoneme-level HMM-based speech recognition system

Speech recognition begins with the automatic transformation of the speech waveform into a phonetically meaningful, compact representation that is appropriate for recognition. This initial stage is referred to as front-end processing. Typically, the speech is first divided into short segments, usually 20-30 ms long with a 10 ms overlap between adjacent segments. Each short segment is windowed and a Discrete Fourier Transform (DFT) is applied. The modulus and then the logarithm of the resulting complex spectral coefficients are taken to produce the log power spectrum. Mel frequency averaging is then applied, followed by a Discrete Cosine Transform (DCT), to produce a set of Mel Frequency Cepstral Coefficients (MFCCs). Alternative front-end analyses are also used, for example linear prediction and Perceptual Linear Prediction (PLP).
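The front-end steps described above can be sketched in a few lines of NumPy. This is a minimal illustration and not the front-end used in this project: the sampling rate (16 kHz), frame length (25 ms), frame shift (10 ms), Hamming window, number of filters (26) and number of cepstral coefficients (13) are all assumed values, and the function names are hypothetical. Note also that the sketch applies the mel filterbank to the power spectrum before taking the logarithm, which is a common ordering but differs from the order given in the text.

```python
import numpy as np

def hz_to_mel(f):
    # One commonly used mel-scale formula (an assumption, other variants exist).
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale, one row per filter."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(n_filters):
        left, centre, right = bins[i], bins[i + 1], bins[i + 2]
        for k in range(left, centre):
            fbank[i, k] = (k - left) / (centre - left)      # rising slope
        for k in range(centre, right):
            fbank[i, k] = (right - k) / (right - centre)    # falling slope
    return fbank

def mfcc(signal, sample_rate=16000, frame_ms=25, shift_ms=10,
         n_fft=512, n_filters=26, n_ceps=13):
    frame_len = int(sample_rate * frame_ms / 1000)
    shift = int(sample_rate * shift_ms / 1000)
    n_frames = 1 + (len(signal) - frame_len) // shift
    window = np.hamming(frame_len)
    fbank = mel_filterbank(n_filters, n_fft, sample_rate)
    # Unnormalised DCT-II basis: rows are cepstral indices, columns are filters.
    dct = np.cos(np.pi * np.outer(np.arange(n_ceps), np.arange(n_filters) + 0.5) / n_filters)
    feats = np.empty((n_frames, n_ceps))
    for t in range(n_frames):
        frame = signal[t * shift: t * shift + frame_len] * window
        power = np.abs(np.fft.rfft(frame, n_fft)) ** 2       # power spectrum
        log_mel = np.log(fbank @ power + 1e-10)              # mel averaging, then log
        feats[t] = dct @ log_mel                             # cepstral coefficients
    return feats

# One second of a 440 Hz tone as a stand-in for speech.
t = np.arange(16000) / 16000.0
signal = np.sin(2 * np.pi * 440.0 * t)
feats = mfcc(signal)
print(feats.shape)  # (98, 13)
```

Each row of `feats` is one acoustic vector of the kind passed to the decoder.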

MFCCs are widely used as the acoustic feature vectors in speech recognition. However, they do not

account for the underlying speech dynamics which cause speech changes. A simple way to capture

speech dynamics is to calculate the first and sometimes the second time derivatives of the static

feature vectors and concatenate the static and dynamic feature vectors together. Although the use of

dynamic features improves recognition performance, the independence assumption actually

becomes even less valid because the observed data for any one frame are used to contribute to a

time span of several feature vectors (Holmes, Russell 1999).

Given a sequence of acoustic vectors $y = y_1, y_2, \ldots, y_T$, the speech recognition task is to find the optimal word sequence $W = w_1, w_2, \ldots, w_L$ that maximizes the probability $P(W \mid y)$, i.e. the probability of the word sequence $W$ given the acoustic feature vectors $y$. This probability is normally computed using Bayes' Theorem:

$$P(W \mid y) = \frac{P(y \mid W)\, P(W)}{P(y)}$$

In the equation above, $P(W)$ is the probability of the word sequence $W$, called the language model probability and computed using a language model. The probability $P(y \mid W)$ is referred to as the acoustic model probability; it is the probability that the acoustic feature vector sequence $y$ is produced by the word sequence $W$, and it is calculated using the acoustic model. Since $P(y)$ does not depend on $W$, it can be ignored when searching for the best word sequence.

Phoneme-level HMMs represent phoneme units rather than whole words. The pronunciation dictionary specifies the sequence of phoneme HMMs for each word in the vocabulary. For a given word sequence, the corresponding phoneme HMMs for each word are looked up in the pronunciation dictionary and concatenated. The Viterbi decoder then combines the probabilities $P(y \mid W)$ and $P(W)$ for all word sequences allowed by the language model, and the sequence with the maximum probability is selected as the optimal word sequence.
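The search performed by the decoder can be illustrated with a small log-domain Viterbi routine. This is a toy sketch under stated assumptions: the states stand in for the states of the concatenated phoneme HMMs, the observation probabilities are given directly as numbers rather than computed from Gaussian mixtures, and language model probabilities, which in a real system would enter at transitions between words, are omitted. All names and values are hypothetical.

```python
import math

def viterbi(log_init, log_trans, log_obs):
    """Return the most likely state path and its log probability.

    log_init[i]     : log P(first state = i)
    log_trans[i][j] : log P(next state = j | current state = i)
    log_obs[t][i]   : log P(y_t | state i)
    """
    n_states = len(log_init)
    delta = [log_init[i] + log_obs[0][i] for i in range(n_states)]
    backptr = []
    for t in range(1, len(log_obs)):
        new_delta, ptr = [], []
        for j in range(n_states):
            # Best predecessor of state j at time t.
            best_i = max(range(n_states), key=lambda i: delta[i] + log_trans[i][j])
            new_delta.append(delta[best_i] + log_trans[best_i][j] + log_obs[t][j])
            ptr.append(best_i)
        delta = new_delta
        backptr.append(ptr)
    last = max(range(n_states), key=lambda i: delta[i])
    path = [last]
    for ptr in reversed(backptr):          # trace the best path backwards
        path.append(ptr[path[-1]])
    path.reverse()
    return path, delta[last]

lg = math.log
log_init = [lg(0.6), lg(0.4)]
log_trans = [[lg(0.7), lg(0.3)], [lg(0.4), lg(0.6)]]
log_obs = [[lg(0.9), lg(0.2)], [lg(0.1), lg(0.8)], [lg(0.1), lg(0.8)]]
path, log_score = viterbi(log_init, log_trans, log_obs)
print(path)  # [0, 1, 1]
```

Working with log probabilities avoids numerical underflow when many frames are multiplied together.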

2.2 Segment HMMs

Although conventional HMM-based systems have achieved impressive performance on certain types of speech recognition task, their inherent deficiencies as models of speech have been widely discussed. For example, HMMs assume that observation vectors are independent given the state sequence, ignoring the fact that the constraints of articulation are such that any one frame of speech is highly correlated with the previous and following frames (Holmes, 2000). Generally speaking, the piecewise stationarity assumption, the independence assumption and poor state duration modelling are the three major limitations of HMMs for speech. These assumptions are made for mathematical tractability and are clearly inappropriate for speech.

[Figure 2 diagram: acoustic feature vectors grouped into segments, each segment associated with one of the HMM states 1, 2 and 3.]

Figure 2 Schematic diagram of a segment HMM

A variety of refined and alternative models have been investigated to overcome the limitations of conventional HMMs. In particular, a number of “segment HMMs” have been explored, in which variable-length sequences of observation vectors, or ‘segments’, rather than individual observations are associated with states, as shown in Figure 2. A major motivation for the development of “segmental” acoustic models was the opportunity to exploit acoustic features which are apparent at the segmental but not at the frame level (Holmes, Russell 1999). Examples of segment HMMs include Digalakis (1992), Russell (1993) and Gales and Young (1993). Ostendorf, Digalakis and Kimball (1996) presented a comprehensive review of a variety of segment HMMs.

Segment HMMs have several advantages over conventional HMMs. Firstly, the length of a segment is variable, which allows a more realistic duration model to be incorporated easily. The state duration in a conventional HMM is modelled by a geometric distribution, which is unsuitable for modelling speech segment durations. Other duration models with an explicit distribution of state occupancy have been studied by Russell and Moore (1985) and Levinson (1986). Secondly, segment HMMs allow the relationship between feature vectors within a segment to be represented explicitly, usually as some form of trajectory in the acoustic vector space. There are many different types of trajectory-based segmental HMM. Examples include the simplest form, in which the trajectory is constant over time (Russell 1993; Gales, Young 1993), the linear trajectory characterized by a mid-point and slope (Holmes, Russell 1999), and the non-parametric trajectory model (Ghitza and Sondhi 1993).
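To make the remark about state duration concrete: in a conventional HMM, a state $i$ with self-transition probability $a_{ii}$ (notation assumed here, it is not defined in the report) is occupied for exactly $d$ consecutive frames with probability

```latex
P_i(d) = a_{ii}^{\,d-1}\,(1 - a_{ii}), \qquad d = 1, 2, \ldots, \qquad
\mathbb{E}[d] = \frac{1}{1 - a_{ii}}
```

This geometric distribution always has its maximum at $d = 1$ and decays monotonically, whatever the value of $a_{ii}$, so it cannot represent a segment whose most likely duration is several frames long.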

2.3 Multiple-level segment HMM (MSHMM)

It is generally believed that incorporating speech dynamics into acoustic models will eventually lead to improvements in speech recognition performance. An interesting and potentially beneficial approach is to model speech dynamics directly within the existing HMM framework, thereby retaining the advantages of HMMs, for instance the well-studied training and decoding algorithms. A good example is the “Balthasar” project conducted at the University of Birmingham (Russell, Jackson 2005). The “Balthasar” project introduced a novel multiple-level segmental hidden Markov model (MSHMM), which incorporates an intermediate ‘articulatory’ layer to regulate the relationship between underlying states and surface acoustic feature vectors.

[Figure 3 diagram: a finite state process (states 1, 2, 3) generates trajectories in an intermediate layer, which an articulatory-to-acoustic mapping W projects onto the acoustic layer.]

Figure 3 A multiple-level segmental model that uses linear trajectories in an intermediate space.

Figure 3 shows the multiple-level segmental HMMs (MSHMM) proposed in the ‘Balthasar’ project.

It can be seen clearly that the relationship between the state-level and acoustic-level descriptions of

a speech signal is regulated by an intermediate, ‘articulatory’ representation (Russell, Jackson 2004).

To construct the ‘articulatory’ space, a sequence of Parallel Formant Synthesiser (PFS) control

parameters is used.

A typical parallel formant synthesiser (Holmes, Mattingly and Shearme 1966) consists of a set of resonators arranged in parallel, usually four, representing the first four formant frequencies, together with an amplitude control for each formant resonator and a voicing control that determines the type of excitation. A sequence of 12-dimensional vectors, including the frequencies and amplitudes of the first three formants, the fundamental frequency and the degree of voicing, is sent every 10-20 ms to drive the synthesiser. It has been demonstrated that the quality of the synthesis is determined by how appropriate the derived control parameters are.

The segmental model used in the MSHMM framework is a fixed, linear trajectory segmental HMM (FT-SHMM) (Holmes, Russell 1999), in which each acoustic segment is considered as a variable-duration, noisy function of a linear trajectory. The segment trajectory of duration $\tau$ is characterized by

$$f(t) = (t - \bar{t})\, m + c, \qquad \bar{t} = \frac{\tau + 1}{2},$$

where $m$ and $c$ are the slope vector and midpoint vector respectively. Let $y_1^\tau = [y_1, y_2, \ldots, y_\tau]$ be the acoustic vectors associated with state $i$. The state output probability is then given by

$$b_i(y_1^\tau) = c_i(\tau) \prod_{t=1}^{\tau} \mathcal{N}\big(y_t \mid f_i(t), V_i\big),$$

where $c_i(\tau)$ is the duration PDF and $\mathcal{N}(y_t \mid f_i(t), V_i)$ is the multivariate Gaussian PDF with mean $f_i(t)$ and diagonal $N \times N$ covariance $V_i$.

The novelty of the MSHMM is that the trajectory $f(t)$ is defined in the intermediate, or articulatory, layer and then mapped onto the acoustic layer by an ‘articulatory-to-acoustic’ mapping function $W$, i.e.

$$b_i(y_1^\tau) = c_i(\tau) \prod_{t=1}^{\tau} \mathcal{N}\big(y_t \mid W(f_i(t)), V_i\big)$$

Here the mapping function $W$ is piecewise linear. The work on linear mapping has been carried out by Miss Zheng, and research on non-linear mapping has already started.
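The segmental state output probability above can be evaluated directly. The following NumPy sketch computes its logarithm for one segment, assuming a diagonal covariance and, optionally, a single linear mapping matrix in place of the piecewise linear mapping. The function name, argument names and example values are hypothetical and are not taken from the “Balthasar” software.

```python
import numpy as np

def segment_log_prob(segment, slope, midpoint, variances, log_duration_prob, mapping=None):
    """log b_i(y_1..y_tau) for a fixed linear trajectory segment model.

    segment           : (tau, N) array of acoustic vectors
    slope, midpoint   : trajectory parameters m and c
    variances         : length-N diagonal of the covariance V_i
    log_duration_prob : log c_i(tau)
    mapping           : optional (N, K) matrix W applied to a K-dimensional
                        trajectory (a single linear map, an assumption)
    """
    segment = np.asarray(segment, dtype=float)
    variances = np.asarray(variances, dtype=float)
    tau = segment.shape[0]
    t = np.arange(1, tau + 1)
    t_bar = (tau + 1) / 2.0
    traj = np.outer(t - t_bar, slope) + midpoint          # f(t) = (t - t_bar) m + c
    if mapping is not None:
        traj = traj @ np.asarray(mapping, dtype=float).T  # W f(t)
    diff = segment - traj
    # Sum of per-dimension Gaussian log densities for each frame.
    log_gauss = -0.5 * (np.log(2 * np.pi * variances) + diff ** 2 / variances).sum(axis=1)
    return log_duration_prob + log_gauss.sum()

# A three-frame segment lying exactly on the trajectory.
slope = np.array([1.0, -2.0])
midpoint = np.array([0.0, 1.0])
seg = np.array([[-1.0, 3.0], [0.0, 1.0], [1.0, -1.0]])
lp = segment_log_prob(seg, slope, midpoint, np.ones(2), np.log(0.5))
lp_mapped = segment_log_prob(seg, slope, midpoint, np.ones(2), np.log(0.5), mapping=np.eye(2))
```

With an identity mapping the two calls give the same value, which is a quick sanity check on the mapped form.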

The principle of Viterbi decoding still applies to segmental HMMs, though the computational load is higher than for a conventional HMM. The segmental Viterbi recursion is

$$\hat{\alpha}_t(i) = \max_j \max_\tau \hat{\alpha}_{t-\tau}(j)\, a_{ji}\, b_i\big(y_{t-\tau+1}^{t}\big),$$

where $b_i(y_{t-\tau+1}^{t})$ is the segmental state output probability.
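The extra cost of segmental decoding comes from the additional maximization over segment durations. A minimal sketch of the recursion in the log domain is given below. It assumes a maximum segment duration `max_dur`, a user-supplied function `seg_log_prob(i, start, end)` returning the log segmental output probability (including the duration term) for frames `start..end`, and no self-transitions between segments. These names and the toy data are hypothetical.

```python
import math

def segmental_viterbi(n_frames, n_states, log_init, log_trans, seg_log_prob, max_dur):
    """Best segmentation of frames 1..n_frames into state-labelled segments."""
    neg_inf = -math.inf
    # alpha[t][i]: best log score of frames 1..t with a segment of state i ending at t.
    alpha = [[neg_inf] * n_states for _ in range(n_frames + 1)]
    back = [[None] * n_states for _ in range(n_frames + 1)]
    for t in range(1, n_frames + 1):
        for i in range(n_states):
            for tau in range(1, min(max_dur, t) + 1):
                b = seg_log_prob(i, t - tau + 1, t)
                if tau == t:   # this segment starts at the first frame
                    candidates = [(log_init[i] + b, None)]
                else:
                    candidates = [(alpha[t - tau][j] + log_trans[j][i] + b, j)
                                  for j in range(n_states)]
                for score, j in candidates:
                    if score > alpha[t][i]:
                        alpha[t][i] = score
                        back[t][i] = (tau, j)
    state = max(range(n_states), key=lambda i: alpha[n_frames][i])
    best = alpha[n_frames][state]
    segments, t = [], n_frames
    while t > 0:               # trace back through segment boundaries
        tau, prev = back[t][state]
        segments.append((state, t - tau + 1, t))
        t, state = t - tau, prev
    segments.reverse()
    return best, segments

# Toy 1-D example: two states with constant trajectories at 0 and 5, unit variance.
frames = [0.0, 0.0, 0.0, 5.0, 5.0]
means = [0.0, 5.0]
max_dur = 3

def toy_seg_log_prob(i, start, end):
    log_dur = -math.log(max_dur)   # uniform duration PDF over 1..max_dur
    log_gauss = sum(-0.5 * (math.log(2 * math.pi) + (frames[t - 1] - means[i]) ** 2)
                    for t in range(start, end + 1))
    return log_dur + log_gauss

log_init = [math.log(0.5), math.log(0.5)]
log_trans = [[-math.inf, 0.0], [0.0, -math.inf]]
best, segments = segmental_viterbi(len(frames), 2, log_init, log_trans,
                                   toy_seg_log_prob, max_dur)
print(segments)  # [(0, 1, 3), (1, 4, 5)]
```

Each frame considers up to `max_dur` candidate segment lengths for every state pair, which is the source of the higher computational load compared with the frame-level recursion.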

Although the multiple-level segmental HMM is at a very early stage, it provides a solid theoretical foundation for the development of richer classes of multi-level models, including non-linear models of dynamics and alternative articulatory representations (Russell, Jackson 2005). In particular, the incorporation of an intermediate layer introduces the possibility of better modelling of speech dynamics and of the articulatory-to-acoustic mapping.

2.4 Trajectory HMM

Recently, a new type of model, the “trajectory HMM”, was proposed by Tokuda, Zen and Kitamura (2003). The trajectory HMM is based on conventional HMMs whose acoustic feature vectors include static and dynamic features. It is intended to overcome two limitations: constant statistics within an HMM state and the independence assumption on state output probabilities. The conventional HMM allows inconsistent static and dynamic features, as shown in Figure 4. The piecewise constant thick line at the top represents the static feature, i.e. the state mean, of each state. The thin line at the bottom represents a dynamic feature, for example the delta coefficients. The trajectory HMM for speech recognition is derived by reformulating the conventional HMM with static and dynamic observation vectors (e.g. delta and delta-delta cepstral coefficients), whereby an explicit relationship is imposed between static and dynamic features.

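[Figure 4 plot: piecewise constant static feature (thick line, top) and dynamic feature (thin line, bottom) over frames t-1, t, t+1, t+2, …]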

Figure 4 Inconsistency between static and dynamic features

From the speech production point of view, the vocal organs move smoothly during articulation because of physiological limitations. Intuitively, the state mean trajectory should be a continuous line, as shown in Figure 4, instead of a piecewise constant sequence of lines. To mitigate the inconsistency between the piecewise constant static features and the dynamic features, it is hoped that the dynamic features can be incorporated into the static features to form a single, optimal speech parameter trajectory.

To derive the trajectory HMM, the first step is to map the static feature space to the output acoustic feature space, which contains both static and dynamic features, for example delta and delta-delta spectral coefficients. To begin with, assume that an acoustic vector sequence $y = [y_1^\top, y_2^\top, \ldots, y_T^\top]^\top$ contains both static features $c$ and dynamic features $\Delta c$, $\Delta^2 c$, i.e. the $t$-th acoustic vector is $y_t = [c_t^\top, \Delta c_t^\top, \Delta^2 c_t^\top]^\top$, where $\top$ denotes matrix transposition. The goal is to find a mapping function between the static and dynamic feature vectors. Assume the static feature vector is $D$-dimensional, i.e. $c_t = [c_t(1), c_t(2), \ldots, c_t(D)]^\top$. The dynamic features are calculated by

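$$\Delta c_t = \sum_{\tau = -L_-^{(1)}}^{L_+^{(1)}} w^{(1)}(\tau)\, c_{t+\tau} \qquad (1)$$

$$\Delta^2 c_t = \sum_{\tau = -L_-^{(2)}}^{L_+^{(2)}} w^{(2)}(\tau)\, c_{t+\tau} \qquad (2)$$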

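Now the static features and dynamic features can be arranged in a matrix form as follows

$$y = Wc \qquad (3)$$

where

$$c = [c_1^\top, c_2^\top, \ldots, c_T^\top]^\top$$

$$W = [w_1, w_2, \ldots, w_T]^\top$$

$$w_t = [w_t^{(0)}, w_t^{(1)}, w_t^{(2)}]$$

$$w_t^{(n)} = [\underbrace{0_{D \times D}}_{1\text{st}}, \ldots, 0_{D \times D}, \underbrace{w^{(n)}(-L_-^{(n)}) I_{D \times D}}_{(t - L_-^{(n)})\text{-th}}, \ldots, \underbrace{w^{(n)}(0) I_{D \times D}}_{t\text{-th}}, \ldots, \underbrace{w^{(n)}(L_+^{(n)}) I_{D \times D}}_{(t + L_+^{(n)})\text{-th}}, 0_{D \times D}, \ldots, \underbrace{0_{D \times D}}_{T\text{-th}}]^\top, \quad n = 0, 1, 2$$

and $L_-^{(0)} = L_+^{(0)} = 0$, $w^{(0)}(0) = 1$.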
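The structure of the matrix $W$ in (3) is easier to see when it is built explicitly. The sketch below constructs $W$ with NumPy for given window coefficients and checks that the rows of $Wc$ reproduce the static, delta and delta-delta features. The particular window coefficients are an assumption chosen for illustration (a simple central difference and second difference), frames outside the utterance are treated as zero, and the function name is hypothetical.

```python
import numpy as np

def build_window_matrix(T, D, windows):
    """Build W such that y = W c stacks [c_t, delta c_t, delta^2 c_t] for t = 1..T.

    windows : list of dicts {offset tau: coefficient w^(n)(tau)}, one per n = 0, 1, 2
    """
    n_win = len(windows)
    W = np.zeros((n_win * D * T, D * T))
    eye = np.eye(D)
    for t in range(T):
        for n, win in enumerate(windows):
            row = (t * n_win + n) * D
            for offset, coeff in win.items():
                s = t + offset
                if 0 <= s < T:   # frames outside the utterance are taken as zero (assumption)
                    W[row:row + D, s * D:(s + 1) * D] += coeff * eye
    return W

# Assumed window coefficients: static, central difference, second difference.
windows = [{0: 1.0}, {-1: -0.5, 1: 0.5}, {-1: 1.0, 0: -2.0, 1: 1.0}]

T, D = 4, 2
W = build_window_matrix(T, D, windows)
c = np.arange(T * D, dtype=float).reshape(T, D) ** 2    # arbitrary static features
y = (W @ c.reshape(-1)).reshape(T, 3, D)                # y[t] = [c_t, delta c_t, delta^2 c_t]
print(W.shape)  # (24, 8)
```

Each block row of `W` is a shifted copy of the window coefficients multiplied by the identity, which is why `W` is sparse and banded.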

The output probability of $y$ for a conventional HMM $M$ is

$$P(y \mid M) = \sum_{x} P(y \mid x, M)\, P(x \mid M) \qquad (4)$$

where $x = x_1, x_2, \ldots, x_T$ is a state sequence. The next goal is to transform (4) into a form which contains static features only. Assuming that each state output PDF is a single Gaussian, the probability $P(y \mid x, M)$ can be written as

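$$P(y \mid x, M) = \prod_{t=1}^{T} \mathcal{N}\big(y_t \mid \mu_{x_t}, U_{x_t}\big) = \mathcal{N}(y \mid \mu_x, U_x) \qquad (5)$$

where $\mu_{x_t}$ and $U_{x_t}$ are the $3D \times 1$ mean vector and the $3D \times 3D$ covariance matrix of state $x_t$, respectively, and

$$\mu_x = [\mu_{x_1}^\top, \mu_{x_2}^\top, \ldots, \mu_{x_T}^\top]^\top$$

$$U_x = \mathrm{diag}[U_{x_1}, U_{x_2}, \ldots, U_{x_T}]$$

Now rewrite (5) by substituting (3) into it:

$$P(Wc \mid x, M) = \mathcal{N}(Wc \mid \mu_x, U_x) = K_x\, \mathcal{N}(c \mid \bar{c}_x, Q_x) \qquad (6)$$

where $\bar{c}_x$ is given by

$$R_x \bar{c}_x = r_x$$

and

$$R_x = W^\top U_x^{-1} W$$

$$r_x = W^\top U_x^{-1} \mu_x$$

$$Q_x = R_x^{-1}$$

$$K_x = \frac{\sqrt{(2\pi)^{DT} |Q_x|}}{\sqrt{(2\pi)^{3DT} |U_x|}} \exp\left\{ -\frac{1}{2} \left( \mu_x^\top U_x^{-1} \mu_x - r_x^\top Q_x r_x \right) \right\}$$

where $K_x$ is the normalization constant.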

Finally, the trajectory model is defined by eliminating the normalization constant $K_x$ in (6):

$$P(c \mid M) = \sum_{x} P(c \mid x, M)\, P(x \mid M)$$

where

$$P(c \mid x, M) = \mathcal{N}(c \mid \bar{c}_x, Q_x)$$

It has been shown that the mean $\bar{c}_x$ obtained in this way is exactly the same as the speech parameter trajectory (Tokuda et al 2000), i.e.

$$\bar{c}_x = \arg\max_{c} P(y \mid x, M) = \arg\max_{c} P(Wc \mid x, M)$$
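The mean trajectory can be computed numerically by solving the linear system $R_x \bar{c}_x = r_x$. The sketch below does this with NumPy for a one-dimensional static feature ($D = 1$) with static and delta features only, a fixed state sequence, and diagonal covariances. The window coefficients, state means and variances are made-up illustrative values, and the function names are hypothetical.

```python
import numpy as np

def window_matrix_1d(T):
    """W for D = 1 with a static row and a central-difference delta row per frame."""
    W = np.zeros((2 * T, T))
    for t in range(T):
        W[2 * t, t] = 1.0                  # static feature
        if t - 1 >= 0:
            W[2 * t + 1, t - 1] = -0.5     # delta window (assumed coefficients)
        if t + 1 < T:
            W[2 * t + 1, t + 1] = 0.5
    return W

def mean_trajectory(W, mu, var):
    """Solve R c = r with R = W' U^-1 W and r = W' U^-1 mu, for diagonal U."""
    u_inv = 1.0 / np.asarray(var, dtype=float)
    R = W.T @ (u_inv[:, None] * W)
    r = W.T @ (u_inv * mu)
    c_bar = np.linalg.solve(R, r)
    Q = np.linalg.inv(R)                   # covariance of the trajectory model
    return c_bar, Q

# State sequence with static means stepping from 0 to 1 and delta means of 0.
T = 6
static_means = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])
mu = np.zeros(2 * T)
mu[0::2] = static_means
var = np.ones(2 * T)

W = window_matrix_1d(T)
c_bar, Q = mean_trajectory(W, mu, var)

def objective(c):
    """Weighted squared error (Wc - mu)' U^-1 (Wc - mu), minimized by c_bar."""
    d = W @ c - mu
    return float(d @ (d / var))

print(np.round(c_bar, 3))
```

Because the delta means pull neighbouring frames together, the resulting trajectory changes gradually across the state boundary rather than following the step in the static means, which is the consistency the trajectory HMM is designed to enforce.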

3 Project Aims and the Initial Stages of Work

The ultimate goal of this project is to develop more appropriate and sophisticated statistical methods for modelling speech dynamics. The framework chosen in this project is the multiple-level segment HMM (MSHMM). It is hoped that the development of these models will support high accuracy speech and speaker recognition as well as high accuracy speech synthesis.

Based on the research of the last three months, the research question proposed here is: what is the best way to model speech dynamics within the framework of multiple-level segmental HMMs? As discussed above, the trajectory HMM defines a mapping between static and dynamic features, which is analogous to the articulatory-to-acoustic mapping in the MSHMM. It is expected that a positive outcome may result if the trajectory HMM is incorporated into the MSHMM framework, i.e. if dynamics in the intermediate layer are modelled using a trajectory HMM.

In the “Balthasar” project, speech dynamics were modelled as piecewise linear trajectories in the articulatory space and linearly mapped into the acoustic space. A piecewise linear model provides an adequate ‘passive’ approximation to the formant trajectories, but does not capture the active dynamics of the articulatory system (Russell 2004). One of the objectives of this project, and possibly its initial stage, is to conduct research on modelling non-linear articulatory trajectories and incorporating these trajectories into multiple-level segment HMMs. The improved model will be tested and evaluated with extensive experiments on speech and speaker recognition and speech synthesis.

4 Plans for Next Six Months

This section gives a plan and timetable for the next six months. A Gantt chart is presented below showing an approximate schedule. There are three main objectives. The first is to become familiar with HTK; the specific task is to use HTK to build a set of monophone or triphone HMMs based on PFS control parameters. This objective is expected to take two months. The second objective is to write a programme to synthesize a set of HMMs, which may take about one month. Finally, half of the next six months is allocated to implementing the trajectory HMM algorithm, as the trajectory HMM is closely related to the research question proposed above. Apart from these objectives, background reading will continue throughout the whole period.

References

Digalakis, V. “Segment-Based stochastic models of spectral dynamics for continuous speech

recognition”, PhD Thesis, Boston University, 1992.

Gales, M.J.F. Young, S.J. “Segmental hidden markov models”, Proceedings of Eurospeech’93,

Berlin, Germany, pp. 1579-1582, 1993.

Ghitza, O. Sondhi, M. “Hidden markov models with templates as non-stationary states: an

application to speech recognition”, Computer. Speech Lang. 2, 101-119, 1993.

Gish, H. NG, K. “A segmental speech model with applications to word spotting”, Proc. IEEE. Int.

Conf. Acoust., Speech, Signal Processing, Minneapolis, pp. 447-450, 1993.

Holmes, J.N. Holmes, W. “Speech synthesis and recognition”, 2nd edition,

Taylor & Francis, 2001.

Holmes, W.J. Russell, M.J. “Probabilistic-trajectory segmental HMMs”, Computer Speech and

Language 13, 3-37, 1999.

Holmes, W.J. “Segmental HMMs: Modelling Dynamics and Underlying Structure for Automatic

Speech Recognition”, IMA Talks: Mathematical Foundations of Speech Processing and

Recognition, September 18-22, 2000.

Holmes, J.N. Mattingly I.G. and Shearme J.N., “Speech synthesis by rule”, Language and Speech, 7,

pp. 127-143, 1966.

Levinson, S. “Continuously variable duration hidden Markov models for automatic speech

recognition”, Computer Speech and Language, vol.1, pp. 29-45, 1986.

Ostendorf, M., Digalakis, V. & Kimball, O. A. (1996), “From HMM’s to segment models: A

unified view of stochastic modelling for speech recognition”, IEEE Transactions on Speech

and Audio Processing 4, 360-378, 1996.

Russell, M.J. Jackson, P.J.B. “A multiple-level linear/linear segmental HMM with a formant-based

intermediate layer”, Computer Speech and Language, 19 (2): 205-225, 2005.

Russell, M.J. “A unified model for speech recognition and synthesis”, the University of Birmingham,

2004.

Russell, M.J. Moore, R. “Explicit modelling of state occupancy in hidden Markov models for

automatic speech recognition”, Proc. Int. Conf. Acoust., Speech, Signal Processing, pp.

2376-2379,1985.

Russell, M.J. “A segmental HMM for speech pattern modelling”, Proceedings of the IEEE

International Conference on Acoustics Speech and Signal Processing, Minneapolis, MN, pp.

499-502, 1993.

Richards, H.B. Bridle, J.S. “The HDM: a segmental Hidden Dynamic Model of coarticulation”,

Proceedings of the IEEE-ICASSP, Phoenix, AZ, pp. 357-360, 1999.

Tokuda, K. Zen, H. and Kitamura, T. “Trajectory modelling based on HMMs with the explicit

relationship between static and dynamic feature”, in Proc. of Eurospeech 2003, pp. 865-868,

2003.

Tokuda, K. Yoshimura, T. Masuko, T. Kobayashi, T. Kitamura, T. “Speech parameter generation

algorithms for HMM-based speech synthesis”, Proc. ICASSP, vol.3, pp. 1315-1318, 2000.

Young, S.J. “Large vocabulary continuous speech recognition: a review”, Proceedings of IEEE

workshop on Automatic Speech Recognition, Snowbird, pp. 3-28, 1995.
