Reading - Rockhurst


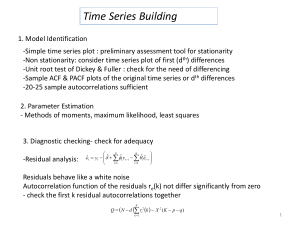

The following is the original site for the Duke document on ARIMA models. It is the best I have found for introducing what ARIMA models do in a way that connects to the approach we have been using in class, particularly on assignment 1. http://www.duke.edu/~rnau/411arim.htm

April 7, 2010

Introduction to ARIMA: nonseasonal models

ARIMA(p,d,q)
ARIMA(0,1,0) = random walk
ARIMA(1,1,0) = differenced first-order autoregressive model
ARIMA(0,1,1) without constant = simple exponential smoothing
ARIMA(0,1,1) with constant = simple exponential smoothing with growth
ARIMA(0,2,1) or (0,2,2) without constant = linear exponential smoothing
A "mixed" model--ARIMA(1,1,1)
Spreadsheet implementation

ARIMA(p,d,q): ARIMA models are, in theory, the most general class of models for forecasting a time series which can be stationarized by transformations such as differencing and logging. In fact, the easiest way to think of ARIMA models is as fine-tuned versions of random-walk and random-trend models: the fine-tuning consists of adding lags of the differenced series and/or lags of the forecast errors to the prediction equation, as needed to remove any last traces of autocorrelation from the forecast errors.

The acronym ARIMA stands for "Auto-Regressive Integrated Moving Average." Lags of the differenced series appearing in the forecasting equation are called "autoregressive" terms, lags of the forecast errors are called "moving average" terms, and a time series which needs to be differenced to be made stationary is said to be an "integrated" version of a stationary series. Random-walk and random-trend models, autoregressive models, and exponential smoothing models (i.e., exponential weighted moving averages) are all special cases of ARIMA models.

A nonseasonal ARIMA model is classified as an "ARIMA(p,d,q)" model, where: p is the number of autoregressive terms. (Here, autoregression and autocorrelation are used interchangeably.)
In the correlograms we used in class, a value above .15 or below -.15 identified that autocorrelation existed. When such autocorrelation existed in our "lag 1" model, then p=1. If the correlogram showed that a "lag 2" model was also above .15 or below -.15, then p=2. So, in choosing our ARIMA model, we can make an intelligent guess about "p" by looking at a correlogram.

d is the number of nonseasonal differences, and q is the number of lagged forecast errors in the prediction equation.

To identify the appropriate ARIMA model for a time series, you begin by identifying the order(s) of differencing needed to stationarize the series and remove the gross features of seasonality, perhaps in conjunction with a variance-stabilizing transformation such as logging or deflating. ("Variance stabilizing": remember how the first differences got bigger through time? We used percentage changes to get rid of that problem. By choosing the correct parameters (p,d,q) on an ARIMA model we can take care of this problem.) If you stop at this point and predict that the differenced series is constant, you have merely fitted a random walk or random trend model.

("Random walk model": the original "random walk" model was illustrated by envisioning a drunk at a lamp post in the middle of the night. The "random walk" describes the path that drunk would be likely to make around the lamp post before collapsing. If the "random walk" does not have a "constant term", the drunk will collapse on the lamp post. If the random walk is "stationary", the drunk will collapse at the same spot every time the drunk gets up, wanders around and collapses again. Sorry for the graphic nature of the illustration.)
(Recall that the random walk model predicts the first difference of the series to be constant, the seasonal random walk model predicts the seasonal difference to be constant, and the seasonal random trend model predicts the first difference of the seasonal difference to be constant--usually zero.) However, the best random walk or random trend model may still have autocorrelated errors, suggesting that additional factors of some kind are needed in the prediction equation.

ARIMA(0,1,0) = random walk: In models we have studied previously, we have encountered two strategies for eliminating autocorrelation in forecast errors. One approach, which we first used in regression analysis, was the addition of lags of the stationarized series. For example, suppose we initially fit the random-walk-with-growth model to the time series Y. The prediction equation for this model can be written as:

(1) $$\hat{Y}(t) - Y(t-1) = \mu$$

(The "Naïve" Model = Regressing a variable on itself = ARIMA(0,1,0))

...where the constant term (here denoted by "mu") is the average difference in Y. (In other words, "mu" indicates how far from the lamp post the drunk collapses.) This can be considered as a degenerate regression model in which DIFF(Y) is the dependent variable and there are no independent variables other than the constant term. Since it includes (only) a nonseasonal difference and a constant term, it is classified as an "ARIMA(0,1,0) model with constant." Of course, the random walk without growth would be just an ARIMA(0,1,0) model without constant. (Without constant, collapsing means hitting the lamp post, but with constant, collapsing happens out there in the dark somewhere.)

ARIMA(1,1,0) = differenced first-order autoregressive model: If the errors of the random walk model are autocorrelated, perhaps the problem can be fixed by adding one lag of the dependent variable to the prediction equation--i.e., by regressing DIFF(Y) on itself lagged by one period.
This would yield the following prediction equation:

(2) $$\hat{Y}(t) - Y(t-1) = \mu + \phi\,(Y(t-1) - Y(t-2))$$

(Regressing the change (first difference) on the one-period lagged change = ARIMA(1,1,0). Everything that comes after the "phi" is simply a way of saying the "change" from one period earlier.)

which can be rearranged to

$$\hat{Y}(t) = \mu + Y(t-1) + \phi\,(Y(t-1) - Y(t-2))$$

This is a first-order autoregressive, or "AR(1)", model with one order of nonseasonal differencing and a constant term--i.e., an "ARIMA(1,1,0) model with constant." Here, the constant term is denoted by "mu" and the autoregressive coefficient is denoted by "phi", in keeping with the terminology for ARIMA models popularized by Box and Jenkins. (In the output of the Forecasting procedure in Statgraphics, this coefficient is simply denoted as the AR(1) coefficient.)

ARIMA(0,1,1) without constant = simple exponential smoothing: Another strategy for correcting autocorrelated errors in a random walk model is suggested by the simple exponential smoothing model. Recall that for some nonstationary time series (e.g., one that exhibits noisy fluctuations around a slowly-varying mean), the random walk model does not perform as well as a moving average of past values. In other words, rather than taking the most recent observation as the forecast of the next observation, it is better to use an average of the last few observations in order to filter out the noise and more accurately estimate the local mean. The simple exponential smoothing model uses an exponentially weighted moving average of past values to achieve this effect. (We saw this in the chapter on weighted moving averages.) The prediction equation for the simple exponential smoothing model can be written in a number of mathematically equivalent ways, one of which is:

$$\hat{Y}(t) = Y(t-1) - \theta\, e(t-1)$$

...where e(t-1) denotes the error at period t-1. Note that this resembles the prediction equation for the ARIMA(1,1,0) model, except that instead of a multiple of the lagged difference it includes a multiple of the lagged forecast error. (It also does not include a constant term--yet.)
The coefficient of the lagged forecast error is denoted by the Greek letter "theta" (again following Box and Jenkins) and it is conventionally written with a negative sign for reasons of mathematical symmetry. "Theta" in this equation corresponds to the quantity "1-minus-alpha" in the exponential smoothing formulas we studied earlier.

When a lagged forecast error is included in the prediction equation as shown above, it is referred to as a "moving average" (MA) term. The simple exponential smoothing model is therefore a first-order moving average ("MA(1)") model with one order of nonseasonal differencing and no constant term--i.e., an "ARIMA(0,1,1) model without constant." This means that in Statgraphics (or any other statistical software that supports ARIMA models) you can actually fit a simple exponential smoothing model by specifying it as an ARIMA(0,1,1) model without constant, and the estimated MA(1) coefficient corresponds to "1-minus-alpha" in the SES formula.

ARIMA(0,1,1) with constant = simple exponential smoothing with growth: By implementing the SES model as an ARIMA model, you actually gain some flexibility. First of all, the estimated MA(1) coefficient is allowed to be negative: this corresponds to a smoothing factor larger than 1 in an SES model, which is usually not allowed by the SES model-fitting procedure. Second, you have the option of including a constant term in the ARIMA model if you wish, in order to estimate an average non-zero trend. The ARIMA(0,1,1) model with constant has the prediction equation:

$$\hat{Y}(t) = \mu + Y(t-1) - \theta\, e(t-1)$$

The one-period-ahead forecasts from this model are qualitatively similar to those of the SES model, except that the trajectory of the long-term forecasts is typically a sloping line (whose slope is equal to mu) rather than a horizontal line.

ARIMA(0,2,1) or (0,2,2) without constant = linear exponential smoothing: Linear exponential smoothing models are ARIMA models which use two nonseasonal differences in conjunction with MA terms.
The second difference of a series Y is not simply the difference between Y and itself lagged by two periods, but rather it is the first difference of the first difference--i.e., the change-in-the-change of Y at period t. Thus, the second difference of Y at period t is equal to (Y(t)-Y(t-1)) - (Y(t-1)-Y(t-2)) = Y(t) - 2Y(t-1) + Y(t-2). A second difference of a discrete function is analogous to a second derivative of a continuous function: it measures the "acceleration" or "curvature" in the function at a given point in time.

The ARIMA(0,2,2) model without constant predicts that the second difference of the series equals a linear function of the last two forecast errors:

$$\hat{Y}(t) - 2Y(t-1) + Y(t-2) = -\theta_1\, e(t-1) - \theta_2\, e(t-2)$$

which can be rearranged as:

$$\hat{Y}(t) = 2Y(t-1) - Y(t-2) - \theta_1\, e(t-1) - \theta_2\, e(t-2)$$

where theta-1 and theta-2 are the MA(1) and MA(2) coefficients. This is essentially the same as Brown's linear exponential smoothing model, with the MA(1) coefficient corresponding to the quantity 2*(1-alpha) in the LES model. To see this connection, recall that the forecasting equation for the LES model is:

$$\hat{Y}(t) = 2Y(t-1) - Y(t-2) - 2(1-\alpha)\, e(t-1) + (1-\alpha)^2\, e(t-2)$$

Upon comparing terms, we see that the MA(1) coefficient corresponds to the quantity 2*(1-alpha) and the MA(2) coefficient corresponds to the quantity -(1-alpha)^2 (i.e., "minus (1-alpha) squared"). If alpha is larger than 0.7, the corresponding MA(2) term would be less than 0.09 in magnitude, which might not be significantly different from zero, in which case an ARIMA(0,2,1) model probably would be identified.

A "mixed" model--ARIMA(1,1,1): The features of autoregressive and moving average models can be "mixed" in the same model. For example, an ARIMA(1,1,1) model with constant would have the prediction equation:

$$\hat{Y}(t) = \mu + Y(t-1) + \phi\,(Y(t-1) - Y(t-2)) - \theta\, e(t-1)$$

Normally, though, we will try to stick to "unmixed" models with either only-AR or only-MA terms, because including both kinds of terms in the same model sometimes leads to overfitting of the data and non-uniqueness of the coefficients.

Spreadsheet implementation: ARIMA models such as those described above are easy to implement on a spreadsheet.
The prediction equation is simply a linear equation that refers to past values of the original time series and past values of the errors. Thus, you can set up an ARIMA forecasting spreadsheet by storing the data in column A, the forecasting formula in column B, and the errors (data minus forecasts) in column C. The forecasting formula in a typical cell in column B would simply be a linear expression referring to values in preceding rows of columns A and C, multiplied by the appropriate AR or MA coefficients stored in cells elsewhere on the spreadsheet.

Identifying the order of differencing

The first (and most important) step in fitting an ARIMA model is the determination of the order of differencing needed to stationarize the series. Normally, the correct amount of differencing is the lowest order of differencing that yields a time series which fluctuates around a well-defined mean value and whose autocorrelation function (ACF) plot decays fairly rapidly to zero, either from above or below. (The "autocorrelation function (ACF) plot" is what we have been calling a correlogram.) If the series still exhibits a long-term trend, or otherwise lacks a tendency to return to its mean value, or if its autocorrelations are positive out to a high number of lags (e.g., 10 or more), then it needs a higher order of differencing. (For example, if the correlogram has a bunch of lags above .15 for a "change" model (ARIMA(0,1,0)), then go to a "change of the change" model (ARIMA(0,2,0)). The correlogram should then decay more rapidly.) We will designate this as our "first rule of identifying ARIMA models":

Rule 1: If the series has positive autocorrelations out to a high number of lags, then it probably needs a higher order of differencing.
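Rule 1 can be checked numerically by computing the correlogram before and after differencing. The following is a minimal sketch, assuming NumPy is available; the `acf` helper and the simulated random walk with growth are illustrative assumptions, not the UNITS data or the Statgraphics procedure.

```python
import numpy as np

def acf(x, max_lag):
    """Sample autocorrelations at lags 1..max_lag (the bars of a correlogram)."""
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    denom = np.sum(dev * dev)
    return np.array([np.sum(dev[k:] * dev[:-k]) / denom
                     for k in range(1, max_lag + 1)])

# Hypothetical data: a random walk with growth (average change 0.5 per period).
rng = np.random.default_rng(0)
y = np.cumsum(0.5 + rng.normal(size=300))

acf_level = acf(y, 12)          # original series, zero orders of differencing
acf_diff = acf(np.diff(y), 12)  # after one nonseasonal difference

print("d=0:", np.round(acf_level, 2))
print("d=1:", np.round(acf_diff, 2))
```

For a series like this one, the undifferenced correlogram stays large and positive out to many lags, which is the pattern Rule 1 describes, while the differenced series should show only small autocorrelations.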
Differencing tends to introduce negative correlation: if the series initially shows strong positive autocorrelation, then a nonseasonal difference will reduce the autocorrelation and perhaps even drive the lag-1 autocorrelation to a negative value. If you apply a second nonseasonal difference (which is occasionally necessary), the lag-1 autocorrelation will be driven even further in the negative direction.

If the lag-1 autocorrelation is zero or even negative, then the series does not need further differencing. You should resist the urge to difference it anyway just because you don't see any pattern in the autocorrelations! One of the most common errors in ARIMA modeling is to "overdifference" the series and end up adding extra AR or MA terms to undo the damage. If the lag-1 autocorrelation is more negative than -0.5 (and theoretically a negative lag-1 autocorrelation should never be greater than 0.5 in magnitude), this may mean the series has been overdifferenced. The time series plot of an overdifferenced series may look quite random at first glance, but if you look closer you will see a pattern of excessive changes in sign from one observation to the next--i.e., up-down-up-down, etc.

Rule 2: If the lag-1 autocorrelation is zero or negative, or the autocorrelations are all small and patternless, then the series does not need a higher order of differencing. If the lag-1 autocorrelation is -0.5 or more negative, the series may be overdifferenced. BEWARE OF OVERDIFFERENCING!!

Another symptom of possible overdifferencing is an increase in the standard deviation, rather than a reduction, when the order of differencing is increased. This becomes our third rule:

Rule 3: The optimal order of differencing is often the order of differencing at which the standard deviation is lowest.
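Rules 2 and 3 amount to comparing two numbers at each order of differencing: the standard deviation and the lag-1 autocorrelation. A minimal sketch, assuming NumPy; the helper names and the simulated series are hypothetical and stand in for whatever series is being studied.

```python
import numpy as np

def lag1_autocorr(x):
    """Lag-1 sample autocorrelation of a 1-D series."""
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    return float(np.sum(dev[1:] * dev[:-1]) / np.sum(dev * dev))

def differencing_report(y, max_d=2):
    """Return (d, standard deviation, lag-1 autocorrelation) for d = 0..max_d."""
    rows = []
    z = np.asarray(y, dtype=float)
    for d in range(max_d + 1):
        rows.append((d, float(np.std(z, ddof=1)), lag1_autocorr(z)))
        z = np.diff(z)  # apply one more nonseasonal difference
    return rows

# Hypothetical data: a random walk with growth, which needs exactly one difference.
rng = np.random.default_rng(1)
y = np.cumsum(0.5 + rng.normal(size=300))

report = differencing_report(y)
for d, sd, r1 in report:
    print(f"d={d}: std dev={sd:.3f}, lag-1 autocorrelation={r1:.3f}")

# Rule 3: the order of differencing with the lowest standard deviation.
best_d = min(report, key=lambda row: row[1])[0]
print("order with lowest standard deviation:", best_d)
```

On a series of this kind, the second difference should show both symptoms of overdifferencing described above: a larger standard deviation than the first difference and a lag-1 autocorrelation near -0.5.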
In the Forecasting procedure in Statgraphics, you can find the order of differencing that minimizes the standard deviation by fitting ARIMA models with various orders of differencing and no coefficients other than a constant. For example, if you fit an ARIMA(0,0,0) model with constant, an ARIMA(0,1,0) model with constant, and an ARIMA(0,2,0) model with constant, then the RMSE's will be equal to the standard deviations of the original series with 0, 1, and 2 orders of nonseasonal differencing, respectively. The first two rules do not always unambiguously determine the "correct" order of differencing. We will see later that "mild underdifferencing" can be compensated for by adding AR terms to the model, while "mild overdifferencing" can be compensated for by adding MA terms instead. In some cases, there may be two different models which fit the data almost equally well: a model that uses 0 or 1 order of differencing together with AR terms, versus a model that uses the next higher order of differencing together with MA terms. In trying to choose between two such models that use different orders of differencing, you may need to ask what assumption you are most comfortable making about the degree of nonstationarity in the original series--i.e., the extent to which it does or doesn't have fixed mean and/or a constant average trend. Rule 4: A model with no orders of differencing assumes that the original series is stationary (mean-reverting). A model with one order of differencing assumes that the original series has a constant average trend (e.g. a random walk or SES-type model, with or without growth). A model with two orders of total differencing assumes that the original series has a time-varying trend (e.g. a random trend or LES-type model). Another consideration in determining the order of differencing is the role played by the CONSTANT term in the model--if one is included. 
The constant represents the mean of the series if no differencing is performed, it represents the average trend in the series if one order of differencing is used, and it represents the average trend-in-the-trend (i.e., curvature) if there are two orders of differencing. We generally do not assume that there are trends-in-trends, so the constant is usually removed from models with two orders of differencing. In a model with one order of differencing, the constant may or may not be included, depending on whether we do or do not want to allow for an average trend. Hence we have:

Rule 5: A model with no orders of differencing normally includes a constant term (which represents the mean of the series). A model with two orders of total differencing normally does not include a constant term. In a model with one order of total differencing, a constant term should be included if the series has a non-zero average trend.

An example: Consider the UNITS series in the TSDATA sample data file that comes with SGWIN. (This is a nonseasonal time series consisting of unit sales data.) First let's look at the series with zero orders of differencing--i.e., the original time series. There are many ways we could obtain plots of this series, but let's do so by specifying an ARIMA(0,0,0) model with constant--i.e., an ARIMA model with no differencing and no AR or MA terms, only a constant term. This is just the "mean" model under another name, and the time series plot of the residuals is therefore just a plot of deviations from the mean:

[Figure: time series plot of the residuals of the ARIMA(0,0,0) model with constant]

The autocorrelation function (ACF) plot shows a very slow, linear decay pattern which is typical of a nonstationary time series:

[Figure: ACF plot of the undifferenced UNITS series]

(This is just the correlogram you have been computing for a variable upon itself.)
The RMSE (which is just the standard deviation of the residuals in a constant-only model) shows up as the "estimated white noise standard deviation" in the Analysis Summary:

Forecast model selected: ARIMA(0,0,0) with constant

ARIMA Model Summary

| Parameter | Estimate | Stnd. Error | t | P-value |
|---|---|---|---|---|
| Mean | 222.738 | 1.60294 | 138.956 | 0.000 |
| Constant | 222.738 | | | |

Backforecasting: yes
Estimated white noise variance = 308.329 with 149 degrees of freedom
Estimated white noise standard deviation = 17.5593
Number of iterations: 3

Clearly at least one order of differencing is needed to stationarize this series. After taking one nonseasonal difference--i.e., fitting an ARIMA(0,1,0) model with constant--the residuals look like this:

[Figure: time series plot of the residuals of the ARIMA(0,1,0) model with constant]

(They are trying to pick how many "p,d,q" features you need to prevent autocorrelation from being a problem while improving the forecasting capability of the model. They are showing you how it's done by taking you through this example. Note the P-value, which shows whether each estimated parameter is significantly different from zero. The number of iterations refers to the number of times the model has to go back to estimate the parameters of the equation being used to forecast the series.)

Notice that the series appears approximately stationary with no long-term trend: it exhibits a definite tendency to return to its mean, albeit a somewhat lazy one. The ACF plot confirms a slight amount of positive autocorrelation:

[Figure: ACF plot of the differenced UNITS series]

(In other words, by using a "change" (first difference) model they have eliminated a lot of the significant autocorrelation, but not for the first three lags! They're still above .15.)

The standard deviation has been dramatically reduced from 17.5593 to 1.54371 (a variance of 2.38304), as shown in the Analysis Summary:

Forecast model selected: ARIMA(0,1,0) with constant

ARIMA Model Summary

| Parameter | Estimate | Stnd. Error | t | P-value |
|---|---|---|---|---|
| Mean | 0.50095 | 0.141512 | 3.53999 | 0.000535 |
| Constant | 0.50095 | | | |

Backforecasting: yes
Estimated white noise variance = 2.38304 with 148 degrees of freedom
Estimated white noise standard deviation = 1.54371
Number of iterations: 2

Is the series stationary at this point, or is another difference needed? Because the trend has been completely eliminated and the amount of autocorrelation which remains is small, it appears as though the series may be satisfactorily stationary. If we try a second nonseasonal difference--i.e., an ARIMA(0,2,0) model--just to see what the effect is, we obtain the following time series plot:

[Figure: time series plot of the residuals of the ARIMA(0,2,0) model with constant]

If you look closely, you will notice the signs of overdifferencing--i.e., a pattern of changes of sign from one observation to the next. This is confirmed by the ACF plot, which now has a negative spike at lag 1 that is close to 0.5 in magnitude:

[Figure: ACF plot of the twice-differenced UNITS series]

Is the series now overdifferenced? Apparently so, because the standard deviation has actually increased from 1.54371 to 1.81266:

Forecast model selected: ARIMA(0,2,0) with constant

ARIMA Model Summary

| Parameter | Estimate | Stnd. Error | t | P-value |
|---|---|---|---|---|
| Mean | 0.000782562 | 0.166869 | 0.00468969 | 0.996265 |
| Constant | 0.000782562 | | | |

Backforecasting: yes
Estimated white noise variance = 3.28573 with 147 degrees of freedom
Estimated white noise standard deviation = 1.81266
Number of iterations: 1

Thus, it appears that we should start by taking a single nonseasonal difference. However, this is not the last word on the subject: we may find when we add AR or MA terms that a model with another order of differencing works a little better.
Or, we may conclude that the properties of the long-term forecasts are more intuitively reasonable with another order of differencing (more about this later). But for now, we will go with one order of nonseasonal differencing.

Identifying the numbers of AR or MA terms

ACF and PACF plots
AR and MA signatures
A model for the UNITS series--ARIMA(2,1,0)
Mean versus constant
Alternative model for the UNITS series--ARIMA(0,2,1)
Which model should we choose?
Mixed models
Unit roots

ACF and PACF plots: After a time series has been stationarized by differencing, the next step in fitting an ARIMA model is to determine whether AR or MA terms are needed to correct any autocorrelation that remains in the differenced series. Of course, with software like Statgraphics, you could just try some different combinations of terms and see what works best. But there is a more systematic way to do this. By looking at the autocorrelation function (ACF) and partial autocorrelation function (PACF) plots of the differenced series, you can tentatively identify the numbers of AR and/or MA terms that are needed. You are already familiar with the ACF plot: it is merely a bar chart of the coefficients of correlation between a time series and lags of itself. The PACF plot is a plot of the partial correlation coefficients between the series and lags of itself.

In general, the "partial" correlation between two variables is the amount of correlation between them which is not explained by their mutual correlations with a specified set of other variables. For example, if we are regressing a variable Y on other variables X1, X2, and X3, the partial correlation between Y and X3 is the amount of correlation between Y and X3 that is not explained by their common correlations with X1 and X2. This partial correlation can be computed as the square root of the reduction in variance that is achieved by adding X3 to the regression of Y on X1 and X2.
A partial autocorrelation is the amount of correlation between a variable and a lag of itself that is not explained by correlations at all lower-order lags. The autocorrelation of a time series Y at lag 1 is the coefficient of correlation between Y(t) and Y(t-1), which is presumably also the correlation between Y(t-1) and Y(t-2). But if Y(t) is correlated with Y(t-1), and Y(t-1) is equally correlated with Y(t-2), then we should also expect to find correlation between Y(t) and Y(t-2). (In fact, the amount of correlation we should expect at lag 2 is precisely the square of the lag-1 correlation.) Thus, the correlation at lag 1 "propagates" to lag 2 and presumably to higher-order lags. The partial autocorrelation at lag 2 is therefore the difference between the actual correlation at lag 2 and the expected correlation due to the propagation of correlation at lag 1.

Here is the autocorrelation function (ACF) of the UNITS series, before any differencing is performed:

[Figure: ACF plot of the undifferenced UNITS series]

The autocorrelations are significant for a large number of lags--but perhaps the autocorrelations at lags 2 and above are merely due to the propagation of the autocorrelation at lag 1. This is confirmed by the PACF plot:

[Figure: PACF plot of the undifferenced UNITS series]

Note that the PACF plot has a significant spike only at lag 1, meaning that all the higher-order autocorrelations are effectively explained by the lag-1 autocorrelation. (The PACF plot is just like the correlogram EXCEPT that the correlation shown at the second lag is NET of what was explained by the first lag. The correlation shown at the third lag is NET of what was explained by the previous two lags. By using multiple regression with all of the lags you are getting these net correlations; some of you did this on quiz08.)

The partial autocorrelations at all lags can be computed by fitting a succession of autoregressive models with increasing numbers of lags.
In particular, the partial autocorrelation at lag k is equal to the estimated AR(k) coefficient in an autoregressive model with k terms--i.e., a multiple regression model in which Y is regressed on LAG(Y,1), LAG(Y,2), etc., up to LAG(Y,k). Thus, by mere inspection of the PACF you can determine how many AR terms you need to use to explain the autocorrelation pattern in a time series: if the partial autocorrelation is significant at lag k and not significant at any higher-order lags--i.e., if the PACF "cuts off" at lag k--then this suggests that you should try fitting an autoregressive model of order k.

The PACF of the UNITS series provides an extreme example of the cut-off phenomenon: it has a very large spike at lag 1 and no other significant spikes, indicating that in the absence of differencing an AR(1) model should be used. However, the AR(1) term in this model will turn out to be equivalent to a first difference, because the estimated AR(1) coefficient (which is the height of the PACF spike at lag 1) will be almost exactly equal to 1. Now, the forecasting equation for an AR(1) model for a series Y with no orders of differencing is:

$$\hat{Y}(t) = \mu + \phi\, Y(t-1)$$

If the AR(1) coefficient ("phi") in this equation is equal to 1, it is equivalent to predicting that the first difference of Y is constant--i.e., it is equivalent to the equation of the random walk model with growth:

$$\hat{Y}(t) = \mu + Y(t-1)$$

The PACF of the UNITS series is telling us that, if we don't difference it, then we should fit an AR(1) model which will turn out to be equivalent to taking a first difference. In other words, it is telling us that UNITS really needs an order of differencing to be stationarized. (In their case, here, the naïve model works!)
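The description of the PACF as "the last coefficient in a succession of autoregressions" translates directly into a loop of least-squares fits. This is a minimal sketch, assuming NumPy and ordinary least squares with a constant term; the function name and the simulated data are hypothetical, and packaged PACF routines may use a different estimator and give slightly different values.

```python
import numpy as np

def pacf_by_regression(y, max_lag):
    """Partial autocorrelation at lag k, taken as the coefficient on LAG(Y,k)
    when Y(t) is regressed on a constant and LAG(Y,1), ..., LAG(Y,k)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    result = []
    for k in range(1, max_lag + 1):
        target = y[k:]                                       # Y(t) for t = k..n-1
        lags = [y[k - j:n - j] for j in range(1, k + 1)]      # Y(t-j) for the same t
        X = np.column_stack([np.ones(n - k)] + lags)
        coef, *_ = np.linalg.lstsq(X, target, rcond=None)
        result.append(coef[-1])                               # coefficient on LAG(Y,k)
    return np.array(result)

# Hypothetical data: an undifferenced random walk with growth.
rng = np.random.default_rng(2)
y = np.cumsum(0.5 + rng.normal(size=300))

pacf = pacf_by_regression(y, 4)
print(np.round(pacf, 3))
```

For a series like this, the lag-1 value (the estimated AR(1) coefficient) should come out close to 1 and the higher lags close to 0, which is the same "AR(1) that is really a first difference" pattern described above for UNITS.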
AR and MA signatures: If the PACF displays a sharp cutoff while the ACF decays more slowly (i.e., has significant spikes at higher lags), we say that the stationarized series displays an "AR signature," meaning that the autocorrelation pattern can be explained more easily by adding AR terms than by adding MA terms. You will probably find that an AR signature is commonly associated with positive autocorrelation at lag 1--i.e., it tends to arise in series which are slightly underdifferenced. The reason for this is that an AR term can act like a "partial difference" in the forecasting equation. For example, in an AR(1) model, the AR term acts like a first difference if the autoregressive coefficient is equal to 1, it does nothing if the autoregressive coefficient is zero, and it acts like a partial difference if the coefficient is between 0 and 1. So, if the series is slightly underdifferenced--i.e., if the nonstationary pattern of positive autocorrelation has not completely been eliminated--it will "ask for" a partial difference by displaying an AR signature. Hence, we have the following rule of thumb for determining when to add AR terms:

Rule 6: If the PACF of the differenced series displays a sharp cutoff and/or the lag-1 autocorrelation is positive--i.e., if the series appears slightly "underdifferenced"--then consider adding an AR term to the model. The lag at which the PACF cuts off is the indicated number of AR terms.

In principle, any autocorrelation pattern can be removed from a stationarized series by adding enough autoregressive terms (lags of the stationarized series) to the forecasting equation, and the PACF tells you how many such terms are likely to be needed. However, this is not always the simplest way to explain a given pattern of autocorrelation: sometimes it is more efficient to add MA terms (lags of the forecast errors) instead.
The autocorrelation function (ACF) plays the same role for MA terms that the PACF plays for AR terms--that is, the ACF tells you how many MA terms are likely to be needed to remove the remaining autocorrelation from the differenced series. If the autocorrelation is significant at lag k but not at any higher lags--i.e., if the ACF "cuts off" at lag k--this indicates that exactly k MA terms should be used in the forecasting equation. In the latter case, we say that the stationarized series displays an "MA signature," meaning that the autocorrelation pattern can be explained more easily by adding MA terms than by adding AR terms.

An MA signature is commonly associated with negative autocorrelation at lag 1--i.e., it tends to arise in series which are slightly overdifferenced. The reason for this is that an MA term can "partially cancel" an order of differencing in the forecasting equation. To see this, recall that an ARIMA(0,1,1) model without constant is equivalent to a Simple Exponential Smoothing model. The forecasting equation for this model is

$$\hat{Y}(t) = Y(t-1) - \theta\, e(t-1)$$

where the MA(1) coefficient "theta" corresponds to the quantity "1-alpha" in the SES model. If the MA(1) coefficient is equal to 1, this corresponds to an SES model with alpha=0, which is just a CONSTANT model because the forecast is never updated. This means that when the MA(1) coefficient is equal to 1, it is actually cancelling out the differencing operation that ordinarily enables the SES forecast to re-anchor itself on the last observation. On the other hand, if the moving-average coefficient is equal to 0, this model reduces to a random walk model--i.e., it leaves the differencing operation alone. So, if the MA(1) coefficient is something greater than 0, it is as if we are partially cancelling an order of differencing. If the series is already slightly overdifferenced--i.e., if negative autocorrelation has been introduced--then it will "ask for" a difference to be partly cancelled by displaying an MA signature.
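The three cases just described (theta equal to 1, equal to 0, and in between) can be verified with a few lines of arithmetic. This is a minimal sketch in plain Python; the function names, the choice to start the recursion at the first observation, and the sample numbers are all assumptions made for illustration.

```python
def arima011_forecasts(y, theta):
    """One-step forecasts from Yhat(t) = Y(t-1) - theta * e(t-1), no constant."""
    forecasts = [y[0]]  # assumption: initial forecast equals the first observation
    for t in range(1, len(y)):
        error_prev = y[t - 1] - forecasts[t - 1]   # e(t-1) = data minus forecast
        forecasts.append(y[t - 1] - theta * error_prev)
    return forecasts

def ses_forecasts(y, alpha):
    """Simple exponential smoothing: Yhat(t) = alpha*Y(t-1) + (1-alpha)*Yhat(t-1)."""
    forecasts = [y[0]]  # same starting assumption as above
    for t in range(1, len(y)):
        forecasts.append(alpha * y[t - 1] + (1 - alpha) * forecasts[t - 1])
    return forecasts

y = [10.0, 12.0, 11.0, 13.0, 12.5]  # hypothetical observations

constant_model = arima011_forecasts(y, theta=1.0)  # never updates: stays at y[0]
random_walk = arima011_forecasts(y, theta=0.0)     # forecast = last observation
arima_mid = arima011_forecasts(y, theta=0.3)
ses_mid = ses_forecasts(y, alpha=0.7)              # alpha = 1 - theta

print(constant_model)
print(random_walk)
print(arima_mid)
print(ses_mid)
```

With theta between 0 and 1, the two recursions should agree up to rounding, since substituting e(t-1) = Y(t-1) - Yhat(t-1) into the ARIMA form gives (1-theta)*Y(t-1) + theta*Yhat(t-1).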
(A lot of arm-waving is going on here! A more rigorous explanation of this effect is found in the "Mathematics of ARIMA Models" handout.) Hence the following additional rule of thumb:

Rule 7: If the ACF of the differenced series displays a sharp cutoff and/or the lag-1 autocorrelation is negative--i.e., if the series appears slightly "overdifferenced"--then consider adding an MA term to the model. The lag at which the ACF cuts off is the indicated number of MA terms.

A model for the UNITS series--ARIMA(2,1,0): Previously we determined that the UNITS series needed (at least) one order of nonseasonal differencing to be stationarized. After taking one nonseasonal difference--i.e., fitting an ARIMA(0,1,0) model with constant--the ACF and PACF plots show that (a) the correlation at lag 1 is significant and positive, and (b) the PACF has a sharper "cutoff" than the ACF. In particular, the PACF has only two significant spikes, while the ACF has four. Thus, according to Rule 6 above, the differenced series displays an AR(2) signature. If we therefore set the order of the AR term to 2--i.e., fit an ARIMA(2,1,0) model--the ACF and PACF plots of the residuals show that the autocorrelation at the crucial lags--namely lags 1 and 2--has been eliminated, and there is no discernible pattern in higher-order lags. The time series plot of the residuals shows a slightly worrisome tendency to wander away from the mean. However, the analysis summary report shows that the model nonetheless performs quite well in the validation period, both AR coefficients are significantly different from zero, and the standard deviation of the residuals has been reduced from 1.54371 to 1.45215 (about 6%) by the addition of the AR terms. Furthermore, there is no sign of a "unit root" because the sum of the AR coefficients (0.252254+0.195572) is not close to 1. (Unit roots are discussed in more detail below.) On the whole, this appears to be a good model.
Analysis Summary

Data variable: units
Number of observations = 150
Start index = 1/80
Sampling interval = 1.0 month(s)
Length of seasonality = 12

Forecast Summary

Nonseasonal differencing of order: 1
Forecast model selected: ARIMA(2,1,0) with constant
Number of forecasts generated: 24
Number of periods withheld for validation: 30

| Statistic | Estimation Period | Validation Period |
| --- | --- | --- |
| MSE | 2.10757 | 0.757818 |
| MAE | 1.11389 | 0.726546 |
| MAPE | 0.500834 | 0.280088 |
| ME | 0.0197748 | -0.200813 |
| MPE | 0.0046833 | -0.0778831 |

ARIMA Model Summary

| Parameter | Estimate | Stnd. Error | t | P-value |
| --- | --- | --- | --- | --- |
| AR(1) | 0.252254 | 0.0915209 | 2.756240 | 0.006593 |
| AR(2) | 0.195572 | 0.0916343 | 2.13426 | 0.034492 |
| Mean | 0.467566 | 0.240178 | 1.94675 | 0.053485 |
| Constant | 0.258178 | | | |

Backforecasting: yes
Estimated white noise variance = 2.10875 with 146 degrees of freedom
Estimated white noise standard deviation = 1.45215
Number of iterations: 1

The (untransformed) forecasts for the model show a linear upward trend projected into the future. The trend in the long-term forecasts is due to the fact that the model includes one nonseasonal difference and a constant term: this model is basically a random walk with growth (NF#1) fine-tuned by the addition of two autoregressive terms--i.e., two lags of the differenced series. The slope of the long-term forecasts (i.e., the average increase from one period to another) is equal to the mean term in the model summary (0.467566). The forecasting equation is:

$$\hat{Y}(t) = \mu + Y(t-1) + \phi_1\,(Y(t-1) - Y(t-2)) + \phi_2\,(Y(t-2) - Y(t-3))$$

where "mu" is the constant term in the model summary (0.258178), "phi-1" is the AR(1) coefficient (0.252254) and "phi-2" is the AR(2) coefficient (0.195572).
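As a rough check on the claim that the long-term forecasts settle into a straight line whose slope equals the reported mean, the ARIMA(2,1,0) forecasting recursion can be iterated by hand. This is only a sketch: the three starting values in `history` are hypothetical (the UNITS data are not reproduced here), and the function name is an assumption. The coefficients are the ones reported in the model summary.

```python
def arima210_forecasts(history, mu, phi1, phi2, steps):
    """Iterate Yhat(t) = mu + Y(t-1) + phi1*(Y(t-1)-Y(t-2)) + phi2*(Y(t-2)-Y(t-3)).

    Forecasts are fed back in as if they were observations, which is how
    multi-step forecasts are produced from a one-step equation.
    """
    path = list(history)
    for _ in range(steps):
        nxt = (mu + path[-1]
               + phi1 * (path[-1] - path[-2])
               + phi2 * (path[-2] - path[-3]))
        path.append(nxt)
    return path[len(history):]


phi1, phi2, mean = 0.252254, 0.195572, 0.467566   # from the model summary
mu = mean * (1.0 - phi1 - phi2)                   # CONSTANT = MEAN * (1 - sum of AR)

history = [100.0, 101.0, 103.0]                   # hypothetical last three observations
forecasts = arima210_forecasts(history, mu, phi1, phi2, steps=24)
long_run_slope = forecasts[-1] - forecasts[-2]
print(round(mu, 6), round(long_run_slope, 6))
```

The first few forecast steps still reflect the most recent changes in the hypothetical history, and after that the period-to-period increase settles at the mean of the differenced series.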
Mean versus constant: In general, the "mean" term in the output of an ARIMA model refers to the mean of the differenced series (i.e., the average trend if the order of differencing is equal to 1), whereas the "constant" is the constant term that appears on the right-hand side of the forecasting equation. The mean and constant terms are related by the equation: CONSTANT = MEAN*(1 minus the sum of the AR coefficients). In this case, we have 0.258178 = 0.467566*(1 - 0.252254 - 0.195572).

Alternative model for the UNITS series--ARIMA(0,2,1): Recall that when we began to analyze the UNITS series, we were not entirely sure of the correct order of differencing to use. One order of nonseasonal differencing yielded the lowest standard deviation (and a pattern of mild positive autocorrelation), while two orders of nonseasonal differencing yielded a more stationary-looking time series plot (but with rather strong negative autocorrelation). In the ACF and PACF of the series with two nonseasonal differences, the single negative spike at lag 1 in the ACF is an MA(1) signature, according to Rule 7 above. Thus, if we were to use 2 nonseasonal differences, we would also want to include an MA(1) term, yielding an ARIMA(0,2,1) model. According to Rule 5, we would also want to suppress the constant term. Here, then, are the results of fitting an ARIMA(0,2,1) model without constant:

Analysis Summary

Data variable: units
Number of observations = 150
Start index = 1/80
Sampling interval = 1.0 month(s)

Forecast Summary

Nonseasonal differencing of order: 2
Forecast model selected: ARIMA(0,2,1)
Number of forecasts generated: 24
Number of periods withheld for validation: 30

| Statistic | Estimation Period | Validation Period |
| --- | --- | --- |
| MSE | 2.13793 | 0.856734 |
| MAE | 1.15376 | 0.771561 |
| MAPE | 0.518221 | 0.297298 |
| ME | 0.0267768 | -0.038966 |
| MPE | 0.017097 | -0.0148876 |

ARIMA Model Summary

| Parameter | Estimate | Stnd. Error | t | P-value |
| --- | --- | --- | --- | --- |
| MA(1) | 0.75856 | 0.0607947 | 12.4774 | .000000 |

Backforecasting: yes
Estimated white noise variance = 2.1404 with 147 degrees of freedom
Estimated white noise standard deviation = 1.46301
Number of iterations: 4

Notice that the estimated white noise standard deviation (RMSE) is only very slightly higher for this model than the previous one (1.46301 here versus 1.45215 previously). The forecasting equation for this model is:

$$\hat{Y}(t) = 2Y(t-1) - Y(t-2) - \theta_1\, e(t-1)$$

where theta-1 is the MA(1) coefficient. Recall that this is similar to a Linear Exponential Smoothing model, with the MA(1) coefficient corresponding to the quantity 2*(1-alpha) in the LES model. The MA(1) coefficient of 0.76 in this model suggests that an LES model with alpha in the vicinity of 0.62 would fit about equally well, since 2*(1-0.62) = 0.76. Actually, when an LES model is fitted to the same data, the optimal value of alpha turns out to be around 0.61, which is close. Here is a model comparison report that shows the results of fitting the ARIMA(2,1,0) model with constant, the ARIMA(0,2,1) model without constant, and the LES model:

Model Comparison

Data variable: units
Number of observations = 150
Start index = 1/80
Sampling interval = 1.0 month(s)
Number of periods withheld for validation: 30

Models
(A) ARIMA(2,1,0) with constant
(B) ARIMA(0,2,1)
(C) Brown's linear exp. smoothing with alpha = 0.6067

Estimation Period

| Model | MSE | MAE | MAPE | ME | MPE |
| --- | --- | --- | --- | --- | --- |
| (A) | 2.10757 | 1.11389 | 0.500834 | 0.0197748 | 0.0046833 |
| (B) | 2.13793 | 1.15376 | 0.518221 | 0.0267768 | 0.017097 |
| (C) | 2.18056 | 1.14308 | 0.514482 | 0.000765696 | 0.0041274 |

| Model | RMSE | RUNS | RUNM | AUTO | MEAN | VAR |
| --- | --- | --- | --- | --- | --- | --- |
| (A) | 1.45175 | OK | OK | OK | OK | OK |
| (B) | 1.46217 | OK | OK | OK | OK | OK |
| (C) | 1.47667 | OK | OK | OK | OK | OK |

Validation Period

| Model | MSE | MAE | MAPE | ME | MPE |
| --- | --- | --- | --- | --- | --- |
| (A) | 0.757818 | 0.726546 | 0.280088 | -0.200813 | -0.0778831 |
| (B) | 0.856734 | 0.771561 | 0.297298 | -0.038966 | -0.0148876 |
| (C) | 0.882234 | 0.796109 | 0.306593 | -0.014557 | -0.00527448 |

The three models perform nearly identically in the estimation period, and the ARIMA(2,1,0) model with constant appears slightly better than the other two in the validation period. On the basis of these statistical results alone, it would be hard to choose among the three models. However, if we plot the long-term forecasts made by the ARIMA(0,2,1) model without constant (which are essentially the same as those of the LES model), we see a significant difference from those of the earlier model. The forecasts have somewhat less of an upward trend than those of the earlier model--because the local trend near the end of the series is slightly less than the average trend over the whole series--but the confidence intervals widen much more rapidly. The model with two orders of differencing assumes that the trend in the series is time-varying, hence it considers the distant future to be much more uncertain than does the model with only one order of differencing. Which model should we choose? That depends on the assumptions we are comfortable making with respect to the constancy of the trend in the data.
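The difference in how fast the confidence intervals widen can be reproduced approximately from the fitted coefficients alone. The sketch below uses the standard psi-weight representation, in which the h-step-ahead forecast standard error is sigma times the square root of the sum of the first h squared psi-weights. It ignores parameter-estimation uncertainty, and the function names are assumptions, so treat the numbers as indicative rather than as what the software reports.

```python
import math


def poly_mul(a, b):
    """Multiply two polynomials in B given as coefficient lists (B^0 first)."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def psi_weights(ar_poly, ma_poly, n):
    """First n coefficients of ma_poly(B) / ar_poly(B); both start with 1."""
    psi = []
    for j in range(n):
        val = ma_poly[j] if j < len(ma_poly) else 0.0
        for i in range(1, min(j, len(ar_poly) - 1) + 1):
            val -= ar_poly[i] * psi[j - i]
        psi.append(val)
    return psi


def forecast_se(sigma, psi, h):
    """Standard error of the h-step-ahead forecast."""
    return sigma * math.sqrt(sum(p * p for p in psi[:h]))


diff = [1.0, -1.0]  # the differencing operator (1 - B)

# Model A: ARIMA(2,1,0), coefficients and sigma from the model summary
psi_a = psi_weights(poly_mul([1.0, -0.252254, -0.195572], diff), [1.0], 24)
# Model B: ARIMA(0,2,1), coefficient and sigma from the model summary
psi_b = psi_weights(poly_mul(diff, diff), [1.0, -0.75856], 24)

for h in (1, 6, 12, 24):
    print(h, round(forecast_se(1.45215, psi_a, h), 2),
          round(forecast_se(1.46301, psi_b, h), 2))
```

For the model with one difference the psi-weights level off, so the standard error grows roughly with the square root of the horizon. For the model with two differences the psi-weights keep growing linearly, which is why its intervals fan out much faster.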
The model with only one order of differencing assumes a constant average trend--it is essentially a fine-tuned random walk model with growth--and it therefore makes relatively conservative trend projections. It is also fairly optimistic about the accuracy with which it can forecast more than one period ahead. The model with two orders of differencing assumes a time-varying local trend--it is essentially a linear exponential smoothing model--and its trend projections are somewhat more fickle. As a general rule in this kind of situation, I would recommend choosing the model with the lower order of differencing, other things being roughly equal. In practice, random-walk or simple-exponential-smoothing models often seem to work better than linear exponential smoothing models.

Mixed models: In most cases, the best model turns out to be a model that uses either only AR terms or only MA terms, although in some cases a "mixed" model with both AR and MA terms may provide the best fit to the data. However, care must be exercised when fitting mixed models. It is possible for an AR term and an MA term to cancel each other's effects, even though both may appear significant in the model (as judged by the t-statistics of their coefficients). Thus, for example, suppose that the "correct" model for a time series is an ARIMA(0,1,1) model, but instead you fit an ARIMA(1,1,2) model--i.e., you include one additional AR term and one additional MA term. Then the additional terms may end up appearing significant in the model, but internally they may be merely working against each other. The resulting parameter estimates may be ambiguous, and the parameter estimation process may take very many (e.g., more than 10) iterations to converge.
Hence:

Rule 8: It is possible for an AR term and an MA term to cancel each other's effects, so if a mixed AR-MA model seems to fit the data, also try a model with one fewer AR term and one fewer MA term--particularly if the parameter estimates in the original model require more than 10 iterations to converge.

For this reason, ARIMA models cannot be identified by a "backward stepwise" approach that includes both AR and MA terms. In other words, you cannot begin by including several terms of each kind and then throw out the ones whose estimated coefficients are not significant. Instead, you normally follow a "forward stepwise" approach, adding terms of one kind or the other as indicated by the appearance of the ACF and PACF plots.

Unit roots: If a series is grossly under- or overdifferenced--i.e., if a whole order of differencing needs to be added or cancelled--this is often signalled by a "unit root" in the estimated AR or MA coefficients of the model. An AR(1) model is said to have a unit root if the estimated AR(1) coefficient is almost exactly equal to 1. (By "exactly equal" I really mean not significantly different from 1, in terms of the coefficient's own standard error.) When this happens, it means that the AR(1) term is precisely mimicking a first difference, in which case you should remove the AR(1) term and add an order of differencing instead. (This is exactly what would happen if you fitted an AR(1) model to the undifferenced UNITS series, as noted earlier.) In a higher-order AR model, a unit root exists in the AR part of the model if the sum of the AR coefficients is exactly equal to 1. In this case you should reduce the order of the AR term by 1 and add an order of differencing. A time series with a unit root in the AR coefficients is nonstationary--i.e., it needs a higher order of differencing.
Rule 9: If there is a unit root in the AR part of the model--i.e., if the sum of the AR coefficients is almost exactly 1--you should reduce the number of AR terms by one and increase the order of differencing by one. Similarly, an MA(1) model is said to have a unit root if the estimated MA(1) coefficient is exactly equal to 1. When this happens, it means that the MA(1) term is exactly cancelling a first difference, in which case, you should remove the MA(1) term and also reduce the order of differencing by one. In a higher-order MA model, a unit root exists if the sum of the MA coefficients is exactly equal to 1. Rule 10: If there is a unit root in the MA part of the model--i.e., if the sum of the MA coefficients is almost exactly 1--you should reduce the number of MA terms by one and reduce the order of differencing by one. For example, if you fit a linear exponential smoothing model (an ARIMA(0,2,2) model) when a simple exponential smoothing model (an ARIMA(0,1,1) model) would have been sufficient, you may find that the sum of the two MA coefficients is very nearly equal to 1. By reducing the MA order and the order of differencing by one each, you obtain the more appropriate SES model. A forecasting model with a unit root in the estimated MA coefficients is said to be noninvertible, meaning that the residuals of the model cannot be considered as estimates of the "true" random noise that generated the time series. Another symptom of a unit root is that the forecasts of the model may "blow up" or otherwise behave bizarrely. If the time series plot of the longer-term forecasts of the model looks strange, you should check the estimated coefficients of your model for the presence of a unit root. Rule 11: If the long-term forecasts appear erratic or unstable, there may be a unit root in the AR or MA coefficients. 
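The reason a sum of MA coefficients equal to 1 signals a cancelled difference is that the MA polynomial 1 - theta1*B - ... - thetaq*B^q then contains a factor (1 - B), which is exactly the differencing operator. The sketch below divides that factor out. The function name is an assumption, and the ARIMA(0,2,2) coefficients 1.3 and -0.3 are hypothetical values chosen so that they sum to 1.

```python
def divide_out_difference(thetas):
    """Divide the MA polynomial 1 - theta1*B - ... - thetaq*B^q by (1 - B).

    Returns (reduced_thetas, remainder). The remainder equals
    1 - sum(thetas), so a remainder near zero indicates an MA unit root.
    """
    coeffs = [1.0] + [-t for t in thetas]
    quotient = []
    running = 0.0
    for c in coeffs:
        running += c          # synthetic division by (1 - B) is a running sum
        quotient.append(running)
    remainder = quotient.pop()
    reduced_thetas = [-q for q in quotient[1:]]
    return reduced_thetas, remainder


# Hypothetical ARIMA(0,2,2) estimates whose MA coefficients sum to 1.
reduced, remainder = divide_out_difference([1.3, -0.3])
print(reduced, remainder)     # about [0.3] and 0: an ARIMA(0,1,1) with theta near 0.3

# The ARIMA(0,2,1) model fitted to UNITS: the remainder is well away from zero.
print(divide_out_difference([0.75856]))
```

When the remainder is close to zero, dropping one MA term and one order of differencing (Rule 10) leaves the reduced coefficients as a starting point for the simpler model. In practice the simpler model should be re-estimated rather than taken from this division.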
None of these problems arose with the two models fitted here, because we were careful to start with plausible orders of differencing and appropriate numbers of AR and MA coefficients by studying the ACF and PACF plots.

Estimation of ARIMA models

Linear versus nonlinear least squares: ARIMA models which include only AR terms are special cases of linear regression models, hence they can be fitted by ordinary least squares. AR forecasts are a linear function of the coefficients as well as a linear function of past data. In principle, least-squares estimates of AR coefficients can be exactly calculated from autocorrelations in a single "iteration". In practice, you can fit an AR model in the Multiple Regression procedure: just regress DIFF(Y) (or whatever) on lags of itself. (But you would get slightly different results from the ARIMA procedure--see below!) While you can use regression to estimate some ARIMA models--at least the AR(p) component--you generally have to run the regressions over several "iterations," as noted above, to re-estimate the coefficients. This changes the meaning, accuracy, and usefulness of the t-statistics and p-values that we normally use in regressions.

ARIMA models which include MA terms are similar to regression models, but can't be fitted by ordinary least squares: forecasts are a linear function of past data, but they are nonlinear functions of coefficients--e.g., an ARIMA(0,1,1) model without constant is an exponentially weighted moving average:

$$\hat{Y}(t) = (1-\theta)\left[\,Y(t-1) + \theta\,Y(t-2) + \theta^2\,Y(t-3) + \dots\,\right]$$

...in which the forecasts are a nonlinear function of the MA(1) parameter ("theta"). Another way to look at the problem: you can't fit MA models using ordinary multiple regression because there's no way to specify ERRORS as an independent variable--the errors are not known until the model is fitted!
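To see why estimating an MA coefficient is a search problem rather than a regression, consider the following sketch for an ARIMA(0,1,1) model without constant. For any trial value of theta, the errors are built up one period at a time, and the sum of squared errors is then compared across trial values. The crude grid search stands in for the nonlinear optimizer a real package would use. The simulated data, the zero starting error, and all function names are assumptions made for illustration.

```python
import random


def sse_arima011(y, theta):
    """Sum of squared one-step errors for Yhat(t) = Y(t-1) - theta*e(t-1).

    The error before the first forecast is assumed to be zero.
    """
    e_prev = 0.0
    sse = 0.0
    for t in range(1, len(y)):
        forecast = y[t - 1] - theta * e_prev
        e_prev = y[t] - forecast      # this error feeds the next forecast
        sse += e_prev ** 2
    return sse


def grid_search_theta(y, steps=199):
    """Pick the theta in [-0.99, 0.99] with the smallest SSE."""
    candidates = [-0.99 + 1.98 * i / (steps - 1) for i in range(steps)]
    return min(candidates, key=lambda th: sse_arima011(y, th))


def simulate_arima011(n, theta, seed):
    """Simulate Y(t) = Y(t-1) + e(t) - theta*e(t-1) with unit-variance noise."""
    rng = random.Random(seed)
    y, e_prev = [0.0], 0.0
    for _ in range(n - 1):
        e = rng.gauss(0.0, 1.0)
        y.append(y[-1] + e - theta * e_prev)
        e_prev = e
    return y


y_sim = simulate_arima011(400, theta=0.6, seed=1)
theta_hat = grid_search_theta(y_sim)
print(round(theta_hat, 2))
```

Each evaluation of `sse_arima011` is one pass through the data, so every step of the search costs a full recalculation of the errors. That is the sense in which MA estimation needs several "iterations" where an AR-only regression needs one.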
The errors need to be calculated sequentially, period by period, given the current parameter estimates. MA models therefore require a nonlinear estimation algorithm to be used, similar to the "Solver" algorithm in Excel. The algorithm uses a search process that typically requires 5 to 10 "iterations" and occasionally may not converge. You can adjust the tolerances for determining step sizes and stopping criteria for the search (although the default values are usually OK).

"Mean" versus "constant": The "mean" and the "constant" in ARIMA model-fitting results are different numbers whenever the model includes AR terms. Suppose that you fit an ARIMA model to Y in which p is the number of autoregressive terms. (Assume for convenience that there are no MA terms.) Let y denote the differenced (stationarized) version of Y--e.g., y(t) = Y(t)-Y(t-1) if one nonseasonal difference was used. Then the AR(p) forecasting equation for y is:

$$\hat{y}(t) = \mu + \phi_1\, y(t-1) + \dots + \phi_p\, y(t-p)$$

This is just an ordinary multiple regression model in which "mu" is the constant term, "phi-1" is the coefficient of the first lag of y, and so on. Now, internally, the software converts this slope-intercept form of the regression equation to an equivalent form in terms of deviations from the mean. Let m denote the mean of the stationarized series y. Then the p-order autoregressive equation can be written in terms of deviations from the mean as:

$$\hat{y}(t) - m = \phi_1\,(y(t-1) - m) + \dots + \phi_p\,(y(t-p) - m)$$

By collecting all the constant terms in this equation, we see it is equivalent to the "mu" form of the equation if:

$$\mu = m\,(1 - \phi_1 - \dots - \phi_p)$$

The software actually estimates "m" (along with the other model parameters) and reports this as the MEAN in the model-fitting results, along with its standard error and t-statistic, etc. The CONSTANT (i.e., "mu") is then calculated according to the preceding formula, i.e., CONSTANT = MEAN*(1 - sum of AR coefficients). If the model does not contain any AR terms, the MEAN and the CONSTANT are identical.
In a model with one order of nonseasonal differencing (only), the MEAN is the trend factor (average period-to-period change). In a model with one order of seasonal differencing (only), the MEAN is the annual trend factor (average year-to-year change). "Backforecasting" The basic problem: an ARIMA model (or other time series model) predicts future values of the time series from past values--but how should the forecasting equation be initialized to make a forecast for the very first observation? (Actually, AR models can be initialized by dropping the first few observations--although this is inefficient and wastes data-- but MA models require an estimate of a prior error before they can make the first forecast.) Strange but true: a stationary time series looks the same going forward or backward in time, therefore... The same model that predicts the future of a series can also be used to predict its past. The solution: to squeeze the most information out of the available data, the best way to initialize an ARIMA model (or any time series forecasting model) is to use backward forecasting ("backforecasting") to obtain estimates of data values prior to period 1. When you use the backforecasting option in ARIMA estimation, the search algorithm actually makes two passes through the data on each iteration: first a backward pass is made to estimate prior data values using the current parameter estimates, then the estimated prior data values are used to initialize the forecasting equation for a forward pass through the data. If you DON'T use the backforecasting option, the forecasting equation is initialized by assuming that prior values of the stationarized series were equal to the mean. If you DO use the backforecasting option, then the backforecasts that are used to initialize the model are implicit parameters of the model, which must be estimated along with the AR and MA coefficients. 
The number of additional implicit parameters is roughly equal to the highest lag in the model--usually 2 or 3 for a nonseasonal model, and s+1 or 2s+1 for a seasonal model with seasonality=s. (If the model includes both a seasonal difference and a seasonal AR or MA term, it needs two seasons' worth of prior values to start up!) Note that with either setting of the backforecasting option, an AR model is estimated in a different way than it would be estimated in the Multiple Regression procedure (missing values are not merely ignored--they are replaced either with an estimate of the mean or with backforecasts), hence an AR model fitted in the ARIMA procedure will never yield exactly the same parameter estimates as an AR model fitted in the Multiple Regression procedure.

Conventional wisdom: turn backforecasting OFF when you are unsure if the current model is valid, and turn it ON to get final parameter estimates once you're reasonably sure the model is valid. If the model is mis-specified, backforecasting may lead to failures of the parameter estimates to converge and/or to unit-root problems.
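The two-pass idea behind backforecasting can be sketched for the simplest case, an MA(1) model y(t) = e(t) - theta*e(t-1) applied to a stationarized series with zero mean. This follows the textbook backcasting recipe and is not necessarily what any particular package does internally. The data values and the function name are hypothetical.

```python
def ma1_errors(y, theta, backforecast=True):
    """Residuals of y(t) = e(t) - theta*e(t-1) for a zero-mean series y.

    Without backforecasting, the error before period 1 is taken to be zero.
    With backforecasting, a backward pass first estimates the value of the
    series one period before the data starts, and that estimate supplies
    the starting error for the forward pass.
    """
    e_prior = 0.0
    if backforecast:
        # Backward pass: run the same model on the series in reverse time,
        # starting from a zero error just after the end of the data.
        backward_error = 0.0
        for value in reversed(y):
            backward_error = value + theta * backward_error
        # Backforecast of the period before the data starts.
        y0_hat = -theta * backward_error
        # No earlier information exists, so the forecast of that period is
        # zero and its error equals the backforecast itself.
        e_prior = y0_hat
    errors = []
    e_prev = e_prior
    for value in y:
        e_t = value + theta * e_prev   # actual minus forecast (-theta*e_prev)
        errors.append(e_t)
        e_prev = e_t
    return errors


# Hypothetical zero-mean stationarized series, for illustration only.
y_stat = [0.5, -1.2, 0.8, 0.3, -0.4]
with_bf = ma1_errors(y_stat, 0.6, backforecast=True)
without_bf = ma1_errors(y_stat, 0.6, backforecast=False)
print([round(a - b, 5) for a, b in zip(with_bf, without_bf)])
```

The two sets of residuals differ most in the first period, and the gap shrinks by a factor of theta each period after that. This is why the choice of initialization matters mainly for short series or for MA coefficients close to 1.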