Time Series II: Autoregression and Moving Average

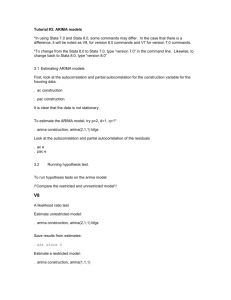

IV. Moving Average in Time Series Data

A. What is a moving average process?

In a time series with an MA process, each period’s error is a weighted combination of this period’s random error and last period’s random error. The process depends solely on innovations, so it has no memory of past levels (whereas even an AR-1 process does remember them). Put another way, the covariance of $e_t$ and $e_{t-2}$ is zero under an MA-1 process. This sort of MA-1 process might be generated by a variable with measurement error, or by some process where the impact of a shock to y takes exactly one period to fade away. In an MA-2 process, the shock takes two periods to fade, and then has disappeared completely.

We can express an MA-1 process by the following equation, where both of the v terms are innovations, or random errors:

$e_t = \theta v_{t-1} + v_t$

B. How can we identify a moving average process?

We can look at special time series graphs called autocorrelation functions (ACFs) and partial autocorrelation functions (PACFs) to determine whether we have an AR process, an MA process, or neither. In order to produce either chart in Stata, we first need to tell it that we have time series data, tell it the name of the variable that indicates where we are in the time series, and tell it what the units (daily, monthly, yearly, etc.) of the series are. After we do this, we can ask for an ACF for a particular dependent variable, and tell it how far back in time to go.

An ACF just looks at the raw correlations between $y_t$ and $y_{t-M}$ for each lag M that we request. Stata gives us a confidence band around zero; if a correlation is outside of this band, then the correlation between $y_t$ and $y_{t-M}$ is statistically significant.

    tsset t, monthly
            time variable:  t, 1960m2 to 1972m1

    ac air, lags(6) level(95)

[Figure: ACF of air, lags 1 to 6, vertical axis from -0.50 to 1.00, with 95% confidence bands from Bartlett's formula for MA(q)]

This looks like an AR process. How do I know?
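
One way to build intuition for that question is to simulate the MA-1 process from section A and compute its sample autocorrelations, which shows the pattern an MA process would produce instead. The sketch below is only an illustration: the value of θ, the sample size, the seed, and the function names `simulate_ma1` and `sample_acf` are all assumptions made for the example, and the confidence band is the rough white-noise band rather than the Bartlett band that Stata's `ac` draws.

```python
import random


def simulate_ma1(theta, n, seed=0):
    """Draw e_t = theta * v_{t-1} + v_t with standard normal innovations v."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    return [theta * v[t - 1] + v[t] for t in range(1, n + 1)]


def sample_acf(series, max_lag):
    """Sample autocorrelations of the series at lags 1..max_lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return [
        sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
        for k in range(1, max_lag + 1)
    ]


theta = 0.6  # illustrative value, not taken from the handout
e = simulate_ma1(theta, n=20000, seed=42)
acf = sample_acf(e, max_lag=6)

# Rough 95% band for white noise; Stata's ac command uses Bartlett's formula instead.
band = 1.96 / len(e) ** 0.5

for lag, r in enumerate(acf, start=1):
    flag = "outside band" if abs(r) > band else "inside band"
    print(f"lag {lag}: {r:+.3f} ({flag})")

# For an MA-1 process the lag-1 autocorrelation is theta / (1 + theta^2),
# and the autocorrelation at every longer lag is zero.
print("theoretical lag-1 autocorrelation:", round(theta / (1 + theta ** 2), 3))
```

In a run like this, the lag-1 correlation should sit close to θ / (1 + θ²) while the longer lags hover near zero, which is the sharp one-period cutoff to compare against the plot above.
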
In autoregression, this year’s budget should be highly correlated with last year’s budget, and there should be enough memory in the process that its correlation with the budget from six years ago is still statistically significant. In an MA-1 process, the correlation would last only one time period: the correlations at the second through sixth lags should fall inside the confidence band.

We should see the reverse of this pattern in a PACF, which calculates the correlation at each lag controlling for the correlations at all shorter lags. Suppose we have an AR-1 process. The ρ for the first lag should be significant but, controlling for that, the correlation of today’s value with the value two time periods ago should not be. If the correlations in the PACF are significant for two time periods, but only two, we probably have an AR-2 process.

What will the PACF look like when we have a moving average process? It will look like the ACF of an AR process: the lags will continue to be significantly (partially) correlated with each other for a while.

[Figure: partial autocorrelation plot, lags 1 to 6, vertical axis from -0.50 to 1.00, with 95% confidence bands (se = 1/sqrt(n))]

V. Integration and ARIMA Models

A. What is integration?

An integrated time series has a time trend, where the dependent variable is a function of some random innovation and the product of time and a coefficient:

$y_t = \beta t + e_t$

Perhaps your height (until about age 16) is an integrated time series. The interesting variation to explain in any time unit is then not your height but how much you grew. An I-1 model therefore explains variation in the first difference of the dependent variable, $y_t - y_{t-1}$.

B. The ARIMA model

An ARIMA model is one that can estimate any and all of these processes together. It is written by specifying the order of each process in the sequence AR, I, MA. For instance, an ARIMA(2,0,1) process is an AR-2 and an MA-1 process with no integration.
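
Written out, the ARIMA(2,0,1) example might look like the equation below. This is a sketch in the notation of section IV, where v is the innovation and θ the MA coefficient. The constant c and the AR coefficients ρ1 and ρ2 are symbols assumed here for illustration, and software may parameterize the constant differently.

```latex
% ARIMA(2,0,1): AR-2 plus MA-1 on the level of y, with no differencing
y_t = c + \rho_1 y_{t-1} + \rho_2 y_{t-2} + \theta v_{t-1} + v_t
```

Setting ρ2 = 0 and θ = 0 reduces this to an AR-1 process, while setting ρ1 = ρ2 = 0 leaves an MA-1 process around a constant.
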
An ARIMA(2,1,1) process explains variation in the first differences of the dependent variable ($y_t - y_{t-1}$, which comes from I-1) using the first differences from one and two lags previous ($y_{t-1} - y_{t-2}$ and $y_{t-2} - y_{t-3}$, coming from AR-2), the previous period’s innovation ($v_{t-1}$, coming from MA-1), and a random innovation $v_t$. Here are examples of each:

    arima air, arima(2,0,1)

    (setting optimization to BHHH)
    Iteration 0:   log likelihood = -860.83601
    Iteration 22:  log likelihood = -699.12461

    ARIMA regression

    Sample:  1960m2 to 1972m1                       Number of obs      =       144
                                                    Wald chi2(3)       =   1087.32
    Log likelihood = -699.1246                      Prob > chi2        =    0.0000

    ------------------------------------------------------------------------------
                 |                 OPG
             air |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
    air          |
           _cons |   281.7383   62.14023     4.53   0.000     159.9456    403.5309
    -------------+----------------------------------------------------------------
    ARMA         |
              ar |
              L1 |   .4990531   .1305942     3.82   0.000     .2430931     .755013
              L2 |   .4313754   .1244967     3.46   0.001     .1873665    .6753844
              ma |
              L1 |   .8564644   .0812863    10.54   0.000     .6971461    1.015783
    -------------+----------------------------------------------------------------
          /sigma |    30.6991   1.748526    17.56   0.000     27.27205    34.12615
    ------------------------------------------------------------------------------

    arima air, arima(2,1,1)

    (setting optimization to BHHH)
    Iteration 0:   log likelihood = -714.88177
    Iteration 15:  log likelihood = -675.8479

    ARIMA regression

    Sample:  1960m3 to 1972m1                       Number of obs      =       143
                                                    Wald chi2(3)       =    366.94
    Log likelihood = -675.8479                      Prob > chi2        =    0.0000

    ------------------------------------------------------------------------------
                 |                 OPG
           D.air |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
    air          |
           _cons |   2.669459   .1533041    17.41   0.000     2.368988    2.969929
    -------------+----------------------------------------------------------------
    ARMA         |
              ar |
              L1 |   1.104262   .0667915    16.53   0.000     .9733534    1.235171
              L2 |  -.5103714   .0801074    -6.37   0.000     -.667379   -.3533638
              ma |
              L1 |  -.9999992   654.2094    -0.00   0.999    -1283.227    1281.227
    -------------+----------------------------------------------------------------
          /sigma |   26.88058    8792.34     0.00   0.998    -17205.79    17259.55
    ------------------------------------------------------------------------------

Why does the estimated constant get smaller in the model that includes integration? Why is the number of observations smaller?

VI. Granger Causality and Vector Autoregression

A. How can I Granger-cause something?

If we control for past values of Y and X still has an effect on present Y, then X “Granger-causes” Y. This Granger-caused a UCSD economist to win the Nobel Prize, and to get a whole notion of causality named after him.

So how do we find out if X Granger-causes Y? To test this, perform a “vector autoregression”: regress $Y_t$ on all of its lags, and on all of the lags of the X variables that might possibly, in a kitchen-sink world, be related to it. This cleans up the errors (though at the cost of multicollinearity, few degrees of freedom, and an utter lack of theory). A Granger test compares the standard error of this regression (S.E.R.) with the S.E.R. for a regression of Y on lagged Ys alone. Another way to see if X Granger-causes Y is to do a joint F-test on the lagged Xs.

VII. Unit Roots

A. What is a stationary process?

In a stationary process, the mean and variance of Y do not depend on time. We like to assume that this is true. If there is a time trend in our process (but the variable is stationary around that trend), that’s not a big problem. We can simply “detrend” our data, and then model it.
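
Detrending can be sketched as regressing the series on time and keeping the residuals. The example below is a minimal illustration on made-up data: the slope `beta`, the noise level, the seed, and the helper name `detrend` are all assumptions for the example, and the 144 periods simply mirror the length of the monthly series used earlier.

```python
import random


def detrend(y):
    """Fit y_t = a + b*t by OLS and return (a, b, residuals)."""
    n = len(y)
    t = list(range(1, n + 1))
    t_bar = sum(t) / n
    y_bar = sum(y) / n
    b = (
        sum((ti - t_bar) * (yi - y_bar) for ti, yi in zip(t, y))
        / sum((ti - t_bar) ** 2 for ti in t)
    )
    a = y_bar - b * t_bar
    resid = [yi - (a + b * ti) for ti, yi in zip(t, y)]
    return a, b, resid


rng = random.Random(7)
beta = 2.5  # hypothetical trend slope
# A series that is stationary around a linear time trend: y_t = beta*t + e_t
y = [beta * t + rng.gauss(0.0, 10.0) for t in range(1, 145)]

a_hat, b_hat, resid = detrend(y)
print(f"estimated slope: {b_hat:.3f}")
print(f"mean of detrended series: {sum(resid) / len(resid):.6f}")
```

The residuals are the detrended series, which can then be modeled with the AR and MA tools from the earlier sections.
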
But the problem comes when we have a “random walk” or a “random walk with drift.” The mean and/or variance of Y moves around over time, but not in any systematic fashion (more like a drunk by a lamppost). If we have a random walk, then in a model regressing Y on its lag we should get ρ = 1, which is called a “unit root.” The difficulty is that the variance of the lagged Y (the denominator of the estimate of ρ) grows without bound, making it impossible to perform a traditional t-test to see whether ρ = 1. So to see if we indeed have a unit root, we perform a slightly different test called the Dickey-Fuller test.
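
The handout does not spell out the mechanics of the test, so the sketch below follows the common textbook version rather than anything stated here. It regresses the change in Y on a constant and the lagged level, takes the t-statistic on the lagged level, and compares it with a Dickey-Fuller critical value instead of the usual t table. The function names, sample size, and seed are assumptions for the example, and -2.86 is the approximate large-sample 5% critical value for the version with a constant and no trend.

```python
import random


def dickey_fuller_stat(y):
    """Return (gamma, t) from the regression dy_t = alpha + gamma * y_{t-1} + u_t.

    gamma equals rho - 1, so a unit root corresponds to gamma = 0.
    No extra lagged differences are included (this is not the augmented test).
    """
    x = y[:-1]  # lagged level y_{t-1}
    dy = [y[t] - y[t - 1] for t in range(1, len(y))]
    n = len(x)
    x_bar = sum(x) / n
    dy_bar = sum(dy) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    gamma = sum((xi - x_bar) * (di - dy_bar) for xi, di in zip(x, dy)) / sxx
    alpha = dy_bar - gamma * x_bar
    ssr = sum((di - alpha - gamma * xi) ** 2 for xi, di in zip(x, dy))
    se = (ssr / (n - 2) / sxx) ** 0.5
    return gamma, gamma / se


def simulate_ar1(rho, n, seed):
    """Simulate y_t = rho * y_{t-1} + v_t starting from zero."""
    rng = random.Random(seed)
    y = [0.0]
    for _ in range(n - 1):
        y.append(rho * y[-1] + rng.gauss(0.0, 1.0))
    return y


CRIT_5PCT = -2.86  # approximate large-sample 5% critical value (constant, no trend)

for label, rho in [("random walk (rho = 1)", 1.0), ("stationary AR-1 (rho = 0.5)", 0.5)]:
    gamma, t_stat = dickey_fuller_stat(simulate_ar1(rho, n=2000, seed=1))
    verdict = "reject a unit root" if t_stat < CRIT_5PCT else "cannot reject a unit root"
    print(f"{label}: rho_hat = {1 + gamma:.3f}, DF t = {t_stat:.2f} -> {verdict}")
```

The null hypothesis is that the series has a unit root, so only a t-statistic more negative than the critical value counts as evidence of stationarity.
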