
Beer Game Computers Play:

Can Artificial Agents Manage Supply Chain?

1

Steven O. Kimbrough, D.-J. Wu, Fang Zhong

Department of Operations and Information Management

The Wharton School

University of Pennsylvania and

Department of Management

Bennett S. LeBow College of Business

Drexel University

Philadelphia, PA 19104

U.S.A.

May, 2000

1 The corresponding author is D.J. Wu. His current address is: 101 North 33rd Street, Academic Building, Philadelphia, PA 19104. Email: wudj@drexel.edu.

Abstract

In this study, we build an electronic supply chain that is managed by artificial agents. We investigate whether artificial agents do better than human beings when playing the MIT “Beer Game”, either by mitigating the bullwhip effect or by discovering good and effective business strategies. In particular, we study the following questions: Can agents learn reasonably good policies in the face of deterministic demand with fixed lead-time? Can agents track demand reasonably well in the face of stochastic demand with stochastic lead-time? Can agents learn and adapt in various contexts to play the game? Can agents cooperate across the supply chain? What kinds of mechanisms induce coordination, cooperation and information sharing among agents in the electronic supply chain when facing automated electronic markets?

KEY WORDS AND PHRASES: Artificial Agents, Beer Game, Supply Chain Management, Business Strategies.

1. Introduction

We propose a framework that treats an automated enterprise as an electronic supply chain consisting of various artificial agents. In particular, we view such a virtual enterprise as a multi-agent system (MAS). A classical example of supply chain management is the MIT Beer Game, which has attracted great attention from both supply chain management practitioners and academic researchers.

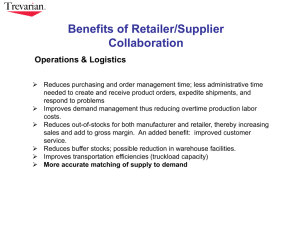

There are several types of agents in this chain, for example, the retailer, distributor, wholesaler, and manufacturer. Each self-interested agent tries to achieve its own goal, such as minimizing its inventory cost, by placing orders with its supplier. Each agent makes its own prediction of its customer's future demand based on its own observations. Because agents lack incentives to share information and are boundedly rational, the observed or experimental performance of real agents (human beings) managing such a supply chain is usually far from optimal from a system-wide point of view (Sterman, 1989). It would be interesting to see whether we can gain insights for such a community by using artificial agents to play the role of human beings.

This paper intends to provide a first step in this direction. We differ from current research on supply chains in the OM/OR area in that the approach taken here is an agent-based, information-processing model in an automated marketplace. In the OM/OR area, by contrast, the focus is usually on optimal solutions that are based on some known demand assumptions, and it is generally very difficult to derive and to generalize such optimal policies when the environment changes.

2. Literature review

In the management science literature, Lee and Whang (1997) identified four sources of the bullwhip effect and offered counteractions for firms. Chen (1999) shows that the bullwhip effect can be eliminated under a base-stock installation policy. These two works both build on the seminal work of Clark and Scarf (1960). A well-known phenomenon when human agents play this game is demand information distortion, or the “bullwhip effect”, across the supply chain from the retailer to the factory agent (Lee, Padmanabhan, and Whang, 1997; Lee and Whang, 1999). Under the assumption that the division managers share a common goal of optimizing the overall performance of the supply chain (acting as a team), Chen (1999) proves that the “Pass Order,” or “One for one” (“1-1”) strategy, which orders whatever is ordered by your customer, is optimal.

In the information technology literature, the idea of marrying multi-agent systems and supply chain management has been proposed by several researchers (Nissen, 2000; Yung and Yang, 1999). Our approach (Kimbrough, Wu and Zhong, 2000) differs from others in that we focus on agents learning in an electronic supply chain. We developed DragonChain, a general platform for multi-agent supply chain management. We implement the “MIT Beer Game” (Sterman, 1989) to test the performance of DragonChain.

3. Methodology and implementation discussion

4. Results

Our main idea is to replace human players with artificial agents by letting DragonChain play the Beer Game, and to see whether artificial agents can learn to mitigate the bullwhip effect by discovering good and efficient order policies.

Experiment 1.

In the first round of our experiment using DragonChain, we use deterministic demand as set up in the classroom Beer Game: the customer demands 4 cases of beer in each of the first 4 weeks, then 8 cases from week 5 onward, with demand held constant thereafter. We fix the policy of the three other players (the Wholesaler, Distributor and Factory Manager) to the “1-1” rule, i.e., if current demand is x then order x from my supplier, while letting the Retailer agent adapt and learn. The goal of this experiment is to see whether the Retailer agent can match the other players’ strategy and learn the “1-1” policy.

The Retailer places orders according to rules such as x-1, x-2, or x+1, where x is the customer demand for the week. Each rule is expressed as a binary string of 6 digits. The leftmost digit gives the sign of the rule: 0 represents “-” and 1 represents “+”. The remaining five digits give, in binary, the amount to add to or subtract from the demand. For example, the rule 101001 translates to “x+9”: the leading 1 is the sign “+”, and the remaining digits 01001 equal 9 in decimal.
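The rule encoding can be illustrated with a short Python sketch. The function names `decode_rule` and `order_quantity` are hypothetical and do not come from DragonChain; the clamp to zero follows the paper's statement that an order is set to 0 whenever the rule would make it negative.

```python
def decode_rule(bits: str) -> int:
    """Return the signed offset encoded by a 6-bit rule string.

    The leftmost bit is the sign (1 = "+", 0 = "-"); the remaining
    five bits are the magnitude in binary (0 to 31).
    """
    if len(bits) != 6 or set(bits) - {"0", "1"}:
        raise ValueError("rule must be a 6-character binary string")
    sign = 1 if bits[0] == "1" else -1
    return sign * int(bits[1:], 2)


def order_quantity(bits: str, demand: int) -> int:
    """Apply the rule to the observed demand x; negative orders become 0."""
    return max(0, demand + decode_rule(bits))


if __name__ == "__main__":
    print(decode_rule("101001"))        # the "x+9" example from the text
    print(order_quantity("101001", 4))  # demand of 4 cases under "x+9"
```

Note that 000000 and 100000 both decode to an offset of zero, which is why 64 bit strings cover only 63 distinct rules.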

So the search space runs from x-31, x-30, …, x-1, x, x+1, … to x+31, for 63 distinct rules in total. Whenever x – j < 0 (j = 1…31), we set the order for the current week to 0. A genetic algorithm (GA) is used to help the Retailer agent find better rules that minimize its accumulated cost over 35 weeks. In each generation, the agent places orders according to every rule in the generation for all 35 weeks and obtains the corresponding fitness of each rule. We follow the Fitness Proportion Principle, which means that the best rule in a generation has the largest chance of being chosen as a parent of the next generation. Under this mechanism, the agent learns which rules are better generation by generation.

The execution cycle of the GA used here is as follows:

Step 1. The GA randomly generates 20 rules in the range 000000 to 111111.

Step 2. Each rule is assigned an absolute fitness according to the evaluation function.

Step 3. The rules in the current generation are sorted according to their fitness.

Step 4. A relative fitness is calculated for each rule by the following equation:

$$\text{Relative Fitness} = \frac{\text{Absolute Fitness} - \text{Lowest absolute fitness in the generation}}{\text{Highest absolute fitness} - \text{Lowest absolute fitness}}$$

Parent rules are then picked based on the Fitness Proportion Principle to create offspring, and the current generation is replaced with the newly generated offspring.

Step 5. “Mutation” is conducted on the new generation according to the mutation rate, and the cycle returns to Step 2.
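Step 4 can be sketched in Python as follows. This is a minimal illustration rather than the DragonChain implementation: the names `relative_fitness` and `select_parents` are hypothetical, and the sketch assumes that absolute fitness is defined so that higher is better (for instance, the negative of the accumulated cost), which the paper does not spell out.

```python
import random


def relative_fitness(absolute):
    """Rescale absolute fitness values to [0, 1] within one generation."""
    lo, hi = min(absolute), max(absolute)
    if hi == lo:
        # All rules are equally fit; give them equal weight (assumption).
        return [1.0] * len(absolute)
    return [(f - lo) / (hi - lo) for f in absolute]


def select_parents(rules, absolute, k, rng=random):
    """Draw k parents with probability proportional to relative fitness."""
    weights = relative_fitness(absolute)
    return rng.choices(rules, weights=weights, k=k)


if __name__ == "__main__":
    rules = ["100000", "100001", "011111"]
    # Hypothetical fitness values: negative accumulated cost of each rule.
    fitness = [-120, -150, -900]
    print(relative_fitness(fitness))
    print(select_parents(rules, fitness, k=4, rng=random.Random(0)))
```

One consequence of this rescaling is that the worst rule in a generation receives weight zero and is never chosen as a parent.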

There are two types of GA operators used here, crossover and mutation:

Crossover

Parent rules: 110010, 001001, 101011, 000000, 111110, 011100, 110000, 000101

Crossover points: [3], [5], [2], [6]

Offspring: 110011, 101010, 101010, 111111, 001100, 010000, 011101, 000100

Mutation

| Rule | Mutation pt. | Result |
|---|---|---|
| 110100 | [2] | 100100 |
| 000101 | [5] | 000111 |
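The two operators can be sketched in Python as below. The function names are hypothetical. The mutation convention (flip the bit at a 1-based position) reproduces the two mutation examples given in the paper; the crossover convention (swap the tails after the first `point` bits) is an assumption about how the crossover point is counted.

```python
def crossover(parent_a: str, parent_b: str, point: int):
    """Single-point crossover: keep the first `point` bits, swap the rest."""
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b


def mutate(rule: str, point: int) -> str:
    """Flip the bit at the 1-based position `point`."""
    i = point - 1
    flipped = "1" if rule[i] == "0" else "0"
    return rule[:i] + flipped + rule[i + 1:]


if __name__ == "__main__":
    print(mutate("110100", 2))               # 100100, as in the paper
    print(mutate("000101", 5))               # 000111, as in the paper
    print(crossover("110010", "101011", 5))  # ('110011', '101010')
```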

In the evaluation function, the Retailer agent places its orders according to the selected rule for 35 weeks. At the end of each week, its cost for that week is calculated as follows:

InventoryPosition = CurrentInventory + NewShipment – CurrentDemand;

If InventoryPosition >= 0

    CurrentCost = InventoryPosition * 1

Else

    CurrentCost = |InventoryPosition| * 2

where CurrentInventory is the inventory at the beginning of each week, NewShipment is the amount of beer just shipped in, and CurrentDemand is the demand from customers for the current week.
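The weekly cost calculation can be sketched in Python as follows. The names `weekly_cost`, `HOLDING_COST`, and `BACKLOG_COST` are hypothetical, and the sketch assumes that a shortage is charged on its magnitude, so that a negative inventory position produces a positive cost of 2 per unit.

```python
HOLDING_COST = 1   # cost per unit held in stock at the end of the week
BACKLOG_COST = 2   # cost per unit of unmet demand (assumed charged on magnitude)


def weekly_cost(current_inventory: int, new_shipment: int, current_demand: int):
    """Return (inventory_position, cost) for one week."""
    position = current_inventory + new_shipment - current_demand
    if position >= 0:
        cost = position * HOLDING_COST
    else:
        cost = -position * BACKLOG_COST
    return position, cost


if __name__ == "__main__":
    print(weekly_cost(12, 4, 8))  # stock left over: (8, 8)
    print(weekly_cost(2, 0, 5))   # shortage of 3 units: (-3, 6)
```

A rule's accumulated cost is then the sum of these weekly costs over the 35 weeks of the game.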

Our initial results show that DragonChain can learn the “1-1” policy consistently. With a population size of 20, the Retailer agent finds the “1-1” strategy very quickly and converges at that point.

We then allow all four agents to make their own decisions simultaneously. In this case, the binary strings are longer than those of the previous experiment, because they are composed of the rules for all the agents. The length of the binary strings is now 6 * 4 = 24. For example, in the string

110001 001001 101011 000000

110001 is the rule for the Retailer;

001001 is the rule for the Wholesaler;

101011 is the rule for the Distributor;

000000 is the rule for the Factory.
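Splitting the 24-bit team string into per-agent rules can be sketched in Python as follows. The name `split_team_rule` is hypothetical; the sign-and-magnitude decoding is the one described for the single-agent experiment.

```python
ROLES = ("Retailer", "Wholesaler", "Distributor", "Factory")
BITS_PER_RULE = 6


def split_team_rule(chromosome: str) -> dict:
    """Map each role to the signed offset encoded in its 6-bit slice."""
    bits = chromosome.replace(" ", "")
    if len(bits) != BITS_PER_RULE * len(ROLES):
        raise ValueError("expected a 24-bit team rule")
    offsets = {}
    for i, role in enumerate(ROLES):
        chunk = bits[i * BITS_PER_RULE:(i + 1) * BITS_PER_RULE]
        sign = 1 if chunk[0] == "1" else -1
        offsets[role] = sign * int(chunk[1:], 2)
    return offsets


if __name__ == "__main__":
    # The example string from the text.
    print(split_team_rule("110001 001001 101011 000000"))
```

For the example string, the Retailer orders x+17, the Wholesaler x-9, the Distributor x+11, and the Factory x.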

The same crossover and mutation operators as in the previous experiment are used, and each time only one point of the string is selected for crossover or mutation.

In the evaluation function, each agent places orders according to its own rule for the 35 weeks, and the current cost is calculated for each of them separately. For the Wholesaler, Distributor and Factory, however, the current demand is the order placed by the agent one level below, namely the Retailer, Wholesaler and Distributor respectively. The four agents act as a team, and their goal is to minimize their total cost over 35 weeks. The genetic algorithm is again the key method by which the agents learn and find their optimal strategies. The result is encouraging: again, each agent in DragonChain finds and converges to the “1-1” rule separately, as shown in Figure 1. This is much better than the performance of human agents (MBA students from a premier business school), as shown in Figure 2.

[Plot: Order vs. Week, weeks 1 to 35]

Figure 1: Artificial agents in DragonChain are able to find the optimal “pass order” policy when playing the MIT Beer Game.

[Plot: Accumulated Cost Comparison of Human Beings and Agent, weeks 1 to 27; labels: Retailer, Wholesaler, Distributor, Factory; MBA Group1, MBA Group2, MBA Group3, Agent]

Figure 2: Artificial agents in DragonChain perform much better than MBA students in the MIT Beer Game.

Although it is well known that the “1-1” policy is optimal for deterministic demand with fixed lead-time, no previous studies have tested whether 1-1 is a Nash equilibrium. We conduct further experiments to test this. The result is that each agent finds 1-1 and the outcome is stable, so it constitutes a Nash equilibrium. We also conduct experiments to test the robustness of the results by allowing agents to search a much larger space, and we introduce more agents to the supply chain. In these experiments, all the agents discover the “1-1” policy, but it takes longer to find as the number of agents increases. The following table summarizes the findings.

Table 1: Convergence generation.

| | Four Agents | Five Agents | Six Agents |
|---|---|---|---|
| Convergence generation | 4 | 11 | 21 |

Experiment 2.

In the second round of experiments, we test the case of stochastic demand, where demand is randomly generated from a known distribution, e.g., uniformly distributed on [0, 15]. We have 16 * 31 = 496 possible rules in total. The goal of this experiment is to find out whether artificial agents can track the trend of demand and whether they can discover good dynamic order policies. Again we use “1-1” as a heuristic benchmark. Our initial experiments show that DragonChain can discover dynamic order strategies (so far, the winning rule seems to be “x + 1” for each agent) that outperform “1-1”, as shown in Figure 3. Furthermore, as shown in Figure 4, an interesting and surprising discovery of our experiments is that, when artificial agents play the Beer Game, there is no bullwhip effect in any of the test cases.

[Plot: Accumulated Cost vs. Week, weeks 1 to 36; series: Agent Cost, 1-1 Cost]

Figure 3: When facing stochastic demand and penalty costs for all players as in the MIT Beer Game, artificial agents seem to discover a dynamic order policy that outperforms “1-1”.

[Plot: Order vs. Week; series: Retailer, Wholesaler, Distributor, Factory]

Figure 4: The bullwhip effect is eliminated when using artificial agents to replace human beings in playing the MIT Beer Game.

By calculating the variances across the supply chain, we notice that the Bullwhip effect has been eliminated under all test cases, even under the stochastic customer demand.

Table 2: Variance comparison for experiment 2.

| | Retailer | Wholesaler | Distributor | Manufacturer |
|---|---|---|---|---|
| Variance | 26.0790 | 26.1345 | 25.2571 | 26.8671 |
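The variance comparison can be sketched in Python as follows. The function names are hypothetical, and the use of the population variance is an assumption, since the paper does not say which estimator it uses. The ratios are computed from the variances reported in Table 2; a ratio well above 1 at upstream levels would indicate a bullwhip effect.

```python
from statistics import pvariance


def order_variances(orders_by_agent: dict) -> dict:
    """Variance of each agent's weekly order series (population variance)."""
    return {agent: pvariance(orders) for agent, orders in orders_by_agent.items()}


def amplification(variances: dict, chain: list) -> dict:
    """Ratio of each agent's order variance to that of the first agent in the chain."""
    base = variances[chain[0]]
    return {agent: variances[agent] / base for agent in chain}


if __name__ == "__main__":
    # Variances reported in Table 2.
    table2 = {"Retailer": 26.0790, "Wholesaler": 26.1345,
              "Distributor": 25.2571, "Manufacturer": 26.8671}
    chain = ["Retailer", "Wholesaler", "Distributor", "Manufacturer"]
    for agent, ratio in amplification(table2, chain).items():
        print(f"{agent}: {ratio:.3f}")
```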

As in experiment 1, we conduct a few more experiments to test the results of experiment 2. First, the Nash property remains under stochastic demand. Second, we increase the game period from 35 weeks to 100 weeks to see whether agents can beat “1-1” consistently. Again, the customer demand is uniformly distributed on [0, 15]. Agents find new strategies (x + 1, x, x + 1, x) that outperform the “1-1” policy. The agents are smart enough to adopt this new strategy to take advantage of the end effect of the game. Third, we test the statistical validity by rerunning the experiment with different random seed numbers. The results are fairly robust.

Experiment 3.

In the third round of experiments, we change the lead-time from a fixed 2 weeks to a value uniformly distributed from 0 to 4. So now we face both stochastic demand and stochastic lead-time. The length of the rule strings is again 6 * 4 = 24. The evaluation function changes in this experiment, because the week in which ordered beer is shipped in depends on the lead-time drawn for each week. For example, suppose that in the 12th week the Wholesaler agent orders 20 units of beer from the Distributor agent and the lead-time for that week is 3 weeks. Then the 20 units of beer are shipped in week 16, according to the equation:

ShippingInWeek = WeekOfOrder + 1 + Lead-time

We add “1” to the right-hand side of the equation because the order is passed to the upper-level agent in the next week.
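The shipping equation can be sketched in Python as follows. The names are hypothetical, and drawing the lead-time as an integer number of weeks is an assumption; the paper only states that it is uniformly distributed from 0 to 4.

```python
import random

ORDER_PASSING_DELAY = 1  # the order reaches the upper-level agent one week later


def shipping_in_week(week_of_order: int, lead_time: int) -> int:
    """Week in which an order placed in `week_of_order` is shipped in."""
    return week_of_order + ORDER_PASSING_DELAY + lead_time


def sample_lead_time(rng: random.Random) -> int:
    """Draw a lead-time of 0 to 4 weeks (integer values assumed)."""
    return rng.randint(0, 4)


if __name__ == "__main__":
    print(shipping_in_week(12, 3))  # the example from the text: week 16
    rng = random.Random(42)
    print(shipping_in_week(12, sample_lead_time(rng)))
```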

Following the steps described in the first experiment, the agents find better strategies within 30 generations. The best rules found so far are:

Retailer: x + 1

Wholesaler: x + 1

Distributor: x + 2

Factory: x + 1

The total cost over 35 weeks is 2555, which is much lower than the 7463 incurred by the “1-1” policy.

In the appendix, we show the evolution of effective adaptive supply chain management policies when facing stochastic customer demand and stochastic lead-time. We further test the stability of the strategies discovered by the agents and find that they are stable, and thus constitute a Nash equilibrium.

[Plot: Order vs. Week, weeks 1 to 35; series: Customer Demand, Retailer]

Figure 6: Artificial Retailer agent is able to track customer demand reasonably well when facing stochastic customer demand.

[Plot: Orders vs. Weeks, weeks 1 to 35; series: Retailer Order, Wholesaler Order, Distributor Order, Factory Order]

Figure 7: Artificial agents mitigate the bullwhip effect when facing stochastic demand and stochastic lead time.

[Plot: Accumulated Cost vs. Week, weeks 1 to 35; series: 1-1 cost, Agent cost]

Figure 9: Artificial agents seem to be able to discover dynamic ordering policies that outperform the “1-1” policy when facing stochastic demand and stochastic lead time.

Table 3: Variance comparison for experiment 3: No Bullwhip effect.

| | Retailer | Wholesaler | Distributor | Manufacturer |
|---|---|---|---|---|
| Variance | 26.0790 | 26.1345 | 25.2571 | 26.8671 |

Table 4: The evolution of effective adaptive supply chain management policies when facing stochastic customer demand and stochastic lead-time.

| Generation | Retailer | Wholesaler | Distributor | Manufacturer | Total Cost |
|---|---|---|---|---|---|
| 0 | x – 0 | x – 1 | x + 4 | x + 2 | 7380 |
| 1 | x + 3 | x – 2 | x + 2 | x + 5 | 7856 |
| 2 | x – 0 | x + 5 | x + 6 | x + 3 | 6987 |
| 3 | x – 1 | x + 5 | x + 2 | x + 3 | 6137 |
| 4 | x + 0 | x + 5 | x – 0 | x – 2 | 6129 |
| 5 | x + 3 | x + 1 | x + 2 | x + 3 | 3886 |
| 6 | x – 0 | x + 1 | x + 2 | x + 0 | 3071 |
| 7 | x + 2 | x + 1 | x + 2 | x + 1 | 2694 |
| 8 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 9 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 10 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 11 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 12 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 13 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 14 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 15 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 16 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 17 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 18 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 19 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |
| 20 | x + 1 | x + 1 | x + 2 | x + 1 | 2555 |

3. The Columbia Beer Game

The Columbia Beer Game is a modified version of the MIT Beer Game (Chen, 1999). The following table compares the major differences between the two.

| | MIT Beer Game | Columbia Beer Game |
|---|---|---|
| Customer Demand | Deterministic | Stochastic |
| Information Delay | 0 | Fixed |
| Physical Delay | Fixed | Fixed |
| Penalty Cost | All agents have penalty costs | Only retailer incurs penalty cost |
| Holding Cost | Players all have the same holding costs | Players have decreasing costs upstream the supply chain |
| Information Acknowledgement | All players have no knowledge about the distribution of customer demand | All players know the distribution of customer demand |

Now our experiment follows the settings of the Columbia Beer Game, except that our agents have no knowledge about the distribution of customer demand. We specifically set the information leadtime to (2, 2, 2, 0), physical leadtime to (2, 2, 2, 3) and customer demand is normally distributed with mean of 50 and standard deviation of 10. Other initial conditions are set as in Chen (1999).

Since there is an additional information delay in the Columbia Beer Game, we incorporate it into our rules as well. Two more bits are added to the rule bit-string to represent the information delay. For example, if the Retailer has the rule 10001101, it means “if demand is x in week t-1, then order x+3”, where t is the current week: the leading 1 is the sign “+”, the next five bits 00011 equal 3, and the last two bits 01 give the delay “t – 1”.

Now, the master rule for the whole team has a length of 8 * 4 = 32.
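The extended 8-bit rule can be sketched in Python as follows. The function names are hypothetical, and clamping negative orders to zero is carried over from the first experiment as an assumption; the paper does not restate it for the Columbia Beer Game.

```python
def decode_delay_rule(bits: str):
    """Return (offset, delay) for an 8-bit rule.

    Bit 1 is the sign, bits 2 to 6 the magnitude, and bits 7 to 8 the
    information delay in weeks (0 to 3).
    """
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError("rule must be an 8-character binary string")
    sign = 1 if bits[0] == "1" else -1
    offset = sign * int(bits[1:6], 2)
    delay = int(bits[6:], 2)
    return offset, delay


def order_quantity(bits: str, demand_by_week: list, t: int) -> int:
    """Order placed in week t, based on the demand observed in week t - delay."""
    offset, delay = decode_delay_rule(bits)
    if t - delay < 0:
        raise IndexError("no demand observation that far back")
    return max(0, demand_by_week[t - delay] + offset)


if __name__ == "__main__":
    print(decode_delay_rule("10001101"))  # (3, 1): order x+3 on week t-1 demand
    # Hypothetical demand series indexed by week.
    print(order_quantity("10001101", [50, 47, 55], t=2))
```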

Under the same learning mechanism as in the previous experiments, the agents find the optimal policy from the literature (Chen, 1999): order whatever amount is ordered, with a time shift, i.e., $Q_1 = D(t-1)$, $Q_i = Q_{i-1}(t - l)$.

4. Summary and further research

This study makes the following contributions: (1) first and foremost, we test the concept of an intelligent supply chain that is managed by artificial agents. Agents are capable of playing the Beer Game by tracking demand; eliminating the bullwhip effect; discovering the optimal policies where they exist; and finding good policies under complex scenarios where analytical solutions are not available. (2) Such an electronic supply chain is adaptable to an ever-changing business environment. The findings of this research could have high impact on the development of next generation ERP or multi-agent soft enterprise computing as depicted in Figure 7.

Ongoing research includes investigation on the value of information sharing in supply chain; the emergence of trust in supply chain; coordination, cooperation, bargaining, and negotiation in multi-agent systems; and the study of alternative agent learning mechanisms such as classifier systems.

[Diagram: four agent communities, namely Supply Chain Community (DragonChain), Executive Community (StrategyFinder), Production Community (LivingFactory), and E-Marketplace Community (eBAC); agents shown: Factory Agent, Distributor Agent, Wholesaler Agent, Retailer Agent, Pricing Agent, Investment Agent, Contracting Agent, Bidding Agent, Auction Agent]

Figure 7: A framework for multi-agent intelligent enterprise modeling.

5. References

1. Chen, F., “Decentralized supply chains subject to information delays,” Management Science, 45, 8 (August 1999), 1076-1090.

2. Kleindorfer, P., Wu, D.J., and Fernando, C. “Strategic gaming and the evolving electric power market”, forthcoming in European Journal of Operational Research, 2000.

3. Lee, H., Padmanabhan, P., and Whang, S. “Information distortion in a supply chain: The bullwhip effect”, Management Science, 43, 546-558, 1997.

4. Lee, H., and Whang, S. “Decentralized multi-echelon supply chains: Incentives and information”, Management Science, 45, 633-640, 1999.

5. Strader, T., Lin, F., and Shaw, M. “Simulation of order fulfillment in divergent assembly supply chains”, Journal of Artificial Societies and Social Simulations, 1, 2, 1998.

6. Kimbrough, S., “Introduction to a Selectionist Theory of Metaplanning,” Working Paper, The Wharton School, University of Pennsylvania, http://grace.wharton.upenn.edu/~sok.

7. Lin, F., Tan, G., and Shaw, M. “Multi-agent enterprise modeling”, forthcoming in Journal of Organizational Computing and Electronic Commerce.

8. Maes, P., Guttman, R., and Moukas, A. “Agents that buy and sell: Transforming commerce as we know it”, Communications of the ACM, March, 81-87, 1999.

9. Marschak, J., and Radner, R. Economic Theory of Teams. Yale University Press, New Haven, CT, 1972.

10. Nissen, M. “Supply chain process and agent design for e-commerce”, in: R.H. Sprague, Jr., (Ed.), Proceedings of The 33rd Annual Hawaii International Conference on System Sciences, IEEE Computer Society Press, Los Alamitos, California, 2000.

11. Robinson, W., and Elofson, G. “Electronic broker impacts on the value of postponement”, in: R.H. Sprague, Jr., (Ed.), Proceedings of The 33rd Annual Hawaii International Conference on System Sciences, IEEE Computer Society Press, Los Alamitos, California, 2000.

12. Sikora, R., and Shaw, M. “A multi-agent framework for the coordination and integration of information systems”, Management Science, 40, 11 (November 1998), S65-S78.

13. Sterman, J. “Modeling managerial behavior: Misperceptions of feedback in a dynamic decision making experiment”, Management Science, 35, 321-339, 1989.

14. Weiss (ed.), Multi-agent Systems: A Modern Approach to Distributed Artificial Intelligence, Cambridge, MA: MIT Press, 1999.

15. Weinhardt, C., and Gomber, P. “Agent-mediated off-exchange trading”, in: R.H. Sprague, Jr., (Ed.), Proceedings of The 33rd Annual Hawaii International Conference on System Sciences, IEEE Computer Society Press, Los Alamitos, California, 1999.

16. Wooldridge, M. “Intelligent Agents”, in G. Weiss (ed.), Multi-agent Systems: A Modern Approach to Distributed Artificial Intelligence, Cambridge, MA: MIT Press, 27-77, 1999.

17. Wu, D.-J. “Discovering near-optimal pricing strategies for the deregulated electronic power marketplace using genetic algorithms”, Decision Support Systems, 27, 1/2, 25-45, 1999b.

18. Wu, D.-J. “Artificial agents for discovering business strategies for network industries”, forthcoming in International Journal of Electronic Commerce, 5, 1 (Fall 2000b).

19. Wu, D.J., and Sun, Y. “Multi-agent bidding, auction and contracting support systems”, Working Paper, LeBow College of Business, Drexel University, April, 2000.

20. Yan, Y., Yen, J., and Bui, T. “A multi-agent based negotiation support system for distributed transmission cost allocation”, in: R.H. Sprague, Jr., (Ed.), Proceedings of The 33rd Annual Hawaii International Conference on System Sciences, IEEE Computer Society Press, Los Alamitos, California, 2000.

21. Yung, S., and Yang, C. “A new approach to solve supply chain management problem by integrating multi-agent technology and constraint network”, in: R.H. Sprague, Jr., (Ed.), Proceedings of The 32nd Annual Hawaii International Conference on System Sciences, IEEE Computer Society Press, Los Alamitos, California, 1999.