Interactive marker-less tracking of human limbs


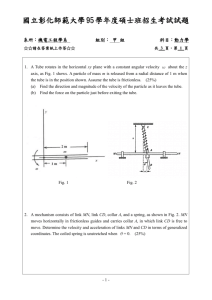

IEEE TRANSACTIONS ON VISUALIZATION AND COMPUTER GRAPHICS, MANUSCRIPT ID

Interactive marker-less tracking of human limbs

Srinivasa G. Rao, and Larry F. Hodges, Member, IEEE

Abstract: One of the hard problems that combines research in Computer Graphics and Computer Vision is tracking human limbs in real time without using any markers. This problem is known to be notoriously difficult and ill posed. In this paper we present a technique to track human limbs at near interactive rates without markers. This work builds on, modifies, and adds to the state-of-the-art tracking techniques developed by previous researchers. We use a particle filtering tracking algorithm that uses 3D data derived from a modified visual hull algorithm. The particle filtering algorithm runs on a GPU, and hence we are able to track human limbs at 4 Hz with reasonable accuracy. This method has the potential to be applied to tracking the whole human body without markers.

Index Terms: I.3.7.a Animation, I.4.8 Scene Analysis, I.4.8.n Tracking, I.3.7 Three Dimensional Graphics and Realism.

1 INTRODUCTION AND MOTIVATION

THE applications of Marker-less Limb Tracking in Real Time (abbreviated as MLTRT from now on) are many. First, if we can track a person’s limb poses over time, we can construct a free view-point video by rendering the person’s 3D model from novel camera positions. The viewer watching this video can alter the view-point in the video at will. Previously, [1], [2], and [3] have achieved this and have synthesized video from novel view-points, but only by offline processing. Second, we can render the tracked position of a user’s limbs in VR environments without the use of cumbersome marker-based systems. [21], [22] have shown that if a user in a VR environment can see his or her limbs, the feeling of immersion is increased. Third, MLTRT will be very useful for new types of user interfaces. We can use human actions such as pointing, waving hands etc.
instead of pointing devices such as mice or joysticks to interact with our computer. Fourth, MLTRT can be used for achieving telepresence and telecollaboration in combined virtual and real environments. Gross et al. [14] achieve this by constructing 3D video consisting of video fragments (3D point samples derived from a visual hull) and streaming it across the network. MLTRT would enable us to stream just the joint angle values over the network, thereby reducing the bandwidth required to drive such systems.

Section 2 describes our ideas and further sections show how we implemented these ideas. In Section 3 we describe the previous work done in both the Computer Vision and Computer Graphics literature on human motion tracking. Section 4 gives an overview of our work. Section 5 goes into the details of particle filtering. Section 6 describes the implementation of the particle filtering algorithm on a GPU and its tracking speed and accuracy. In Section 7 we describe how we obtain the necessary 3D data from a visual hull. Section 8 describes our attempt to do marker-less limb tracking in PCA (Eigen) space. Section 9 describes the real-time implementation of the system. Finally, we conclude and describe our future work.

Srinivasa G. Rao is a PhD student in the Department of Computer Science, University of North Carolina, Charlotte, NC 28213. E-mail: srao3@uncc.edu. Professor Larry F. Hodges is the chair of the Department of Computer Science, University of North Carolina, Charlotte, NC 28213. E-mail: lfhodges@uncc.edu.

2 CONTRIBUTIONS

Our ideas and technical contributions can be summarized as follows. (a) Even though vision and computer graphics researchers have used camera images as input for tracking algorithms, only the silhouette information is used for estimating motion parameters [1].
Some researchers have used 3D data [6], [7] but have not used a robust tracking framework such as particle filtering. Our major contribution is to integrate current particle filtering techniques, primarily found in the computer vision literature, with three-dimensional visual hull techniques from computer graphics. (b) Previous techniques for marker-less limb tracking (MLT) have required offline processing and hence cannot track in real time as data is acquired. We implement a parallelizable particle filtering algorithm with 3D data as input on a GPU, which enables us to track human limbs at near interactive rates.

3 PREVIOUS WORK

This section starts by summarizing the work done on MLT in the computer vision literature. Then we summarize similar research done in the computer graphics literature.

MLTRT is challenging due to the large number of degrees of freedom (DOFs) of the articulated human body, the lack of explicit depth information in 2D images, occlusion, and the complexity of human dynamics and kinematics. There has been extensive research in the field of computer vision on MLT, but not at real-time rates (MLTRT). Deutscher et al. [9], [10] have used particle filters to do MLT. Particle filtering provides a robust Bayesian framework for human motion capture. A kinematic body model is used to “search for” the optimal body pose (a vector of joint angles of the human body) that minimizes the error between the kinematic model’s projection on an image plane and the body silhouettes obtained by background subtraction on camera images. We refer interested readers to [9], [10] for a more detailed description. This is the basic framework that we have modified, and we describe it in detail in Section 5.

Sigal et al. [11] pose the problem of 3D human limb tracking as one of inference in a graphical model. Their model of the body is a collection of loosely-connected limbs.
Conditional probabilities relating the 3D pose of connected limbs are learned from motion-captured training data. Human pose and motion estimation is then solved with non-parametric belief propagation using a variation of particle filtering that can be applied over a general loopy graph. Gavrila and Davis [12] use a decomposition approach and a best-first technique to search the high dimensional pose parameter space for the pose of the articulated model that best matches the image data. Both ellipsoids [7] and superquadrics [12] have been used in kinematic models. Bregler and Malik [13] have used the product of exponential maps and twist motions to track a walking person in a video captured from the side. Cheung et al. [8] use an algorithm that iteratively assigns points on the silhouettes to each articulated part of the kinematic model and estimates the motion of each individual part using the segmented silhouette. Luck and Small [6] create a force field exerted by voxels in a volumetric data set to fit a kinematic skeleton at near interactive frame rates.

Computer graphics researchers have gone a step further and generated free view-point and virtual videos from novel views after estimating motion parameters. The approaches in [15], [16] compute a visual hull, which is a coarse representation of human shape, from a set of input images acquired from cameras at known positions. The visual hull can then be rendered from novel viewpoints. Vedula et al. [17] have created models of moving human actors using multi-view video, voxel-based reconstruction, and space-time interpolation along the 3D scene flow. Carranza et al. [1] have used sophisticated human models for offline parameter estimation and then rendered free view-point video from novel view-points. Gross et al. [14] have used 3D point samples derived from a visual hull for telepresence in CAVE-like environments. However, a visual hull and its derivatives are a very coarse approximation for a high quality video.
Recently, [4] and [5] have demonstrated that, with the use of many cameras and high bandwidth and/or offline computation, it is possible to generate virtual videos and autostereoscopic 3D display even without estimating any motion parameters. However, in such systems not only is the computational and/or bandwidth cost very high, but the novel views generated are also restricted to positions very close to and in between the views captured by the cameras.

4 OVERVIEW OF OUR WORK

Our work aims to generate a high quality live-action virtual video at near interactive rates. To accomplish this, we track the joint angles of human motion using a simple kinematic model. Using the joint angle data estimated by the tracking algorithm, we can animate a high resolution, high quality, preprocessed 3D human model from novel view-points. We achieve this by implementing a particle filtering tracking algorithm that runs on a Graphics Processing Unit (GPU) and uses 3D data derived from a visual hull. MLT, let alone MLTRT, is known to be a notoriously difficult and ill posed problem. In this work, we have decided to solve a much simpler problem first: tracking only human limbs, without any markers on the limbs. Fig. 1 shows the architecture of our system.

Fig. 1. The system architecture.

5 PARTICLE FILTERING ALGORITHM (PFA)

In this section, we describe the particle filtering techniques we use for tracking. Our algorithm is a modification of the techniques originally presented in [9], [10]. The term “particle” should not be confused with the particles used in physical simulation of fire, smoke, water etc. Here, a “particle” is a vector whose dimension equals the number of degrees of freedom (say d) of the limbs of an articulated model. It can be thought of as a point in d-dimensional space. We first simulated our entire work and studied the nature of the MLTRT problem using the CMU Mocap data and viewing software [18].
To determine the accuracy of our tracking approach, we compared the synthetic 3D data points rendered by the Mocap software from the motion captured data (Fig. 2b, yellow points) to the pose determined by our tracking algorithm. Our kinematic model (Fig. 2a) consisted of ellipsoidal limbs of the same bone structure with 10 DOF: three rotation values for each thigh, one rotation value (about the X axis, shown as a red line) for each leg, and one rotation value (about the X axis) for each foot. Hence in our case each particle is a vector of floating point values with 10 components for tracking legs, and a 4-component vector (3 DOF for the shoulder and 1 DOF for the arm) for tracking arms.

Fig. 2. Clockwise starting from bottom left: (a) kinematic model, (b) synthetic 3D data, (c), (d) particles having an imperfect match, (e) particle having a perfect match with the 3D data, and (f) typical MLTRT weight function plot, with the green line indicating ground truth, i.e., the particle where the weight function has its highest peak.

5.1 Multimodal property of the PFA weight function

A particle P (a vector of dimension d) describes a pose of the kinematic model. A measure of how well this particle matches the actual 3D data is the fraction of 3D points that are inside the ellipsoids of the model for that particular pose. This is called the weight, w, of the particle. Weights lie in [0, 1]. For example, the particle in Fig. 2c has a lesser weight than the particle in Fig. 2d. The particle in Fig. 2e has a weight of 1, since it matches the 3D data perfectly; this is the particle/pose that we want to search for. But even a non-perfect match like the one shown in Fig. 2d can have a weight close to 1. This is because the ellipsoids representing the right foot and the lower part of the right leg of the kinematic model contain some 3D points of the ground truth that actually belong to the middle part of the right leg. Due to this, the weight function in MLTRT is multimodal, which means it has many peaks, which further means we cannot use gradient descent methods or their variants to search for the peak of such a function.

Say we have only 4 DOF (rotation about the X axis for thighs and legs). Also suppose we know the maximum and minimum angles of rotation for each DOF. We want to find where the highest peak lies. If we take 10 values between the minimum and maximum values for each DOF, we end up with 10^4 particles whose weights we have to evaluate to find the peak of the function. Hence, the computation is exponential in the number of DOFs. Fig. 2f shows a plot (particle number along the X axis and weights along the Y axis) of the weights of the 10^4 particles; only the middle 6000 particles are shown for brevity. This illustrates the multimodal nature of the MLTRT weight function. The green line represents the particle in Fig. 2(e), which has the pose that is closest to ground truth.

Fig. 3. The annealing stack of the PFA for an arbitrary frame, Frame K. The green line gives the ground truth. See Sections 5.1 and 5.2 for a description of the process.

5.2 Details of the PFA

A Particle Filtering Algorithm (PFA for brevity) may be used to search for a peak of such a multimodal function. Fig. 3 demonstrates how the PFA works. We have to track the 3D data in each frame, which means we have to run the PFA for each frame. Each run of the PFA has an annealing stack that has several layers. The main idea is based on simulated annealing [23]. At the topmost layer (say M-1), the weight function described in the previous section is smoothed so that the search does not get “distracted” by smaller peaks. As the PFA progresses from the topmost layer to the bottommost layer, the degree of smoothing of the weight function is decreased such that at layer 0 we use the original unsmoothed weight function. At the beginning of the PFA, a particular number (say N) of random particles (poses or vectors, each of d dimensions) are generated.
Their weights are computed depending on how closely they match the actual 3D data, as described above (for a more detailed description see Section 6). Then the fraction of particles (say half of them) with the highest weights is selected. Gaussian noise (also a vector) with a zero mean vector and a particular variance (a diagonal matrix; correlations between the noise in different dimensions are assumed to be 0) is generated and added to the selected weighted particles to generate unweighted particles for the next layer. This process of computing the weights of particles and “propagating” only the heavier fraction of them to the next layer is repeated M times. Thus, the particles/poses that match the given 3D data more closely “live” and the rest “die out” as the PFA progresses. The PFA is very robust for tracking because some of the particles with a low initial weight “live long enough” and sometimes turn out to be a very close match at the end.

We learn the variance for each DOF from the motion captured data and use those values while running the PFA. A histogram of the absolute difference between consecutive frame values is generated for each DOF. The maximum change in that DOF is taken from each histogram after discounting for the noise. The standard deviation of the noise for the particular DOF is set to one third of this maximum value at the topmost layer of the PFA. As the PFA progresses from the topmost to the bottommost layer, noise of smaller and smaller variance is added to the heavier fraction of weighted particles that are selected for generating unweighted particles for the next layer, and the weight function becomes closer and closer to the actual unsmoothed weight function. This results in many particles that are near the desired peak of the multimodal MLTRT weight function, as shown in Fig. 3.
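The annealing loop of Section 5.2, including the weighted average over the selected particles at layer 0, can be sketched in Python with NumPy as follows. This is only an illustrative sketch, not the implementation used in this work. Several details are assumptions that the text does not specify: the function name `annealed_particle_search`, the way smoothing is passed to a caller-supplied `weight_fn(pose, smoothing)`, drawing the initial particles uniformly between joint limits, refilling the population by cycling through the survivors, and halving the noise at each layer (`noise_decay`). The toy weight function at the end is synthetic and stands in for the 3D-data weight.

```python
import numpy as np


def annealed_particle_search(weight_fn, lower, upper, sigma_top,
                             n_particles=81, n_layers=3,
                             keep_fraction=0.5, noise_decay=0.5, seed=0):
    """Run one annealing stack (one frame) and return the estimated pose."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    sigma = np.asarray(sigma_top, dtype=float)
    n_keep = max(1, int(keep_fraction * n_particles))

    # N random particles, each a d-dimensional pose vector (assumed uniform
    # between the joint limits).
    particles = rng.uniform(lower, upper, size=(n_particles, lower.size))

    for layer in range(n_layers - 1, -1, -1):        # layers M-1, ..., 0
        # Smoothing is strongest at the top layer and absent at layer 0.
        smoothing = layer / max(1, n_layers - 1)
        weights = np.array([weight_fn(p, smoothing) for p in particles])

        # Deterministic selection: keep the heaviest fraction of particles.
        best = np.argsort(weights)[::-1][:n_keep]
        survivors, survivor_weights = particles[best], weights[best]
        if layer == 0:
            break

        # Refill to N by cycling through the survivors (assumption), then add
        # zero-mean Gaussian noise with a diagonal covariance.
        parents = survivors[np.arange(n_particles) % n_keep]
        particles = np.clip(parents + rng.normal(0.0, sigma, parents.shape),
                            lower, upper)
        sigma = sigma * noise_decay                  # smaller noise lower down

    if survivor_weights.sum() == 0.0:
        return survivors.mean(axis=0)
    # Weighted average of the particles selected at layer 0.
    return np.average(survivors, axis=0, weights=survivor_weights)


# Toy usage with a synthetic 2-DOF weight function (hypothetical).
target = np.array([0.3, -0.2])


def toy_weight(pose, smoothing):
    width = 0.2 + smoothing                          # wider peak when smoothed
    return float(np.exp(-np.sum((pose - target) ** 2) / (2 * width ** 2)))


estimate = annealed_particle_search(toy_weight, [-1, -1], [1, 1], [0.2, 0.2])
```

The defaults N = 81 and M = 3 mirror the values reported in Section 6.2; everything else is a free parameter of the sketch.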
After we reach the bottom layer (layer 0), an average of the selected particles (weighted by their weights) is computed to determine the particle that is very close to the actual 3D data. This is the particle where the highest peak of the multimodal weight function occurs, i.e., the particle/pose that we want to search for. Fig. 3 shows the PFA in action with number of particles (N) = 4, a selection fraction of 0.5 (i.e., the 2 heavier particles get selected), and number of layers (M) = 3.

5.3 Technical Contributions

We have modified the PFA described in [9] and [10] in the following ways.

(a) Deutscher et al. [9], [10] use 2D images to compute the weight function. The contours of an actor are obtained by background subtraction on a set of camera images. They then project the kinematic model onto the camera images and compute the weight of a particle depending on how closely the projection matches the contours. We have found that the use of 2D images causes many problems during the PFA. An example is shown in Fig. 4, where green is the kinematic model and red is ground truth: (a), (b), (c) and (d) show four images from four surrounding synthetic cameras, placed at 90 degrees separation (front, left, back and right) around the synthetic kinematic model. Notice that, even though the reprojection error (the number of green pixels not overlapping with the red foreground contour) is not very high, the position picked by the PFA is far from ground truth. The left leg of the kinematic model is matched up with the right leg of the ground truth and vice versa, as seen in Fig. 4(e). But when we use 3D data for tracking, as shown in Fig. 4(f), this problem does not happen. In addition, using 3D data for weight computation is more efficient for GPU implementation, as we show in Section 6.

Fig. 4. Clockwise from top left: 2D image from (a) the camera in front, (b) the camera on the left, (c) the camera behind, (d) the camera on the right, (e) view from a camera at 45 degrees, (f) result of using 3D data.

(b) Deutscher et al. [9], [10] select the particles with a probability proportional to their weights, with replacement, for generating new particles. Initially, we implemented this approach by dividing the unit interval [0, 1] into N (number of particles) intervals whose lengths were equal to the normalized particle weights. Then, we generated a uniform random number. Based on which interval this random number belonged to, we chose the corresponding particle. But pseudo random number generators generate random numbers whose distribution is only approximately uniform (or approximately Gaussian, for that matter). See Fig. 5(a). Due to this, particles with lesser weights were sometimes chosen more times than ones with heavier weights for generating new particles for the next layer, contrary to what the PFA requires (Fig. 5(b)). Hence, to fix this problem, we now use a deterministic rather than a probabilistic algorithm to do the selection (Fig. 5(c)).

(c) Also, we found that use of the cross-over operator found in Genetic Algorithms [10] actually resulted in particles having more variance during the PFA compared to those simulations where we did not use it. The smaller the variance is for the DOFs, the better it is for the PFA. Hence, in contrast to [10], we do not use the cross-over operator in our implementation. This comparison is shown in Fig. 5(d).

Fig. 5. Clockwise from top left: (a) the distribution is only approximately uniform; hence (b) particles with lesser weights are sometimes selected more times for generating new particles (# SG) than ones with higher weights, which is avoided by using a deterministic algorithm (c). (d) Use of the crossover operator results in particles having more variance in some DOFs.

6 IMPLEMENTATION OF THE PFA ON THE GPU

The PFA executes partly on the CPU and partly on a GPU. To speed up the execution of the PFA, the weight computation, which is parallelizable, is implemented on a GPU. The remainder of the PFA is implemented on the CPU. The part of the PFA that executes on the CPU supplies appropriate texture data to the GPU during multi-pass rendering, which then executes its part of the PFA.

Fig. 6 shows an articulated model (on the left) with a thigh and a leg each represented by one ellipsoid (solid blue) with their local coordinate systems. On the right, we have synthetic ground truth 3D data, color coded depending on which limb (ellipsoid) of the articulated model the 3D point belongs to. The 3D data is in the global coordinate system. Yellow means the point belongs to the leg, cyan means it belongs to the thigh, and blue means it does not belong to any part of the kinematic articulated model. Green colored 3D points are not of interest. The articulated kinematic model and the synthetic 3D data have the same “root” position, i.e., the green sphere of the articulated model on the left contains all red 3D points, but here they are shown apart just for clarity. Also shown is the global coordinate system.

6.1 3D weight calculation

The weight of a particle (pose) like the one shown in Fig. 6 can be calculated by this simple algorithm.

(1) Mark all the 3D data points as Belongs to No Ellipsoid (BNE), i.e., set the BNE flags of all 3D points to 1.

(2) For each ellipsoid (having radii xradius, yradius and zradius in the corresponding directions) in the articulated model: Transform the ellipsoid’s local coordinate system to the global coordinate system. We get O, the position of the local coordinate system’s origin in global coordinates, and X, Y, Z, the unit vectors of the local coordinate system transformed to the global coordinate system. For all 3D points P = (x, y, z) that have BNE set to 1: let D = (P - O); Dx = (X-O).D; Dy = (Y-O).D; Dz = (Z-O).D. If the 3D point is inside the ellipsoid, i.e., (Dx <= xradius) and (Dy <= yradius) and (Dz <= zradius), then set its BNE flag to 0, indicating that the 3D point belongs to one of the ellipsoids.

(3) Weight of the particle w = (total number of 3D points - number of 3D points with BNE flag set to 1) / (total number of 3D points).

Fig. 6. 3D weight calculation.

6.2 Parallelization of 3D weight calculation

The computational cost of the PFA comes from calculating the weight of each particle, in each layer, and for each frame. The process can be easily parallelized. Notice that the BNE flags for different particles are independent of each other. Also notice that, given a single particle, we have to process the BNE flags for the 1st ellipsoid first, and then for the 2nd ellipsoid, using only those 3D points whose BNE flag was NOT set to 0 by the 1st ellipsoid, and so on. So, if we can feed the same 3D data for every particle, the BNE flags for a particular ellipsoid can be processed for all the particles independently in parallel; this corresponds to one rendering. Notice that a particular ellipsoid will have different orientations for different particles. So we process the BNE flags for the 1st ellipsoid of all particles (independently of each other) and use the resulting data for the 2nd ellipsoid of all particles, and so on. Hence we have to update the frame buffer a number of times equal to the number of ellipsoids in the articulated model. In this way we have parallelized the weight computation.

We use the OpenGL Shading Language (GLSL) to program the GPU. We use the fragment shader (FS for brevity) on the GPU to do this parallel computation. The vertex shader (VS) is just a pass-through shader. We input the 3D data into the FS as a 512×512 RGB texture. We do multi-pass rendering because we need to use the data from the previous render as an input for the next render. Each render corresponds to an ellipsoid in the articulated kinematic model. In each rendering, we render N textured squares; each square corresponds to one particle.
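As a CPU-side reference for the weight calculation of Section 6.1, the following Python/NumPy sketch computes the weight of a single particle. It is a hedged illustration rather than the GLSL code used in this work. The function name `particle_weight` and the `(origin, axes, radii)` tuple layout are hypothetical. The sketch also makes two assumptions: the rows of `axes` are taken to be the local unit axis directions already expressed in global coordinates (the role played by X-O, Y-O, Z-O in the text), and the per-axis comparison is applied to absolute values so that points on both sides of the origin are accepted.

```python
import numpy as np


def particle_weight(points, ellipsoids):
    """Fraction of 3D points claimed by at least one ellipsoid of a pose.

    points:     (n, 3) array of 3D points in global coordinates.
    ellipsoids: iterable of (origin, axes, radii); origin is (3,), axes is
                (3, 3) with rows = local unit X, Y, Z directions in global
                coordinates, radii is (3,).
    """
    points = np.asarray(points, dtype=float)
    bne = np.ones(len(points), dtype=bool)           # step (1): all flags = 1
    for origin, axes, radii in ellipsoids:           # step (2)
        candidates = np.flatnonzero(bne)             # points still unclaimed
        d = points[candidates] - np.asarray(origin, dtype=float)  # D = P - O
        local = d @ np.asarray(axes, dtype=float).T  # columns: Dx, Dy, Dz
        inside = np.all(np.abs(local) <= np.asarray(radii, dtype=float),
                        axis=1)
        bne[candidates[inside]] = False              # BNE flag set to 0
    # step (3): (total - points still flagged) / total
    return (len(points) - np.count_nonzero(bne)) / len(points)


# Toy usage: four points and two axis-aligned ellipsoids (hypothetical data).
points = [[0, 0, 0], [0.5, 0, 0], [3, 0, 0], [0, 5, 0]]
thigh = ((0, 0, 0), np.eye(3), (1, 1, 1))
leg = ((3, 0, 0), np.eye(3), (0.5, 0.5, 0.5))
w_thigh_only = particle_weight(points, [thigh])      # 2 of 4 points claimed
w_both = particle_weight(points, [thigh, leg])       # 3 of 4 points claimed
```

Because each call depends only on the shared point set and on one particle's ellipsoids, the calls for different particles are independent, which is the property that the GPU implementation exploits by processing one ellipsoid of all particles per rendering.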
We use a uniform 4×4 matrix variable (GLSL) to feed the ellipsoid’s orientation matrix for the corresponding particle to the FS. Each point in the square triggers the FS, which checks whether the corresponding 3D point (read from the texture) has its BNE flag set to 1. If it does, the FS checks whether the point is inside the current ellipsoid. If so, its BNE flag is set to 0 and that fragment’s color is set to black during rendering. If not, the fragment is rendered with the color originally read from the texture. At the end of all these renders we are left, for each particle, with only those 3D points that do not belong to ANY ellipsoid, i.e., whose BNE flags are still set to 1. Fig. 7 shows two frame buffer read backs, at the start (left) and near the end of multi-pass rendering (right). Notice that some squares have all black pixels (the corresponding particles fit the 3D data very well) and some do not.

We use two Pbuffers for multi-pass rendering. We first render to buffer1; in the next rendering we read from buffer1 and render to buffer2; in the rendering after that we read from buffer2 and render to buffer1, and so on (usually known as the “ping pong” action). We have 6 ellipsoids (2 thighs, 2 legs, 2 feet) in our articulated model for tracking legs and 2 ellipsoids for tracking an arm. We have used N = 81 particles. So we have to do 6 (or 2) rendering passes for each anneal layer of the PFA, depending on whether we are tracking legs (or arms), followed by a read back of the frame buffer. We have used M = 3 anneal layers. Hence, to track each frame, we need 18 (or 6) renderings and 3 read backs of the frame buffer.

Fig. 7. Two sample frame buffer read backs.

6.3 Tracking Accuracy and Speed

If we implement the PFA on a Pentium 4 3.06 GHz CPU only, it takes 5 seconds to calculate the pose of the articulated model in each frame. When we use both the CPU and a GeForce 5900FX GPU, the time to calculate the pose is reduced to 0.25 seconds, a 20X speedup. We also calculated the accuracy of the PFA by calculating the Euclidean distance between the ground truth particle (a d-dimensional vector of angles) and the particle found by the PFA for each frame, and averaging it over many ground truth sequences. The average absolute joint angle error was 7.7302 degrees. The maximum, minimum and standard deviation of the error were 19.3103, 0.0 and 4.5398 degrees respectively.

6.4 Comparison with 2D weight calculation

We compare our approach with the approach taken in [9], [10] in this section. Let us say that instead of deriving 3D data from camera images, we wanted to use the 2D re-projection error on the image plane for weight computation. If we use only 6 renderings per anneal layer, to match the number of renderings per anneal layer in our 3D data method, then in each rendering we must process N/6 = 81/6, i.e., about 14 particles. If we use 4 cameras as done in [9] and render a 512×512 quadrilateral each time during the PFA, then each camera image would occupy (512×512)/(14×4), i.e., about 4681 pixels. Assuming we are capturing 320×240 images (76800 pixels), this would mean we have to resize each image to less than 1/16th the size of the original image. Apart from having fundamental pose calculation problems like that shown in Fig. 4, this would severely alias the image data, which would adversely affect the performance of the PFA.

7 DERIVING 3D DATA FROM A VISUAL HULL

We use a modified Visual Hull algorithm to derive 3D points inside the 3D object directly. We refer interested readers to [15] for more details. We use this 3D point data for the PFA and hence achieve MLT of human limbs at near interactive rates. Since we only need the 3D points inside the 3D object, the modified visual hull algorithm has O(n) complexity instead of O(n^2), where n is the number of cameras. Hence, less computation time is spent on computing the 3D data.

Fig. 8.
Image contours from 3 cameras, one each along the X, Y and Z axes, and modified visual hull computation.

Consider three calibrated cameras, one each along the X, Y and Z axes. The algorithm can be described as follows.

(1) Choose grid points on the foreground contour obtained from Camera1 (Fig. 8, red points on black contour).

(2) For each of these points, do:

(a) Compute the 3D line (red line) passing through the camera center and the grid point (see Fig. 8).

(b) Compute the corresponding epipolar lines on the image planes of Camera2 and Camera3 (green and blue lines on the respective image planes). Intersect these with the corresponding contours. Using these points of intersection for Camera2, compute that portion of the red 3D line which, when projected onto the image plane of Camera2, belongs to the foreground contour of Camera2, say Cam2_3DLine. Similarly compute Cam3_3DLine.

(c) Compute the part of the red 3D line that is the intersection of Cam2_3DLine and Cam3_3DLine (the black part of the red 3D line in Fig. 8).

(d) Choose 3D points on this line. The number of 3D points chosen is directly proportional to the length of the black 3D line (see Fig. 8).

Fig. 9 shows the result of this algorithm at an instant in time. The 3D points inside the 3D object derived from the contours in the camera images are shown on the left. A close up view of the 3D points is shown on the right.

Fig. 9. (Left) 3D point data derived from the modified visual hull computation and (Right) zoomed in version.

8 PROBLEMS WITH DOING MLTRT IN PCA SPACE

During this work, we tried another idea. Safonova et al. [19] and Grochow et al. [20] showed that human motion can be simulated and solved for in a lower dimensional space rather than in the original high DOF space. We thought that this would help in particle filtering too. If we could do the PFA in a lower dimensional Eigen (PCA) space, we could use a smaller number of particles and hence reduce computation time. We computed the covariance matrix and base vectors for the DOFs of our articulated model in the PCA space from the Mocap database [18]. We postulated that the error due to tracking in PCA space would be acceptable. But it turns out that the error in PCA space is not acceptable. Fig. 10 shows two instances where the PFA failed in PCA space. We think this is because Safonova et al. [19] actually solved for motion parameters on a whole sequence by specifying positions for the articulated model at some key frames. This results in the solution of motion parameters “getting pulled to the right path”. Since we do not do any kind of optimization or key frame position specification, doing the PFA in PCA space actually decreases its robustness. Also, since the position computed for Frame K is used to generate particles for Frame K+1, tracking errors accumulate while doing the PFA in PCA space.

Fig. 10. Two instances showing failure of the PFA in PCA space. For both, tracking in actual space (left) and tracking in PCA space (right).

9 REAL TIME IMPLEMENTATION OF OUR SYSTEM

We implemented the whole system on a Pentium 4 3.06 GHz processor and a GeForce 5900FX video card. We used 3 IBOT FireWire web cameras for hand tracking and leg tracking. GLSL and the GLEW library were used for GPU programming. The calibration toolbox by [24] was used to calibrate our cameras. The DirectX 9.0 library was used to capture camera images and synchronize them in software. The whole program has 7 threads. A main thread creates and controls all other threads. There is one thread for doing only the GPU part of the PFA. There are separate threads for capturing data from each camera. The main thread requests data from all the camera threads and suspends itself until it receives updates from all of the camera threads. Then a separate thread computes the visual hull and the 3D data, which is then fed to the PFA. A separate debug thread displays all the debugging information. We were able to track human limbs with reasonable accuracy at 4 Hz. See Fig. 11, Fig. 12, Fig. 13 and Fig. 14 for a few tracking results.

10 CONCLUSION AND FUTURE WORK

We have demonstrated how human limbs can be tracked at near interactive rates. We have also demonstrated that using 3D data derived from a visual hull greatly improves the robust particle filtering framework. Implementation of the particle filtering framework on a GPU has enabled us to do MLTRT at 4 Hz with a mean error of 7.7302 degrees. We are currently working on optimizing the algorithm further and increasing the update rate using a faster GPU. We also want to try out full body MLTRT. We will investigate signal processing issues associated with smoothing and sampling of the MLTRT weight function. We will integrate human dynamics and collision detection between limbs into MLTRT. We will then proceed to using this technique for free view-point video, VR gaming, HCI, and telepresence in combined VR and real environments.

ACKNOWLEDGMENT

The first author would like to thank his friends Gajendra Singh, Arvind Somya and Madhusudhan Reddy for their help and moral support during this project.

Fig. 11. First row shows images of a hand at a particular position captured by three cameras. Second row shows the corresponding foreground segments extracted by background subtraction. Last row shows three virtual views of the articulated model that is used to track the user’s hands; tracking and rendering of virtual views happen in real time at near interactive rates.

Fig. 12. Same as Fig. 11, but for a different pose of the arm.

Fig. 14. Same as Fig. 13, but for a different pose of the legs.

Fig. 13. First row shows images of legs at a particular position captured by three cameras.
Second row shows corresponding foreground segments extracted by doing background subtraction. Last row shows three virtual views of the articulated model that is used to track user’s legs – tracking and rendering of virtual views happens in real time at near interactive rates.

REFERENCES

[1] Carranza, J., Theobalt, C., Magnor, M. A., Seidel, H., “Free-Viewpoint Video of Human Actors”. In Proceedings of SIGGRAPH 2003, ACM Press / ACM SIGGRAPH, 2003.
[2] Wuermlin, S., Lamboray, E., Staadt, O., Gross, M., “3d video recorder”. In Proceedings of Pacific Graphics 2002, IEEE Computer Society Press, 325–334, 2002.
[3] Matsuyama, T., Akai, T., “Generation, visualization, and editing of 3D video”. In Proc. of 1st International Symposium on 3D Data Processing Visualization and Transmission (3DPVT’02), 234ff, 2002.
[4] Matusik, W., and Pfister, H., “3DTV: A Scalable System for Real-Time Acquisition, Transmission, and Autostereoscopic Display of Dynamic Scenes”. In Proceedings of SIGGRAPH 2004, ACM Press / ACM SIGGRAPH, 2004.
[5] Zitnick, C.L., Kang, S.B., Uyttendaele, M., Winder, S., Szeliski, R., “High-quality Video View Interpolation using a Layered Representation”. In Proceedings of SIGGRAPH 2004, ACM Press / ACM SIGGRAPH, 2004.
[6] Luck, J., Small, D., “Real-time markerless motion tracking using linked kinematic chains”. In Proc. of CVPRIP02, 2002.
[7] Cheung, K., Kanade, T., Bouguet, J.Y., Holler, M., “A real time system for robust 3D voxel reconstruction of human motions”. In Proc. of Computer Vision and Pattern Recognition, vol. 2, 714–720, 2000.
[8] Cheung, G., Baker, S., Kanade, T., “Shape-From-Silhouette of Articulated Objects and its Use for Human Body Kinematics Estimation and Motion Capture”. Proc. Conf. Computer Vision and Pattern Recognition, 2003.
[9] Deutscher, J., Blake, A., Reid, I., “Articulated body motion capture by annealed particle filtering”. In Proc. Conf. Computer Vision and Pattern Recognition, volume 2, pages 1144-1149, 2000.
[10] Deutscher, J., Davison, A.J., Reid, I., “Automatic Partitioning of High Dimensional Search Spaces associated with Articulated Body Motion Capture”. Proc. IEEE Conference on Computer Vision and Pattern Recognition, Kauai, 2001.
[11] Sigal, L., Bhatia, S., Roth, S., Black, M.J., Isard, M., “Tracking Loose-limbed People”. Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2004.
[12] Gavrila, D., and Davis, L., “3d model-based tracking of humans in action: a multi-view approach”. Proc. Conf. Computer Vision and Pattern Recognition, 73–80, 1996.
[13] Bregler, C., Malik, J., “Tracking People with Twists and Exponential Maps”. Proc. IEEE Computer Vision and Pattern Recognition, 1998.
[14] Gross, M., Würmlin, S., Naef, M., Lamboray, E., Spagno, C., Kunz, A., Koller-Meier, E., Svoboda, T., Van Gool, L., Lang, S., Strehlke, K., Moere, V.A., Staadt, O., “blue-c: A Spatially Immersive Display and 3D Video Portal for Telepresence”. Proceedings of ACM SIGGRAPH 2003, pp. 819-827, 2003.
[15] Matusik, W., Buehler, C., and McMillan, L., “Polyhedral Visual Hulls for Real-Time Rendering”. In Proceedings of Eurographics Workshop on Rendering, 2001.
[16] Laurentini, A., “The Visual Hull Concept for Silhouette Based Image Understanding”. IEEE PAMI, 16, 2, pp. 150-162, 1994.
[17] Vedula, S., Baker, S., Kanade, T., “Spatio-temporal view interpolation”. In Proceedings of the 13th ACM Eurographics Workshop on Rendering, 65–75, 2002.
[18] http://mocap.cs.cmu.edu/
[19] Safonova, A., Hodgins, J., Pollard, N., “Synthesizing Physically Realistic Human Motion in Low-Dimensional, Behavior-Specific Spaces”. In Proceedings of SIGGRAPH 2004, ACM Press / ACM SIGGRAPH, 2004.
[20] Grochow, K., Martin, S.L., Hertzmann, A., Popović, Z., “Style-based Inverse Kinematics”. ACM Transactions on Graphics (Proceedings of SIGGRAPH 2004), 2004.
[21] M. Slater and M. Usoh, “The Influence of a Virtual Body on Presence in Immersive Virtual Environments”. VR 93, Virtual Reality International, Proceedings of the Third Annual Conference on Virtual Reality, London, Meckler, 1993, pp. 34-42.
[22] M. Slater and M. Usoh, “Body Centred Interaction in Immersive Virtual Environments”. In Artificial Life and Virtual Reality, (N. Magnenat, D. Thalmann, eds.), John Wiley and Sons, 1994, pp. 125-148.
[23] Kirkpatrick, S., C.D. Gelatt Jr, and M.P. Vecchi, “Optimization by Simulated Annealing”. Science, V. 220, No. 4598, pp. 671–680, 1983.
[24] Tomas Svoboda, Daniel Martinec, and Tomas Pajdla, “A convenient multi-camera self-calibration for virtual environments”. PRESENCE: Teleoperators and Virtual Environments, 14(4), August 2005.

Srinivasa G. Rao is a PhD student in the Department of Computer Science in the College of Information Technology at the University of North Carolina - Charlotte. He earned his B.E. (Bachelor of Engineering) degree in Computer Science in 1999 from University of Mysore, India. He worked with IBM as a software engineer (1999-2000). He holds an M.S. in Computer Science from University of Maryland at College Park (2000-2002). He also worked at MERL labs as a research intern (2002-2003). His main research interests are Virtual Reality, Augmented Reality, Real time Computer Graphics and Computer Vision.

Dr. Larry F. Hodges received the PhD degree from North Carolina State University in Computer Engineering in 1988. He is a professor and chair of the Department of Computer Science in the College of Information Technology at University of North Carolina at Charlotte. Prior to moving to Charlotte, he spent 14 years as a faculty member in the College of Computing at the Georgia Institute of Technology, where he was a founding member of the Graphics, Visualization and Usability (GVU) Center. He is also cofounder of Virtually Better, a company that specializes in creating virtual environments for clinical applications in psychiatry, psychology, and addiction. His research interests include virtual reality, interactive computer graphics, visualization and 3D HCI.