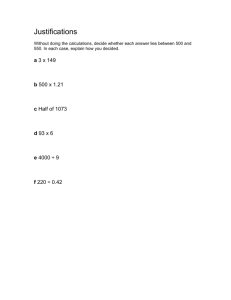



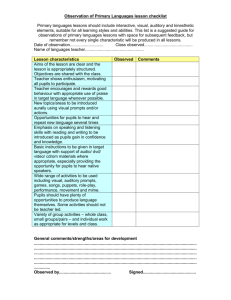

ASSESSMENT OF PERFORMANCE IN PRACTICAL SCIENCE AND PUPILS’ ATTRIBUTES.

Richard Gott and Ros Roberts, School of Education, University of Durham, UK

Paper presented at the British Educational Research Association Annual Conference, Heriot-Watt University, Edinburgh, 11-13 September 2003

PLEASE NOTE THAT MATERIAL IN THIS PAPER IS THE BASIS OF WORK SUBMITTED FOR PUBLICATION

Correspondence should be addressed to: Ros Roberts, School of Education, University of Durham, Leazes Road, Durham DH1 1TA, UK. Tel: +44 191 3348394. Email: Rosalyn.Roberts@durham.ac.uk

Abstract

Performance assessment in the UK science GCSE relies on pupils’ reports of their investigations. These are widely criticised (House of Commons (2002), Roberts and Gott (in press), Gott and Duggan (2002)). Written tests of procedural understanding could be used as an alternative, but what exactly do they measure? This paper describes small-scale research. We analysed three assessments: pupils’ module test scores on substantive ideas, their coursework performance assessment and a novel written evidence test, which is described here. Results from these different assessments were compared with each other and with baseline data on CAT scores and pupils’ attributes. Teachers were interviewed about pupils’ attributes; each pupil was then rated by two teachers, with inter-rater correlations of between 0.7 and 0.8. Measures of ‘conformity’ (which included conscientiousness, neatness and behaviour) and ‘native wit’ (confidence and quickness of thought) were established. Significant predictors of performance on each of these assessments were defined as those independent variables which contribute a relatively large share of the variance (10% or so, based on Beta > 0.3) and approach or surpass a p < 0.05 significance level.
Module scores were significantly predicted by pupils’ verbal (0.44, 0.00) and non-verbal (0.39, 0.00) reasoning scores (figures in brackets are the standardised Beta and its significance). Coursework was predicted by ‘conformity’ (0.51, 0.00) and verbal reasoning (0.34, 0.02). The written evidence test was predicted by ‘native wit’ (0.31, 0.01). The intended outcome of GCSE assessment is to ‘measure’ pupils’ ability to know and understand science and to be able to ‘do’ science. Currently, data from substantive tests and performance assessment are used for this purpose. But is this a valid and reliable assessment? The data reported here show that a choice could be made between practical coursework, which links to ‘conformity’, and written evidence tests, which link, albeit less strongly, with ‘native wit’. There would be differential effects on pupils.

Introduction

Many problems have been associated with the introduction of investigative work in Science 1 (Sc1) of the UK National Curriculum in 1989. It has been an area of considerable professional and academic debate, much of it centred on its assessment (Donnelly et al (1994), Donnelly (2000), Gott and Duggan (2002), UK House of Commons Science and Technology Committee (2002)).

Gott and Mashiter (1991) showed that practical problem solving in science can be seen to require an understanding of two sets of specific ideas or concepts: a substantive understanding and a procedural understanding (Figure 1). This approach recognises that, in addition to the substantive ideas, there is something, per se, to think about. It acknowledges that thinking has to be about something and recognises a knowledge base to procedural understanding.
Figure 1: A knowledge-based model of Sc1 (from Gott and Mashiter 1991). [Diagram elements: problem solving in science; mental processing (higher order investigative skills); substantive understanding (concepts, laws and theories; facts); procedural understanding (concepts associated with the collection, interpretation and validation of evidence; basic skills).]

Work at Durham University has developed a suggested list of the ideas (the concepts of evidence) that form the knowledge base of procedural understanding and are required when solving problems in science (http://www.dur.ac.uk/~ded0rg/Evidence/cofev.htm). It covers some 19 areas, ranging from fundamental ideas of causation and association, through experimental design, data analysis and interpretation, to validity and reliability as overarching touchstones of evidence ‘quality’.

Sc1 is currently assessed through written accounts of whole investigations and of parts of investigations. One possible solution to the assessment problems identified there is a written test of the concepts of evidence and their application to ‘small’ problems (Roberts and Gott (a)). Figure 2 shows how different assessment tasks can be related to procedural ideas. It is recognised that a written test is not a replacement for either a practical or a paper-and-pencil problem-solving task in which the ideas are synthesised to solve the problem. The test aims to assess a set of understandings, some of which will be a necessary part of problem solving but which do not in themselves constitute it.

Figure 2: Assessment tasks related to procedural ideas (from Roberts and Gott (a) in press). [Diagram: the elements of Figure 1, each paired with assessment tasks. Problem solving in science: complete open-ended practical investigations. Mental processing (higher order investigative skills): IT-based problem solving, or written design tasks. Procedural understanding: parts of investigations (e.g. choose apparatus to make a reliable measurement of the starch in a leaf, repeating until happy with the answer), or a written test of underpinning ideas. Basic skills: skill circuses, either practical (read a thermometer) or written (draw a graph from data).]

How would pupils’ performance be affected by this alternative form of assessment?

Methods

The written test of the concepts of evidence was given to nearly a hundred pupils aged 14-15 in a school in the north-east of England. The items were randomly distributed across biology, chemistry and physics contexts, although the context was sometimes driven by the concept of evidence to be assessed (e.g. sample size was most appropriately addressed in a biology context). The test was subjected to checks on facility and discrimination; the results are shown in Table i. Item facilities are reasonable and no item discriminates negatively, although the discrimination values suggest there is room for improvement in item construction. The Cronbach alpha was 0.85. A factor analysis showed only one significant factor, which accounted for over two thirds of the variance. There is no claim that this test is robust enough for large-scale assessment in its present form. It is intended only to illustrate the sort of test that could be developed.
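The item statistics in Table i and the Cronbach alpha quoted above are all derived from a pupils-by-items score matrix. The paper does not say exactly how facility and discrimination were calculated, so the sketch below rests on assumptions, labelled in the code: items are marked 0/1, facility is the proportion of pupils answering correctly, and discrimination is the corrected item-total (item-rest) correlation, which is one common index among several. The six-pupil response matrix is invented purely to exercise the functions.

```python
"""Classical item analysis for a pupils-by-items score matrix (a sketch).

Assumptions, not taken from the paper: items are marked 0/1, facility is the
proportion correct, and discrimination is the corrected item-total (item-rest)
Pearson correlation. Other discrimination indices exist.
"""
from math import sqrt
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation; undefined (ZeroDivisionError) if an input is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def facility(scores, item):
    """Proportion of pupils scoring 1 on one item (0/1 marking assumed)."""
    return mean(row[item] for row in scores)


def discrimination(scores, item):
    """Correlation of an item with the total of all the *other* items."""
    item_scores = [row[item] for row in scores]
    rest_totals = [sum(row) - row[item] for row in scores]
    return pearson(item_scores, rest_totals)


def cronbach_alpha(scores):
    """alpha = k/(k-1) * (1 - sum(item variances) / variance(total scores))."""
    k = len(scores[0])
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical responses: 6 pupils x 4 items, invented for illustration only.
demo = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]

for i in range(len(demo[0])):
    print(f"item {i + 1}: facility {facility(demo, i):.2f}, "
          f"discrimination {discrimination(demo, i):.2f}")
print(f"Cronbach alpha {cronbach_alpha(demo):.2f}")
```

Using the item-rest total rather than the full total avoids inflating each item's discrimination by correlating it partly with itself; with 19 items, as here, the difference would be smaller than in this four-item toy example.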
Table i: Description and analysis of the test items (Roberts and Gott b, in press)

| Test item | Item description | Facility | Discrimination |
|---|---|---|---|
| 1 | Interpretation of data in table | 0.44 | 0.18 |
| 2 | Interpretation of data in table | 0.44 | 0.16 |
| 3 | Design of an investigation with two independent variables | 0.50 | 0.64 |
| 4 | Interpretation of data where some conclusions cannot be justified | 0.71 | 0.15 |
| 5 | Choice of size of measuring cylinder for defined measurement task | 0.54 | 0.32 |
| 6 | Variation in measurements of the same quantity | 0.58 | 0.43 |
| 7 | Handling repeat measurements and averaging | 0.37 | 0.42 |
| 8 | Repeat measurements and variation | 0.28 | 0.30 |
| 9 | Deciding on how many repeat measurements are necessary | 0.48 | 0.28 |
| 10 | Deciding on the merits of data from two investigations of the same phenomenon | 0.52 | 0.45 |
| 11 | Choosing the best graph type | 0.33 | 0.24 |
| 12 | Graph types and variables | 0.53 | 0.31 |
| 13 | Lines of best fit | 0.60 | 0.51 |
| 14 | Variable identification in designing an investigation | 0.42 | 0.73 |
| 15 | Variable types | 0.49 | 0.58 |
| 16 | The value of a trial run | 0.52 | 0.45 |
| 17 | Anomalous data in a table | 0.23 | 0.42 |
| 18 | Interpreting data from multiple experiments | 0.15 | 0.30 |
| 19 | Sample size and the limits of generalising from the data | 0.25 | 0.38 |

In addition, data were available from seven written tests of the substantive ideas of science (based on previous external examinations and marked using the mark scheme provided by the examination board) and from pupils’ performance in five Sc1 investigations. These investigations, spread across all the science subjects, have been routinely used by teachers in coursework assessment, case law having established their acceptability to the examination board and its moderators. Pupils were ranked on each test.

We were particularly interested, following this preliminary work with the test data, in uncovering what we could about the attributes of pupils who did well in some tests and not others, or who did well or badly in them all. Once the test data were to hand, we produced 6 lists of names.
Each list contained the names of the pupils in the top 20 or the bottom 20 on one of the tests. From these lists of pupils at the extremes of performance, 22 pupils were selected and their names were printed on ‘flashcards’. All the science teachers in the school were simply asked to reflect on the names on the cards and offer any comments about the pupils. Their responses were recorded and transcribed, and a set of ‘attributes’ was produced from the transcript (Table iii).

Table iii: Pupil attributes (Gott and Roberts in press)

| Attribute | Definition |
|---|---|
| Conscientiousness | Works hard at tasks, including homework, even when the tasks are proving difficult. |
| Neat work | Neat work with careful handwriting (not always ‘good’), neat layout of work etc. |
| Behaviour/attention | Attentive and well behaved, with no (or minimal) disruption. Keen to learn. |
| Confidence | Feels confident and gets on with tasks, even in situations where that confidence is misplaced. |
| Quickness of thought | Faced with a novel situation in an everyday context (Krypton Factor, Scrapheap Challenge, collapse of arrangements for open day), will come up with a solution through strategic quickness of thought and an ability to see through to the heart of the issue. |

Every pupil was rated by two teachers using these attributes. The inter-rater correlations were about 0.7 to 0.8. Some individual cases differed substantially between raters; these were either resolved in discussion with the teachers involved or omitted from the data set. Inspection of the correlations between the 5 attributes, together with a cluster analysis, suggested that there were two fairly clearly defined clusters within these data. On this basis, two new variables were created: ‘behaviour’ (conscientiousness, neat work and behaviour/attention, the ‘conformity’ measure of the abstract) and ‘quickness’ (confidence and quickness of thought, the ‘native wit’ measure). Stanford Cognitive Ability Tests (CAT) scores for verbal, non-verbal and quantitative reasoning (Thorndike and Hagen, 1973) were also available for these pupils.

Results

Correlations were calculated between the scores on the three types of assessment.
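Both the inter-rater check described above and the correlations between assessments in Table ii are correlation coefficients computed over the same pupils. The sketch below shows Pearson's r for two teachers' ratings, flags pupils whose two ratings diverge (the cases the paper says were resolved in discussion or omitted), and builds a pairwise matrix of the kind shown in Table ii. The paper does not give the rating scale, the type of inter-rater correlation or the threshold for a large disagreement; the hypothetical 1-5 scale, Pearson's r and the two-point gap are assumptions, and every number in the code is invented.

```python
"""Inter-rater agreement and a pairwise correlation matrix (a sketch).

Assumptions, not from the paper: ratings are on a hypothetical 1-5 scale,
agreement is measured with Pearson's r, and a gap of 2 or more points counts
as a large disagreement. All data below are invented.
"""
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def discrepant_cases(rater_a, rater_b, max_gap=2):
    """Indices of pupils whose two ratings differ by at least max_gap points."""
    return [i for i, (a, b) in enumerate(zip(rater_a, rater_b))
            if abs(a - b) >= max_gap]


def correlation_matrix(columns):
    """Pairwise Pearson correlations between named score lists (symmetric)."""
    matrix = {(name, name): 1.0 for name in columns}
    for p, q in combinations(columns, 2):
        matrix[(p, q)] = matrix[(q, p)] = pearson(columns[p], columns[q])
    return matrix


# Two teachers' (hypothetical) ratings of the same six pupils on one attribute.
teacher_1 = [4, 3, 5, 2, 1, 4]
teacher_2 = [4, 2, 5, 3, 3, 4]
print("inter-rater r:", round(pearson(teacher_1, teacher_2), 2))
print("cases to resolve or omit:", discrepant_cases(teacher_1, teacher_2))

# Hypothetical z-scores on the three assessments for the same six pupils.
scores = {
    "Evidence test": [0.5, -0.2, 1.1, -0.8, -1.3, 0.7],
    "Coursework": [0.9, -0.5, 0.6, -0.1, -1.4, 0.5],
    "Module scores": [0.7, -0.6, 1.2, -0.4, -1.2, 0.3],
}
for (p, q), r in correlation_matrix(scores).items():
    if p < q:  # print each off-diagonal pair once
        print(f"{p} vs {q}: r = {r:.2f}")
```

Flagging large individual gaps matters because a respectable overall r can hide a few pupils on whom the two teachers disagree sharply.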
These are shown in Table ii, where POAE refers to the summed Sc1 coursework marks for the skill areas of planning, obtaining evidence, analysing evidence and evaluating evidence.

Table ii: Correlation matrix for assessments made in Year 10 (Roberts and Gott b, in press)

| | Evidence test | Sc1 Year 10 POAE scores summed | Year 10 module scores calibrated |
|---|---|---|---|
| Evidence test | 1 | .486 | .600 |
| Sc1 Year 10 POAE scores summed | .486 | 1 | .698 |
| Year 10 module scores calibrated | .600 | .698 | 1 |

Values are Pearson correlations; all three are significant at the 0.01 level (2-tailed).

These relatively low correlations are in line with the findings of other authors who have compared different forms of assessment, albeit in different circumstances (Gott and Murphy (1987), Baxter et al (1992), Stark (1999), Gray and Sharp (2001), Lawrenz et al (2001), Gott and Duggan (2002)). They give us little help in deciding which assessment(s) we might use in formal assessment and what the consequences of doing so might be.

The scores on each of the three types of assessment were entered in turn as the dependent variable in a linear regression analysis, with gender, ‘behaviour’, ‘quickness’ and verbal, non-verbal and quantitative reasoning as independent variables. We present the ‘Coefficients’ and ‘Model Summary’ tables from an SPSS analysis for each dependent variable in turn below (Tables iii-v). In every model the predictors were (Constant), ‘Behaviour’, Zscore: Non verbal, Gender, Zscore: Quantitative, ‘Quickness’ and Zscore: Verbal. In each Coefficients table, B and Std. Error are the unstandardised coefficients and Beta is the standardised coefficient.

Table iii: The written evidence test (Gott and Roberts in press)

Coefficients (Model 1; dependent variable: Zscore: Evidence test)

| | B | Std. Error | Beta | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -1.220 | .436 | | -2.797 | .007 |
| Zscore: Verbal | .218 | .150 | .212 | 1.453 | .150 |
| Zscore: Quantitative | .172 | .131 | .167 | 1.311 | .194 |
| Zscore: Non verbal | .144 | .119 | .140 | 1.207 | .231 |
| Gender | -.315 | .196 | -.153 | -1.605 | .113 |
| ‘Quickness’ | .311 | .113 | .305 | 2.758 | .007 |
| ‘Behaviour’ | .060 | .106 | .063 | .565 | .574 |

Model Summary (Model 1)

| R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|
| .709 | .503 | .463 | .754 |

Table iv: Coursework (POAE) (Gott and Roberts in press)

Coefficients (Model 1; dependent variable: Zscore: Coursework (POAE))

| | B | Std. Error | Beta | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -2.477 | .397 | | -6.244 | .000 |
| Zscore: Verbal | .334 | .137 | .337 | 2.442 | .017 |
| Zscore: Quantitative | .021 | .119 | .021 | .174 | .862 |
| Zscore: Non verbal | .076 | .108 | .077 | .705 | .483 |
| Gender | .333 | .178 | .169 | 1.864 | .066 |
| ‘Quickness’ | .123 | .102 | .125 | 1.197 | .235 |
| ‘Behaviour’ | .465 | .097 | .508 | 4.802 | .000 |

Model Summary (Model 1)

| R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|
| .745 | .554 | .519 | .686 |

Table v: Module tests: substantive ideas (Gott and Roberts in press)

Coefficients (Model 1; dependent variable: Zscore: Module scores)

| | B | Std. Error | Beta | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -.813 | .336 | | -2.417 | .018 |
| Zscore: Verbal | .453 | .116 | .443 | 3.911 | .000 |
| Zscore: Quantitative | -.023 | .101 | -.022 | -.224 | .823 |
| Zscore: Non verbal | .396 | .092 | .387 | 4.303 | .000 |
| Gender | .215 | .151 | .105 | 1.419 | .160 |
| ‘Quickness’ | .154 | .087 | .152 | 1.777 | .080 |
| ‘Behaviour’ | .028 | .082 | .030 | .345 | .731 |

Model Summary (Model 1)

| R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|
| .837 | .700 | .677 | .581 |

We have chosen, then, to look for independent variables which do two things: contribute a sensibly large share of the variance (10% or so, based on Beta > 0.3), and approach or surpass the p < 0.05 significance level.
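These two criteria can be applied mechanically to the standardised coefficients in Tables iii-v. The 10% figure follows from squaring Beta: 0.3² = 0.09, i.e. roughly 10% of the variance. Strictly, Beta² equals a predictor's share of the explained variance only when that predictor is uncorrelated with the others, so it is a rough guide where predictors such as the three reasoning scores may well be correlated. The sketch below transcribes the (Beta, Sig.) pairs from Tables iii-v and filters them; reading ‘Beta > 0.3’ as |Beta| > 0.3 and using a strict p < 0.05 cut-off are our assumptions. It reproduces the entries of Table vi.

```python
"""Apply the two selection criteria to the coefficients in Tables iii-v.

Values are (standardised Beta, Sig.) as printed in the tables. Reading
'Beta > 0.3' as |Beta| > 0.3 and using a strict p < 0.05 cut-off are
assumptions; the paper also admits predictors that 'approach' p < 0.05.
"""

COEFFICIENTS = {
    "Evidence test": {
        "Verbal": (0.212, 0.150), "Quantitative": (0.167, 0.194),
        "Non verbal": (0.140, 0.231), "Gender": (-0.153, 0.113),
        "Quickness": (0.305, 0.007), "Behaviour": (0.063, 0.574),
    },
    "Coursework (POAE)": {
        "Verbal": (0.337, 0.017), "Quantitative": (0.021, 0.862),
        "Non verbal": (0.077, 0.483), "Gender": (0.169, 0.066),
        "Quickness": (0.125, 0.235), "Behaviour": (0.508, 0.000),
    },
    "Module scores": {
        "Verbal": (0.443, 0.000), "Quantitative": (-0.022, 0.823),
        "Non verbal": (0.387, 0.000), "Gender": (0.105, 0.160),
        "Quickness": (0.152, 0.080), "Behaviour": (0.030, 0.731),
    },
}


def significant_factors(coeffs, beta_cut=0.3, p_cut=0.05):
    """Predictors meeting both criteria, ordered by the size of Beta."""
    hits = [(name, beta, p) for name, (beta, p) in coeffs.items()
            if abs(beta) > beta_cut and p < p_cut]
    return sorted(hits, key=lambda hit: abs(hit[1]), reverse=True)


for assessment, coeffs in COEFFICIENTS.items():
    for name, beta, p in significant_factors(coeffs):
        # beta ** 2 is only a rough share of variance when predictors correlate
        print(f"{assessment}: {name} (Beta {beta:.3f}, Sig. {p:.3f}, "
              f"about {beta ** 2:.0%} of variance)")
```

Loosening `p_cut` to 0.10, to admit predictors that merely approach significance, does not change the result for these tables, because no other coefficient has |Beta| > 0.3.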
Table vi: Significant factors by assessment type (Gott and Roberts in press)

| Type of assessment | Significant factors (Beta, Sig.) |
|---|---|
| Module scores | Verbal reasoning (0.44, 0.00); Non-verbal reasoning (0.39, 0.00) |
| Coursework (POAE) | ‘Behaviour’ (0.51, 0.00); Verbal reasoning (0.34, 0.02) |
| Evidence test | ‘Quickness’ (0.31, 0.01) |

Discussion

The intended outcome of GCSE assessment is to ‘measure’ pupils’ ability to know and understand science and to be able to ‘do’ science. The assumption has been that aggregating data from substantive tests and performance assessments gives a content-valid and reliable measure of all these things. The addition of paper-and-pencil testing, which is very tentative at the moment, could be seen as a broadening of the assessment.

The correlation data reported here indicate that each of these assessments is measuring something different. Performance assessment, it could be argued, relies on (in Bloomian terms) a synthesis of skills and concepts, both procedural and substantive, to arrive at a solution. The evidence test reported here, on the other hand, deals largely with individual concepts of evidence and could be described as more closely related to ‘understanding and application’ (Table vii).

Table vii: Bloomian taxonomy applied to procedural ideas

| Demand | Procedural ideas | Through? |
|---|---|---|
| Recall | … of skills | Skills tests, both practical and paper and pencil |
| Understand and apply | … concepts of evidence and skills in familiar and unfamiliar situations | The evidence test as described here |
| Synthesise | … skills, concepts of evidence (and substantive concepts) into a solution to a problem | Planning and carrying out complete tasks |

We posed the question of whether there are grounds for accepting or rejecting each of the three assessments, or a combination of them, in developing overall science assessment schemes.
The data reported above show, in this context, that there is a choice to be made between a practical coursework assessment that links to ‘behaviour’ and a switch to a paper-and-pencil evidence test which links, albeit less strongly, to ‘quickness’. Either of these, or of course some combination, together with the substantive tests, could form the basis for development in GCSE assessment, but there would, as a result, be differential effects on pupils. Attainment on substantive understanding is linked to a traditional definition of IQ; we are not dealing with the pros and cons of that element here. Ability to carry out and write up investigations is linked to ‘behaviour’ and would therefore benefit a ‘conforming’ student. Attainment on an evidence test is linked to ‘quickness’, which could benefit a student who conforms less well and relies instead on quickness of thought to solve problems.

Ideally we would want a valid and reliable assessment of performance. But, as we have seen, the constraints of the assessment process have routinised the tasks, and this may be the cause of the link reported here between performance and ‘conformity’. The evidence test, by contrast, favours the ‘conformers’ rather less. It could be argued that conformity and perseverance are precisely what we need, but to accept them by default would not be wise. We believe that there is a case for including evidence tests on the grounds that such an assessment can go some way towards identifying those pupils who have the native wit that deserves credit. An evidence test might even be used instead of attempts to assess practical performance in the way it is currently done, given that approach’s well-documented distortion of the curriculum and its inefficiency.

One final question remains for consideration. Could it not be the case that replacing a routinised practical assessment system with an evidence test might be undone by a routinisation of the evidence test itself?
Investigations have become constrained to 6 or so routine ones, ‘blessed’ by the examination boards, from which teachers choose the 2 or 3 they use for assessment. There is little variety, because these are known to be ‘safe’ from the downgrading of individual pupils’ work in the moderation process. Paper-and-pencil evidence-type questions could follow the same depressing path. We do not believe this is likely. Written questions based on the concepts of evidence list can take many contexts as their background and, even if to the expert the procedural demand is the same, it is unlikely to appear so to the novice. Recall is likely to be much less influential, and the ability to think about a problem rather more so.

Indicative Bibliography

BAXTER, G.P., SHAVELSON, R.J., DONNELLY, J., JOHNSON, S. AND WELFORD, G. (1988) Evaluation of procedure-based scoring for hands-on assessment. Journal of Educational Measurement, 29(1), pp. 1-17.

DONNELLY, J. (2000) Secondary science teaching under the National Curriculum. School Science Review, 81(296), pp. 27-35.

DONNELLY, J., BUCHANAN, A., JENKINS, E. AND WELFORD, A. (1994) Investigations in Science Education Policy. Sc1 in the National Curriculum for England and Wales (Leeds, University of Leeds).

DUGGAN, S. AND GOTT, R. (2000) Intermediate General National Vocational Qualifications (GNVQ) Science: a missed opportunity for a focus on procedural understanding? Research in Science and Technological Education, 18(2), pp. 201-214.

GOTT, R. AND DUGGAN, S. (2002) Problems with the assessment of performance in practical science: which way now? Cambridge Journal of Education, 32(2), pp. 183-201.

GOTT, R., DUGGAN, S. AND ROBERTS, R. (website) http://www.dur.ac.uk/~ded0rg/Evidence/cofev.htm

GOTT, R. AND MASHITER, J. (1991) Practical work in science: a task-based approach? In Woolnough, B.E. (ed.) Practical Science (Buckingham, Open University Press).

GOTT, R. AND ROBERTS, R. (submitted for publication) Assessment of performance in practical science and pupil attributes.

GRAY, D. AND SHARP, B. (2001) Mode of assessment and its effect on children's performance in science. Evaluation and Research in Education, 15(2), pp. 55-68.

HOUSE OF COMMONS, SCIENCE AND TECHNOLOGY COMMITTEE (2002) Science education from 14 to 19. Third report of session 2001-2, 1 (London, The Stationery Office).

KUHN, D., GARCIA-MILA, M., ZOHAR, A. AND ANDERSON, C. (1995) Strategies of Knowledge Acquisition. Monographs of the Society for Research in Child Development, Serial no. 245, 60(4) (Chicago, University of Chicago Press).

LAWRENZ et al (2001)

QCA (2002) National Curriculum Assessments from 2003. Letter from QCA to all Heads of Science, June 2002.

QCA (website) http://www.qca.org.uk/rs/statistics/gcse_results.asp

ROBERTS, R. AND GOTT, R. a (paper accepted for publication) Assessment of biology investigations. Journal of Biological Education.

ROBERTS, R. AND GOTT, R. b (paper submitted for publication) A written test for procedural understanding: a way forward for assessment in the UK science curriculum?

SOLANO-FLORES, G., JOVANOVIC, J., SHAVELSON, R.J. AND BACHMAN, M. (1999) On the development and evaluation of a shell for generating science performance assessments. International Journal of Science Education, 21(3), pp. 293-315.

STARK, R. (1999) Measuring science standards in Scottish schools: the assessment of achievement programme. Assessment in Education, 6(1), pp. 27-42.

STERNBERG, R.J. (1985) Beyond IQ: a triarchic theory of human intelligence (Cambridge, Cambridge University Press).

THORNDIKE, R.L. AND HAGEN, E. (1973) Cognitive Abilities Test (Windsor, NFER Nelson).

TYTLER, R., DUGGAN, S. AND GOTT, R. (2000) Dimensions of evidence, the public understanding of science and science education. International Journal of Science Education, 22(8), pp. 815-832.

TYTLER, R., DUGGAN, S. AND GOTT, R. (2001) Public participation in an environmental dispute: implications for science education. Public Understanding of Science, 10, pp. 343-364.

WELFORD, G., HARLEN, W. AND SCHOFIELD, B. (1985) Practical testing at Ages 11, 13 and 15 (London, Department of Education and Science).
