The Auditory System


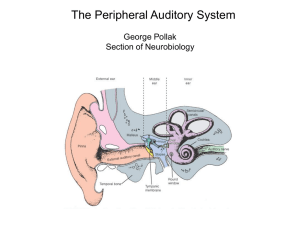

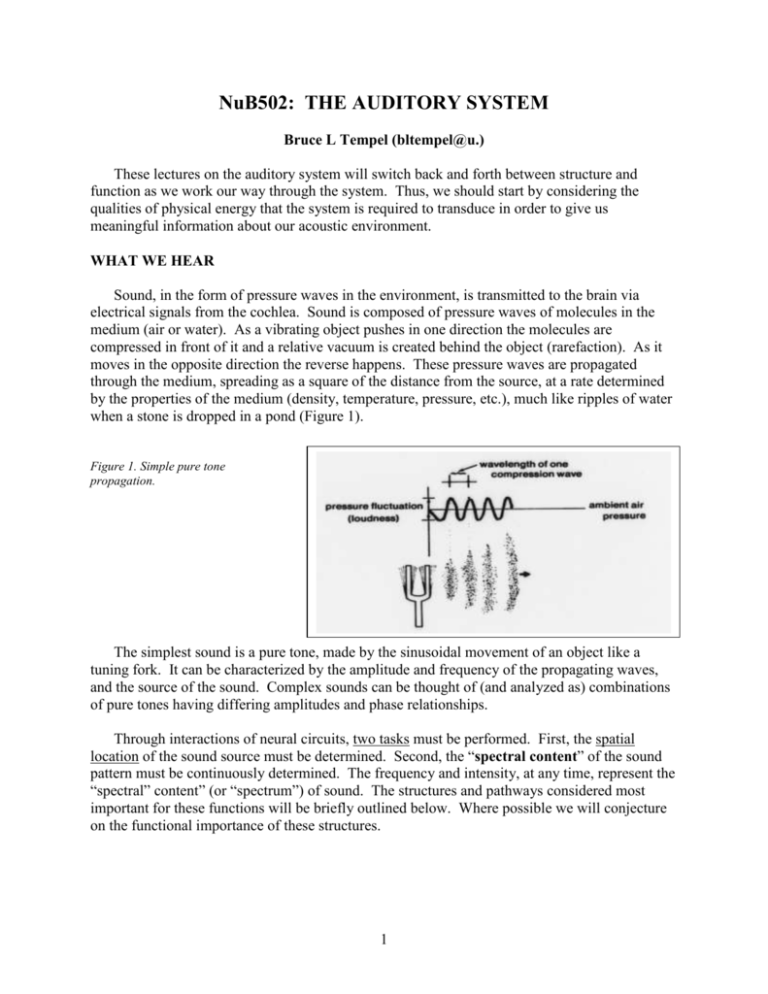

NuB502: THE AUDITORY SYSTEM
Bruce L Tempel (bltempel@u.)

These lectures on the auditory system will switch back and forth between structure and function as we work our way through the system. Thus, we should start by considering the qualities of physical energy that the system is required to transduce in order to give us meaningful information about our acoustic environment.

WHAT WE HEAR

Sound, in the form of pressure waves in the environment, is transmitted to the brain via electrical signals from the cochlea. Sound is composed of pressure waves of molecules in the medium (air or water). As a vibrating object pushes in one direction, the molecules are compressed in front of it (condensation) and a relative vacuum is created behind the object (rarefaction). As it moves in the opposite direction the reverse happens. These pressure waves are propagated through the medium at a rate determined by the properties of the medium (density, temperature, pressure, etc.), much like ripples of water when a stone is dropped in a pond (Figure 1). As the waves spread out from the source, their intensity falls off with the square of the distance.

Figure 1. Simple pure tone propagation.

The simplest sound is a pure tone, made by the sinusoidal movement of an object like a tuning fork. It can be characterized by the amplitude and frequency of the propagating waves, and the source of the sound. Complex sounds can be thought of (and analyzed as) combinations of pure tones having differing amplitudes and phase relationships.

Through interactions of neural circuits, two tasks must be performed. First, the spatial location of the sound source must be determined. Second, the “spectral content” of the sound pattern must be continuously determined. The frequency and intensity, at any time, represent the “spectral content” (or “spectrum”) of sound. The structures and pathways considered most important for these functions will be briefly outlined below. Where possible we will conjecture on the functional importance of these structures.
Three independent parameters of an acoustic stimulus must be considered.

1. Frequency: Defined on the basis of the number of cycles per second of a simple sine wave. The period of a simple sinusoidal wave is 1/frequency. Units: cycles per second (cps) or Hertz (Hz). The wavelength of a particular frequency component depends on the medium and is calculated as the period x velocity of sound propagation in the medium. The average “normal” human range of frequency sensitivity is 20 Hz to 20 kHz. There are very wide species variations in the frequencies that can be heard. Among vertebrates, mammals, in general, are high frequency specialists, some hearing above 100 kHz. Complex sounds can be represented by a Fourier analysis showing the relative contributions of each frequency or frequency band.

2. Amplitude (or Intensity): Technically, refers to the amount of displacement from peak to peak (see Figure 1) in one cycle that the source moves around its equilibrium point. In practice we measure it as force (pressure) at the object. The intensity range of hearing, from the softest sound we can detect to the loudest sound we can analyze, is so large that it is not practical to express it on an equal interval scale. Therefore sound intensity is usually measured and defined on the basis of relative force, or a ratio scale (in this case a log10 scale). In general the basis (reference level) for this ratio is the force required for human auditory threshold at 1000 Hz: 0.0002 dynes/cm². Intensity units: decibels (dB).

$$X\,(\mathrm{dB}) = 20 \log_{10}\left(\frac{\text{measured pressure}}{\text{reference pressure}}\right)$$

When referenced to human threshold (0.0002 dynes/cm²), amplitudes are noted in dB (SPL), where SPL = Sound Pressure Level. The convenient rule of thumb is that every 6 dB increase in measured level corresponds to a doubling of the sound pressure.
Range of human sensitivity is the astronomical 0 to 120 dB (SPL); i.e., from the softest to the loudest sound we can deal with, a ratio of approximately 1 to 1 million in pressure (1 to 1 trillion in intensity).

3. Sound Source: The location of a sound is defined by the direction of its source relative to the listener. Units: degrees azimuth on the horizontal plane (0-360).

When sound encounters an object it can be reflected, absorbed or pass through (or around) the object. The result will depend on the density of the object and the size of the object. For practical purposes in air, sound will pass around objects that are smaller than one wavelength, and objects that are larger than one wavelength will form a barrier, casting a “sound shadow” behind them. This becomes important for understanding how the brain calculates the position of sound in space. Consider that the speed of sound in air is approximately 340 m/sec.

Wavelength:
1000 Hz = 34 cm
500 Hz = 68 cm
440 Hz (the A above middle C) = 77 cm

Figure 2: The lower curve shows the minimum audible sound-pressure level for human subjects as a function of frequency. The upper curve shows the upper limits of dynamic range, the intensity at which sounds are felt or cause discomfort. (After Durrant, J.D.; Lovrinic, J.H. Introduction to psychoacoustics. In Durrant, J.D.; Lovrinic, J.H., eds. Bases of Hearing Science. 138-169. Baltimore, Williams & Wilkins, 1984.)

SENSORY SYSTEM GENERIC ORGANIZATION

Figure 3.

PERIPHERAL SPECIALIZATIONS

1. Outer Ear

Airborne sound waves consist of alternating rarefaction and condensation of airborne molecules. These relatively large, low force environmental patterns must be converted to high force, small volume changes at the oval window. (Consider a high note [e.g. 1000 Hz]. At standard atmospheric pressure one cycle is over a foot long. Lower frequencies, for example those in the speech range, have progressively longer wavelengths.) This task is accomplished by the structures comprising the external and middle ear.
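The decibel and wavelength relations introduced earlier in this section can be checked numerically. The following is a minimal sketch, not part of the original notes: the function names are hypothetical, the reference pressure (0.0002 dynes/cm²) and the speed of sound (340 m/sec) are the values quoted in the text, and wavelength is computed as period x velocity.

```python
import math

REFERENCE_PRESSURE = 0.0002  # dynes/cm^2, human threshold at 1000 Hz (value from the text)
SPEED_OF_SOUND_AIR = 340.0   # m/s, approximate value from the text


def db_spl(measured_pressure, reference_pressure=REFERENCE_PRESSURE):
    """Sound pressure level in dB: 20 * log10(measured / reference)."""
    return 20.0 * math.log10(measured_pressure / reference_pressure)


def pressure_ratio(level_db):
    """Pressure ratio that corresponds to a level difference in dB."""
    return 10.0 ** (level_db / 20.0)


def wavelength_cm(frequency_hz, speed_m_per_s=SPEED_OF_SOUND_AIR):
    """Wavelength in centimeters: period (1/frequency) x propagation velocity."""
    return 100.0 * speed_m_per_s / frequency_hz


if __name__ == "__main__":
    for frequency in (1000, 500, 440):
        print(f"{frequency} Hz -> {wavelength_cm(frequency):.0f} cm")
    for level in (6, 120):
        print(f"{level} dB -> pressure ratio {pressure_ratio(level):.3g}")
```

Because the scale is defined on pressure with a factor of 20, a 6 dB step comes out very close to a factor of 2 in pressure, and the full 120 dB range corresponds to a pressure ratio of 10^6.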
Figure 4: The external ear, composed of the pinna and ear canal, protects the eardrum and directs sound waves toward it.

These elements alter the acoustic spectrum reaching the eardrum differentially depending on the frequency and on the relative location of a sound source. See Figure 5: a sound emanating from the front will be enhanced by as much as 16 dB in the 1.6-10 kHz range at the eardrum; it can also be reduced by 212 dB at high frequencies.

Figure 5: The difference between the sound levels produced at the tympanic membrane and those produced at the same point in space with the person absent is plotted as a function of the frequency of the constant sound source. The curve results mainly from ear canal and pinna resonance. (After Shaw, E.A.G. Transformation of sound pressure level from the free field to the eardrum in the horizontal plane. Acoust. Soc. Am. 56:1848-1861, 1974.)

2. Middle Ear

The impedance mismatch between air and the fluid-filled cochlea would result in very little sound absorption without some mechanism to increase pressure at the oval window. Calculations suggest that this transmission loss would be on the order of 99.9%, which agrees well with clinically derived values of 30 dB hearing loss following fenestration of the stapedial footplate in patients with otosclerosis. The middle ear ossicles (malleus, incus, stapes) work in concert to provide a mechanical transformer (see Figure 6).

Figure 6: The impedance matching mechanisms of the middle ear. Both the difference in length between malleus handle (L1) and incus long process (L2) and the much larger ratio of areas of tympanic membrane (A1) to stapes footplate (A2) are shown. (After Abbas, P.J. Physiology of the auditory system. In Cumming, C.W., ed. Otolaryngology, Head and Neck Surgery, 2633-2677, St. Louis, C.V. Mosby, 1986.)

The malleus attaches to the medial surface of the tympanic membrane and articulates with the incus.
Attached to the other end of the incus is the head of the stapes. The footplate of the stapes is inserted into the oval window of the cochlea. The transformer action of the middle ear bones results principally from two factors. First and most important is the ratio between the sizes of the tympanic membrane and the footplate of the stapes (about 20:1). This ratio means that the movements of the tympanic membrane will be concentrated on a much smaller area at the oval window. The second factor is the lever ratio of the ossicular chain. Large movements of the tympanic membrane result in smaller, more forceful, movements of the stapes. In all, the mechanical transformer action of the middle ear produces a gain of approximately 70-100 fold. Its significance can be clearly appreciated by the fact that the coefficient of sound transmission from the tympanic membrane to the cochlea is increased from less than 0.1% to over 50%.

The middle ear can be thought of as a “transformer”. It dramatically increases the mechanical energy focused onto the fluid-filled inner ear, thereby partially alleviating the impedance mismatch between air and water.

Attached to the ossicles are two small muscles, the tensor tympani and the stapedius. The tensor tympani attaches to the malleus and the stapedius attaches to the stapes. The trigeminal nerve innervates the tensor tympani muscle while the facial nerve innervates the stapedius muscle. Contraction of either muscle decreases sound transmission through the middle ear by placing increased tension on the middle ear conduction system. The middle ear muscles respond to a variety of stimuli, the most important of which are vocalizations and moderate to loud acoustic stimulation in either ear. The latter response is the acoustic middle ear reflex, a 3-5 neuron brainstem circuit elicited by stimuli at or above 80 dB above threshold. This reflex culminates in a graded contraction of the middle ear muscles.
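As an aside on the transformer figures quoted above, the correspondence between a transmitted fraction and a loss in decibels, and the pressure gain expected from an area ratio, can be worked through in a few lines. This is a minimal sketch under stated assumptions: the function names are hypothetical, the middle ear is treated as an ideal transformer, and the lever ratio of 1.3 is an illustrative value that the text does not give.

```python
import math


def transmission_loss_db(fraction_transmitted):
    """Loss in dB when only this fraction of the incident sound energy is transmitted."""
    return -10.0 * math.log10(fraction_transmitted)


def pressure_gain_db(area_ratio, lever_ratio=1.0):
    """Pressure gain in dB of an ideal transformer: 20 * log10(area ratio x lever ratio)."""
    return 20.0 * math.log10(area_ratio * lever_ratio)


if __name__ == "__main__":
    # A 99.9% transmission loss means that 0.1% of the energy gets through.
    print(f"0.1% transmitted -> {transmission_loss_db(0.001):.1f} dB loss")
    print(f"50% transmitted  -> {transmission_loss_db(0.5):.1f} dB loss")
    # The area ratio of about 20:1 is from the text.
    print(f"area ratio 20:1  -> {pressure_gain_db(20.0):.1f} dB pressure gain")
    # ASSUMPTION: a lever ratio of 1.3 is used purely for illustration.
    print(f"with lever 1.3   -> {pressure_gain_db(20.0, 1.3):.1f} dB pressure gain")
```

The first line of output reproduces the agreement noted in the text between a 99.9% transmission loss and a hearing loss of about 30 dB.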
At maximal contraction of the stapedius muscle, sound transmission is damped by a factor of 0.6-0.7 dB/dB of increased sound pressure at the eardrum. The acoustic middle ear reflex probably serves several functions:

1. to protect the cochlea from overstimulation;
2. to increase the dynamic range of hearing; and
3. to reduce masking of high frequencies by low frequency sounds.

Taken together the latter two functions will significantly improve speech discrimination, especially in noisy environments. In addition to loud sounds and vocalizations, contraction of the middle ear muscles may be elicited by nonacoustic exogenous and endogenous stimuli, for example cutaneous stimulation around the ear or eye. Some individuals can voluntarily contract their middle ear muscles.

3. The Cochlea

The transduction of mechanical energy to electrochemical neural potentials takes place in the cochlea (auditory portion of the inner ear). The osseous or periotic cochlea is a bony, fluid-filled tube enclosed within the petrous portion of the temporal bone, and coiled around a conical pillar of spongy bone called the modiolus. Embedded in the modiolus are the cell bodies of the spiral ganglion cells, whose axons make up the auditory portion of the eighth nerve. Within the cochlea are three longitudinal chambers (scalae). Fluid motions set up at the oval window by movement of the stapedial footplate are dissipated at the round window. Between the scala vestibuli and scala tympani is the scala media, or cochlear duct, containing the auditory receptor, the Organ of Corti (see conceptual buildup in Figure 7). Lining the lateral wall of the cochlear duct are the cells of the stria vascularis, which maintain the ionic difference between the perilymph within the scala vestibuli and tympani and the endolymph within the cochlear duct.
The mammalian cochlea is a three-chambered spiraled tube which is about 34 mm long in humans and has anywhere between 1.5 and 3.5 turns, depending on the species (2.5 in humans). The principles of transduction are shown in the following figures. Fluid pressure changes are initiated by the vibratory pattern of the stapes at the oval window adjacent to the scala vestibuli, and “released” at the round window. The middle compartment, scala media, contains the Organ of Corti and the stria vascularis. The organ of Corti is situated on the basilar membrane and contains the transduction apparatus, which can be thought of as a repeating structure containing 1 inner hair cell and 3 outer hair cells, plus a complex group of associated support cells and structures. Hence, the structures shown in Figures 8 and 9 below are repeated all along this tube, resulting in a single row of inner hair cells (~3,500 in humans) and 3 rows of outer hair cells (~12,000 in humans). The stria vascularis provides the high potassium concentration and resulting resting potential (~ +80 mV) of the scala media. This sets up an enormous potential difference (driving force) of about 150 mV across the apical surfaces of the inner and outer hair cells.

Figure 7: Drawings showing the fundamental plan of the cochlea. A: The cochlea represented as a fluid-filled container with an elastic membrane (oval and round window membranes) covering each end. The rigid wall of the container corresponds to the bony capsule of the cochlea. B: A driving piston, the stapes, and a middle elastic membrane, the basilar membrane, are added. C: Sensory (hair) cells with attached nerve fibers are placed on the basilar membrane, along with a hole (the helicotrema). D: The wall of the cochlea near the helicotrema is extended; its mechanical properties vary systematically with longitudinal position along the membrane. The sensory cells are differentiated into inner and outer hair cells.
The auditory nerve fibers shown are radial fibers that innervate the base of inner hair cells. A far smaller proportion of fibers (not shown) branch extensively to innervate outer hair cells. E: The elongated cochlea is coiled along its length to arrive at a simplified model of the mammalian cochlea.

The actual transduction of mechanical to electrical potentials takes place in the hair cells. Differential movements of the basilar membrane and the overlying tectorial membrane are thought to cause minute shearing forces on the hair cell stereocilia bundles. When the bundle moves in one direction (toward the longest stereocilia) transduction channels are opened, allowing influx of K+ and Ca++ ions (Figures 10 and 11). Channels close when the bundle is moved in the opposite direction. This process has been studied in considerable detail, but the details are not the subject here. Suffice it to say that the hair cells and supporting structures are highly specialized for remarkable sensitivity and rapid response properties.

Figure 8: Top: Cross section of cochlear duct. The boundaries of the scala media with the scala vestibuli and with the scala tympani are Reissner's membrane and the basilar membrane respectively. Bottom: Organ of Corti showing most important structures and cell types. (Figure labels: 150 mM K+, +80 mV; 4 mM K+, 0 mV; reticular lamina (tight junctions); permeable.)

Figure 9: The cochlea contains the Organ of Corti, which rests on the basilar membrane and contains hair cells that are surrounded by an elaborate network of supporting cells. There are two types of hair cells: inner hair cells and outer hair cells. The hair cells are innervated at their bases by afferent fibers. These nerve fibers have cell bodies in the spiral ganglion. Efferent fibers from the central nervous system synapse on afferent terminals beneath the inner hair cells and on the bases of the outer hair cells.
Figure 10: Diagram showing how an "upward" (toward scala vestibuli) displacement of the cochlear partition can create a shearing force tending to bend hair cell stereocilia in an excitatory direction. (After Ryan, A., Dallos, P. Physiology of the Inner Ear. In Northern, J.L. ed. Communicative Disorders: Hearing Loss, 80-101. Boston. Little, Brown & Co., 1976.)

Figure 11: Deflection of the hair bundle toward the tallest row of stereocilia opens poorly selective cationic channels near the stereocilia tips. A, Influx of potassium depolarizes the cell. B, Voltage-sensitive calcium channels open in turn, permitting C, neurotransmitter release across the synapse to the afferent neuron. (After Hudspeth, A.J. The hair cells of the inner ear. Sci. Am. 248:54-64, 1983.)

Functionally, an extremely important feature of the cochlear duct is that the spectral pattern of footplate vibration at the oval window is translated into a spatial pattern of basilar membrane movement ("The cochlea performs a spectral to spatial transformation"). The basilar membrane is narrowest near the oval window (basal portion) and gradually widens toward the apex (Figure 12). Due to this fact and the associated changes in stiffness, high frequency sounds produce maximal displacement of the basilar membrane toward its basal end and progressively lower frequencies produce maximal movement toward the apex. This translation of acoustic frequency into a spatial array was first elegantly demonstrated by George von Békésy. (Using stroboscopic illumination of the basilar membrane, von Békésy was able to view its movements through a microscope. He found that acoustic stimuli produced deflections of the basilar membrane with a period equal to that of the stimulating tone, and that these deflections travel down the cochlear duct in a wave-like motion (Figure 13). The shape of the "traveling wave", the distance it travels from the oval window, or base of the cochlea, and the position of maximum deflection are all dependent on frequency.)
High frequencies produce relatively narrow waves that have their maximum deflection near the oval window and then are rapidly damped. As the frequency of the stimulating tone is decreased, the wave front becomes progressively broader, the position of maximum displacement moves apically, and the traveling wave moves progressively further down the cochlear partition. Thus, the cochlea is seen to be a very efficient acoustic spectrum analyzer, transforming the spectrum of mechanical movements at the oval window into a spatial array of basilar membrane deflections (Figures 14 - 17). The hair cells, in line along the basilar membrane, are maximally activated by maximal deflections of the membrane, thus preserving the tonotopicity. At the base of the hair cells are found synaptic specializations which are thought to use glutamate as the afferent transmitter. Very importantly, the general principle of a spatial representation of frequency (tonotopicity), which is first manifest in the movements of the basilar membrane, is preserved throughout the entire auditory system and is probably responsible for behavioral frequency selectivity.

Figure 12: The snail-shaped cochlea is straightened out, and the basilar membrane is shown as a tapered ribbon. An instantaneous picture of the traveling wave is indicated by the shape of the basilar membrane, assuming the sound input is a pure tone. The amplitude of the traveling wave is grossly exaggerated.

Figure 13: Traveling waves in the cochlea. The full lines show the pattern of the deflection of the cochlear partition at successive instants, as numbered. The waves are contained within an envelope which is static (dotted lines). Stimulus frequency: 200 Hz. From von Békésy (1960, Fig 12.17).

Figure 14: The dimensions of the basilar membrane change along its length. In this surface view, the basilar membrane is shown diagrammatically as if it were uncoiled and stretched out flat.
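The spectral to spatial transformation described above is often summarized by a place-frequency map. The sketch below uses a Greenwood-type function with the commonly cited human constants (A = 165.4, a = 2.1, k = 0.88); these constants and the function name are assumptions brought in for illustration and do not come from these notes. The 34 mm length is the value given in the text, and position is measured from the base (oval window end).

```python
# ASSUMPTION: Greenwood-type human place-frequency constants, not from the notes.
GREENWOOD_A = 165.4
GREENWOOD_ALPHA = 2.1
GREENWOOD_K = 0.88
COCHLEA_LENGTH_MM = 34.0  # approximate human length quoted in the text


def best_frequency_hz(distance_from_base_mm, length_mm=COCHLEA_LENGTH_MM):
    """Approximate frequency of maximal basilar membrane response at a given place."""
    if not 0.0 <= distance_from_base_mm <= length_mm:
        raise ValueError("position must lie between the base and the apex")
    # The Greenwood function is written in terms of the proportion of length from the apex.
    proportion_from_apex = 1.0 - distance_from_base_mm / length_mm
    return GREENWOOD_A * (10.0 ** (GREENWOOD_ALPHA * proportion_from_apex) - GREENWOOD_K)


if __name__ == "__main__":
    for position_mm in (0.0, 8.5, 17.0, 25.5, 34.0):
        print(f"{position_mm:5.1f} mm from base -> {best_frequency_hz(position_mm):8.0f} Hz")
```

With these assumed constants the map runs from roughly 20 kHz at the base to roughly 20 Hz at the apex, consistent with the range of human hearing given earlier, and each equal step along the membrane covers an approximately constant frequency ratio over most of its length.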
Figure 15: Plots of data from von Békésy's experiments on the mechanics of the basilar membrane show that the peak amplitude of the traveling wave occurs at different points for different frequencies. Note that these curves reflect only the overall envelope of motion of a complex wave along the basilar membrane.

Figure 16: Schematic of traveling wave to three different frequencies. Note that the envelope is broader and travels further toward the apex as the frequency is lowered. Depicted with “unrolled” cochlea.

Figure 17: Frequency organization of human cochlea.

Von Békésy’s original observations suggested that the traveling wave is very broadly tuned to frequency, which was in sharp contrast to the very sharp frequency tuning near threshold of the axons of the cochlear nerve (see below). Von Békésy was studying cadavers and dead animals. Modern instrumentation, allowing direct visualization of the basilar membrane motion in live preparations, has shown that at low sound levels the tuning of basilar membrane motion matches the properties of 8th nerve axons. This sharp tuning is referred to as frequency specificity and is the result of an active process that enhances response amplitudes at low sound levels, but progressively reduces this enhancement as sound level is increased: a “compressive nonlinear” amplification (Figures 18, 19).

Figure 18: Tuning of basilar membrane response. Note that tuning is much sharper at low intensities than at high intensities (e.g. 80 dB). Note also that the response is highly nonlinear at the optimum frequency (14 kHz) but linear at low frequency (3 kHz).

Figure 19: Response envelope of basilar membrane to low level stimulus at a particular frequency. Note that the contribution of the outer hair cells (cochlear amplifier) increases the response selectively at one place along the basilar membrane. (Figure labels: Threshold; Amp; Cochlear Amplifier; Base; Apex; Ganglion Cells; Auditory Nerve.)

4. The Role of Outer Hair Cells

In mammals, there exist two types of hair cells, inner and outer. Their detailed structures, functions and innervation patterns are quite different (as discussed below) but both convert mechanical energy into electrical signals. To accomplish this, both depend on stereocilia with mechanically gated ion channels that open, allowing cations (K+ and Ca++) to enter and depolarize the cell. The battery powering this process is provided by the relatively high concentration of K+ in the endolymph, establishing an electrochemical gradient with respect to the hair cell and perilymph.

Figure 20: Inner and outer hair cell. Note that the afferent terminal on the inner hair cell is contacted by an efferent fiber, while efferent fibers directly contact outer hair cells.

Almost all of the information getting to the brain comes from the inner hair cells via the spiral ganglion cells. The outer hair cells, however, seem to play a critical role in terms of both the sensitivity and tuning of the cochlea. Figure 18 shows the mechanical tuning of one place along the living cochlea. Without the outer hair cells the sharp tuning and high sensitivity (i.e. response to 20 and 40 dB) are lost. Most hearing loss is due to loss of outer hair cells. The nonlinearity of this "cochlear amplifier" can be measured acoustically in the form of otoacoustic emissions, sounds measured in the ear canal.

Figure 21. Activity in Outer Hair Cells.

Figure 22. Tones emitted in response to the primary tones, f1 and f2, are dependent on OHC activity.

5. Neural Innervation of the Hair Cells

The precise pattern of hair cell innervation has been extensively studied during the past two decades. At the base of the hair cells two types of ending can be recognized. The afferent endings, opposite the above mentioned synaptic specializations in the hair cells, are the distal dendrites of the cochlear ganglion cells, whose cell bodies are embedded in the bony modiolus along the length of the cochlea.
The second type of ending has synaptic vesicles within the nerve terminal and can be stained for acetylcholinesterase or choline acetyltransferase and is therefore thought to be efferent and presynaptic to the hair cells. These efferent terminals have their cell bodies near the superior olivary nuclei of the brainstem and comprise the final termination of the axons of the olivo-cochlear system.

Figure 23. Innervation of inner and outer hair cells by spiral ganglion neurons.

The distribution of afferent and efferent endings on the outer and inner hair cells differs strikingly. The vast majority of spiral ganglion cells (95%) terminate on inner hair cells. Only about 5% of these afferent fibers cross the tunnel of Corti to receive synaptic connections from the outer hair cells.

6. Spiral Ganglion Cells and Auditory Nerve

The spiral ganglion cells are distributed within the spiral (Rosenthal's) canal of the modiolus, along most of the length of the cochlea. (In man there are about 23,000 spiral ganglion cells.) The distal processes of the ganglion cells (dendrites) pass through the habenula perforata and become unmyelinated prior to entering the Organ of Corti. The proximal processes (axons) form the auditory portion of the eighth cranial nerve. After passing through the central core of the modiolus the axons form the cochlear nerve that travels with the vestibular and facial nerves in the internal auditory meatus. The axons are myelinated throughout their length. Just before entering the cranial cavity Schwann cells are replaced by neuroglia cells. Upon entering the cranium the auditory and vestibular fibers segregate to arrive at their neural targets.

Physiological studies on the response properties of cochlear nerve fibers of experimental animals (cats and monkeys) show that:

1. Most fibers have a moderate spontaneous activity that appears to be abolished by hair cell destruction.
2. There is a single excitatory best frequency to which the fibers respond at lowest intensity.
3. The fibers have simple V- or U-shaped excitatory "tuning curves" surrounding the best frequency.
4. The fibers display a monotonic increase in firing rate up to a maximal level as the intensity of the preferred tone is increased and then show a constant or slightly reduced firing rate.

In addition, the spike train of most fibers that respond best to low frequencies is time locked to the sinusoid of the stimulating tone so that the intervals between successive spikes tend to be at the period of the sinusoid or some integer multiple thereof (Figure 27). This latter property is termed "phase-locking" and is probably important both for low frequency sound localization and for frequency discrimination at normal listening levels.

Figure 24. Action potentials (spikes) recorded in 8th nerve in response to various sound stimuli.

Figure 25: "Tuning curve" of individual auditory nerve axon.

Figure 26: Increasing rate of spike discharge for auditory nerve axon with increasing stimulus levels. Variable is frequency of stimulus (kHz).

Figure 27: Spike train of auditory nerve axon, showing "phase locking". (Horizontal axis: time.)

Figures 28 and 29: Post Stimulus Time Histograms

8. Efferent Control of Cochlear Output

Within the auditory system information processing cannot be considered a simple sequential event. Instead, the functional organization is best characterized by a system of feedback loops whereby the information that is transmitted through the central nervous system is being continually modified on the basis of the immediately preceding auditory events, other sensory inputs, and internal events. It was noted earlier that at the first stage of auditory processing, the middle ear, the nervous system regulates the amount of mechanical energy impinging on the cochlea through contractions of the middle ear muscles.
This same influence is seen at the level of the receptor cell output where the terminations of the olivocochlear bundle can markedly influence the neural events transmitted to the central nervous system. Although the degree of specificity with which the olivocochlear efferents influence information processing has not been determined, the effects of gross stimulation on cochlear output have been investigated. Electrical stimulation of the olivocochlear bundle during sound presentation markedly reduces the compound eighth nerve potential, and reduces single fiber responses in the eighth nerve. In the middle frequency range the maximum inhibition of cochlear output is equivalent to approximately a 25 dB reduction in acoustic stimulus intensity. The normal biological significance of this feedback loop is not known for certain. The current opinion is that it may function to enhance the signal-to-noise resolving power of the inner ear, especially under noisy conditions.

ORGANIZATION OF THE CENTRAL AUDITORY PATHWAYS

The anatomical subdivisions and connections of the mammalian auditory system are both complex and not fully understood. Many excellent laboratories studying a variety of animals offer new data each year regarding both the macro- and micro-circuitry of the central auditory pathways as well as physiologic and behavioral data on the possible functions of various subcircuits. It will take many more years (or decades) to unravel all of these pathways and determine their relevance within the human brain.

In this limited overview the major pathways are subdivided into binaural and monaural. This classification should not be considered either exclusive or exhaustive but is an attempt to define broad classes of functionally related pathways. It breaks down altogether in the forebrain (thalamus and cerebral cortex) which will be considered separately. Within each system there are as many descending pathways as ascending pathways.
The descending pathways undoubtedly provide feedback, feedforward, and gating information, markedly influencing information flow.

Auditory nerve fibers enter the brain stem at the cerebellopontine recess. The fibers pass the inferior portion of the restiform body and enter the cochlear nucleus. Immediately upon entering the cochlear nucleus, most, if not all, axons bifurcate. The ascending branch enters the anteroventral cochlear nucleus; the descending branch projects to the posteroventral and the dorsal cochlear nuclei.

The Anteroventral Cochlear Nucleus (AVCN) initiates what are called the binaural brain stem pathways (Figure 30). Throughout most of this nucleus the cochlear nerve fibers terminate on the cell bodies of spheroid or globular shaped neurons with large calyceal endings (end bulbs of Held) that engulf a large portion of the postsynaptic cellular surface. These endings appear specialized for highly reliable and rapid transmission of nerve impulses from the cochlea. The major output of AVCN is to the cell groups of the ipsilateral and contralateral superior olivary complex. Here binaural information first converges. Within the superior olivary complex many cellular populations have been identified, only a few of which are discussed here.

Medial Superior Olivary Nucleus (MSO). The MSO is a dorsoventrally aligned sheet of cells with bipolar dendrites. The medially radiating dendrites receive input from the contralateral AVCN and the lateral dendrites are innervated by fibers from the ipsilateral AVCN. Each of these inputs is excitatory, primarily low frequencies are represented, and the cells respond best to a similar frequency presented to each ear. These attributes are consistent with the finding that cells of the medial superior olivary nucleus respond differentially to binaural time or phase differences, known to be important for localization of low frequency sounds.
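To give a sense of the size of the binaural time and phase differences just mentioned, the following minimal sketch uses a simple straight-path approximation: the extra path to the far ear is the ear separation times the sine of the azimuth. The function names, the ear separation of 0.18 m, and the convention that 0 degrees azimuth is straight ahead are assumptions made for illustration; the speed of sound (340 m/sec) is the value used earlier in these notes.

```python
import math

SPEED_OF_SOUND_AIR = 340.0  # m/s, value used earlier in the notes
EAR_SEPARATION_M = 0.18     # ASSUMPTION: illustrative distance between the ears


def itd_seconds(azimuth_deg, ear_separation_m=EAR_SEPARATION_M,
                speed_m_per_s=SPEED_OF_SOUND_AIR):
    """Interaural time difference for a distant source (0 degrees = straight ahead)."""
    return ear_separation_m * math.sin(math.radians(azimuth_deg)) / speed_m_per_s


def ipd_degrees(frequency_hz, itd_s):
    """Interaural phase difference, in degrees, produced by a given time difference."""
    return 360.0 * frequency_hz * itd_s


if __name__ == "__main__":
    for azimuth in (0, 30, 60, 90):
        itd = itd_seconds(azimuth)
        print(f"azimuth {azimuth:2d} deg -> ITD {itd * 1e6:5.0f} microseconds, "
              f"phase difference at 500 Hz {ipd_degrees(500.0, itd):5.1f} deg")
```

Under these assumptions the largest time difference is on the order of half a millisecond. At low frequencies this is a fraction of one period, so the phase difference identifies the direction unambiguously, whereas at high frequencies the same delay spans more than half a cycle and the phase cue becomes ambiguous.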
The relatively large size of this nucleus in man may be related to the importance of localizing speech signals. The primary output of MSO is into the ipsilateral lateral lemniscus, to terminate in the inferior colliculus.

Medial Nucleus of the Trapezoid Body (MNTB) and Lateral Superior Olivary Nucleus (LSO). The second major brain stem circuit involved in binaural information processing involves the AVCN projections to these nuclei. The lateral superior olive receives an ipsilateral excitatory input from cells in the ventral cochlear nucleus which respond best to high frequencies. The contralateral input to these cells is disynaptic. High frequency responsive AVCN cells send heavily myelinated axons across the midline in the trapezoid body to synapse on large neurons in the MNTB. This rapidly conducting pathway then projects to the homolateral LSO and is inhibitory. Thus, the neurons of the lateral superior olive receive excitatory input from the ipsilateral ear and inhibitory input from the contralateral ear. Since the spectral content of high frequency sounds reaching the eardrum varies considerably as a function of the location of the sound source, the "push-pull" organization of these projections would seem to provide amplification of such cues. This is consistent with the fact that the LSO, although small in man, is large in animals such as bats and some rodents that are specialized for high frequency sound communication and localization. The major outflow from the LSO neurons is into the lateral lemniscus bilaterally, to terminate in the inferior colliculus.

Periolivary Nuclei. In the region of the superior olivary nuclei there are a number of smaller dispersed cell groups which also receive bilateral input from the cochlear nuclei. The precise nature of the afferent input to these cells is poorly understood in man. At least some of these cells are the origin of the olivocochlear bundle, and other cells in this region project back to the cochlear nuclei.
Efferent projections from the inferior colliculus to these nuclei are abundant.

Nuclei of the Lateral Lemniscus. As the lateral lemniscus ascends the dorsolateral brain stem, two cell groups are intermingled among the fibers: the dorsal and ventral nuclei of the lateral lemniscus. Little is known about the function of the lateral lemniscal nuclei.

Inferior Colliculus. The inferior colliculi are the paired elevations forming the caudal half of the tectal plate. Projections from all of the nuclei mentioned above converge on this region. In addition, direct projections from the contralateral anteroventral cochlear nucleus converge on the same region of the inferior colliculus that receives olivary input. The two colliculi communicate with each other through the collicular commissure. Cells in the inferior colliculus respond differentially to binaural time and intensity differences, and lesions of this area disrupt sound localization on the contralateral side. In addition to the ascending binaural pathways stressed here, it should be noted that most of these cell groups receive descending input from the midbrain and possibly from other regions of the central nervous system (Figure 32).

Figure 30: Binaural auditory pathways in the brain stem illustrated for the left cochlear nucleus. The connections from the other cochlear nucleus would form a mirror image. AVCN = anteroventral cochlear nucleus; PVCN = posteroventral cochlear nucleus; LSO = lateral superior olive; MSO = medial superior olive; MNTB = medial nucleus of the trapezoid body; VLL = ventral nucleus of the lateral lemniscus; DLL = dorsal nucleus of the lateral lemniscus; IC = inferior colliculus; MG = medial geniculate nucleus.

MONAURAL PATHWAYS OF THE BRAINSTEM

In parallel with the binaural pathways terminating in the inferior colliculus there is a system of chiefly monaural pathways.
Axons from the dorsal cochlear nucleus project into the lateral lemniscus via the dorsal acoustic stria (of Monakow) to end in the contralateral inferior colliculus. Collaterals of these fibers (or other axons) also terminate in one of the lateral lemniscus nuclei. Similar projections are thought to arise from cells of the posteroventral cochlear nucleus whose axons cross the midline in the intermediate acoustic stria (of Held) and issue collaterals to the periolivary nuclei. The dorsal cochlear nucleus also receives a variety of inputs from other auditory nuclei as well as nonauditory centers. For example, the dorsal cochlear nucleus receives descending input from the inferior colliculus bilaterally, from the ventral cochlear nucleus, from the cerebellum, from the reticular formation, and probably from several other midbrain and brainstem regions. Cells in the dorsal cochlear nucleus have complex coding characteristics.

FOREBRAIN AUDITORY PATHWAYS (FIGURE 31)

As indicated above, the inferior colliculus appears to be an obligatory synapse in the auditory pathways. That is, brainstem pathways projecting to the forebrain terminate in the inferior colliculus, as do descending pathways from the forebrain bound for auditory regions of the brain stem. Thus, consideration of the forebrain auditory system begins with the ascending brachia of the inferior colliculus, which have their origin in the inferior colliculus and terminate bilaterally in the medial geniculate body of the thalamus. The medial geniculate body is a laminated structure, tonotopically organized orthogonally to the laminae. The geniculate body should not be considered purely auditory. Like other areas of the thalamus, this nucleus probably serves to combine information from the cortex and the midbrain through the intermediate thalamic nuclei.
From the medial geniculate body the axons extend beneath the pulvinar nucleus, into the posterior extremity of the internal capsule, under the putamen and then into the cortical radiations. In man, the primary auditory cortex is on the inner surface of the superior temporal gyrus (of Heschl) buried deep in the Sylvian fissure (Brodmann's area 41). Topographically within this area high frequencies appear to be represented dorsally, with lower frequencies more ventral. Surrounding the "primary" auditory cortex is a series of "secondary" auditory fields (e.g., Brodmann's area 22) which may receive direct projections from the medial geniculate body, but also connect reciprocally with the primary region as well as with the thalamic nuclei. In addition, there are transcallosal connections linking auditory regions of the two hemispheres, and connections between the temporal lobe and "auditory" regions in the parietal cortex.

As was true of the brain stem pathways, the forebrain auditory pathways cannot be considered as simply relaying information to higher level structures. At each level there is a complex and as yet poorly understood system of feedback loops. This forebrain descending system includes massive projections from the cortical regions to the ipsilateral medial geniculate body and to both inferior colliculi (Figure 32).

Figure 31: Connections of the intermediate brain stem pathway (solid lines) and monaural brain stem pathway (dotted line). As in Figure 30, only projections from one side are shown. Forebrain auditory pathways are dashed (abbreviations as in Figure 30).

Figure 32: The major descending auditory pathways for one side of the brain (abbreviations as in Figure 30). (Adapted with permission from Thompson, G. Seminars in Hearing, Vol. 4, pp. 81-95, Thieme Medical Publishers, Inc., New York, 1983).

Little is known regarding the cortical circuitry involved in audition in primates, including man.
Basic issues such as "What cortical areas are receiving auditory information and how are they connected?" are only now beginning to be addressed. The questions "How are speech versus nonspeech sounds gated?" and "How does auditory information gain access to the classical speech centers?" remain to be answered.

TONOTOPIC ORGANIZATION

An important principle carried through each level of the auditory pathways is the maintenance of the topography of the receptor surface.

Figure 33: Schematic of the topographic projection of points on the cochlea to the anteroventral cochlear nucleus (AVCN). Each region of the cochlea is innervated by many spiral ganglion cells (G) whose central axons terminate as a sheet in the cochlear nucleus, forming an isofrequency lamina. These isofrequency laminae are stacked in the order of their cochlear innervation to form a tonotopic organization.

Figure 34: Sagittal section through the cochlear nucleus of the cat showing an electrode penetration through the dorsal cochlear nucleus (Dc) and posteroventral cochlear nucleus (Pv). The best frequencies (in kHz) of neurons encountered at successive points along the electrode track are indicated to the right. Note that there is a systematic decrease in best frequencies until the electrode enters Pv, at which point a new decreasing sequence begins. (From Rose, J.E. et al., Bull. Johns Hopkins Hosp. 104:211-251, 1959).

Figure 35: A: Cutaway drawing of monkey brain exposes auditory cortical areas on supratemporal plane. Horizontally shaded area indicates region in which click stimuli evoke slow-wave potentials in deeply anesthetized animals. Crosshatched area is region within which lesions produce retrograde degeneration in medial geniculate. B: Enlarged drawing corresponding to crosshatched area in A and showing patterns of representation of frequencies within this region determined by evoked potential method.

SOUND SOURCE LOCALIZATION

Sound source localization (i.e.,
directionality) is an important parameter of sound. The auditory system begins to define the source of sound at the periphery, where head shape and pinna shape influence intensity and frequency information related to a sound. This information is transmitted to the CNS along pathways discussed previously, where temporal precision in action potential encoding preserves frequency information, arrival times, and intensity level at each ear. Some of the cellular and physiological mechanisms maintaining action potential precision include large, secure synapses; fast glutamatergic receptors; and postsynaptic currents that limit the window for action potential recruitment.

The mechanisms by which binaural information is processed at the superior olivary complex (SOC) and more centrally are areas of active research. The role of the lateral superior olive (LSO) in processing of intensity information is well established. The role of the medial superior olive (MSO) in processing interaural time differences (ITDs) is subject to active study. The Jeffress model of delay lines is well supported by data from barn owls. The analogous nucleus laminaris model describes ITD processing in chickens. In mammals, there is no strong structural evidence for the existence of delay lines. Current research is investigating the possible role of inhibitory glycinergic input to the MSO as critical for the processing of ITD information in mammals.

Figure 36: Binaural processing at the Lateral Superior Olive. Neuronal responses in the LSO are sensitive to sound intensity differences between ipsilateral and contralateral ears.

Figure 37: The Jeffress model proposed to account for binaural information processing in the avian auditory brainstem.
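
As a rough illustration of the delay-line idea behind the Jeffress model, the following Python sketch treats each coincidence detector as a unit that multiplies the left-ear and right-ear signals after applying a fixed internal delay, then sums the products. The detector whose internal delay offsets the interaural time difference responds most strongly. This is only a sketch under stated assumptions: the function names, the 50 kHz sampling rate, the 500 Hz tone, and the use of multiply-and-sum as a stand-in for neural coincidence detection are all hypothetical choices made for illustration, not a description of actual neuronal circuitry.

```python
import math


def make_tone(freq_hz, duration_s, fs_hz, delay_s=0.0):
    """Sample a pure tone, optionally delayed by delay_s seconds."""
    n = int(round(duration_s * fs_hz))
    return [math.sin(2 * math.pi * freq_hz * (i / fs_hz - delay_s))
            for i in range(n)]


def detector_responses(left, right, max_lag):
    """Response of a bank of idealized coincidence detectors.

    Each detector has an internal delay `lag` (in samples) applied to the
    left-ear signal relative to the right-ear signal. Its response is the
    sum of products of the two inputs (an assumed stand-in for coincidence
    detection).
    """
    n = len(left)
    responses = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        # Skip the edges so every detector sees the same number of samples.
        for i in range(max_lag, n - max_lag):
            total += left[i - lag] * right[i]
        responses[lag] = total
    return responses


def estimate_itd(left, right, fs_hz, max_itd_s):
    """Return the ITD (seconds) whose detector responds most strongly.

    A positive value means the right-ear signal lags the left-ear signal,
    i.e. the source is nearer the left ear.
    """
    max_lag = int(round(max_itd_s * fs_hz))
    responses = detector_responses(left, right, max_lag)
    best_lag = max(responses, key=responses.get)
    return best_lag / fs_hz


if __name__ == "__main__":
    fs = 50_000           # sampling rate in Hz (assumed)
    true_itd = 300e-6     # right ear lags by 300 microseconds (assumed)
    left = make_tone(500.0, 0.1, fs)
    right = make_tone(500.0, 0.1, fs, delay_s=true_itd)
    itd = estimate_itd(left, right, fs, max_itd_s=700e-6)
    print(f"estimated ITD: {itd * 1e6:.0f} microseconds")
```

The range of internal delays is kept shorter than half the period of the tone, so only one detector lines up with the stimulus. This mirrors the point made earlier that time and phase cues are most useful for low frequency sounds: at higher frequencies several internal delays would match equally well and the estimate would become ambiguous.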