

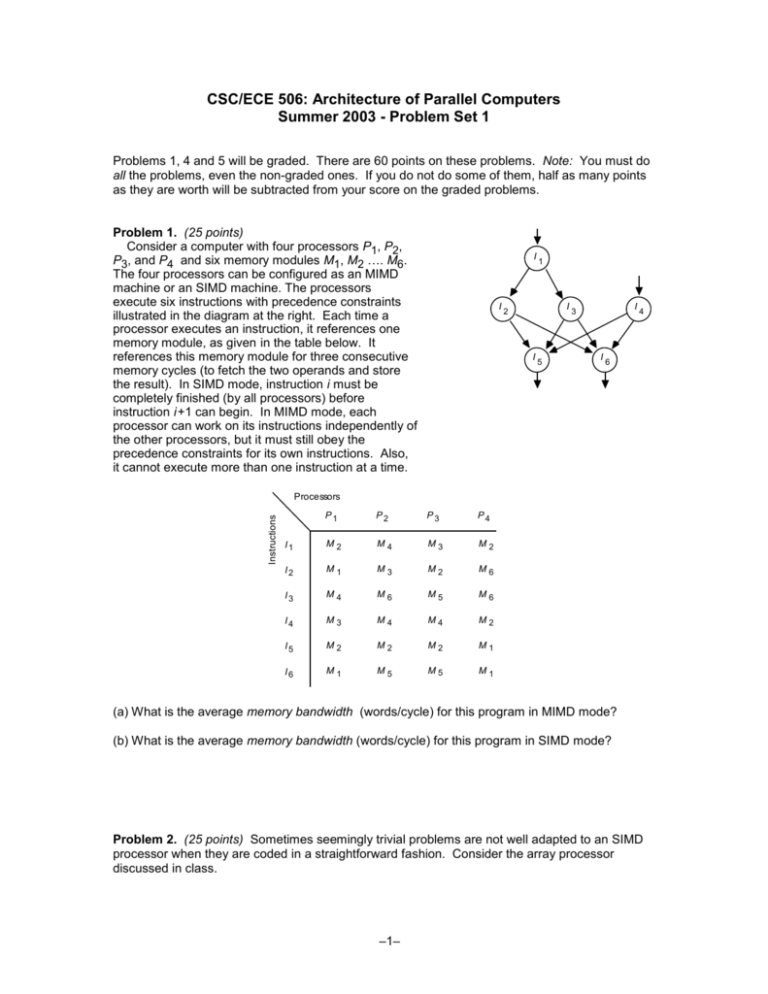

CSC/ECE 506: Architecture of Parallel Computers

Summer 2003 - Problem Set 1

Problems 1, 4, and 5 will be graded. There are 60 points on these problems. Note: You must do all the problems, even the non-graded ones. If you do not do some of them, half as many points as they are worth will be subtracted from your score on the graded problems.

Problem 1. (25 points) Consider a computer with four processors P1, P2, P3, and P4 and six memory modules M1, M2, …, M6. The four processors can be configured as an MIMD machine or an SIMD machine. The processors execute six instructions with the precedence constraints illustrated in the precedence diagram. Each time a processor executes an instruction, it references one memory module, as given in the table below. It references this memory module for three consecutive memory cycles (to fetch the two operands and store the result).

In SIMD mode, instruction i must be completely finished (by all processors) before instruction i+1 can begin. In MIMD mode, each processor can work on its instructions independently of the other processors, but it must still obey the precedence constraints for its own instructions. Also, it cannot execute more than one instruction at a time.

[Figure: precedence diagram for instructions I1, I2, I3, I4, I5, and I6]

Memory module referenced by each processor for each instruction:

| Instruction | P1 | P2 | P3 | P4 |
|-------------|----|----|----|----|
| I1          | M2 | M4 | M3 | M2 |
| I2          | M1 | M3 | M2 | M6 |
| I3          | M4 | M6 | M5 | M6 |
| I4          | M3 | M4 | M4 | M2 |
| I5          | M2 | M2 | M2 | M1 |
| I6          | M1 | M5 | M5 | M1 |

(a) What is the average memory bandwidth (words/cycle) for this program in MIMD mode?

(b) What is the average memory bandwidth (words/cycle) for this program in SIMD mode?

Problem 2. (25 points) Sometimes seemingly trivial problems are not well adapted to an SIMD processor when they are coded in a straightforward fashion. Consider the array processor discussed in class.

(a) Write an assembly-language program for it to find the transpose of an $N \times N$ matrix. That is, given an $N \times N$ matrix $A$, find $B$ such that $B = A^T$. Comment your program. In addition to the instructions presented in class, you may also use the following:
- RRL i, j: Rotate index register i circularly left by j bits.
- RRR i, j: Rotate index register i circularly right by j bits.

(b) How could your program be improved if skewed storage were used for the array $A$? That is, assume that the elements of $A$ were stored as shown below. You need not actually code the program; just explain how it would be better than the program for part (a). Assume that each ALU has its own private index registers, in addition to those in the control unit.

$$
\begin{array}{cccc}
A[0,0] & A[0,1] & \cdots & A[0,N-1] \\
A[1,N-1] & A[1,0] & \cdots & A[1,N-2] \\
A[2,N-2] & A[2,N-1] & \cdots & A[2,N-3] \\
\vdots & \vdots & & \vdots \\
A[N-1,1] & A[N-1,2] & \cdots & A[N-1,0]
\end{array}
$$

Problem 3. (25 points) Consider the simple problem of "triangulating" a polygon. To study the properties of a polygonal surface that depend on each point on that surface, the surface is divided into n small triangular regions. Each triangular region is an aggregation of points represented by a single point in the triangle. The complex equations are solved at each such representative point, and the resulting values at each point are summed to determine the current state of the surface at that instant. Assume that it takes 1 unit of time per point to solve the equations and 1 unit of time per point to calculate the sum.

(a) What would be the total cost of computation in a sequential program for this application?

(b) If this application is decomposed into a 2-phase program, describe each phase. What would be the cost of computation for the serial phase and for the parallel phase (taking into consideration the number of processors, p), the total cost of computation, and the maximum achievable speedup regardless of the number of processors?

(c) If the application is instead decomposed into a 3-phase program, what would be the answers to the above questions? Instead of determining the maximum achievable speedup regardless of the number of processors, explain what happens to the speedup as the number of processors increases, assuming $n \gg p$.

Problem 4.
(10 points) Which of our case-study applications (Ocean, Barnes-Hut, Raytrace, and Data Mining) do you think are amenable to decomposing data rather than computation and using an owner-computes rule in parallelization? What do you think the problem(s) would be with using a strict data distribution and owner-computes rule in the others?

Problem 5. (25 points) A parallel computation on an n-processor system can be characterized by a pair (P(n), T(n)), where P(n) is the total number of instructions executed by all the processors and T(n) is the elapsed execution time for the entire system (measured in number of instructions). (You may assume that all instructions take the same amount of time.)

P(n), for n > 1, may be greater than P(1) because some of the processors have to do extra "redundant" work to synchronize or avoid excessive memory contention. However, assume that P(n) is never less than P(1). In a serial computation, all instructions are performed by a single processor, so P(1) = T(1). Usually, for n > 1, T(n) < P(n) because the computation will finish faster on a multiprocessor.

Lee (1980) has suggested five performance indices for comparing a parallel computation with a serial computation:

$$S(n) = \frac{T(1)}{T(n)} \quad \text{(the speedup)}$$

$$E(n) = \frac{T(1)}{n\,T(n)} \quad \text{(the efficiency)}$$

$$R(n) = \frac{P(n)}{P(1)} \quad \text{(the redundancy)}$$

$$U(n) = \frac{P(n)}{n\,T(n)} \quad \text{(the utilization)}$$

$$Q(n) = \frac{T^3(1)}{n\,T^2(n)\,P(n)} \quad \text{(the quality)}$$

Note: $T^2(n) = T(n) \cdot T(n)$.

(a) Prove that the following relationships hold in all possible comparisons of parallel to serial computations:

(1) $1 \le S(n) \le n$

(2) $E(n) = S(n)/n$

(3) $U(n) = R(n) \cdot E(n)$

(4) $Q(n) = S(n) \cdot E(n) / R(n)$

(b) Based on the above definitions and relationships, give the physical meaning of each of these performance indices.
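A quick numerical sanity check can make Lee's definitions in Problem 5 concrete before attempting the proofs. The Python sketch below computes the five indices directly from the definitions, using P(1) = T(1), and evaluates relationships (1) through (4) for one run. The function names `lee_indices` and `check_relationships` and the sample values (n = 4, P(1) = 1000, P(4) = 1200, T(4) = 320) are illustrative assumptions; a check that passes on one data point is not a proof.

```python
import math


def lee_indices(n, p1, pn, tn):
    """Compute Lee's five indices from P(1), P(n), and T(n).

    Uses P(1) = T(1), as stated for a serial computation.
    """
    t1 = p1
    return {
        "S": t1 / tn,                        # speedup
        "E": t1 / (n * tn),                  # efficiency
        "R": pn / p1,                        # redundancy
        "U": pn / (n * tn),                  # utilization
        "Q": t1 ** 3 / (n * tn ** 2 * pn),   # quality
    }


def check_relationships(n, ix):
    """Evaluate relationships (1) to (4) for one set of indices."""
    return {
        "1 <= S <= n": 1 <= ix["S"] <= n,
        "E = S/n": math.isclose(ix["E"], ix["S"] / n),
        "U = R*E": math.isclose(ix["U"], ix["R"] * ix["E"]),
        "Q = S*E/R": math.isclose(ix["Q"], ix["S"] * ix["E"] / ix["R"]),
    }


# Hypothetical run (illustrative numbers only): 4 processors,
# P(1) = T(1) = 1000, P(4) = 1200, T(4) = 320.
n = 4
ix = lee_indices(n, p1=1000, pn=1200, tn=320)
for name, value in ix.items():
    print(f"{name}(n) = {value:.4f}")
print(check_relationships(n, ix))
```

Substituting P(1) = T(1) into R(n)·E(n) and S(n)·E(n)/R(n) by hand gives the same cancellations the script confirms numerically.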
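For Problem 1(b), the SIMD case can be tabulated mechanically from the memory-reference table. The sketch below rests on modeling assumptions that are not stated in the problem: each module transfers one word per cycle, processors that reference the same module in the same SIMD step are served one after another (so the busiest module sets the length of the step), and consecutive steps do not overlap. The MIMD case in part (a) additionally depends on the precedence constraints, so it is not modeled here. The names `REFS`, `CYCLES_PER_REF`, and `simd_bandwidth` are hypothetical.

```python
from collections import Counter

# Memory module referenced by processors P1..P4 for each instruction,
# transcribed from the Problem 1 table.
REFS = {
    "I1": ["M2", "M4", "M3", "M2"],
    "I2": ["M1", "M3", "M2", "M6"],
    "I3": ["M4", "M6", "M5", "M6"],
    "I4": ["M3", "M4", "M4", "M2"],
    "I5": ["M2", "M2", "M2", "M1"],
    "I6": ["M1", "M5", "M5", "M1"],
}
CYCLES_PER_REF = 3  # two operand fetches + one result store, one word per cycle (assumed)


def simd_bandwidth(refs):
    """Return (words, cycles, words per cycle) for lockstep SIMD execution."""
    total_words = 0
    total_cycles = 0
    for modules in refs.values():
        # Every processor moves CYCLES_PER_REF words for this instruction.
        total_words += CYCLES_PER_REF * len(modules)
        # Assumption: conflicting references to one module are serialized,
        # so the most heavily shared module determines the step length.
        busiest = max(Counter(modules).values())
        total_cycles += CYCLES_PER_REF * busiest
    return total_words, total_cycles, total_words / total_cycles


words, cycles, bw = simd_bandwidth(REFS)
print(f"{words} words in {cycles} cycles -> {bw:.2f} words/cycle")
```

Under a different memory model (for example, modules that can buffer or pipeline requests), only the `busiest` line would need to change.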
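To see what the skewed storage in Problem 2(b) buys, it helps to write down where each element lands. The sketch below assumes that each column of the storage figure is the private memory of one processing element (PE) and each row is one memory address, which matches the figure's pattern of row i of A rotated circularly right by i positions: A[i, j] sits in PE (i + j) mod N at address i. Under that assumption every column of A is spread over all N PEs, one element each, so with the private index registers allowed in part (b) a whole column can be fetched in one parallel access. The helpers `skewed_layout` and `column_locations` are hypothetical names used only for illustration.

```python
def skewed_layout(n):
    """Return mem[addr][pe] = (i, j), the element of A at that address of that PE,
    assuming row i of A is rotated circularly right by i positions."""
    return [[(addr, (pe - addr) % n) for pe in range(n)] for addr in range(n)]


def column_locations(n, j):
    """Return (pe, addr) for every element A[i, j] of column j under the skew."""
    return [((i + j) % n, i) for i in range(n)]


n = 4
mem = skewed_layout(n)
# For n = 4, address 1 holds A[1,3], A[1,0], A[1,1], A[1,2] across PEs 0..3.
for addr, row in enumerate(mem):
    print(addr, [f"A[{i},{j}]" for i, j in row])

# Each column of A touches every PE exactly once, so if PE (i + j) mod n
# loads its own address i, the entire column arrives in one parallel fetch.
for j in range(n):
    locs = column_locations(n, j)
    assert sorted(pe for pe, _ in locs) == list(range(n))
    assert all(mem[addr][pe] == (addr, j) for pe, addr in locs)
```

Rows stay conflict-free as well: row i of A occupies address i in every PE, so both row and column accesses take a single step under this layout.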