Two major steps in parallel computing

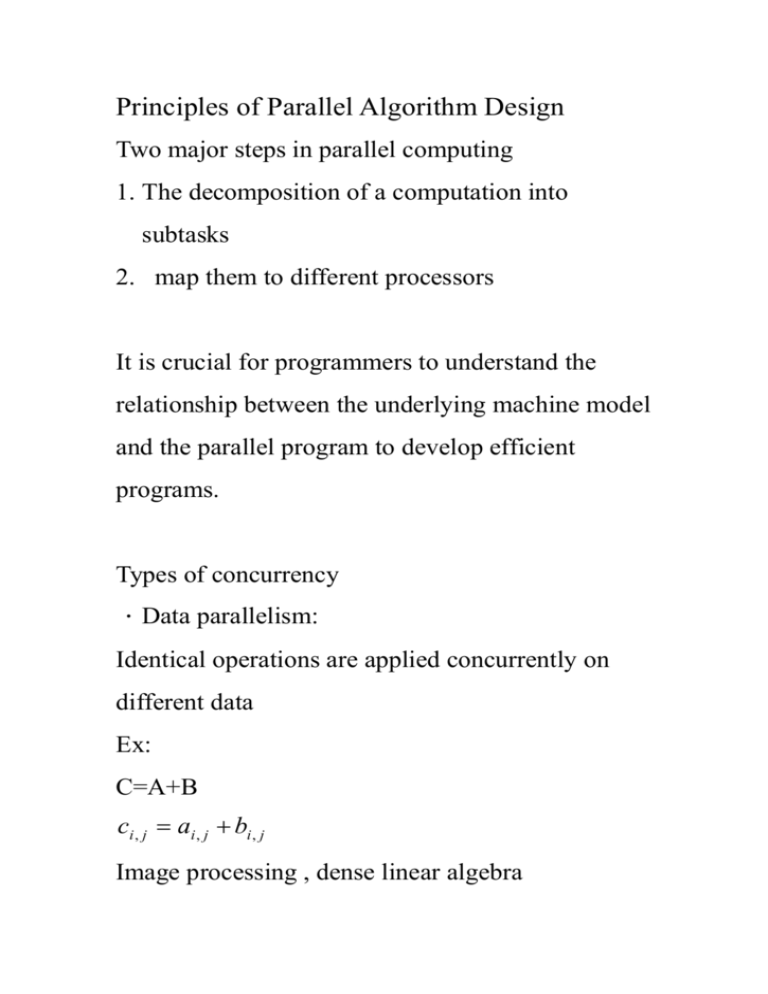

Principles of Parallel Algorithm Design

Two major steps in parallel computing:
1. Decompose the computation into subtasks.
2. Map the subtasks to different processors.

To develop efficient programs, programmers must understand the relationship between the underlying machine model and the parallel program.

Types of concurrency:

- Data parallelism: identical operations are applied concurrently to different data.
Ex: $C = A + B$, i.e. $c_{i,j} = a_{i,j} + b_{i,j}$. Applications: image processing, dense linear algebra.

- Task parallelism: different tasks run concurrently. Ex: given the table

| ID#  | Model   | Year | Color |
|------|---------|------|-------|
| 4523 | Accord  | 1997 | Blue  |
| 3476 | Taurus  | 1997 | Green |
| 7623 | Camry   | 1996 | Black |
| 9834 | Civic   | 1994 | Black |
| 6734 | Accord  | 1996 | Green |
| 5342 | Contour | 1996 | Black |
| 3845 | Maxima  | 1996 | Blue  |
| 8354 | Malibu  | 1997 | Black |
| 4395 | Accord  | 1996 | Red   |
| 7352 | Accord  | 1997 | Red   |

we want to find the records with (Model = Accord and Year = 1996) and (Color = Green or Color = Blue). The operations involved are:
1. Creating tasks.
2. Join: the merging of two task flows.
3. Synchronize (barrier): wait at some point for all the other tasks to catch up.

- Functional (stream) parallelism: the simultaneous execution of different programs on a data stream.

Remark: many problems exhibit a combination of data, task, and stream parallelism.

Two important notes:
1. Load balance: each task should carry an equal computational load.
2. Communication costs ($O(n^2/p)$): less communication and less data movement are desirable.

Runtime:
$T_s$: serial runtime (one processor), the time elapsed between the beginning and the end of execution on a sequential computer.
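The data-parallel example $C = A + B$ can be sketched as follows. This is a minimal illustration, assuming matrices stored as Python lists of rows and a block-of-rows decomposition over `p` workers; the helper names `add_rows`, `row_blocks`, and `parallel_add` are hypothetical, and the thread pool from `concurrent.futures` stands in for processors (in CPython it shows the decomposition, not a real speedup).

```python
from concurrent.futures import ThreadPoolExecutor


def add_rows(A, B, start, stop):
    """Rows start..stop-1 of C = A + B: the same operation applied to different data."""
    return [[a + b for a, b in zip(A[i], B[i])] for i in range(start, stop)]


def row_blocks(n, p):
    """Split row indices 0..n-1 into p contiguous blocks whose sizes differ by at most 1."""
    bounds, start = [], 0
    for k in range(p):
        size = n // p + (1 if k < n % p else 0)
        bounds.append((start, start + size))
        start += size
    return bounds


def parallel_add(A, B, p):
    """Compute C = A + B with one task per row block (threads stand in for processors)."""
    with ThreadPoolExecutor(max_workers=p) as pool:
        blocks = pool.map(lambda b: add_rows(A, B, *b), row_blocks(len(A), p))
        # map() yields results in submission order, so concatenating keeps rows in place.
        return [row for block in blocks for row in block]


A = [[1, 2], [3, 4], [5, 6]]
B = [[10, 20], [30, 40], [50, 60]]
print(parallel_add(A, B, p=2))  # [[11, 22], [33, 44], [55, 66]]
```

Equal-sized blocks are one simple way to meet the load-balance goal when every element costs the same.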
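The car-table query from the task-parallelism example can be sketched as a small task graph: four independent selection tasks, then joins. This is a hypothetical illustration, assuming IDs are collected into Python sets so that joining two task flows is a set intersection (for "and") or union (for "or"); `select` and `run_query` are names introduced here, and waiting on `Future.result()` plays the role of the synchronization point.

```python
from concurrent.futures import ThreadPoolExecutor

# (ID, Model, Year, Color) rows from the table above.
TABLE = [
    (4523, "Accord", 1997, "Blue"),
    (3476, "Taurus", 1997, "Green"),
    (7623, "Camry", 1996, "Black"),
    (9834, "Civic", 1994, "Black"),
    (6734, "Accord", 1996, "Green"),
    (5342, "Contour", 1996, "Black"),
    (3845, "Maxima", 1996, "Blue"),
    (8354, "Malibu", 1997, "Black"),
    (4395, "Accord", 1996, "Red"),
    (7352, "Accord", 1997, "Red"),
]
MODEL, YEAR, COLOR = 1, 2, 3  # column positions


def select(column, value):
    """Leaf task: the set of IDs whose given column equals value."""
    return {row[0] for row in TABLE if row[column] == value}


def run_query():
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Create four independent tasks, one per clause; they may run concurrently.
        accord = pool.submit(select, MODEL, "Accord")
        y1996 = pool.submit(select, YEAR, 1996)
        green = pool.submit(select, COLOR, "Green")
        blue = pool.submit(select, COLOR, "Blue")
        # Join: result() blocks until a task finishes, so each join waits for its two inputs.
        accord_1996 = accord.result() & y1996.result()  # Model=Accord and Year=1996
        green_or_blue = green.result() | blue.result()  # Color=Green or Color=Blue
    # Leaving the with-block waits for every task (a barrier); then do the final join.
    return accord_1996 & green_or_blue


print(run_query())  # {6734}
```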
$T_p$: parallel runtime (execution time on $p$ processors), the time elapsed from the moment a parallel computation starts to the moment the last processor finishes execution. It includes the essential computation plus the overheads of parallelism:
- communication
- load imbalance
- idling
- serial components in the program

Serial components in a program:
- something that can NOT be broken down any further
- critical path: the longest chain of instructions that have a serial ordering among them

Ex: adding $n$ numbers ($n = 8$ here), where $t_c$ is the time to add two numbers: $T_p = 3t_c$, $T_s = 7t_c$ ($\log_2 n \cdot t_c$ is the fastest possible runtime).

Speedup $S = \frac{T_s}{T_p}$ is a measure that captures the relative benefit of solving a problem in parallel.
Note: $T_s$ should be the runtime of the best available serial algorithm.
Theoretically, speedup can never exceed the number of processors. However, super-linear speedup sometimes occurs because of a non-optimal sequential algorithm or because of machine characteristics (e.g. cache memory).

Ex ($n$: problem size, $p$: number of processors):
1. $T_p = n + \frac{n^2}{p}$
2. $T_p = \frac{n + n^2}{p} + 100$
3. $T_p = \frac{n + n^2}{p} + 0.6p^2$
Remark: we need to analyze how the speedup behaves as $n$ and $p$ change.

Efficiency $E = \frac{T_s}{p\,T_p}$ is a measure of the fraction of time for which a processor is usefully employed.

Cost of an algorithm: $\text{Cost}_p = p\,T_p$.
Ideally $\text{Cost}_p = p\,T_p = T_s$, i.e. $E = \frac{T_s}{p\,T_p} = 1$.
Real world: an algorithm is cost-optimal if $E \sim O(1)$, i.e. $\text{Cost}_p \sim O(T_s)$.

Ex: summing up $n$ ($= p$) numbers:
$T_s = (n-1)t_c \sim O(n)$
$T_p = (t_c + t_s + t_w)\log n$
$\text{Cost}_p = n\log n\,(t_c + t_s + t_w) \sim O(n\log n)$: not cost-optimal.

Now $n > p$, with $n/p$ numbers per processor:
$T_p = t_c\left(\frac{n}{p} - 1\right) + (t_c + t_s + t_w)\log p$
$S = \frac{t_c(n-1)}{t_c\left(\frac{n}{p} - 1\right) + (t_c + t_s + t_w)\log p}$
$E = \frac{S}{p} = \frac{t_c(n-1)}{t_c(n-p) + (t_c + t_s + t_w)\,p\log p} = \frac{n-1}{(n-p) + \frac{t_c + t_s + t_w}{t_c}\,p\log p}$
1. Keep $n$ fixed with $n \gg p$: $E \to 1$ when $t_c$ dominates $t_s + t_w$; $E$ decreases as $t_s$ and $t_w$ grow; $E$ decreases as $p$ grows.
2. Keep $p$ fixed and let $n \to \infty$: $E \to 1$.

Remark: how should the problem size and the number of processors be scaled for optimal performance? Memory generally increases linearly with the number of processors, so let $n = kp$ and $c = \frac{t_c + t_s + t_w}{t_c}$:
$E = \frac{kp - 1}{(k-1)p + c\,p\log p} = \frac{k - \frac{1}{p}}{(k-1) + c\log p}$; if $p \to \infty$, $E \to 0$.
Conclusion: increasing the number of processors (with $n$ growing only linearly in $p$) decreases efficiency.
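The $n = 8$ addition example can be checked with a small simulation of a pairwise (tree) reduction. A sketch, assuming each round of disjoint pairwise additions runs concurrently and costs one $t_c$; `tree_sum` is a hypothetical helper. It counts both the rounds (parallel steps) and the total additions, which equal the $n - 1$ additions of the serial sum.

```python
def tree_sum(values):
    """Pairwise (tree) reduction of a non-empty sequence.

    Returns (sum, parallel_steps, total_additions). All additions in one round
    touch disjoint pairs, so a round could run concurrently and cost one t_c.
    """
    level = list(values)
    steps = 0
    additions = 0
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level) - 1, 2):
            nxt.append(level[i] + level[i + 1])
            additions += 1
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # an odd element passes through to the next round
        level = nxt
        steps += 1
    return level[0], steps, additions


total, steps, adds = tree_sum(range(1, 9))
print(total, steps, adds)  # 36 3 7
```

Three rounds ($\log_2 8$) give $T_p = 3t_c$, while the seven additions match $T_s = 7t_c$.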
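To analyze the three example runtimes, one can evaluate speedup and efficiency for a few $(n, p)$ pairs. The sketch below assumes a serial runtime $T_s = n + n^2$ (what the three models reduce to, apart from their constant overheads, at $p = 1$); that choice and the helper names are assumptions for illustration.

```python
def t_serial(n):
    # Assumption: serial runtime T_s = n + n^2.
    return n + n ** 2


# Parallel runtimes T_p(n, p) of the three example models.
MODELS = {
    1: lambda n, p: n + n ** 2 / p,
    2: lambda n, p: (n + n ** 2) / p + 100,
    3: lambda n, p: (n + n ** 2) / p + 0.6 * p ** 2,
}


def speedup(model, n, p):
    return t_serial(n) / MODELS[model](n, p)


def efficiency(model, n, p):
    return speedup(model, n, p) / p


for n, p in [(100, 12), (100, 128), (1000, 128)]:
    row = "  ".join(
        f"model {m}: S={speedup(m, n, p):6.1f} E={efficiency(m, n, p):.2f}" for m in MODELS
    )
    print(f"n={n:5d} p={p:4d}  {row}")
```

At $n = 100$, $p = 12$ the three models give nearly the same speedup (about 10.7 to 10.9), yet at $n = 100$, $p = 128$ the $0.6p^2$ term in model 3 drives its speedup down to about 1.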
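The efficiency formula for summing $n$ numbers on $p$ processors can be evaluated directly to see both scaling trends. A sketch, assuming $\log$ means $\log_2$ and illustrative unit costs ($t_c = 1$, $t_s = 2$, $t_w = 1$) that are not measurements; `sum_efficiency` is a hypothetical helper.

```python
import math


def sum_efficiency(n, p, tc=1.0, ts=2.0, tw=1.0):
    """E = t_c(n-1) / (t_c(n-p) + (t_c+t_s+t_w) p log2 p) for summing n numbers on p processors.

    The default costs are illustrative assumptions, not measured values.
    """
    return tc * (n - 1) / (tc * (n - p) + (tc + ts + tw) * p * math.log2(p))


# Keep p fixed and let n grow: E approaches 1.
for n in (64, 1024, 65536):
    print(f"p=16 n={n:6d} E={sum_efficiency(n, 16):.3f}")

# Scale n = k*p (memory grows linearly with p): E keeps falling as p grows.
k = 64
for p in (4, 64, 1024):
    print(f"n={k * p:6d} p={p:5d} E={sum_efficiency(k * p, p):.3f}")
```

With $p = 16$ fixed, $E$ rises from about 0.21 ($n = 64$) toward 1; with $n = 64p$, it falls from about 0.90 ($p = 4$) to about 0.62 ($p = 1024$).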
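Problem size: the total number of basic operations required to solve the problem.

Data parallelism on an $n \times n$ matrix:

Model 1:
$T_p = \frac{n^2}{p}t_c + 4\left(t_s + t_w\frac{n}{\sqrt{p}}\right)$
$E = \frac{n^2 t_c}{n^2 t_c + 4p\left(t_s + t_w\frac{n}{\sqrt{p}}\right)} = \frac{1}{1 + k_1\frac{p}{n^2} + k_2\frac{\sqrt{p}}{n}}$, where $k_1 = \frac{4t_s}{t_c}$ and $k_2 = \frac{4t_w}{t_c}$.
To keep $E$ constant, $n^2 \sim O(p)$. So the problem size grows proportionally to $p$.

Model 2 (2-dim problem, memory size $O(n^2)$):
$T_p = \frac{n^2}{p}t_c + (t_s + t_w n)$
$E = \frac{n^2 t_c}{n^2 t_c + p(t_s + t_w n)} = \frac{1}{1 + \frac{p}{n^2}\cdot\frac{t_s + t_w n}{t_c}} = \frac{1}{1 + k_1\frac{p}{n^2} + k_2\frac{p}{n}}$, where $k_1 = \frac{t_s}{t_c}$ and $k_2 = \frac{t_w}{t_c}$.
To keep $E$ constant we need $n \sim O(p)$, so the problem size $n^2$ must grow as $p^2$ while memory grows only linearly with $p$: not efficient.

Scalability of Parallel Systems

Very often, programs are designed and tested for smaller problems on fewer processors. However, the real problems the programs are intended to solve are much larger, and the machines contain a larger number of processors.

Parallel runtime: the time elapsed from the moment that a parallel computation starts to the moment that the last processor finishes execution. It includes:
- essential computation
- interprocessor communication
- load imbalance: sometimes (for example, in search and optimization) it is impossible, or at least difficult, to predict the size of the subtasks assigned to various processors.

Quicksort: pick a pivot; ideally, half of the elements are less than the pivot and half are greater (e.g. pivot = 5).
$T_s = n\log n\,t_c$: each of the $\log n$ levels contains $n$ elements and needs $n\,t_c$ operations.
$T_p = \left(n + \frac{n}{2} + \frac{n}{4} + \cdots\right)t_c \approx 2n\,t_c$
$\text{Cost} = n(2n\,t_c) = O(n^2)$, compared with $O(n\log n)$ serially: not cost-optimal.

Alternative:
Step 1: sort the local lists: $\frac{n}{p}\log\frac{n}{p}\,t_c$.
Step 2: merge: merging two lists of $n/p$ elements needs $\frac{2n}{p}t_c$, and all merge levels need $\left(\frac{2n}{p} + \frac{4n}{p} + \cdots + \frac{pn}{p}\right)t_c \approx 2n\,t_c$, so the speedup is only $\sim O(\log n)$.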
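The two scaling rules for the matrix models can be compared numerically. A sketch with illustrative constants ($t_c = 1$, $t_s = 10$, $t_w = 1$, assumed rather than measured); `eff_model1` and `eff_model2` are hypothetical helpers that evaluate $E = T_s/(p\,T_p)$ with $T_s = n^2 t_c$ for each model.

```python
import math


def eff_model1(n, p, tc=1.0, ts=10.0, tw=1.0):
    """Model 1: T_p = (n^2/p) t_c + 4 (t_s + t_w n / sqrt(p)); E = n^2 t_c / (p T_p)."""
    tp = n * n / p * tc + 4 * (ts + tw * n / math.sqrt(p))
    return n * n * tc / (p * tp)


def eff_model2(n, p, tc=1.0, ts=10.0, tw=1.0):
    """Model 2: T_p = (n^2/p) t_c + (t_s + t_w n); E = n^2 t_c / (p T_p)."""
    tp = n * n / p * tc + (ts + tw * n)
    return n * n * tc / (p * tp)


for p in (16, 256, 4096):
    n_sq = math.isqrt(100 * p)  # n^2 = 100p: problem size grows like p
    n_lin = 10 * p              # n = 10p: problem size grows like p^2
    print(
        f"p={p:5d}  model1(n^2=100p)={eff_model1(n_sq, p):.3f}  "
        f"model2(n^2=100p)={eff_model2(n_sq, p):.3f}  "
        f"model2(n=10p)={eff_model2(n_lin, p):.3f}"
    )
```

With $n^2 = 100p$, Model 1 holds $E$ at $1/1.8 \approx 0.56$ for every $p$, while Model 2 under the same scaling drops from about 0.67 to about 0.13; Model 2 only stays near 0.9 when $n = 10p$, i.e. when the problem size grows as $p^2$.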
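The two sorting cost models can be compared by evaluating them in units of $t_c$. This sketch assumes $n$ and $p$ are powers of two with $n \ge p$, $\log = \log_2$, and that the naive parallel quicksort partitions each level serially (the $n + n/2 + \cdots$ sum); the function names are hypothetical. It only evaluates the formulas; it does not sort anything.

```python
import math


def naive_quicksort_time(n):
    """T_p of the naive parallel quicksort, in units of t_c: n + n/2 + n/4 + ... + 1.

    Each level's partitioning is assumed to be serial, so the first split alone costs n.
    """
    total, size = 0, n
    while size >= 1:
        total += size
        size //= 2
    return total


def sort_then_merge_time(n, p):
    """Step 1: local sort of n/p elements; step 2: merge levels costing 2n/p, 4n/p, ..., pn/p."""
    local = (n / p) * math.log2(n / p)
    merge, width = 0.0, 2
    while width <= p:
        merge += width * n / p  # merge two sorted lists of (width/2)(n/p) elements each
        width *= 2
    return local + merge


n = 2 ** 20
t_serial = n * math.log2(n)  # T_s = n log n t_c
print(f"naive quicksort (p = n): S = {t_serial / naive_quicksort_time(n):.2f}")
for p in (4, 64, 1024):
    print(f"sort + merge, p = {p:4d}: S = {t_serial / sort_then_merge_time(n, p):.2f}")
```

For $n = 2^{20}$ both approaches give a speedup of at most about $\frac{\log_2 n}{2} = 10$, because a pass over all $n$ elements remains serial in both models.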