Lessons Learned from eClass - uclic

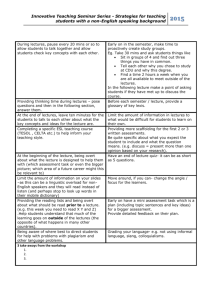
