

Representation and Acquisition of the Word Meaning

for Picking out Thematic Roles

Dan-Hee Yang and Mansuk Song

Department of Computer Science

Yonsei University, Seoul, 120-749, Korea

http://december.yonsei.ac.kr/~{dhyang, mssong}

Abstract: Argument structures and selectional restrictions are essential to a proper semantic

analysis of linguistic expressions. In this paper, we propose the concept of Case prototypicality (footnote 1),

which is direct knowledge for picking out the Case of each argument in a sentence. Also, we

show that the meaning of words that belong to noun and verb categories can be defined by

using this concept and that it can be acquired from a corpus by machine learning, by virtue of the

characteristics of Case particles (every language has a device corresponding to them). In

addition, we show two techniques that can reduce the manual burden of building sufficient

training data: First, we use the characteristics of the complexity types for picking out Case.

Second, we incorporate both supervised and unsupervised machine learning.

Keywords: Korean semantic analysis, Thematic Roles, Case prototypicality, Case, Machine

learning, Word meaning

1. Introduction

In the mid-1990s, much work was done on automatically acquiring linguistic knowledge from linguistic

resources such as corpora and machine-readable dictionaries (MRDs). Ribas [1] used syntactic selectional restrictions from a

parsed corpus. Pedersen [2] studied a system that automatically acquires the meaning of unknown nouns

and verbs from a corpus according to P. Kay’s view (1971) that human lexicons are largely organized as

concept taxonomies. Won-Seok Kang [3] defined a set of 106 semantic features based on WordNet’s

hyponymy for the English-Korean machine translation. Young-Hoon Kim [4] added thematic roles to the

meaning of a noun based on the classification of hierarchical semantic features in order to translate

Korean particles into English prepositions in Korean-English translation.

However, most of these studies share the following defects: First, the meaning of words

is represented by componential analysis under the semantic feature hypothesis. Second, they do not

consider Cases as the essential meaning of a word. Third, they use only the collocational information

among subject, predicate, and object. In other words, because they use Case particles only at the level of surface

Case, they pay little attention to the fact that a Case particle can be used to represent various deep Cases.

Note that Young-Me Jeong [5] asserted that just by a statistical processing of the collocational

information or mutual information, we can know that words clustered into the same categories are related


to each other in some way, but we do not know how they are related.

Componential analysis has been the main method used to represent and construct the meaning of words,

but it is problematic whether it is carried out manually or automatically. Above all, it is extremely difficult,

if not perhaps impossible in principle, to find a suitable, linguistically universal collection of semantic

primitives in which all words can be decomposed into their necessary properties. Even simple words

whose meanings seem straightforward are extremely difficult to characterize [6]. Furthermore, the

componential analysis requires a hierarchical taxonomy. The prime example of scientific taxonomies is

the classification of plants and animals based on the proposals made by the Swedish botanist Linnaeus.

The Thesaurus of English Words and Phrases by Mark Roget is representative of linguistic classification.

It took many years for such experts to build it. To make matters worse, never can a single classification

satisfy the ultimate need of NLP because a great variety of criteria depending on contexts need to apply

for classifications.

In addition, componential analysis might not be cognitively plausible, in that

humans, even linguists, cannot naturally enumerate the semantic features of a word, yet they can speak

fluently. Therefore, the knowledge that humans have for picking out Case (footnote 2) seems to be direct enough not

to require any inference in understanding a sentence. In other words, humans instantly grasp the meaning

of a word by its Case without the componential analysis. Only when there is ambiguity do they try to

analyze the meaning more deeply. However, in a general situation, they are not even conscious of any

ambiguities in the sentence. Accordingly, we should represent the knowledge in a direct way that

computers can process such a general situation well.

As a consequence, we should free ourselves not only from the hierarchical taxonomy, but also

from the procedural representation of knowledge bearing inference in mind. Hence we will define the

meaning of words in order to satisfy the following conditions: First, it should be plausible in view of

psycholinguistics. Second, computers can automatically acquire it. Finally, it should be adequate for

picking out the Case of each argument in a sentence. In order to show that the representation of word

meaning is feasible, we will construct it by machine learning on the basis of Case particles and the

collocational information from a large corpus. In addition, we will introduce two techniques that can

reduce the manual burden of building sufficient training data, which is a critical problem in practical

application of machine learning.

Footnote 1: The degree to which each word is a typical exemplar of each Case concept.

Footnote 2: In ‘Case particle’, Case means a surface Case, but in other contexts it means a deep Case or a thematic role.

2. Case Prototypicality and New Representation of Word Meaning

2.1 Word meaning and Case prototypicality

When we talk about word meaning, it is generally taken to refer to a lexicographical

meaning. However, we do not generally communicate with each other by the lexicographical meaning. In

addition, there is no agreement among linguists upon what the meaning is and this fact gives rise to

various types of semantics: Bunge (1974) classifies semantics into ten types, and Lyons (1981) into six

types. For example, behavioristic semantics, which is one of the semantics considered to be very peculiar

even though they have a long history, defines the meaning of an expression as a stimulus which causes it,

a response which it causes, or both of them. Also, cognitive linguists think that the meaning of a word

cannot be defined in the way that A is defined as B, but it should be defined as the relationship among

words like a semantic net. In the extreme, they think that the meaning of a word can be considered as the

knowledge about the world [7,8].

In view of NLP, how should the meaning be defined at all? To begin with, since it should exist as an

internal data in NLP systems, we cannot think it apart from a lexicon. The lexicon required by a

morphological analyzer will be different from that required by a discourse analyzer. Therefore, we should

consider the meaning of words, not in view of the answer to ‘What is it (e.g., love, life)?’, but in view of

‘To what linguistic processing is it pertinent?’. This implies that it is desirable to use the term in such a

way as the word meaning pertinent to morphological analysis or the one pertinent to semantic analysis. This

is because no definition of word meaning can settle a linguistic or philosophical question such

as ‘Is the definition correct or complete?’ This study discusses the word meaning pertinent to picking

out Cases. Hence, we will use the terms the meaning of words and the knowledge of words interchangeably as

the case may be.

The Case for an argument can be determined within the context of a sentence. However, we already

have previous knowledge about potential Cases, which helps us to understand various situations where

the word may be used. If we did not have such knowledge, we could never communicate with each other.

For example, if we know nothing about a word N, or about the Cases in which N may be used in different situations, we cannot

use N except in ‘What is N?’. Fortunately, we are able to infer the Cases by looking at its usage in

various contexts. This is the very acquisition process of the meaning of words.

Nelson (1974) insisted by the functional core concept theory that we acquire meaning as we

recognize the functions of objects by some other non-linguistic means and emphasized the role of

functional semantic features such as roll, spatter, move, etc. when children acquire the meaning of words

[9]. Rosch et al’s (1973) prototype theory is an approach developed to account for the representation of

meaning in adult language. According to the theory, the meaning of words is not a set of invariant features,

but rather a set of features that capture family resemblance. Some objects will be most typical of the

meaning of a word by sharing more of the features of the word than others. Certain features are more

important in determining class membership than others, but none are required by all members [9,10].

These two theories are similar to the traditional semantic feature theory to some degree, but also they

have critically conflicting aspects.

Footnote 2 (continued): So, we will use Case, deep Case, and thematic role as synonyms.

We will consider Cases as the functional core concept, hence Cases have a role of semantic features.

Accordingly, we define the meaning of words as representing to what degree each word is an exemplar of

each Case concept, or prototypicality. Case prototypicality will be used as a general term including both

Case and its prototypicality. Notice that we do not insist that Case is the smallest unit of linguistic

meaning, but we consider it as the smallest unit in a high level of cognitive process. Hence, Case

prototypicalities are not the whole meaning of a word, but directly relevant knowledge just for picking out the thematic

roles of arguments.

2.2 New representation of word meaning

Following the discussion in the previous section, we present the new representation of word meaning in Table 2

[11], which is based on the two hypotheses in Table 1.

Table 1. Hypotheses for word meaning representation

Hypothesis I: The meaning of a word is represented by its Case or thematic role in context at a surface level or intuitive level. The Case assumes the role of a semantic primitive.

Hypothesis II: Selectional restrictions are represented not by binary semantic features, but by probabilistic or fuzzy ones. There is no strict category boundary; rather, membership is the degree of similarity to the prototype.

Particles in Korean belong to an uninflected and closed word class. There are about 20 Case

particles except minute variants. When they occur after nominals, they are often called ‘postpositions’ in

contrast to ‘prepositions’ in English. In Chinese, there is an intervening part of speech (介詞) for this

function. For instance, ‘으로’, ‘向’, ‘に’, and ‘to’ denote an orientation in ‘그 탁자는 북쪽으로 놓여 있다’,

‘這卓子面向北方’, ‘そのテーブルは北にあります’, and ‘The table lies to the north’,

respectively. For denoting an instrument, ‘으로’, ‘以’, ‘で’, and ‘by’ are used in ‘회의로 결정하다’,

‘由會以決定’, ‘會議できめる’, and ‘conclude by a meeting’, respectively.

Their main use is to manifest the grammatical relations of words within a sentence. Case particles

are classified into seven major types: the nominative particle following a subject, the objective particle following

an object, and the like. Adverbial particles are used in a variety of ways depending upon the preceding

noun and the predicate. A Case particle manifests a surface Case in an n:m relationship, so that one Case

particle can be used to represent several deep Cases and vice versa. This phenomenon also occurs in

Chinese, English, Japanese, and the like in a similar way.

Table 2. Meaning representation of nouns and verbs

Meaning representation of a noun: $n = \{ (c_p, z) \}$, where n is a noun, p is a Case particle, c is the Case which may be assigned to the noun when it co-occurs with the Case particle p, and z is a prototypicality.

Meaning representation of a verb: $v = \{ (c_p, z) \}$, where v is a verb, p is a Case particle, c is the Case which the verb v may require when it co-occurs with the Case particle p, and z is a prototypicality.

We define the meaning of words as in Table 2 in order to reflect the fact that most

nouns can be used to represent GOAL, ATTR, INST, etc. (refer to Section 3) within a sentence, but the

likelihood that a given noun is used for each Case differs. In other words, we intend to reflect the

human intuition that 숟가락 swutkalak ‘spoon’ is more likely to be used as INST than as GOAL. By

the definition of Table 2, for example, the noun 수레 swuley ‘wagon’ is represented by

$\{ (\text{GOAL}_{ulo}, 0.936), (\text{ATTR}_{ulo}, 0.936), (\text{INST}_{ulo}, 2.321) \}$. The larger the numeric value is, the closer the word is to the prototype of the corresponding Case.

The verb 逃走하다 tocwuhata ‘flee’ is represented by $\{ (\text{GOAL}_{ulo}, 0.494), (\text{ATTR}_{ulo}, 0.371), (\text{INST}_{ulo}, 0.509) \}$. This

means that when 수레 swuley ‘wagon’ is used as an argument together with the Case particle 으로 ulo ‘to,

towards, as, into, for, of, from, with, etc.’, it has 0.936 as the meaning of GOAL, 0.936 as the meaning of

ATTR, and 2.321 as the meaning of INST. When 逃走하다 tocwuhata ‘flee’ is used together with the Case

particle 으로 ulo, the degree to which it requires GOAL as its argument is 0.494, ATTR 0.371, and

INST 0.509. This example shows that the word 逃走하다 tocwuhata ‘flee’ most strongly demands nouns

representing INST when used with the Case particle 으로 ulo, and nouns representing GOAL as a

second choice. Consequently, given a semantic category named GOAL, the concept of Case

prototypicality shows how typical the noun 수레 swuley ‘wagon’ is of that Case category

and how strongly the verb 逃走하다 tocwuhata ‘flee’ requires GOAL.
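The representation in Table 2 maps naturally onto a small lookup structure. The following Python sketch is illustrative only: the dictionary layout and the helper name `ranked_cases` are assumptions introduced here, not part of the original system, and the numbers are simply those quoted above for 수레 swuley and 逃走하다 tocwuhata.

```python
from typing import Dict, List, Tuple

# (Case, Case particle) -> prototypicality z; this layout is an assumption.
Meaning = Dict[Tuple[str, str], float]

# Values copied from the examples in the text.
swuley: Meaning = {("GOAL", "ulo"): 0.936, ("ATTR", "ulo"): 0.936, ("INST", "ulo"): 2.321}
tocwuhata: Meaning = {("GOAL", "ulo"): 0.494, ("ATTR", "ulo"): 0.371, ("INST", "ulo"): 0.509}

def ranked_cases(meaning: Meaning, particle: str) -> List[Tuple[str, float]]:
    """Return the Cases recorded for one particle, most prototypical first."""
    pairs = [(case, z) for (case, p), z in meaning.items() if p == particle]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)

noun_ranking = ranked_cases(swuley, "ulo")
verb_ranking = ranked_cases(tocwuhata, "ulo")
```

Under this layout, the first entry of each ranking is the Case the word is most typical of (or most strongly requires) with the given particle, which is INST for both words in the example.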

As Cases refer to the semantic relation between nominals (footnote 3) and the predicate, which go together like needle and

thread within a sentence, we treat both by means of semantic categories in the same way.

The above way of representing the meaning of words has the following advantages: First, the meaning can

be constructed from a corpus through machine learning. Second, the process of picking out the Case of an

argument may reduce to a remarkably plain mechanical task, which we will show later with Figure 1.

Finally, metaphor and metonymy, which are difficult to treat with componential analysis, can be

treated in the same way without any additional effort.

Footnote 3: This study treats only nouns.

For example, in the sentence 그녀의 마음은 사랑으로 가득찼다. Kunye-uy maum-un salang-ulo katukchassta. ‘Her mind was filled with love.’, 사랑 salang ‘love’ can be represented as having a

material role (INST), by metaphor, exactly as in the sentence 양동이는 물로 가득찼다. Yangtongi-nun

mwul-lo katukchassta. ‘The bucket was filled with water.’ However, traditional componential analysis

cannot classify 사랑 salang ‘love’ as a hyponym of material. Otherwise, it would have to treat in the same way all

possible cases in which the word may be used metaphorically. This would create a new hierarchical structure, and hence

the stability of the previous hierarchical structure, which is essential to componential

analysis, would collapse. The same is true of metonymy.

3. Learning of Case Concepts and Acquisition of Word Meaning

We experiment with the Case particle 으로 ulo, which is the most complex particle in Korean, for we

expect that any other particle can be handled in a similar way if we can deal with this particle

satisfactorily. We classify the thematic roles that the Case particle 으로 ulo (footnote 4) can Case-mark into three types:

GOAL for orientation, path, goal; INST for instrument, material, cause; and ATTR for qualification, attribute,

purpose. We adopt this coarse classification not only to avoid possible linguistic controversy, but also

because it is doubtful whether a more detailed classification is really needed for NLP [7,11]. For

example, one classification system suggested by linguists does not distinguish between instrument and

material, and another does not distinguish between instrument and cause. However, most linguists agree that Case

particles other than -으로 -ulo (instrumental particle), -에 -ey (dative particle), -가 -ka (nominative

particle), and -을 -ul (objective particle), listed in order of complexity and controversy, can manifest only one

to three thematic roles.

The process of experiment is as follows: First, we will extract triples [noun, Case particle, verb]

from a large corpus. Second, we will build training and test data from the extracted triples. Third, we will

make a computer learn the concepts of Cases by machine learning. Finally, we will define word meaning

by a set of Case prototypicalities.

Footnote 4: Refer to M. Song (1998) for more details of this classification.

3.1 Building pattern data

Morphological and partial syntactic analyzers are essential for extracting the collocational information

from a corpus. We used NM-KTS (footnote 5), the New Morphological analyzer and KAIST Tagging System. It has

an analytical accuracy rate of about 96% and a guess probability of less than 75% on unregistered

words. In partial syntactic analysis, it is difficult to extract only necessary arguments. To increase the

likelihood that an extracted noun is a necessary argument, this study extracts the triple [noun, Case particle, verb] if

and only if the verb immediately follows the Case particle.
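The adjacency rule just stated can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the input is taken to be a list of (romanized morpheme, tag) pairs from some morphological analyzer, the tag names `NOUN`, `CASE`, and `VERB` are hypothetical rather than the NM-KTS tag set, and the allomorphs 로/으로 are assumed to have been normalized to `ulo` beforehand.

```python
from typing import List, Tuple

Token = Tuple[str, str]        # (romanized morpheme, tag); tag names are hypothetical
Triple = Tuple[str, str, str]  # (noun, Case particle, verb)

def extract_triples(tokens: List[Token], particle: str = "ulo") -> List[Triple]:
    """Collect [noun, Case particle, verb] only when the verb directly follows the particle."""
    triples = []
    # Slide a window of three consecutive morphemes over the sentence.
    for (noun, t1), (part, t2), (verb, t3) in zip(tokens, tokens[1:], tokens[2:]):
        if t1 == "NOUN" and t2 == "CASE" and part == particle and t3 == "VERB":
            triples.append((noun, part, verb))
    return triples

# 旦熙가 學校로 갔다 'Dan-Hee went to school', after an assumed morphological analysis.
sentence = [("Dan-Hee", "NOUN"), ("ka", "CASE"),
            ("hakkyo", "NOUN"), ("ulo", "CASE"), ("kata", "VERB")]
# A made-up sequence in which another noun phrase separates the particle from the verb.
blocked = [("hakkyo", "NOUN"), ("ulo", "CASE"),
           ("cha", "NOUN"), ("lul", "CASE"), ("molta", "VERB")]

found = extract_triples(sentence)
skipped = extract_triples(blocked)
```

The first sentence yields one triple, while the second yields none because the verb does not immediately follow 으로 ulo. The rule trades recall for a higher chance that the extracted noun is a genuine argument of the verb.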

However, this strategy cannot completely handle complex sentences. For example, from the

sentence 그는 도끼로 썩은 枯木을 쳤다. kunun tokki-lo ssekun komokul chyessta. ‘He struck the

rotten old tree with an axe.’, the spurious expression 도끼로 썩다 tokki-lo ssekta ‘rot with an axe’ is extracted.

Avoiding this requires additional work to split a complex sentence into several simple ones, which is one of

the difficult problems still to be resolved in Korean syntactic analyzers [11,16]. In our experiment, 14,853

triples [noun, Case particle, verb] were extracted from the YSC-I corpus (footnote 6). From these triples, we exclude the

following ones:

(a) Triples whose nouns are not found in the Korean dictionary, because they either result from the

morphological analyzer’s errors or are proper nouns or compound nouns.

(b) Triples with a pseudo-noun plus 으로 ulo (for example, 主로 cwu-lo ‘mostly’, 때때로 ttayttay-lo

‘sometimes’, etc.) or with nouns followed by suffixes such as 上 sang ‘on’, 順 swun ‘in order of’, 式 sik

‘in way of’, and the like, because these are not actual nouns but adverbials.

Table 3. Corpus and Triples

Corpus | Extracted triples

旦熙가 學校로 갔다. Dan-Hee-ka hakkyo-lo kassta. ‘Dan-Hee went to school.’ | [學校 hakkyo ‘school’, 으로 ulo ‘to’, 가다 kata ‘go’]

翼熙가 房으로 왔다. Ik-Hee-ka pang-ulo wassta. ‘Ik-Hee came to the room.’ | [房 pang ‘room’, 으로 ulo ‘to’, 오다 ota ‘come’]

眞聖이가 집으로 갔다. Cinseng-ika cip-ulo kassta. ‘Cinseng went home.’ | [집 cip ‘house’, 으로 ulo ‘to’, 가다 kata ‘go’]

철수가 學校로 들어갔다. Chelswu-ka hakkyo-lo tulekassta. ‘Chelswu went into the school.’ | [學校 hakkyo ‘school’, 으로 ulo ‘into’, 들어가다 tulekata ‘enter’]

영희가 房으로 움직였다. Yenghee-ka pang-ulo wumcikyessta. ‘Yenghee moved into the room.’ | [房 pang ‘room’, 으로 ulo ‘into’, 움직이다 wumcikita ‘move’]

After this treatment, 12,187 triples remain and these are called Pattern Set (PSET). There are 3,727

different verbs among them. To clarify the above procedure, here is a simple example. Suppose that a

corpus consists of the left part in Table 3. Then, the extracted triples are in the right part. In the future

exemplification, we will continue to quote from this table.

3.2 Building of training data and test data

Building sufficient training data is a critical problem in machine learning. To reduce the burden, this

study uses the collocational information related to the Case particle. Let [noun, Case particle] be a noun

5

6

NM was developed in our laboratory, KTS in KAIST.

Yonsei Corpus I (about 3 million words) was compiled by the Center of Korean Lexicography at Yonsei University.

7

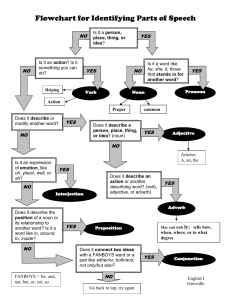

part and [Case particle, verb] a verb part out of [noun, Case particle, verb]. Then, we can classify the

information type for picking out the Case of arguments as in Table 4. We can see here that Case particles

in Korean, to an extent, have a role of selectional restrictions in that Case particles restrict nouns that can

occur before 으로 ulo in connection with verbs.

Table 4. Complexity types for picking out Case

Type | Definition

I | Only the verb part can determine the Case of its argument regardless of the noun part.

II | Both noun and verb parts should be considered.

III | The relation to other arguments should be considered.

For example, the expression -으로 생각하다 -ulo sayngkakhata ‘think as’ is COMPLEXITY-TYPE I

because we are sure that a noun adequate for ATTR will come up as an argument without really looking at

the noun. Since the meaning of verbs is unique in this type, it will not get any influence from the noun.

Thus only the verb part will determine the thematic role of the noun. COMPLEXITY-TYPE II is exemplified

in the expression -으로 살다 -ulo salta ‘live on’. 물 mwul ‘water’ in 물로 살다 mwul-lo salta ‘live

on water’ is construed as INST. 先生 sensayng ‘teacher’ in 先生으로 살다 sensayng-ulo salta ‘live as a

teacher’ is construed as ATTR. 물 mwul ‘water’ in 물로 變하다 mwul-lo pyenhata ‘be turned into water’

is construed as ATTR.

Earlier studies [13] assumed that the noun part alone can determine the Case. However, we exclude

this type because, after thorough investigation, it does not seem to occur in practice. For

instance, looking at 東쪽으로 tongccok-ulo ‘to the east’, we might assume that we can construe 東쪽

tongccok ‘east’ as GOAL without looking at the following verb. However, we should construe it as ATTR if

the verb is one such as 變하다 pyenhata ‘be turned into’ or 생각하다 sayngkakhata ‘think’.

COMPLEXITY-TYPE III is the case where we cannot determine the Case only with the noun part and verb

part. In 배로 가다 pay-lo kata ‘go by boat’, for example, 배 pay ‘boat’ in 濟州島에 배로 가다

Ceycwuto-ey pay-lo kata ‘go by boat to Ceycwu island’ is construed as INST, but 배 pay ‘boat’ in 닻을

올리러 배로 가다 tach-ul ollile pay-lo kata ‘go to the boat to weigh anchor’, as GOAL. This

phenomenon shows that such an ambiguity can be resolved only by referring to the other parts (in this

case, 濟州島에 Ceycwuto-ey ‘to Ceycwu island’ and 닻을 올리러 tach-ul ollile ‘to weigh anchor’).

One might consider this as the inherent limitation of the meaning representation of Table 2.

However, this problem is related to the technique of semantic analysis rather than the meaning


representation itself. If we use a more sophisticated technique (for example, to consider the relations

among other arguments or Case particles) for picking out Case, which will possibly be our research topic

in future, we can solve such an ambiguity. For example, Case particle -에 -ey ‘at’ in 濟州島에

Ceycwuto-ey ‘to Ceycwu island’ will be construed as GOAL because it belongs to COMPLEXITY-TYPE I.

Hence, according to the theta criterion of Chomsky (Each argument is assigned one and only one

thematic role, and each thematic role is assigned to one and only one argument.) [14], we can know that

배 pay ‘boat’ in 濟州島에 배로 가다 Ceycwuto-ey pay-lo kata ‘go by boat to Ceycwu island’ should

be construed as INST.

In case of COMPLEXITY-TYPE I, therefore, we can pick out the Case of an argument only with the

verb part without any consideration of the semantic relation between the predicate and its argument. This

fact can considerably reduce the manual work for preparing a large training data. However, it may also be

a factor that drops the accuracy of machine learning because there may be errors in human’s intuition. It is

relatively easy to pick out thematic roles in sentences that belong to COMPLEXITY-TYPE I and II because

we need to observe only the noun part and the verb one. Especially, COMPLEXITY-TYPE I is very simple,

hence we can make a computer learn Case concepts relatively easily by putting COMPLEXITY-TYPE I to

good use. After such learning, a computer can deal with COMPLEXITY-TYPE II by means of the fairly

simple algorithm (even if further research is needed) described in Section 3.5. However, for COMPLEXITY-TYPE

III, as we mentioned above, semantic analysis at the sentence level is required. Notice that the

meaning representation per se under componential analysis cannot perform semantic

analysis either, let alone ambiguity resolution.

To prepare training and test data, we select verbs under COMPLEXITY-TYPE I from the PSET and

then manually tag these verbs with Cases. For example, since 가다 kata ‘go’ and 오다 ota ‘come’ belong to

COMPLEXITY-TYPE I, they yield the following result: { [가다 kata ‘go’, GOAL], [오다 ota ‘come’,

GOAL] }. The training set (TSET) is a set of quadruples [noun, Case particle, verb, Case], which are

automatically created from the above tagged set. For example, we will have the following quadruple set:

{ [學校 hakkyo ‘school’, 으로 ulo ‘to’, 가다 kata ‘go’, GOAL], [房 pang ‘room’, 으로 ulo ‘to’, 오다

ota ‘come’, GOAL], [집 cip ‘house’, 으로 ulo ‘to’, 가다 kata ‘go’, GOAL] }. Notice that the PSET is a

simple set of patterns without Case-tagging, and the TSET is a set of patterns with Case-tagging for

machine learning. Only the verbs 가다 kata ‘go’ and 오다 ota ‘come’ were Case-tagged manually.

Because we exploit the characteristics of COMPLEXITY-TYPE I, there is no need to Case-tag [學校

hakkyo ‘school’, 가다 kata ‘go’], [房 pang ‘room’, 오다 ota ‘come’], and [집 cip ‘house’, 가다 kata

‘go’] individually. The larger the PSET is, the more the burden of manual tagging is reduced, since a

verb co-occurs with many nouns in a corpus. After that, selecting several verbs from the PSET, we build the

test data (ESET), which consist of a set of [verb, Case]. The ESET and TSET are mutually exclusive. In the above example, the

ESET is { [들어가다 tulekata ‘enter’, GOAL] }. In addition, the unspecified set (USET), which is used later

for unsupervised learning, is defined as PSET - (TSET + ESET). In the above example, the USET becomes

{ [房 pang ‘room’, 으로 ulo ‘to’, 움직이다 wumcikita ‘move’] }.
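The partition of the PSET into TSET, ESET, and USET can be illustrated with the five triples of Table 3. The sketch below is a minimal Python rendering under our own assumptions: the function name `split_pset` and the use of romanized strings are hypothetical, and only the verb tags come from the running example.

```python
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (noun, Case particle, verb), romanized

def split_pset(pset: List[Triple], train_tags: Dict[str, str], test_tags: Dict[str, str]):
    """Derive TSET quadruples, ESET [verb, Case] pairs, and the USET remainder."""
    # Every triple whose verb was hand-tagged inherits that verb's Case.
    tset = [(n, p, v, train_tags[v]) for n, p, v in pset if v in train_tags]
    eset = sorted(test_tags.items())
    # USET = PSET - (TSET + ESET): triples whose verb carries no manual tag.
    uset = [t for t in pset if t[2] not in train_tags and t[2] not in test_tags]
    return tset, eset, uset

pset = [("hakkyo", "ulo", "kata"), ("pang", "ulo", "ota"), ("cip", "ulo", "kata"),
        ("hakkyo", "ulo", "tulekata"), ("pang", "ulo", "wumcikita")]
train_tags = {"kata": "GOAL", "ota": "GOAL"}   # the two manually tagged TYPE I verbs
test_tags = {"tulekata": "GOAL"}               # held-out test verb

tset, eset, uset = split_pset(pset, train_tags, test_tags)
```

Two manual tags produce three training quadruples here, which is the labor-saving effect described above: the number of automatically labeled instances grows with the number of nouns each tagged verb co-occurs with.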

In this experiment, we select 60 training verbs and 30 test verbs, considering how easily we can

manually Case-tag the verbs and how often they occur in the PSET. Note that the set of training verbs does

not intersect that of test verbs. The easiness (or reliability) of Case-tagging is needed to enhance the

accuracy of training data and test data because it is very difficult for even humans to Case-tag (i.e., pick

out thematic roles) without any controversy according to the classification criteria. In the long run,

controversial training data and test data will prevent us from evaluating the result of this experiment

correctly. Also, the criterion of high frequency helps to get more training data automatically. As a result,

1,466 training data were automatically created from 60 tagged verbs. This means that we could get 1,466

training data by tagging only 60 verbs.

3.3 Case learning algorithm

There are five paradigms for machine learning: neural networks, instance-based or case-based

learning, genetic algorithms, rule induction, and analytic learning. The reasons for the distinct identities

of these paradigms are more historical than scientific. The different communities have their origins in

different traditions, and they rely on different basic metaphors. For instance, proponents of neural

networks emphasize analogies to neurobiology, case-based researchers to human memory, students of

genetic algorithms to evolution, specialists in rule induction to heuristic search, and backers of analytic

methods to reasoning in formal logic [15]. The main reason that we adopt the

neural network paradigm among them is cognitive reality. That is, we do not make

any conscious calculation to pick out thematic roles in a sentence, but seem to respond (or understand)

instantly and reflexively by means of a neurobiological mechanism and repetitive learning.

Case Learner Algorithm (CLA) in Table 6 is based on the perceptron revision method (PRM) which

is an incremental approach to inducing linear threshold units (LTUs) using the gradient descent search

[15,16]. If we let c be a Case, a verb is represented by { (n, f) }, where n is a noun which co-occurs with

the verb in the TSET, and f is the relative frequency $f_{n,v} / f_n$ of the noun in the TSET. Here, $f_{n,v}$ is the

frequency with which the noun co-occurs with the verb, and $f_n$ is the total frequency of the noun in the TSET.

Then, a training instance is represented by [v, c], where c is the Case-tag for the verb v. The set of such

training instances (ISET) is one input of the CLA. For example, the ISET becomes { $i_1$ = 가다 kata ‘go’ =

[ { (學校 hakkyo ‘school’, 1.0), (집 cip ‘house’, 1.0) }, GOAL ], $i_2$ = 오다 ota ‘come’ = [ { (房 pang ‘room’, 1.0) }, GOAL ] }.
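To make the relative frequency $f_{n,v} / f_n$ concrete, the following Python sketch derives an ISET from TSET quadruples. It is a sketch under our own assumptions (the function name `build_iset` and the dictionary layout are hypothetical), using the three quadruples of the running example.

```python
from collections import Counter, defaultdict

def build_iset(tset):
    """Map each verb to ({noun: f_nv / f_n}, Case) using counts taken from the TSET."""
    noun_total = Counter(n for n, _, _, _ in tset)       # f_n
    pair_count = Counter((n, v) for n, _, v, _ in tset)  # f_{n,v}
    verb_case = {v: c for _, _, v, c in tset}
    features = defaultdict(dict)
    for (n, v), f_nv in pair_count.items():
        features[v][n] = f_nv / noun_total[n]
    return {v: (features[v], c) for v, c in verb_case.items()}

tset = [("hakkyo", "ulo", "kata", "GOAL"),
        ("pang", "ulo", "ota", "GOAL"),
        ("cip", "ulo", "kata", "GOAL")]
iset = build_iset(tset)
```

Each noun in this toy TSET occurs with a single verb, so every relative frequency is 1.0. In a larger TSET, a noun shared by several verbs would contribute a fraction to each of them.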

The other input is a set of hypothesized LTUs (HSET), which is also an output. In this study, since


we consider only three Case types, the HSET consists of three LTUs. The learning goal of the CLA is to

find a relevant HSET as an output. LTU represents an intensional concept of a Case, so that there is one

LTU per each Case concept. Much of the work on threshold concepts has been done within the

‘connectionist’ or ‘neural network’ paradigm, which typically uses a network notation to describe

acquired knowledge, based on an analogy to structures in the brain. In this study, LTU is defined as in

Table 5.

Table 5. LTU definition

If $\sum_i w_i f_i \ge \theta$ then $c_k$, where $c_k \in \{\text{GOAL}, \text{ATTR}, \text{INST}\}$, $w_i$ is a weight for a noun $n_i$ (in other words, the degree to which the noun contributes to the LTU concept), $f_i$ is the attribute value of the noun $n_i$, and $\theta$ is a threshold.

Notice that nouns are used as attributes of verbs in learning Case concepts. This means that if a verb

가다 kata ‘go’ requires GOAL as its argument, the nouns co-occurring with it will be used as GOAL, so that

the nouns can be regarded as inherently having the Case concept. One Case concept $c_k$ is represented by

whether the sum $\sum_i w_i f_i$ is greater than or equal to a certain threshold ($\theta$) or not. Needless to say, the nouns

may also have another Case concept. However, during the machine learning, the weights of nouns that are

frequently used as $c_k$ in the ISET are increased, and otherwise they are decreased. Eventually, the

nouns come to have weights that reflect their own characteristics in the ISET. In line 5 of Table 6,

$LTU_k$ is denoted by $H_k = [w, \theta_k]$, where w is initialized to an empty set and the threshold $\theta_k$ should be set to a

value chosen according to the characteristics of the ISET, in order to speed up convergence and to prevent

divergence within a given number of iterations.

In this experiment, H1 is prepared for GOAL, H2 for ATTR, and H3 for INST. The HSET classifies the verbs in the ISET into Case concept categories. We multiply each attribute’s value (i.e., the relative frequency fi) by its weight (wi), sum the products, and check whether the result reaches the current threshold (θ). If the verb satisfies this condition, we consider it as belonging to the Case (ck) concept category. Note that the CLA uses numeric values to denote Case types: 1 for GOAL, 2 for ATTR, and 3 for INST. For example, let H1 be ‘if 0.5 f1 + 0.4 f2 + 0.6 f3 ≥ 0.5 then GOAL’, where 0.5 is the weight for 학교 hakkyo ‘school’, 0.4 for 집 cip ‘house’, and 0.6 for 房 pang ‘room’, and 0.5 is the threshold. If the verb 가다 kata ‘go’ is applied to H1, then 0.5 × 1.0 + 0.4 × 1.0 + 0.6 × 0.0 = 0.9 ≥ 0.5, where the two 1.0s and the one 0.0 are the relative frequencies of the respective nouns, so we consider it as GOAL. This means that the verb 가다 kata ‘go’ requires GOAL as its argument. In line 29 of Table 6, if the instance satisfies the condition of Hk, ck takes the value k and Hk is said to predict it as positive; otherwise ck takes the value -1 and Hk is said to predict it as negative.
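The prediction step of a single LTU can be sketched in Python as follows. This is a minimal illustration of the worked example, not the authors' implementation; the function names and the use of romanized nouns as dictionary keys are assumptions made for the sketch.

```python
def ltu_sum(weights, freqs):
    """Weighted sum of relative frequencies: sum over i of w_i * f_i."""
    return sum(weights.get(noun, 0.0) * f for noun, f in freqs.items())


def ltu_predict(weights, threshold, freqs, k):
    """Return k if the LTU fires (sum >= threshold), otherwise -1."""
    return k if ltu_sum(weights, freqs) >= threshold else -1


# H1 from the worked example: weights for hakkyo, cip, pang; threshold 0.5.
H1_WEIGHTS = {"hakkyo": 0.5, "cip": 0.4, "pang": 0.6}
H1_THRESHOLD = 0.5

# kata 'go' with the relative frequencies used in the example.
kata = {"hakkyo": 1.0, "cip": 1.0, "pang": 0.0}

print(ltu_predict(H1_WEIGHTS, H1_THRESHOLD, kata, k=1))  # 1 stands for GOAL
```

Nouns that the LTU has not seen contribute nothing to the sum in this sketch, which is one possible choice for handling unknown attributes.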

Table 6. Case learning algorithm (CLA)

 1  Inputs
 2    ISET: a set of training instances it = [v, c], where v =
 3      { (ni, fi) | ni is a noun, fi is the relative frequency of ni },
 4      and c is the given Case.
 5    HSET: a set of Hk = [w, θ], where w = { wi | wi is
 6      the weight for the noun ni }, and θ is a threshold.
 7  Output
 8    HSET: a revised one
 9  Parameters
10    α: a momentum term that reduces oscillation
11    η: a gain term that determines revision rate
12    λ: a gain term for thresholds
13  Variables
14    Δwi(h): a current delta of weight, where h denotes a current phase
15    Δwi(h-1): a previous delta of weight, where h-1 denotes a previous phase
16    s: the direction of weight change
17  Procedure error_check(ck, c, k: input; s: output)
18  {
19    if ck = -1 and k = c then s = 1;
20    else if ck > -1 and k ≠ c then s = -1;
21    else s = 0;
22  }
23  Procedure CLA(ISET, HSET: input; HSET: output)
24  {
25    for each training instance it in ISET
26    {
27      for each Hk in HSET
28      {
29        ck = Case which Hk predicts for it[v, c];
30        error_check(ck, c, k, s);
31        if (s = 0) continue;
32        for each attribute ni of v[ni, fi] of it[v, c]
33        {
34          wi = the weight for ni in Hk;
35          Δwi(h) = η s fi + α Δwi(h-1);
36          wi = wi + Δwi(h);
37        }
38        θk = θk + s λ;
39      }
40    }
41    return the revised HSET;
42  }

In principle, an arbitrary LTU can characterize any extensional definition that can be separated by a

single hyperplane drawn through the instance space, with the weights specifying the orientation of the

hyperplane and the threshold giving its location along a perpendicular. For this reason, target concepts

that can be represented by linear units are often referred to as being linearly separable [15]. Accordingly,

since the HSET acts as a set of classifiers, it can decide the Case concepts to which a new verb belongs. In line 29, the weight wi of Hk for a newly added noun ni is set to fi, because fi reflects the relative importance of the noun in the ISET.

However, the results of this experiment suggest that this initial setting of wi influences only the speed of convergence. In lines 17-22, if Hk predicts that the Case for instance it is negative but it is tagged positive in the ISET, we set the direction of change (s) to 1 so as to turn the prediction positive by increasing the weights. Conversely, if Hk predicts that it is positive but it is negative in the ISET, we set s to -1 so as to decrease the weights. In every other case, s is set to 0 and no change is made, because the prediction is already correct.
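The error check of lines 17-22 translates almost directly into Python. The sketch below returns s instead of using an output parameter, which is a presentational choice of this illustration rather than something stated in Table 6.

```python
def error_check(ck, c, k):
    """Direction of change for LTU k, given its prediction ck and the tagged Case c."""
    if ck == -1 and k == c:
        return 1    # predicted negative but tagged positive: increase weights
    if ck > -1 and k != c:
        return -1   # predicted positive but tagged negative: decrease weights
    return 0        # prediction agrees with the tag: no change


# H1 (k = 1, GOAL) rejects an instance that is tagged GOAL (c = 1).
print(error_check(ck=-1, c=1, k=1))  # 1
```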

In lines 32-37, where the weights are actually changed, a momentum term prevents oscillation by carrying a proportion (α) of the previous delta (Δwi(h-1)) into the current weight (wi). In addition, we divide the gain term, which determines the revision rate, into two: one for the weight delta and the other for the threshold. These two modifications are very important in making the CLA converge, within a finite number of iterations, on an HSET that makes no errors on the training data. We observed that the HSET diverges within the given iterations if these values are set improperly. We also use the perceptron convergence procedure (PCP), which induces the HSET nonincrementally by applying the CLA iteratively to the ISET until it produces an HSET that makes no errors or until it exceeds a specified number of iterations.
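The weight revision of lines 32-37 of Table 6 can be sketched as below. The sketch covers only the weight update with momentum and leaves out the threshold update of line 38. The function name, the dictionary layout, and the gain and momentum values in the usage lines are illustrative assumptions, not the settings used in the experiment.

```python
def update_weights(weights, prev_deltas, freqs, s, gain, momentum):
    """Revise the weights of one LTU for one training instance, in place.

    weights, prev_deltas: dicts mapping noun -> float
    freqs: dict mapping noun -> relative frequency f_i
    s: direction of change (+1 or -1) from the error check
    """
    for noun, f in freqs.items():
        # A newly seen noun starts with weight f_i, as described in the text.
        current = weights.get(noun, f)
        # Current delta = gain * s * f_i + momentum * previous delta.
        delta = gain * s * f + momentum * prev_deltas.get(noun, 0.0)
        weights[noun] = current + delta
        prev_deltas[noun] = delta
    return weights


w = {"pang": 0.6}
deltas = {}
update_weights(w, deltas, {"pang": 1.0}, s=1, gain=0.1, momentum=0.5)
update_weights(w, deltas, {"pang": 1.0}, s=1, gain=0.1, momentum=0.5)
print(w, deltas)
```

On the second call the delta is larger than on the first because half of the previous delta is carried over, which is the smoothing effect the momentum term is meant to provide.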

The PCP is guaranteed to converge in a finite number of iterations on an HSET that makes no errors on the ISET, provided that the target concepts are linearly separable [15]. Our system starts with the TSET in a supervised learning mode. After completing the supervised training with the TSET, it switches to an unsupervised mode with the USET. The HSET resulting from the training predicts Case concept categories for the verbs in the USET. For example, in [房 pang ‘room’, 으로 ulo ‘to’, 움직이다 wumcikita ‘move’], 움직이다 wumcikita ‘move’ is represented by [(房 pang ‘room’, 1.0)], and applying it to H1 gives 0.5 × 0.0 + 0.4 × 0.0 + 0.6 × 1.0 = 0.6 ≥ 0.5, thereby yielding GOAL. This process is applied to H2 and H3 in turn. From the USET, we extract the verbs predicted to have a unique Case7. We then select a fixed number of the extracted verbs, in descending order of sum, and add them to the TSET.

We then repeat supervised machine learning with the resultant TSET. The smaller the number of verbs added per round, the better the accuracy of the system, but the slower the convergence; it is set to 10 in this experiment. To give an example of this procedure, [房 pang ‘room’, 으로 ulo ‘to’, GOAL] is added to the existing TSET. This process can be construed as the computer making training data for itself. That is, by selecting highly reliable instances (i.e., those with higher prototypicality) from the predicted results, we give these instances the same standing as the training data manually tagged by humans. This process continues iteratively until no more such verbs can be selected. Incorporating both supervised and unsupervised learning serves the same purpose as using the COMPLEXITY-TYPE information in Table 4: it reduces the manual effort required to build a large set of training data.
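One round of the selection step in the unsupervised mode can be sketched as follows, under several assumptions: the HSET is held as a dictionary from Case name to a (weights, threshold) pair, the INST unit and the verbs verb_x and verb_y are hypothetical data invented for the illustration, and n is the number of verbs added per round.

```python
def select_confident(hset, uset, n):
    """Pick up to n verbs that exactly one LTU accepts, highest sum first.

    hset: dict mapping Case name -> (weights, threshold)
    uset: dict mapping verb -> {noun: relative frequency}
    Returns a list of (verb, case, sum) tuples to be added to the TSET.
    """
    candidates = []
    for verb, freqs in uset.items():
        fired = []
        for case, (weights, threshold) in hset.items():
            total = sum(weights.get(noun, 0.0) * f for noun, f in freqs.items())
            if total >= threshold:
                fired.append((case, total))
        if len(fired) == 1:  # positive in one LTU only, negative in the others
            case, total = fired[0]
            candidates.append((verb, case, total))
    candidates.sort(key=lambda item: item[2], reverse=True)
    return candidates[:n]


hset = {
    "GOAL": ({"hakkyo": 0.5, "cip": 0.4, "pang": 0.6}, 0.5),
    "INST": ({"swuley": 0.9, "pang": 0.1}, 0.5),  # hypothetical unit
}
uset = {
    "wumcikita": {"pang": 1.0},
    "verb_x": {"swuley": 1.0},               # hypothetical verb
    "verb_y": {"pang": 1.0, "swuley": 1.0},  # hypothetical, fires both units
}
for verb, case, total in select_confident(hset, uset, n=10):
    print(verb, case, round(total, 3))
```

In this toy data verb_y is dropped because both units accept it, so only verbs with a unique predicted Case are promoted to training data.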

In addition, this study compares incremental learning with non-incremental learning in terms of accuracy. In the incremental learning mode, the newly selected verbs are moved from the USET into the TSET and deleted from the USET so that they are not selected again. In the non-incremental learning mode, by contrast, all the verbs selected so far are discarded each time (i.e., the TSET is set to an empty set), and the system selects from the PSET, in order of prototypicality, the same number of verbs as were in the TSET plus the additional verbs for the current round. Note that since all verbs in the TSET belong to a unique Case concept category, they are positive for their tagged (or predicted) Case and negative for the others.

3.4 Case prototypicality of nouns and verbs

Prototypicality means the degree to which a word is close to an exemplar of a Case concept category. The prototypicality of a verb for each Case is calculated from the left part (sum = Σ wi fi) of the LTUs obtained by the above learning process and is scaled to between 0 and 1 by the sigmoid function 1 / (1 + e^(θk − sum)). The prototypicality of a noun for each Case is wi / θk in the corresponding LTU, where wi is the weight of the noun. We regard wi as prototypicality because it represents the power to discriminate the Case in the LTU. For example, in H1 (if 0.5 f1 + 0.4 f2 + 0.6 f3 ≥ 0.5 then GOAL), the noun 學校 hakkyo ‘school’ has 0.5 / 0.5 = 1.0, 집 cip ‘house’ has 0.4 / 0.5 = 0.8, and 房 pang ‘room’ has 0.6 / 0.5 = 1.2 as its prototypicality for GOAL.

7 It is positive in only one of H1, H2, and H3 and negative in the other two.

The greater the value, the more likely the noun is to be used as GOAL when it occurs with the Case particle 으로 ulo. In other words, the noun connotes the meaning of GOAL in proportion to this numeric value. As for the verb 움직이다 wumcikita ‘move’, since the sum is 0.6 and θk is 0.5 for H1, its prototypicality for GOAL is 1 / (1 + e^(0.5 − 0.6)) ≈ 0.525, which represents the degree to which the verb requires GOAL as its argument when it is used with the Case particle 으로 ulo. If this value is very high, the verb alone can determine the Case of its argument without considering the noun.
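The two prototypicality measures can be checked numerically with a short Python sketch. It assumes that the exponent of the sigmoid is the threshold minus the sum, as in the worked example for 움직이다 wumcikita ‘move’; the function names are illustrative.

```python
import math


def noun_prototypicality(weight, threshold):
    """Noun prototypicality: weight divided by the LTU threshold."""
    if threshold <= 0:
        raise ValueError("threshold must be positive for normalization")
    return weight / threshold


def verb_prototypicality(total, threshold):
    """Verb prototypicality: sigmoid 1 / (1 + e^(threshold - sum))."""
    return 1.0 / (1.0 + math.exp(threshold - total))


# H1 with threshold 0.5: pang 'room' has weight 0.6.
print(round(noun_prototypicality(0.6, 0.5), 3))
# wumcikita 'move' has sum 0.6 on H1.
print(round(verb_prototypicality(0.6, 0.5), 3))
```

A verb whose sum equals the threshold gets exactly 0.5 under this formulation, so values above 0.5 correspond to verbs that the LTU accepts.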

Note that, because wi is divided by θk for normalization, the thresholds should be kept positive by the time the CLA is completed; this is done by properly initializing the other parameters (lines 10-12 of Table 6). Geun-Bae Lee [17] reported that no way of transferring neural-net-based knowledge to other systems was known. We address this issue by translating the knowledge acquired through a neural-net mechanism into a symbolic representation using the method described above.

4. Experimental Results

In this experiment, θk is initially set to 0.5, and the three parameters in lines 10-12 of Table 6 are set to 0.07, 0.0005, and 0.03. The experiment showed that, for fast convergence, these parameters need to be set to values that keep the threshold near its initial value during training. When they were set improperly, divergence was observed within the given iterations. Tables 7 and 8 show partial results of this experiment. According to the definitions in Table 2, for example, the noun 수레 swuley ‘wagon’ is represented by { (GOALulo, 0.936), (ATTRulo, 0.936), (INSTulo, 2.321) } and the verb 逃走하다 tocwuhata ‘flight’ by { (GOALulo, 0.494), (ATTRulo, 0.371), (INSTulo, 0.509) }. Given word meanings represented in this way, how can we pick out the Case of an argument?

Table 7. Case prototypicalities of nouns

Noun                       GOAL     ATTR     INST
swuley ‘wagon’             0.936    0.936    2.321
kakwu ‘furniture’          0.936    3.310    2.169
kasum ‘breast’             9.472    0.262    0.571
kaul ‘autumn’              2.203    3.469    1.861
kaceng ‘household’        -0.006   -1.437   -0.006
sikmwul ‘family’           0.936    3.310    2.169
kanpwu ‘plant’             0.936    2.357    0.922
kyeyhoyk ‘plan’            2.357    0.936    0.922
kokayk ‘customer’          0.936    0.936    2.321
kokyo ‘high school’       -0.654    2.516    2.169
kongkan ‘space’            1.414   -1.437   -1.415
kolye ‘consideration’      2.357    2.203    2.169
kwenlyek ‘power’           0.936    0.936    2.321
kopal ‘accusation’         0.936    0.936    2.321

Table 8. Case prototypicalities of verbs

Verb                        GOAL     ATTR     INST
tocwuhata ‘flight’          0.494    0.371    0.509
selchitoyta ‘be set’        0.529    0.262    0.545
kkiwuta ‘seal’              0.493    0.649    0.491
namta ‘remain’              0.505    0.381    0.218
palcenhata ‘develop’        0.649    0.493    0.491
nophita ‘heighten’          0.649    0.493    0.491
nulkekata ‘grow old’        0.388    0.388    0.387
icwuhata ‘move’             0.887    0.633    0.632
calicapta ‘seat’            0.388    0.388    0.387
hapuyhata ‘consent’         0.493    0.493    0.648
cenmangtoyta ‘prospect’     0.013    0.554    0.605
cencinhata ‘advance’        0.633    0.792    0.632
phatantoyta ‘judge’         0.009    0.751    0.486
cicenghata ‘designate’      0.388    0.388    0.387

If we try to grasp the Case by rules, we need as many rules as the number of noun categories × the number of Case particle categories × the number of verb categories. Seong-Hee Cheon [18] studied a selectional network of sense that represents rules for determining thematic roles from the Case particle and the semantic features of the noun and verb. It requires many case-by-case rules that must be written manually. Moreover, the semantic features of every noun and verb must be tagged manually. Our method avoids this excessive manual work, which is the merit of a learning-based system over a rule-based one. For example, in 수레로 逃走하다 swuley-lo tocwuhata ‘flight by wagon’, to pick out the Case for the argument 수레 swuley ‘wagon’, we can simply add the noun prototypicality and the verb prototypicality for each Case, as in Figure 1. We then take the Case with the greatest sum as its Case. This is a typical working mechanism of a neural network.

Word                             GOAL      ATTR      INST
수레 swuley ‘wagon’              0.936     0.936     2.321
逃走하다 tocwuhata ‘flight’    + 0.494   + 0.371   + 0.509
Sum of prototypicalities       = 1.430   = 1.307   = 2.830

Figure 1. Mechanism for picking out Case

However, Figure 1 is too naive, because this study does not normalize the prototypicalities of nouns and verbs. Further work is required before they can simply be added. In addition, we can put a weight on verbs, since they play the role of head in a sentence. This will produce a more sophisticated neural net. In this model, picking out the Case amounts to finding the i ∈ { GOAL, ATTR, INST } that maximizes (Ni + kVi), where k is the weight for verbs, Ni is the Case prototypicality of the noun, and Vi is that of the verb. From a cognitive point of view, Figure 1 can be interpreted as follows: the moment we see 수레 swuley ‘wagon’ and the Case particle 으로 ulo, the possibilities that the noun plays the role of GOAL, ATTR, and INST are activated in our memory in proportion to their Case prototypicalities. As soon as we see 逃走하다 tocwuhata ‘flight’, we select the Case with the highest sum of Case prototypicalities.
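The selection rule can be illustrated with the values reported for 수레 swuley ‘wagon’ and 逃走하다 tocwuhata ‘flight’. The sketch below is a minimal reading of the formula max over i of (Ni + kVi); the function name and the default k = 1.0, which reproduces the plain sum of Figure 1, are assumptions of the illustration.

```python
CASES = ("GOAL", "ATTR", "INST")


def pick_case(noun_proto, verb_proto, k=1.0):
    """Return the Case maximizing N_i + k * V_i, together with all scores."""
    scores = {case: noun_proto[case] + k * verb_proto[case] for case in CASES}
    best = max(CASES, key=lambda case: scores[case])
    return best, scores


swuley = {"GOAL": 0.936, "ATTR": 0.936, "INST": 2.321}
tocwuhata = {"GOAL": 0.494, "ATTR": 0.371, "INST": 0.509}

best, scores = pick_case(swuley, tocwuhata)
print(best)  # INST
```

Raising k gives the verb more influence on the decision; with unnormalized prototypicalities, as noted above, the noun scores can otherwise dominate the sum.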

Table 9 summarizes the experimental results evaluated with the ESET. We obtained an accuracy of 57.76% with supervised learning alone, but 73.45% by combining supervised and unsupervised learning, since the unsupervised learning can compensate for the small size (or data sparseness) of the TSET that was initially built by hand. Supervised learning shows the same accuracy in both the incremental and non-incremental modes because the PCP runs non-incrementally even in supervised mode. With combined learning, incremental learning performed better than non-incremental learning (73.45% versus 62.72%), seemingly because incremental learning preserves the initial training data to the end. However, we cannot draw a firm conclusion from this experiment alone.

Table 9. Accuracy of the experimental results

Manner \ Mode           Incremental learning    Non-incremental learning
Supervised learning     57.76%                  57.76%
Combined learning       73.45%                  62.72%

Since we used a real corpus rather than artificial data for this experiment, there may be many sources of error. First, the original PSET contains triples erroneously extracted by the partial syntactic analyzer in Section 3.1. We tried to limit such errors by completely excluding adverbials and words not registered in the Korean dictionary. However, it is very difficult to distinguish necessary arguments from optional ones, even from a linguistic point of view. Second, Miller reported only 90% accuracy in human Case tagging in ambiguous situations [19]. This means that there will be considerable errors in the training and test data built in Section 3.2. Third, the definition of Case is so ambiguous [20] that it is difficult even to evaluate the results of this system correctly. Finally, there are many homonyms and polysemous words among nouns and verbs. For example, about 15% of the entries in 우리말 큰 辭典 Wulimal Khun Dictionary are homonyms. This is a critical problem because the task of extracting information for semantic analysis from a corpus itself requires that very semantic information. One possible solution is to exclude all such words from the first round of machine learning. After acquiring the meanings of the words not excluded, we can try to find another method to acquire the meanings of the excluded words, based on the previously acquired knowledge.

5. Conclusions and Future Work

Until now, much work has been done on the assumption that there exists knowledge which is in fact unlikely to be available at present or within a reasonable period, owing to technical or practical problems. However, such work has not been very helpful in building practical NLP systems. Moreover, it makes NLP difficult to distinguish from linguistics. In addition, although there have been many studies on extracting linguistic knowledge from a corpus, most of them tend to disregard semantic relations and use only syntactic ones.

Therefore, we insist that supervised machine learning is indispensable for acquiring meanings. We focused on how to acquire the knowledge needed for semantic analysis before proposing a technique for the analysis itself. We showed that the meanings of words belonging to the noun or verb category can be acquired from a corpus by machine learning, based on the characteristics of Case particles. In addition, we showed that the representation of word meaning by a set of Case prototypicalities is knowledge that can be used directly for semantic analysis. We proposed two methods to reduce the practical difficulty of building sufficient training data: one is to use the COMPLEXITY-TYPE; the other is to incorporate both supervised and unsupervised learning.

In this study, we considered only one Case particle, 으로 ulo, and its three corresponding thematic role categories. One might therefore wonder whether the CLA can be scaled up easily. Note that the CLA needs only to learn the concepts of the thematic roles corresponding to each Case particle. There are about 10-30 thematic roles and about 20 Case particles; their number and classification differ slightly from scholar to scholar. As we mentioned at the beginning of Section 3, Case particles other than -으로 -ulo (about 7-9 thematic roles), -에 -ey (about 6-9), -가 -ka (about 7-8), and -을 -ul (about 5) can manifest only one to three thematic roles. This implies that the amount of learning, strictly speaking the amount of training data, is not very large. Once the CLA has learned the thematic roles for each Case particle, it can automatically build a lexicon for picking out thematic roles, like Tables 7 and 8, from a large corpus such as the YSC Corpus (about 50 million words).

To improve this study, we have additional work to do. First, we should classify the Case types in more detail; for this, the meaning of each Case should be defined more strictly. Second, we should work out how to discriminate computationally among necessary arguments, optional arguments, and adverbials. Third, we should improve the accuracy of the CLA; for this, the mutual relations with other Case particles should be considered, and only instances with statistically meaningful relative frequencies should be included in the experiment. Fourth, Case prototypicalities should be normalized by more plausible techniques. Finally, once a satisfactory accuracy is achieved, verification in semantic analysis is needed to see how effective the proposed representation of word meanings is.

Acknowledgement

This work was funded by the Ministry of Information and Communication of Korea under contract 98-86.

References

[1] F. Ribas, “An Experiment on Learning Appropriate Selectional Restrictions from a Parsed Corpus,” in Proceedings of the 15th International Conference on Computational Linguistics (COLING-94), Kyoto, Japan, 1994, pp. 165-174.

[2] T. Pedersen, “Automatic Acquisition of Noun and Verb Meanings,” Technical Report 95-CSE-10, Southern Methodist University, Dallas, 1995.

[3] W. S. Kang, C. Seo, and G. Kim, “A Semantic Cases Scheme and a Feature Set for Processing Prepositional Phrases in English-to-Korean Machine Translation,” in Proceedings of the 6th Korea Conference on the Hangul and Korean Information Process (KCHKIP), Seoul, 1994, pp. 177-180.

[4] Y. H. Kim, “Meaning Classification of Nouns and Representation of Modification Relation,” Master Dissertation, Keongbuk University, Seoul, 1989.

[5] Y. M. Jeong, An Introduction to Information Retrieval, Gumi Trade Press, Seoul, 1993.

[6] G. Hirst, Semantic Interpretation and the Resolution of Ambiguity, Cambridge University Press, New York, 1987, pp. 28-29.

[7] I. H. Lee, An Introduction to Semantics, Hansin-Munhwa Press, Seoul, 1995, pp. 1-56, 196-228.

[8] G. Chierchia and S. McConnell-Ginet, Meaning and Grammar, MIT Press, Cambridge, 1990, pp. 349-360.

[9] D. Ingram, First Language Acquisition: Method, Description and Explanation, Cambridge University Press, Cambridge, 1989, pp. 398-432.

[10] J. R. Taylor, Linguistic Categorization: Prototypes in Linguistic Theory, Oxford University Press, Oxford, 1995.

[11] D. H. Yang, I. H. Lee, and M. Song, “Automatically Defining the Meaning of Words by Cases,” in Proceedings of the International Conference on Cognitive Science ’97 (ICCS-97), Seoul, 1997, pp. 317-318.

[12] J. G. Lee, “Knowledge Representation for Natural Language Understanding,” Technical Report, KOSEF 923-1100-011-2, The Korea Science Foundation, Seoul, 1989.

[13] D. H. Yang, S. H. Yang, Y. S. Lee, and M. Song, “Definition and Representation of Word Meaning Suitable for Natural Language Processing,” in Proceedings of SOFT EXPO ’97, Seoul, 1997, pp. 247-256.

[14] L. Haegeman, Introduction to Government & Binding Theory, Blackwell Publishers, Cambridge, 1994, pp. 31-73.

[15] P. Langley, Elements of Machine Learning, Morgan Kaufmann, San Francisco, 1996, pp. 67-94.

[16] D. H. Yang, I. H. Lee, and M. Song, “Using Case Prototypicality as a Semantic Primitive,” in Proceedings of the Pacific Asia Conference on Language, Information, and Computation 12 (PACLIC-12), Singapore, 1998, pp. 163-171.

[17] G. B. Lee, “Comparison of Connectionism and Symbolism in Natural Language Processing,” Journal of the Korea Information and Science Society (KISS), Vol. 20, No. 8, Seoul, 1993, pp. 1230-1238.

[18] S. H. Cheon, “A Study of Korean Word Ambiguity on the Basis of Sense Selection Network,” Master Dissertation, Korea Foreign Language University, Seoul, 1986.

[19] P. S. Resnik, “Selectional Preference and Sense Disambiguation,” in ACL SIGLEX Workshop on Tagging Text with Lexical Semantics: Why, What, and How?, Washington, D.C., 1997, pp. 235-251.

[20] M. Song, G. S. Nam, D. H. Yang et al., “Automatic Construction of Case Frame for the Korean Language Processing,” The Korea Ministry of Information and Communication ’97 Research Report, Seoul, 1998, pp. 35-47.
