SMCB_Kernel_CMAC


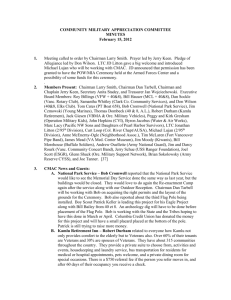

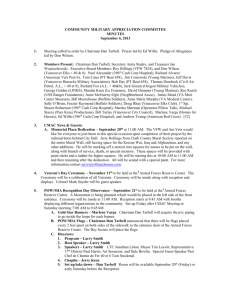

> SMCB-E-08182005-0575 <

Kernel CMAC with Improved Capability

Gábor Horváth, Senior Member, IEEE, and Tamás Szabó

Abstract: The Cerebellar Model Articulation Controller (CMAC) has some attractive features: fast learning capability and the possibility of efficient digital hardware implementation. Although CMAC was proposed many years ago, several questions remain open even today. The most important of them concern its modeling and generalization capabilities. The limits of its modeling capability have been addressed in the literature, and recently certain questions about its generalization property were also investigated. This paper deals with both the modeling and the generalization properties of CMAC. First it introduces a new interpolation model, then it gives a detailed analysis of the generalization error and presents an analytical expression of this error for some special cases. It shows that this generalization error can be rather significant, and proposes a simple regularized training algorithm to reduce it. The results related to the modeling capability show that there are differences between the one-dimensional and the multidimensional versions of CMAC. The paper discusses the reasons for this difference and suggests a new kernel-based interpretation of CMAC. The kernel interpretation gives a unified framework: with this approach both the one-dimensional and the multidimensional CMACs can be constructed with similar modeling capability. Finally the paper shows that the regularized training algorithm can be applied to the kernel interpretation too, resulting in a network with significantly improved approximation capabilities.

Index Terms: Cerebellar Model Articulation Controller, neural networks, modeling, generalization error, kernel machines, regularization.

Manuscript received August 18, 2005, revised February 9, 2006. This work was supported in part by the Hungarian Scientific Research Fund (OTKA) under contract T 046771. G. Horváth is with the Budapest University of Technology and Economics, Department of Measurement and Information Systems, Budapest, H-1117, Hungary, Phone +36 1 463 2677; fax: +36 1 463 4112; (e-mail: horvath@mit.bme.hu). T. Szabó was with Budapest University of Technology and Economics, Department of Measurement and Information Systems, Budapest, Hungary. Now he is with the Hungarian Branch Office of Seismic Instruments, Inc., Austin, TX, USA (e-mail: szabo@mit.bme.hu).

I. INTRODUCTION

The Cerebellar Model Articulation Controller (CMAC) [1], a special neural architecture that belongs to the family of feed-forward networks with a single linear trainable layer, has some attractive features. The most important ones are its extremely fast learning capability and a special architecture that makes an effective digital hardware implementation possible [2], [3]. The CMAC architecture was proposed by Albus in the middle of the seventies [1] and it is considered a real alternative to the MLP and other feed-forward neural networks [4].

Although the properties of the CMAC network were analyzed mainly in the nineties [5]-[14], some interesting features were recognized only in recent years. These results show that the attractive properties of the CMAC have a price: both its modeling and generalization capabilities are inferior to those of an MLP. This is especially true for multivariate cases, as multivariate CMACs can learn to reproduce the training points exactly only if the training data come from a function belonging to the additive function set [8]. The modeling capability can be improved if the complexity of the network is increased.
More complex versions were proposed in [10], but as the complexity of the CMAC depends on the dimension of the input data, in multivariate cases the high complexity can be an obstacle to implementation anyway.

Concerning the generalization capability, the first results were presented in [15], where it was shown that the generalization error can be rather significant. Here we deal with both the generalization and the modeling properties. We derive an analytical expression of the generalization error. The derivation is based on a novel piecewise linear filter model of the CMAC network that connects CMAC to polyphase multirate filters [16]. The paper also proposes a simple regularized training rule to reduce the generalization error.

The second main result of the paper is that a CMAC network can be interpreted as a kernel machine. This kernel interpretation gives a general framework: it shows that a CMAC corresponds to a kernel machine with second-order B-spline kernel functions. Moreover, the paper shows that with the kernel interpretation it is possible to improve the modeling capability of the network without increasing its complexity, even in multidimensional cases. The kernel interpretation may suffer from the same poor generalization capability; however, it will be shown that the previously introduced regularization can be applied to the kernel CMAC too. This means that a kernel CMAC with regularization can significantly improve both the modeling and the generalization capabilities. Moreover, the paper shows that, like the original CMAC, even the regularized kernel versions can be trained iteratively, which may be important in applications where real-time on-line adaptation is required.

The paper is organized as follows. In Section II a brief summary of the original CMAC and its different extensions is given. This section surveys the most important features, the advantages and drawbacks of these networks.
In Section III the new interpolation model is introduced. Section IV deals with the generalization property and, for some special cases, gives an analytical expression of the generalization error. Section V presents the proposed regularized training rule. In Section VI the kernel interpretation is introduced. This section shows what kernel functions correspond to the classical binary CMACs. It also shows that with a properly constructed kernel function it is possible to improve the modeling capability for multivariate cases. Finally it presents the regularized version of the kernel CMAC, and shows that besides the analytical solutions both kernel CMAC versions can also be trained iteratively. Section VII discusses the network complexity and gives some examples to illustrate the improved properties of the proposed kernel CMACs. Some mathematical details are given in the Appendices.

II. A SHORT OVERVIEW OF THE CMAC

A. The classical binary CMAC

CMAC is an associative memory type neural network, which performs two subsequent mappings. The first one, which is a nonlinear mapping, projects an input space point $\mathbf{u} \in \mathbb{R}^N$ into an $M$-bit sparse binary association vector $\mathbf{a}$ which has only $C \ll M$ active elements: $C$ bits of the association vector are ones and the others are zeros. The second mapping calculates the output $y$ of the network as a scalar product of the association vector $\mathbf{a}$ and the weight vector $\mathbf{w}$:

$$ y(\mathbf{u}) = \mathbf{a}(\mathbf{u})^T \mathbf{w} \tag{1} $$

CMAC uses quantized inputs and there is a one-to-one mapping between the discrete input data and the association vectors, i.e. each possible input point has a unique association vector representation. Another interpretation can also be given to the CMAC.
In this interpretation, for an $N$-variate CMAC every bit in the association vector corresponds to a binary basis function with a compact $N$-dimensional hypercube support. The size of the hypercube is $C$ quantization intervals. This means that a bit of the association vector is active if and only if the input vector is within the support of the corresponding basis function. This support is often called the receptive field of the basis function [1]. The two mappings are implemented in a two-layer network architecture (see Fig. 1). The first layer implements a special encoding of the quantized input data. This layer is fixed. The second layer is a linear combiner with the trainable weight vector $\mathbf{w}$.

Fig. 1. The mappings of a CMAC

B. The complexity of the network

The way of encoding (the positions of the basis functions in the first layer) and the value of $C$ determine the complexity, and the modeling and generalization properties of the network. Network complexity can be characterized by the number of basis functions, which is the same as the size of the weight memory. In the one-dimensional case with $r$ discrete input values a CMAC needs $M = r + C - 1$ weight values. However, if we follow this rule in multivariate cases, the number of basis functions and the size of the weight memory grow exponentially with the input dimension. If there are $r_i$ discrete values for the $i$-th input dimension, an $N$-dimensional CMAC needs

$$ M = \prod_{i=1}^{N} (r_i + C - 1) $$

weight values. In multivariate cases the weight memory can be so huge that it cannot be implemented in practice. As an example, consider a ten-dimensional binary CMAC where all input components are quantized into 10 bits. In this case the size of the weight memory would be enormous, approximately $2^{100}$.

To avoid this high complexity the number of basis functions must be reduced. In a classical multivariate CMAC this reduction is achieved by using basis functions positioned only at the diagonals of the quantized input space, as shown in Fig. 2.
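To make the two mappings and the memory count above concrete, the following minimal sketch implements a one-dimensional binary CMAC. It assumes a layout that the text does not spell out: a quantized input q in {0, ..., r-1} activates the C consecutive bits q, q+1, ..., q+C-1, which gives exactly M = r + C - 1 basis functions. The function names and the values r = 16, C = 4 are illustrative only, and the same C is reused for the ten-dimensional example although the paper does not state which C it had in mind.

```python
import math

def association_indices(q, C):
    """Indices of the C active bits for quantized input q (assumed layout)."""
    return list(range(q, q + C))

def cmac_output(q, w, C):
    """Eq. (1): y = a(u)^T w, i.e. the sum of the C selected weights."""
    return sum(w[i] for i in association_indices(q, C))

r, C = 16, 4                 # illustrative: 16 quantized input values, C = 4
M = r + C - 1                # weight memory size of the one-dimensional CMAC
w = [0.25] * M               # arbitrary weights, for demonstration only

y = cmac_output(5, w, C)     # sum of four weights of 0.25 each

# Neighbouring inputs share C - 1 active bits; this overlap is what
# produces the local generalization of the network.
shared = len(set(association_indices(5, C)) & set(association_indices(6, C)))

# log2 of the full memory size prod_i (r_i + C - 1) for ten inputs
# quantized into 10 bits each (r_i = 2**10).
full_overlay_bits = math.log2((2 ** 10 + C - 1) ** 10)
```

The last quantity comes out slightly above 100, in line with the "approximately $2^{100}$" figure quoted in the text; the value of C has only a marginal effect on it.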
Fig. 2. The receptive fields of a two-variable Albus CMAC (axes $u_1$, $u_2$; the figure marks the quantization intervals, the points of the main diagonal, the points of the subdiagonals, the receptive fields of one overlay and the overlapping regions)

The shaded regions in the figure are the receptive fields of the different basis functions. As shown, the receptive fields are grouped into overlays. One overlay contains basis functions with non-overlapping supports, but the union of the supports covers the whole input space, that is, each overlay covers the whole $N$-dimensional input lattice. The different overlays have the same structure; they consist of similar basis functions in shifted positions. Every input point selects $C$ basis functions, each of them on a different overlay, so in an overlay one and only one basis function is active for every input point.

The positions of the overlays and of the basis functions of one overlay are represented by definite points. In the original Albus scheme the overlay-representing points are in the main diagonal of the input space, whereas the basis-function positions are represented by the sub-diagonal points, as shown in Fig. 2 (black dots). In the original Albus architecture the number of overlays does not depend on the dimension of the input vectors; it is always $C$. This is in contrast to the so-called full-overlay version, where $C^N$ overlays are used. In a multivariate Albus CMAC the number of basis functions does not grow exponentially with the input dimension; it is

$$ M = \left[ \frac{1}{C^{N-1}} \prod_{i=1}^{N} (r_i + C - 1) \right], $$

where $[\cdot]$ denotes the integer part. Using this architecture there will be "only" $2^{55}$ weight values in the previous example. Although this is a great reduction, the size of the memory is still prohibitively large, so further complexity reduction is required. In the Albus binary CMAC hash-coding is applied for this purpose.
It significantly reduces the size of the weight memory; however, it can result in collisions of the mapped weights and have some unfavorable effects on the convergence of CMAC learning [17], [18]. As will be seen later, the proposed new interpretation solves the complexity problem without hashing, so we will not deal with this effect.

The complexity reduction has an unwanted consequence too, even without hashing. An arbitrary classical binary multivariate CMAC can reproduce the training points exactly only if they are obtained from an additive function [8]. For more general cases there will be modeling error, i.e. error at the training points. More details can be found in [9].

Another way of reducing the complexity is to decompose a multivariate problem into many lower-dimensional ones [19]-[26]. References [19]-[22] propose modular architectures where a multivariate network is constructed as a sum of several submodules, and the submodules are formed from small-dimensional (one- or two-dimensional) CMACs. The output of a submodule is obtained as a product of the elementary low-dimensional CMACs (sum-of-product structure). In [23] a hierarchical architecture called HCMAC is proposed, which is a multilayer network with $\log_2 N$ layers. Each layer is built from two-variable elementary CMACs, where these elementary networks use finite-support Gaussian basis functions. A similar multilayer architecture is introduced in [24]. The MS_CMAC, or its high-order version HMS_CMAC [25], applies one-dimensional elementary networks. The resulting hierarchical, tree-structured network can be trained using the time inversion technique [27].

Although all these modular CMAC variants are significantly less complex, the complexity of the resulting networks is still rather high. A further common drawback of all these solutions is that their training is more complex. Either a proper version of the backpropagation algorithm should be used (e.g.
for sum-of-product structures and for HCMAC) or several one-dimensional CMACs should be trained independently (in the case of MS_CMAC). Moreover, in this latter case all of the simple networks except those in the first layer need training even in the recall phase, which increases the recall time greatly. A further deficiency of MS_CMAC is that it can be applied only if the training points are positioned at regular grid points [24].

C. The capability of CMAC

An important feature of the binary CMAC is that it applies rectangular basis functions. Consequently the output, even in the most favorable situations, will be piecewise linear, and the derivative information is not preserved. For modeling continuous functions the binary basis functions should be replaced by smooth basis functions that are defined over the same finite supports (hypercubes) as the binary functions in the Albus CMAC. From the beginning of the nineties many such higher-order CMACs have been developed. For example, Lane et al. [10] use higher-order B-spline functions to replace the binary basis functions, while Chiang and Lin apply finite-support Gaussian basis functions [12]. Gaussian functions are used in the HCMAC network too [23]. When higher-order basis functions are applied, a CMAC can be considered an adaptive fuzzy system. This approach is also frequently applied; see e.g. [28]-[31].

Higher-order CMACs result in a smooth input-output mapping, but they do not reduce the network complexity, as the number of basis functions and the size of the weight memory are not decreased. Moreover, with higher-order basis functions the multiplierless structure of the original Albus binary CMAC is lost.

Besides basis function selection, CMACs can be generalized in many other aspects. The idea of multi-resolution interpolation was suggested by Moody [32]. He proposed a hierarchical CMAC architecture with $L$ levels, where different quantization resolution lattices are applied at the different levels. In [33] Kim et al.
proposed a method to adapt the resolution of the receptive fields according to the variance of the function to be learned. The generalized CMAC [13] assigns a different generalization parameter $C$ to each input dimension. The root idea behind all these proposals is that for functions with more variability a more accurate representation can be achieved by decreasing the generalization parameter and increasing the number of training points. But using a smaller $C$ without having more training data will reduce the generalization capability. The multi-resolution solutions try to find a good compromise between these contradictory requirements.

D. Network training

The weights of a CMAC, as they are in the linear output layer, can be determined analytically or can be trained using an iterative training algorithm. The analytical solution is

$$ \mathbf{w} = \mathbf{A}^{\dagger} \mathbf{y}_d \tag{2} $$

where $\mathbf{A}^{\dagger} = \mathbf{A}^T (\mathbf{A}\mathbf{A}^T)^{-1}$ is the pseudo-inverse of the association matrix formed from the association vectors $\mathbf{a}(i) = \mathbf{a}(\mathbf{u}(i))$, $i = 1, 2, \dots, P$, as row vectors, and $\mathbf{y}_d^T = [\, y_d(1)\; y_d(2)\; \dots\; y_d(P) \,]$ is the output vector of the desired values of all training data. In iterative training mostly the LMS algorithm

$$ \mathbf{w}(k+1) = \mathbf{w}(k) + \mu\, \mathbf{a}(k)\, e(k) \tag{3} $$

is used, which converges to the same weight vector as the analytic solution if the learning rate is properly selected. Here $e(k) = y_d(k) - y(k) = y_d(k) - \mathbf{w}^T \mathbf{a}(k)$ is the error, $y_d(k)$ is the desired output, $y(k)$ is the network output for the $k$-th training point, and $\mu$ is the learning rate. Training minimizes the quadratic error, so $\mathbf{w}$ can be obtained as

$$ \mathbf{w} = \arg\min_{\mathbf{w}} J = \arg\min_{\mathbf{w}} \frac{1}{2} \sum_{k=1}^{P} e(k)^2 \tag{4} $$

An advantage of the CMAC networks is that in one training step only a small fraction of all weights has to be modified. Using the simple LMS rule with a properly selected learning rate, rather high-speed training can be achieved [11]. In some special cases only one epoch is enough to learn all training samples exactly [7].
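The LMS rule (3) can be sketched in a few lines for a one-dimensional binary CMAC. This is a minimal illustration under stated assumptions, not the authors' implementation: the bit layout (input q activates bits q, ..., q+C-1), the learning rate mu = 1/C, the target function and the values r = 16, C = 4, d = 3 are all choices made for the example. With mu = 1/C each step removes the error at the current training point entirely.

```python
import math

def active(q, C):
    # Assumed 1-D layout: quantized input q activates bits q .. q+C-1.
    return range(q, q + C)

def train_lms(samples, M, C, mu, epochs):
    """Iterate rule (3): w(k+1) = w(k) + mu * a(k) * e(k)."""
    w = [0.0] * M
    for _ in range(epochs):
        for q, yd in samples:
            # e(k) = y_d(k) - w^T a(k); a(k) is binary, so w^T a(k) is a sum.
            e = yd - sum(w[i] for i in active(q, C))
            for i in active(q, C):      # only the C selected weights change
                w[i] += mu * e
    return w

r, C, d = 16, 4, 3
# Equidistant training inputs q = 0, 3, ..., 15 with an illustrative target.
samples = [(q, math.sin(0.4 * q)) for q in range(0, r, d)]
w = train_lms(samples, M=r + C - 1, C=C, mu=1.0 / C, epochs=200)

# Largest remaining error at the training points after training.
max_err = max(abs(yd - sum(w[i] for i in active(q, C))) for q, yd in samples)
```

Each update touches only C of the M weights, which is the property the text credits for the fast training of the network.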
Although the training of the original CMAC is rather fast, several improved training rules have been developed to speed up the learning process further. In [34] a credit-assigned learning rule is proposed. In CA-CMAC the error correction is distributed according to the credibility of the different basis functions. The credibility of a basis function is measured by the number of updates of the weight that is connected to the basis function. Recently the RLS algorithm was suggested for training CMAC networks [35], [36]. With the RLS rule the number of required training iterations is usually decreased, although the computational complexity of one iteration is larger than in the case of the LMS rule.

The response of the trained network for a given input $\mathbf{u}$ can be determined using the solution weight vector (2):

$$ y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\, \mathbf{w}^{*} = \mathbf{a}^T(\mathbf{u})\, \mathbf{A}^T (\mathbf{A}\mathbf{A}^T)^{-1} \mathbf{y}_d \tag{5} $$

or for all possible inputs

$$ \mathbf{y} = \mathbf{T}\mathbf{w} = \mathbf{T}\mathbf{A}^T (\mathbf{A}\mathbf{A}^T)^{-1} \mathbf{y}_d \tag{6} $$

$$ \mathbf{y} = \mathbf{T}\mathbf{A}^T \mathbf{B}\, \mathbf{y}_d \tag{7} $$

where $\mathbf{T}$ is the matrix formed from all possible association vectors and $\mathbf{B} = (\mathbf{A}\mathbf{A}^T)^{-1}$.

III. A NEW INTERPOLATION MODEL OF THE BINARY CMAC

In this section we give a new interpolation model of the binary CMAC. According to (5) and (6), every response of a trained network depends on the desired responses of all training data. To understand this relation better and to determine what type of mapping is done by a trained CMAC, these equations should be interpreted.

In this interpretation the two terms $\mathbf{T}\mathbf{A}^T$ and $\mathbf{B} = (\mathbf{A}\mathbf{A}^T)^{-1}$ of (6) are considered separately. The general form of both matrices depends on the positions of the training data as well as on the generalization parameter $C$ of the network. $\mathbf{T}\mathbf{A}^T$ can be written easily for any case; however, to interpret $\mathbf{B} = (\mathbf{A}\mathbf{A}^T)^{-1}$ an analytical expression of this inverse should be obtained. Although $\mathbf{A}\mathbf{A}^T$ is a symmetrical matrix, its inverse can be determined analytically only in special cases [36], [38].
Such a special case is when a one-dimensional CMAC is used (the input data are scalar) and the training data are positioned uniformly in the input space, where the distance between neighboring training inputs is a constant $d$. In this case $\mathbf{A}$ is formed from every $d$-th row of $\mathbf{T}$, and $\mathbf{A}\mathbf{A}^T$ is a symmetrical Toeplitz matrix with $2z+1$ diagonals, where the integer $z$ is the integer part of $C/d$. For such an $\mathbf{A}\mathbf{A}^T$ the inverse $\mathbf{B} = (\mathbf{A}\mathbf{A}^T)^{-1}$ can be determined analytically (see APPENDIX A):

$$ B_{i,j} = \frac{1}{n} \sum_{k=0}^{n-1} \frac{\cos\!\left(\frac{2k\pi (j-i)}{n}\right) \left[\cos\!\left(\frac{2k\pi}{n}\right) - 1\right]}{(C - zd)\cos\!\left(\frac{2k\pi (z+1)}{n}\right) + (zd + d - C)\cos\!\left(\frac{2k\pi z}{n}\right) - d} \tag{8} $$

Here $n = P$ is the size of the square matrix $\mathbf{B}$. A much simpler expression can be reached if $z = 1$. In this case $\mathbf{A}\mathbf{A}^T$ is a symmetrical tridiagonal Toeplitz matrix, and the $(i, i+l)$-th element of its inverse $\mathbf{B}$ can be written as (see APPENDIX B):

$$ B_{i,i+l} = (-1)^{l}\, \frac{1}{r} \left( \frac{C - r}{2b} \right)^{l} \tag{9} $$

where $r = \sqrt{C^2 - 4b^2}$ and $b = C - d$. Eqs. (8) and (9) show that the rows of $\mathbf{B}$ are equal apart from a one-position right shift. This means that the operation $\mathbf{v} = \mathbf{B}\mathbf{y}_d$ can be interpreted as filtering: $v_j$, the $j$-th element of $\mathbf{v}$, can be obtained by discrete convolution of $\mathbf{y}_d$ with $\mathbf{b}_j$, the $j$-th row of $\mathbf{B}$.

To get the result of (6), our next task is to determine $\mathbf{T}\mathbf{A}^T$. Using equidistant training data its $(i,j)$-th element is

$$ [\mathbf{T}\mathbf{A}^T]_{i,j} = \max\bigl( C - \lvert (j-1)d + 1 - i \rvert,\; 0 \bigr) \tag{10} $$

From this matrix structure one can easily see that the rows of $\mathbf{T}\mathbf{A}^T$ are similar: the elements of the rows can be obtained as the sampling values of a triangle signal, where the sampling interval is exactly $d$ and from row to row the sampling positions are shifted right by one position. So this operation can also be interpreted as filtering. The $i$-th output of the network can be obtained as

$$ y_i = (\mathbf{T}\mathbf{A}^T)_i\, \mathbf{B}\, \mathbf{y}_d = \mathbf{G}_i \mathbf{v} \tag{11} $$

where $(\mathbf{T}\mathbf{A}^T)_i = \mathbf{G}_i$ is the $i$-th row of the matrix $\mathbf{T}\mathbf{A}^T$. Thus the binary CMAC can be regarded as a complex piecewise linear FIR filter, where the elementary filters are arranged in two layers.
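As a numerical sanity check of the closed form (9), the sketch below verifies that the sequence $B_{i,i+l}$ inverts the tridiagonal Toeplitz matrix with diagonal $C$ and off-diagonal $b = C - d$. It treats the matrix as doubly infinite, an assumption that ignores the first and last rows of a finite $\mathbf{A}\mathbf{A}^T$; the values C = 4, d = 3 and the helper names are illustrative.

```python
import math

C, d = 4, 3                      # illustrative values with d < C < 2d, so z = 1
b = C - d                        # off-diagonal element of A A^T
r = math.sqrt(C * C - 4 * b * b)

def B(l):
    """Eq. (9): element (i, i+l) of B; by symmetry it depends only on |l|."""
    l = abs(l)
    return (-1) ** l * (1.0 / r) * ((C - r) / (2 * b)) ** l

def product_entry(l):
    """Entry at offset l of an interior row of (A A^T) B."""
    return b * B(l - 1) + C * B(l) + b * B(l + 1)

# The product must be the identity: 1 at offset 0 and 0 elsewhere.
residuals = [product_entry(l) - (1.0 if l == 0 else 0.0) for l in range(-5, 6)]
max_residual = max(abs(x) for x in residuals)
```

The elements of (9) alternate in sign and decay geometrically with $|l|$, so each filtered value $v_j$ is dominated by the desired outputs closest to the $j$-th training point.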
This filter representation is similar to the polyphase multi-rate systems often used in digital signal processing [16]. Fig. 3 shows the filter representation of the network for the special case of equidistant training data. It must be mentioned that the filter model is valid not only for this special case but for all cases; only the characteristics of the filters will be different.

Fig. 3. The filter representation of the binary CMAC (the desired outputs $\mathbf{y}_d$ pass through the filter $\mathbf{B}$, followed by the filters $\mathbf{G}_0, \mathbf{G}_1, \dots, \mathbf{G}_{d-1}$, selected for $i = 0, 1, \dots, d-1$, which produce the outputs $y_i$)

IV. THE GENERALIZATION ERROR

The generalization error of a neural network is the error of its response at input points that were not used in training. In general the generalization error of a neural network cannot be determined analytically. Even in the case of support vector machines only some upper bounds can be obtained [39]. Concerning the generalization error of the binary CMAC there are only a few results. Some works recognize that this generalization error can be rather large [40], [41], but in these works no detailed analysis is given. The relation between the parameter $C$ and the generalization error was first presented in [15]. Here these results are discussed in more detail.

In this paper a special generalization error is defined as the difference between the response of the CMAC network $y(u)$ and a piecewise linear approximation of the mapping $y_{lin}(u)$: $h_l(u) = y_{lin}(u) - y(u)$. Piecewise linear approximation means that at the training points the responses of the network are equal to the true outputs (desired outputs), whereas in between the response is obtained by linear interpolation. This error definition is reasonable because, as we will see soon, a piecewise linear approximation can be obtained from a trained one-dimensional CMAC in some ideal cases. A further reason that emphasizes the importance of piecewise linear interpolation is that it helps to obtain an upper bound on the true generalization error $h(u) = y_d(u) - y(u)$, at least in cases when certain information about the function to be approximated is available [42]. The significance of these results is that they show when and why the generalization error will be large. Moreover, they help to find a way to reduce this error.

The interpolation model presented in the previous section can serve as a basis for the derivation of a general expression of the generalization error, at least in cases when an analytical expression of this model can be obtained. For these special cases the generalization error of a binary CMAC can be determined analytically.

The generalization error can be obtained most easily if all desired responses are the same: $\mathbf{y}_d = [\, D\; D\; \dots\; D \,]^T$. In this case the desired output and the result of linear interpolation are the same, so the generalization error can be obtained as the difference of the network output at a training point $u(i)$ and at an arbitrary input point $u(k)$ between two neighboring training inputs: $h(u(k)) = y(u(i)) - y(u(k))$, where $u(i) < u(k) < u(i+1)$. According to (11)

$$ h(u(k)) = \left[ (\mathbf{T}\mathbf{A}^T)_i - (\mathbf{T}\mathbf{A}^T)_k \right] \mathbf{B}\, \mathbf{y}_d \tag{12} $$

With a constant output vector all elements of $\mathbf{B}\mathbf{y}_d$ are the same (disregarding some boundary effect), thus the generalization error is proportional to $\sum_j [\mathbf{T}\mathbf{A}^T]_{i,j} - \sum_j [\mathbf{T}\mathbf{A}^T]_{k,j}$. Using (10) and the result of (8) or (9) the generalization error is

$$ H = D\, \frac{\min\bigl( \operatorname{mod}(C,d),\; d - \operatorname{mod}(C,d) \bigr)}{\left( 2\left\lfloor \frac{C}{d} \right\rfloor + 1 \right) C - \left\lfloor \frac{C}{d} \right\rfloor \left( \left\lfloor \frac{C}{d} \right\rfloor + 1 \right) d} \tag{13} $$

where $C$ and $d$ are as defined before, $\lfloor C/d \rfloor$ is the integer part of $C/d$, $\operatorname{mod}(C,d)$ is $C$ modulo $d$, and $D$ is the instantaneous value of the mapping to be approximated by the network. For example, $C = 3$ and $d = 2$ give $H = D/5$. The same expression can be obtained more easily if the kernel interpretation, which will be introduced in Section VI, is used. This alternative derivation will be presented in Section VI.C. The relative generalization error $h = H/D$ for the range of $z = 1 \dots 8$ is shown in Fig. 4.

Fig. 4.
The maximal relative generalization error h as a function of C/d

The figure shows that the response of a CMAC is exactly the same as the linear interpolation if $C/d$ is an integer, that is, in these cases the relative generalization error defined before is zero. In other cases this error can be rather significant. For univariate CMACs there is a special case when $C = d$. Here not only can a linearly interpolated response be obtained, but this is the situation when the so-called high-speed training is achieved [7].

The interesting feature of CMACs is that there is generalization error in all cases when $C/d$ is not an integer. The error can be reduced if a larger $C$ is applied; however, too large a $C$ has another effect: the larger the $C$, the smoother the response of the network, and this may cause larger modeling error. This means that $C$ can be determined correctly only if there is some information about the smoothness of the mapping to be learned. A further effect is that increasing the value of $C$ increases the computational complexity, because every output is calculated as the sum of $C$ weights.

The generalization error, or at least an upper bound of this error, can also be determined for more general cases. It can be shown that the relative generalization error for a one-dimensional CMAC where the distances between the neighboring training inputs change periodically as $d_1, d_2, d_1, d_2, \dots$ can be as high as 33%. The derivation of this latter result and the results for more general cases (general training point positions, multidimensional cases) are beyond the scope of this paper, as the goal now is to show that, depending on the positions of the training points and the value of the generalization parameter $C$, the generalization error can be rather significant.

V. MODIFIED CMAC LEARNING ALGORITHM

The main cause of the large generalization error is that if $C/d$ is not an integer the weights can take rather different values even if the desired output is constant for all training samples. This follows from the fact that the different weight values are adjusted at different times if iterative training is used. The same consequence can be obtained from the analytic solution of (2). For a constant desired response the neighboring elements of the weight vector are obtained as the sum of the elements of the corresponding neighboring rows of $\mathbf{A}^{\dagger}$, and $\mathbf{A}^T_i \mathbf{B} \neq \mathbf{A}^T_j \mathbf{B}$ if $C/d$ is not an integer, where $i$ and $j$ are two adjacent row indices of $\mathbf{A}^T$.

The error can be reduced if during a training step the selected $C$ weights are forced to be similar to each other. This can be achieved if a modified criterion function and, accordingly, a modified training rule are used. The new criterion function has two terms. The first one is the usual squared error term, and the second one is a new regularization term. This is responsible for forcing the weights selected by an input data point to be as similar as possible. The new criterion function is

$$ J(k) = \frac{1}{2} \bigl( y_d(k) - y(k) \bigr)^2 + \frac{\lambda}{2} \sum_{i:\, a_i(k) = 1} \left( \frac{y_d(k)}{C} - w_i(k) \right)^2 \tag{14} $$

where $a_i(k)$ is the $i$-th bit of the association vector selected by the $k$-th training input. The modified training equation according to the new criterion function is as follows:

$$ w_i(k+1) = w_i(k) + \mu \left( e(k) + \lambda \left( \frac{y_d(k)}{C} - w_i(k) \right) \right) \tag{15} $$

for all weight values with index $i$: $a_i(k) = 1$. Here the first part is the standard LMS rule (see (3)), and the second part comes from the regularization term. $\lambda$ is a regularization parameter, and the values of $\mu$ and $\lambda$ are responsible for finding a good compromise between the two terms.

VI. KERNEL CMAC

Kernel machines like Support Vector Machines (SVMs) [39], Least Squares SVMs (LS-SVMs) [43] and the method of ridge regression [44] apply a trick which is called the kernel trick. They use a set of nonlinear transformations from the input space to a "feature space". However, it is not necessary to find the solution in the feature space; instead it can be obtained in the kernel space, which is defined easily. All kernel machines can be applied to both classification and regression problems, but here we deal only with the regression case.

The goal of a kernel machine for regression is to approximate a (nonlinear) function $y_d = f(\mathbf{u})$ using a training data set $\{\mathbf{u}(k), y_d(k)\}_{k=1}^{P}$. First the input vectors are projected into a higher dimensional feature space, using a set of nonlinear functions $\boldsymbol{\varphi}(\mathbf{u}): \mathbb{R}^N \to \mathbb{R}^M$, then the output is obtained as a linear combination of the projected vectors:

$$ y(\mathbf{u}) = \sum_{j=1}^{M} w_j \varphi_j(\mathbf{u}) + b = \mathbf{w}^T \boldsymbol{\varphi}(\mathbf{u}) + b \tag{16} $$

where $\mathbf{w}$ is the weight vector and $b$ is a bias term. The dimensionality ($M$) of the feature space is not defined directly; it follows from the method (it can even be infinite). The kernel trick makes it possible to obtain the solution in the kernel space

$$ y(\mathbf{u}) = \sum_{k=1}^{P} \alpha_k K(\mathbf{u}, \mathbf{u}(k)) + b \tag{17} $$

where the kernel function is formed as

$$ K(\mathbf{u}(k), \mathbf{u}(j)) = \boldsymbol{\varphi}^T(\mathbf{u}(k))\, \boldsymbol{\varphi}(\mathbf{u}(j)) \tag{18} $$

In (17) the $\alpha_k$ coefficients serve as the weight values in the kernel space. The number of these coefficients is equal to or less than (in some cases much less than) the number of training points [39].

A. The derivation of the kernel CMAC

The relation between CMACs and kernel machines can be shown if we recognize that the association vector of a CMAC corresponds to the feature space representation of a kernel machine. For CMACs the nonlinear functions that map the input data points into the feature space are the binary basis functions, which can be regarded as first-order B-spline functions [9] of fixed positions.

To get the kernel representation of the CMAC we should apply (18) to the binary basis functions. In univariate cases second-order B-spline kernels are obtained, where the centre parameters are the input training points. In multivariate cases special kernels are obtained because of the reduced number of basis functions (see Fig. 5(a)). However, we can apply the full-overlay CMAC (although the dimension of the feature space would be unacceptably large). Because of the kernel trick the dimension of the kernel space will not increase: the number of kernel functions is upper bounded by the number of training points irrespective of the form of the kernel functions. Thus we can build a kernel version of a multivariate CMAC without reducing the length of the association vector. This implies that this kernel CMAC can learn any training data set without error, independently of the dimension of the input data.

The multivariable kernel function for a full-overlay binary CMAC can be obtained as a tensor product of univariate second-order B-spline functions. This kernel function in the two-dimensional case is shown in Fig. 5(b). As the input space is quantized, this is the discretized version of the continuous kernel function obtained from second-order B-splines (Fig. 5(c)).

Fig. 5. Two-dimensional kernel functions with C=8. Binary Albus CMAC (a), kernel-CMAC quantized (b), and kernel-CMAC continuous (c)

This kernel interpretation can be applied to higher-order CMACs too [10], [12], [23], with higher-order basis functions ($k$-th order B-splines with support of $C$). A CMAC with $k$-th order B-spline basis functions corresponds to a kernel machine with $2k$-th order B-spline kernels.

Kernel machines can be derived through constrained optimization. The different versions of kernel machines apply different loss functions. Vapnik's SVM for regression applies an $\varepsilon$-insensitive loss function [39], whereas an LS-SVM is obtained if a quadratic loss function is used [43]. The classical CMAC uses a quadratic loss function too, so we obtain an equivalent kernel representation if a quadratic loss function is also used in the constrained optimization.
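The kernel obtained from (18) for binary basis functions can be checked directly by counting shared active bits. The sketch below is an illustration under assumptions the text does not fix: in one dimension an input u activates bits u, ..., u+C-1, and in the two-dimensional full-overlay case every C x C hypercube position is a basis function. The function names and the test point (10, 10) are hypothetical.

```python
def association(u, C):
    """Active basis-function indices of a 1-D binary CMAC (assumed layout)."""
    return set(range(u, u + C))

def kernel_1d(u, v, C):
    """K(u, v) = a(u)^T a(v), eq. (18): the number of shared active bits."""
    return len(association(u, C) & association(v, C))

def triangle(u, v, C):
    """Quantized second-order B-spline (triangle) centred at v."""
    return max(C - abs(u - v), 0)

def association_2d(u, C):
    """Full-overlay 2-D CMAC: all C x C receptive fields that contain u."""
    return {(i, j) for i in range(u[0], u[0] + C) for j in range(u[1], u[1] + C)}

def kernel_2d(u, v, C):
    return len(association_2d(u, C) & association_2d(v, C))

C = 8
# 1-D: the inner product of association vectors is a triangle function.
same_1d = all(kernel_1d(u, 10, C) == triangle(u, 10, C) for u in range(0, 21))
# 2-D full overlay: the kernel is the tensor product of two triangles.
same_2d = all(
    kernel_2d((u1, u2), (10, 10), C) == triangle(u1, 10, C) * triangle(u2, 10, C)
    for u1 in range(0, 21) for u2 in range(0, 21)
)
```

The kernel can therefore be evaluated as a product of one-dimensional triangle functions, without ever forming the $C^N$-element association vector of the full-overlay network.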
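Using a quadratic loss function means that the kernel CMAC is similar to an LS-SVM. As was written in Section II, the response of a trained network for a given input is:

$$y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\,\mathbf{w}^* = \mathbf{a}^T(\mathbf{u})\,\mathbf{A}^T\left(\mathbf{A}\mathbf{A}^T\right)^{-1}\mathbf{y}_d \tag{19}$$

To see that this form can be interpreted as a kernel solution, let us construct an LS-SVM network with a similar feature space representation. For LS-SVM regression we seek the solution of the following constrained optimization [43]:

$$\min_{\mathbf{w},\mathbf{e}} J(\mathbf{w},\mathbf{e}) = \frac{1}{2}\mathbf{w}^T\mathbf{w} + \frac{\gamma}{2}\sum_{k=1}^{P} e_k^2 \tag{20}$$

such that

$$y_d(k) = \mathbf{w}^T\mathbf{a}_k + e_k. \tag{21}$$

Here there is no bias term, as in the classical CMAC a bias term is not used. The problem in this form can be solved by constructing the Lagrangian

$$L(\mathbf{w},\mathbf{e},\boldsymbol{\alpha}) = J(\mathbf{w},\mathbf{e}) - \sum_{k=1}^{P}\alpha_k\left(\mathbf{w}^T\mathbf{a}_k + e_k - y_d(k)\right) \tag{22}$$

where $\alpha_k$ are the Lagrange multipliers. The conditions for optimality can be given by

$$\begin{aligned}
\frac{\partial L}{\partial \mathbf{w}} = 0 \;&\rightarrow\; \mathbf{w} = \sum_{k=1}^{P}\alpha_k\mathbf{a}_k \\
\frac{\partial L}{\partial e_k} = 0 \;&\rightarrow\; \alpha_k = \gamma e_k, \qquad k = 1,\dots,P \\
\frac{\partial L}{\partial \alpha_k} = 0 \;&\rightarrow\; \mathbf{w}^T\mathbf{a}_k + e_k - y_d(k) = 0, \qquad k = 1,\dots,P
\end{aligned} \tag{23}$$

Using the results of (23) in (22), the Lagrange multipliers can be obtained as the solution of the following linear system:

$$\left(\mathbf{K} + \frac{1}{\gamma}\mathbf{I}\right)\boldsymbol{\alpha} = \mathbf{y}_d \tag{24}$$

Here $\mathbf{K} = \mathbf{A}\mathbf{A}^T$ is the kernel matrix and $\mathbf{I}$ is a $P \times P$ identity matrix. The response of the network will be

$$y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\,\mathbf{w} = \mathbf{a}^T(\mathbf{u})\sum_{k=1}^{P}\alpha_k\mathbf{a}_k = \sum_{k=1}^{P}\alpha_k K(\mathbf{u},\mathbf{u}_k) = \mathbf{K}^T(\mathbf{u})\,\boldsymbol{\alpha} = \mathbf{a}^T(\mathbf{u})\,\mathbf{A}^T\left(\mathbf{K} + \frac{1}{\gamma}\mathbf{I}\right)^{-1}\mathbf{y}_d = \mathbf{a}^T(\mathbf{u})\,\mathbf{A}^T\left(\mathbf{A}\mathbf{A}^T + \frac{1}{\gamma}\mathbf{I}\right)^{-1}\mathbf{y}_d \tag{25}$$

The obtained kernel machine is an LS-SVM or a ridge regression solution [44]. Comparing (5) and (25), it can be seen that the only difference between the classical CMAC and the ridge regression solution is the term $(1/\gamma)\mathbf{I}$, which comes from the modified loss function of (20). However, if the matrix $\mathbf{A}\mathbf{A}^T$ is singular or nearly singular, which may cause numerical stability problems in the inverse calculation, a regularization term must be used: instead of computing $\left(\mathbf{A}\mathbf{A}^T\right)^{-1}$ the regularized inverse $\left(\mathbf{A}\mathbf{A}^T + \eta\mathbf{I}\right)^{-1}$ is computed, where $\eta$ is the regularization coefficient. In this case the two forms are equivalent.

A. Kernel CMAC with weight-smoothing

This kernel representation improves the modeling property of the CMAC.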
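The kernel solution of (24) and (25) can be illustrated with a short numerical sketch for a univariate problem. Several things here are assumptions made only for the example: the association-vector layout (input `u` activates bits `u..u+C-1`), the helper name `association_matrix`, the choice of `gamma`, and the use of NumPy's normalized `np.sinc` as the target function. The sketch solves the linear system (24) and evaluates the response both in kernel form and in weight form to show that the two agree.

```python
import numpy as np

def association_matrix(u, C, r):
    """Rows are binary association vectors (assumed 1-D layout:
    input u activates the C consecutive bits u .. u+C-1)."""
    A = np.zeros((len(u), r + C - 1))
    for row, ui in enumerate(u):
        A[row, ui:ui + C] = 1.0
    return A

def to_x(u, r):
    """Map quantized inputs 0..r-1 to the range [-4, +4]."""
    return -4.0 + 8.0 * u / (r - 1)

C, d, r = 8, 5, 256          # C and d as in Fig. 7, 8-bit quantization
gamma = 1e6                  # illustrative value: nearly exact fit

u_train = np.arange(0, r, d)
u_test = np.arange(r)
y_d = np.sinc(to_x(u_train, r))   # assumed target, not the paper's data

A = association_matrix(u_train, C, r)
A_test = association_matrix(u_test, C, r)
P = len(u_train)

K = A @ A.T                                          # kernel matrix
alpha = np.linalg.solve(K + np.eye(P) / gamma, y_d)  # linear system (24)

y_kernel = (A_test @ A.T) @ alpha   # sum_k alpha_k K(u, u_k), kernel form of (25)
w = A.T @ alpha                     # w = sum_k alpha_k a_k from (23)
y_weight = A_test @ w               # a^T(u) w, weight form of (25)

# Largest deviation from the desired response at the training inputs.
train_error = np.max(np.abs(y_kernel[u_train] - y_d))
```

With a large `gamma` the training points are reproduced almost exactly, while the response between the training points is governed entirely by the triangle kernels.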
Because the kernel representation corresponds to a full-overlay CMAC, it can learn all training data exactly. However, the generalization capability is not improved. To get a CMAC with better generalization capability, weight-smoothing regularization should be applied to the kernel version too. For this purpose a new term is added to the loss function of (4) or (20). Starting from (20) the modified optimization problem can be formulated as follows:

$$\min_{\mathbf{w},\mathbf{e}} J(\mathbf{w},\mathbf{e}) = \frac{1}{2}\mathbf{w}^T\mathbf{w} + \frac{\gamma}{2}\sum_{k=1}^{P} e_k^2 + \frac{\lambda}{2}\sum_{k=1}^{P}\sum_{i:\,a_i(k)=1}\left(\frac{y_d(k)}{C} - w_k(i)\right)^2 \tag{26}$$

where $w_k(i)$ is a weight value selected by the $i$-th active bit of $\mathbf{a}(k)$. As the equality constraint is the same as in (4) or (20), we obtain the Lagrangian

$$L(\mathbf{w},\mathbf{e},\boldsymbol{\alpha}) = \frac{1}{2}\mathbf{w}^T\mathbf{w} + \frac{\gamma}{2}\sum_{k=1}^{P} e_k^2 + \frac{\lambda}{2}\sum_{k=1}^{P}\sum_{i:\,a_i(k)=1}\left(\frac{y_d(k)}{C} - w_k(i)\right)^2 - \sum_{k=1}^{P}\alpha_k\left(\mathbf{a}_k^T\,\mathrm{diag}(\mathbf{a}_k)\,\mathbf{w} + e_k - y_d(k)\right) \tag{27}$$

that can be written in the following form:

$$L(\mathbf{w},\mathbf{e},\boldsymbol{\alpha}) = \frac{1}{2}\mathbf{w}^T\mathbf{w} + \frac{\gamma}{2}\sum_{k=1}^{P} e_k^2 - \sum_{k=1}^{P}\alpha_k\left(\mathbf{w}^T\mathbf{a}_k + e_k - y_d(k)\right) + \frac{\lambda}{2}\sum_{k=1}^{P}\frac{y_d^2(k)}{C} - \frac{\lambda}{C}\sum_{k=1}^{P} y_d(k)\,\mathbf{a}_k^T\,\mathrm{diag}(\mathbf{a}_k)\,\mathbf{w} + \frac{\lambda}{2}\sum_{k=1}^{P}\mathbf{w}^T\,\mathrm{diag}(\mathbf{a}_k)\,\mathbf{w} \tag{28}$$

Here $\mathrm{diag}(\mathbf{a}_k)$ is a diagonal matrix with $\mathbf{a}(k)$ in its main diagonal. The conditions for optimality can be derived similarly to (23), and the Lagrange multipliers can be obtained again as the solution of a linear system:

$$\boldsymbol{\alpha} = \left(\mathbf{K}_D + \frac{1}{\gamma}\mathbf{I}\right)^{-1}\left(\mathbf{I} - \frac{\lambda}{C}\mathbf{K}_D\right)\mathbf{y}_d \tag{29}$$

where $\mathbf{K}_D = \mathbf{A}\left(\mathbf{I} + \lambda\mathbf{D}\right)^{-1}\mathbf{A}^T$ and $\mathbf{D} = \sum_{k=1}^{P}\mathrm{diag}(\mathbf{a}_k)$. The response of the network becomes

$$y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\left(\mathbf{I} + \lambda\mathbf{D}\right)^{-1}\mathbf{A}^T\left(\boldsymbol{\alpha} + \frac{\lambda}{C}\mathbf{y}_d\right) \tag{30}$$

Similar results can be obtained if we start from (4). In this case the response will be:

$$y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\left(\lambda\mathbf{D}\right)^{-1}\mathbf{A}^T\left(\boldsymbol{\alpha} + \frac{\lambda}{C}\mathbf{y}_d\right) \tag{31}$$

where $\boldsymbol{\alpha}$ is also different from (29):

$$\boldsymbol{\alpha} = \left(\mathbf{A}\left(\lambda\mathbf{D}\right)^{-1}\mathbf{A}^T + \frac{1}{\gamma}\mathbf{I}\right)^{-1}\left(\mathbf{I} - \frac{\lambda}{C}\mathbf{A}\left(\lambda\mathbf{D}\right)^{-1}\mathbf{A}^T\right)\mathbf{y}_d \tag{32}$$

B. Real-time on-line learning

One of the most important advantages of kernel networks is that the solution can be obtained in kernel space instead of the possibly very high dimensional (even infinite) feature space. In the case of CMAC the dimension of the feature space can be extremely large for multivariate problems, especially if full-overlay versions are used. However, another feature of kernel machines is that the kernel solutions are obtained using batch methods instead of iterative techniques. This may be a drawback in many applications. The classical CMAC can be built using both approaches: the analytical solution is obtained using a batch method, or the network can be trained iteratively from sample to sample. Adaptive methods are useful when one wants to track changes in the problem. A further advantage of adaptive techniques is that they require less storage than batch methods, since data are used only as they arrive and need not be remembered for the future.

The kernel CMACs derived above (Eqs. 24, 25, 31, 32) all use batch methods. An adaptive solution can be derived if these equations are written in different forms. The response of any kernel CMAC can be written in a common form:

$$y(\mathbf{u}) = \boldsymbol{\Omega}(\mathbf{u})\,\boldsymbol{\beta} \tag{33}$$

where $\boldsymbol{\Omega}(\mathbf{u})$ is the kernel space representation of an input point $\mathbf{u}$, and $\boldsymbol{\beta}$ is the weight vector in the kernel space. $\boldsymbol{\Omega}(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\mathbf{A}^T$ for the classical CMAC (25), whereas $\boldsymbol{\Omega}(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\left(\mathbf{I} + \lambda\mathbf{D}\right)^{-1}\mathbf{A}^T$ and $\boldsymbol{\Omega}(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})\left(\lambda\mathbf{D}\right)^{-1}\mathbf{A}^T$ for the kernel CMACs with weight-smoothing (Eqs. (30) and (31), respectively). $\boldsymbol{\beta} = \boldsymbol{\alpha}$ for the classical CMAC and $\boldsymbol{\beta} = \boldsymbol{\alpha} + \frac{\lambda}{C}\mathbf{y}_d$ for the versions with weight-smoothing. An adaptive solution can be obtained if $\boldsymbol{\beta}$ is trained iteratively. Using the simple LMS rule the iterative training equation is:

$$\boldsymbol{\beta}(k+1) = \boldsymbol{\beta}(k) + 2\mu\,\boldsymbol{\Omega}(k)\,\mathbf{e}(k) \tag{34}$$

where $\boldsymbol{\Omega}(k)$ is a matrix formed from the kernel space representations of the training input vectors $\boldsymbol{\Omega}(\mathbf{u}_i)$, $i = 1,\dots,k$, and $\mathbf{e}(k) = [e_1, e_2, \dots, e_k]^T$ at the $k$-th training step. It should be mentioned that the size of $\boldsymbol{\Omega}(k)$ and the lengths of $\boldsymbol{\beta}(k)$ and $\mathbf{e}(k)$ change during training.

C. The generalization error based on kernel interpretation

The kernel interpretation has a further advantage. The generalization error of the CMAC network in the special cases defined in Section IV can be derived much more easily than using equations (8) and (9). According to (17) the response of a kernel machine for an arbitrary input value $u$ will be

$$y(u) = \sum_{k=1}^{P}\alpha_k K(u,u_k)$$

if no bias is used. For a univariate kernel CMAC $K(u,u_k)$ is a second-order B-spline function (a triangle function with $2C$ support), so the output of a CMAC is a weighted sum of triangle functions where the centres of the triangle kernels are at the training points in the input space.

The generalization error will be derived again with the assumption that all desired responses are the same: $\mathbf{y}_d = [D\; D\; \dots\; D]^T$. In this case $\alpha_i = \alpha$ for all $i$. This follows directly from the basic equations of the binary CMAC. Because $\mathbf{K}\boldsymbol{\alpha} = \mathbf{y}_d$, where $\mathbf{K} = \mathbf{A}\mathbf{A}^T$, $\alpha_i$ can be obtained as $\alpha_i = D\sum_j\left[\left(\mathbf{A}\mathbf{A}^T\right)^{-1}\right]_{i,j}$ for all $i$. As $\boldsymbol{\alpha} = \mathbf{K}^{-1}\mathbf{y}_d = \left(\mathbf{A}\mathbf{A}^T\right)^{-1}\mathbf{y}_d$ and $\mathbf{B} = \left(\mathbf{A}\mathbf{A}^T\right)^{-1}$, according to (8) and (9) the sums of the elements of the rows of $\mathbf{B}$ are equal, disregarding some boundary effects. That is, if all desired responses are the same, the response of the network is the sum of $P$ properly positioned triangle kernel functions with the same maximal value. As the output layer is linear, superposition can be applied to get the output values, and the generalization error given by (13) can be determined using elementary geometrical considerations. The details are given in APPENDIX C.

It should be mentioned that the kernel-based network representation and the simple geometrical considerations can be used to determine analytical expressions of the generalization error for multidimensional cases too. This can be done both for the Albus CMAC and for the kernel version if the training data are positioned at the vertices of an equidistant lattice.

VII. COMPLEXITY ISSUES AND ILLUSTRATIVE EXPERIMENTAL RESULTS

To evaluate the kernel CMAC, its complexity and the quality of its results should be compared to other CMAC versions. The complexity of the networks can be characterized by the size of the required weight memory and the complexity of training, while the quality, that is the approximation capability of the network, can be measured by the mean square error.

For memory complexity the kernel CMAC is compared to the Albus CMAC, full-overlay binary CMAC, HCMAC [23], and MS-CMAC [24]. Table I gives expressions of memory complexity and an example for C=128, N=6 and r=1024. Fig. 6 shows memory complexities as functions of the input dimension N for C=128 and r=1024.

TABLE I
THE MEMORY COMPLEXITY OF DIFFERENT CMAC VERSIONS

| Network version | Memory complexity | N=6, C=128, r=1024 | Number of elementary CMACs (dimension) |
|---|---|---|---|
| Albus CMAC | $(r+C-1)^N / C^{N-1}$ | ≈2^25 | 1 (N-D) |
| Full-overlay CMAC | $(r+C-1)^N$ | ≈2^60 | 1 (N-D) |
| HCMAC [23] | | 1.2·2^18 | N-1 (2-D) |
| MS-CMAC [24] | | ≈2^25 | (1-D) |
| Kernel CMAC | | ≤2^18 | 1 (N-D) |

Fig. 6. The memory complexity of different CMAC versions (memory size versus input dimension N for the full-overlay CMAC, Albus CMAC, MS-CMAC, HCMAC and kernel CMAC).

From the expressions and from the figure it is clear that the lowest complexity is achieved by HCMAC, at least for higher input dimensions. A further advantage of this solution is that the self-organizing version of HCMAC [23] can reduce the size of the weight memory further. However, its computational complexity and training time are significantly larger than in the case of the classical CMAC and the kernel CMAC. It can also be seen that the memory complexity of the kernel CMAC is exponential in N if the training points are positioned on a regular equidistant lattice. On the other hand, in a kernel CMAC the size of the weight memory is upper bounded by the number of training points, so this exponentially increasing memory size is obtained only if the training points are placed at all vertices of a multidimensional lattice. Using less training data the memory complexity will decrease. It must be mentioned that memory complexity can be further reduced if a sparse kernel machine is constructed. In this respect the general results on the construction of sparse LS-SVMs can be utilized (see e.g. [43], [45], [46]).

For comparing the different CMAC versions computational complexity is also an important feature.
As training time greatly depends on the implementation, here we compare the number of elementary CMACs. In Table I the number of elementary CMACs and the number of their dimensions are given. If more than one network is used, the elementary networks should be trained separately (MS-CMAC) or a special backpropagation algorithm should be applied (HCMAC). As HCMAC applies Gaussian basis functions, and the kernel CMAC applies B-spline kernel functions, these two architectures need multipliers. This is the case for all networks using higher-order basis functions.

The different kernel versions of the CMAC network were validated by extensive experiments. Here only the results for the simple (1D and 2D) sinc function approximation will be presented. For the tests both noiseless and noisy training data were used. Figs. 7 and 8 show the responses of the binary CMAC and the CMAC with weight-smoothing regularization, respectively. Similar results can be obtained for both the original and the kernel versions. In these experiments the inputs were quantized into 8 bits and they were taken from the range [-4,+4].

Fig. 7. The response of the CMAC (Eq. 6) with C=8, d=5.

Fig. 8. The response of the CMAC with weight smoothing, C=8, d=5.

Figs. 9, 10 and 11 show responses for noisy training data. In these cases the input range was [-6,+6], and the inputs were quantized into 9 bits. Gaussian noise with zero mean and $\sigma$ = 0.3 was added to the ideal desired outputs. In all figures the solid lines with larger line width show the response of the network, while those with smaller line width show the ideal function. The training points are marked separately.

Fig. 9. The response of the CMAC (Eq. 6) with C=32, d=3 (noisy data).

Fig. 10. The response of the kernel CMAC without weight-smoothing (Eq. 25), C=32, d=3, $\gamma$=0.1.

Fig. 11. The response of the kernel CMAC with weight-smoothing (Eq. 30), C=32, d=3, $\lambda$=4000, $\gamma$=0.1.

In Fig. 12 the mean squared true generalization error is drawn for both the binary Albus CMAC and the regularized version for the one-dimensional sinc function. As expected, the errors in the two cases are the same when C/d is an integer; for other cases, however, the regularization reduces the generalization error significantly, in some cases by orders of magnitude.

Fig. 12. The generalization mean squared error as a function of C/d in the case of the sinc function (C=16, d=2...16; Albus CMAC and regularized CMAC).

Similar results can be obtained for multivariate problems, as shown in Figs. 13 and 14. These figures show that, concerning the generalization error, the response of a multidimensional kernel CMAC will be similar to that of the one-dimensional networks. It can also be seen that weight-smoothing regularization reduces this error by approximately two orders of magnitude (the plot titles report mse=0.001341 without and mse=2.3777e-005 with weight-smoothing for C=4, d=3).

Fig. 13. The response of the kernel CMAC without weight-smoothing (Eq. 25), C=4, d=3, $\gamma$=0.1.

Table II compares the RMS error of the different CMAC versions for the 2D sinc function with C=8.

TABLE II
COMPARISON OF RMS APPROXIMATION ERRORS OF THE DIFFERENT CMAC VERSIONS

| C=8 | No. of training points (P) | Albus CMAC | Kernel CMAC | Regularized Kernel CMAC | H-CMAC | MS-CMAC |
|---|---|---|---|---|---|---|
| d=2 | 576 | 0.0092 | 0.0029 | 0.0029 | 0.0058 | 0.0092 |
| d=3 | 256 | 0.0233 | 0.0209 | 0.0083 | 0.0146 | 0.0233 |
| d=4 | 144 | 0.0166 | 0.0124 | 0.0124 | 0.0254 | 0.0166 |
| d=5 | 81 | 0.0480 | 0.0458 | 0.0117 | 0.0406 | 0.0480 |
| d=6 | 64 | 0.0737 | 0.0658 | 0.0094 | 0.0398 | 0.0737 |
| d=7 | 49 | 0.0849 | 0.0711 | 0.0436 | 0.0664 | 0.0849 |
| d=8 | 36 | 0.0534 | 0.0484 | 0.0484 | 0.0929 | 0.0534 |
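A one-dimensional experiment of this kind can be sketched in a few lines. The block below is only an illustration under stated assumptions, not the authors' MATLAB code. It assumes the weight-smoothing solution in the form $\mathbf{K}_D = \mathbf{A}(\mathbf{I}+\lambda\mathbf{D})^{-1}\mathbf{A}^T$, $\boldsymbol{\alpha} = (\mathbf{K}_D + \mathbf{I}/\gamma)^{-1}(\mathbf{I} - (\lambda/C)\mathbf{K}_D)\mathbf{y}_d$ and $y(\mathbf{u}) = \mathbf{a}^T(\mathbf{u})(\mathbf{I}+\lambda\mathbf{D})^{-1}\mathbf{A}^T(\boldsymbol{\alpha} + (\lambda/C)\mathbf{y}_d)$, a simple association-vector layout (input `u` activates bits `u..u+C-1`), NumPy's normalized `np.sinc` as the target, and illustrative values `gamma = lam = 100` that are not taken from the paper. The function names are hypothetical.

```python
import numpy as np

def association_matrix(u, C, r):
    """Rows are binary association vectors (assumed 1-D layout:
    input u activates the C consecutive bits u .. u+C-1)."""
    A = np.zeros((len(u), r + C - 1))
    for row, ui in enumerate(u):
        A[row, ui:ui + C] = 1.0
    return A

def kernel_cmac_response(A, A_query, y_d, C, gamma, lam):
    """Kernel CMAC with weight-smoothing; lam = 0 gives the plain
    kernel CMAC (ridge regression / LS-SVM solution)."""
    P = A.shape[0]
    D = A.sum(axis=0)                    # diagonal of D = sum_k diag(a_k)
    M_inv = 1.0 / (1.0 + lam * D)        # diagonal of (I + lam*D)^-1
    K_D = (A * M_inv) @ A.T              # A (I + lam*D)^-1 A^T
    rhs = y_d - (lam / C) * (K_D @ y_d)
    alpha = np.linalg.solve(K_D + np.eye(P) / gamma, rhs)
    beta = alpha + (lam / C) * y_d       # weight vector in kernel space
    return (A_query * M_inv) @ A.T @ beta

C, d, r = 8, 5, 256                      # C/d is not an integer
gamma, lam = 100.0, 100.0                # illustrative values only

u_train = np.arange(0, r, d)
u_test = np.arange(r)
x_train = -4.0 + 8.0 * u_train / (r - 1)
x_test = -4.0 + 8.0 * u_test / (r - 1)
y_d = np.sinc(x_train)                   # noiseless training data
y_true = np.sinc(x_test)

A = association_matrix(u_train, C, r)
A_test = association_matrix(u_test, C, r)

y_plain = kernel_cmac_response(A, A_test, y_d, C, gamma, 0.0)
y_smooth = kernel_cmac_response(A, A_test, y_d, C, gamma, lam)

mse_plain = np.mean((y_plain - y_true) ** 2)
mse_smooth = np.mean((y_smooth - y_true) ** 2)
print(f"mse without smoothing: {mse_plain:.3e}")
print(f"mse with smoothing:    {mse_smooth:.3e}")
```

Because `D` is diagonal, the inverse of `I + lam*D` is applied elementwise, so the only linear system to solve has the size of the training set. The mean squared errors are computed over all quantized inputs, so they measure the error between the training points as well as at them.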
For Table II the input space was quantized into 48*48 discrete values, and all the 48*48 test points were evaluated in all cases. The figures in the table show that the regularized kernel CMAC gives the best approximation. Similar results can be obtained for other experiments.

Fig. 14. The response of the kernel CMAC with weight-smoothing (Eq. 30), C=4, d=3, $\gamma$=0.1, $\lambda$=4000.

Simulation results also show that on-line training is efficient and the convergence is fast: a few (2-3) epochs of the training data are enough to reach the batch solutions within the numerical accuracy of MATLAB if $\mu$ is properly selected. Fig. 15 shows the training curve of the 1D sinc problem with the same parameters as in Fig. 7 if $\mu$=0.85.

Fig. 15. Training curve of an iteratively trained regularized kernel CMAC.

VIII. CONCLUSIONS

In this paper it was shown that a CMAC network, in spite of its advantageous properties, has some serious drawbacks: its modeling capability is inferior to that of an MLP, and its generalization error can be rather significant; moreover, its complexity for multivariate cases can be extremely high, which may be an obstacle to the implementation of the network. Although in the last ten to fifteen years many aspects of the CMAC were analyzed and several different extensions of the network have been developed, the modeling and generalization capabilities have been studied only to a rather limited extent.

The main goal of this paper was to deal with these properties, to analyze the reasons for these errors and to find new possibilities to reduce both generalization and modeling errors. The reason for the modeling error is the reduced number of basis functions, whereas the poor generalization comes from the architecture of the Albus CMAC. Weight smoothing introduced in the paper is a simple way to reduce generalization error.
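On the other hand the new kernel interpretation gives a general framework, and helps to construct a network version where the modeling error is reduced without increasing the complexity of the network. Although there is a huge difference in complexity between the binary Albus CMAC and the full-overlay CMAC in their original forms, this difference disappears using the kernel interpretation. Here only the kernel functions will be different. In the paper it was also shown that the weight-smoothing regularization can be applied to the kernel CMAC (and to other kernel machines with kernels of compact support) too, so both modeling and generalization errors can be reduced using the proposed solution. As the effect of weight smoothing depends on the positions of the training input points, weight-smoothing regularization actually means that adaptive, data-dependent kernel functions are used. Finally, the possibility of adaptive training ensures that the main advantages of the classical CMAC (adaptive operation, fast training, simple digital hardware implementation) can be maintained, although the multiplierless structure is lost [47].

APPENDIX A

Assuming that the training data are positioned uniformly, where the distance of the neighboring samples is $d$, a good approximation of $\mathbf{B} = \left(\mathbf{A}\mathbf{A}^T\right)^{-1}$ can be obtained as follows. The general form of $\mathbf{A}\mathbf{A}^T$ in this case is

$$\mathbf{A}\mathbf{A}^T = \begin{bmatrix}
C & C-d & C-2d & \cdots & C-zd & 0 & 0 & \cdots \\
C-d & C & C-d & C-2d & \cdots & C-zd & 0 & \cdots \\
C-2d & C-d & C & C-d & C-2d & \cdots & C-zd & \cdots \\
\vdots & & & \ddots & & & & \vdots \\
0 & \cdots & 0 & C-zd & \cdots & C-2d & C-d & C
\end{bmatrix} \tag{A.1}$$

This shows that $\mathbf{A}\mathbf{A}^T$ is a Toeplitz matrix with $2z+1$ diagonals. There is no known result for the analytical inverse of a general Toeplitz matrix; however, for some special cases, where $z=1$ or $z=2$, analytical results can be obtained [36], [37]. The case of $z=1$ is an important one and it will be discussed in APPENDIX B. The case of $z=2$ could be solved according to [37]; however, the result is extremely complicated and cannot be used directly for getting an analytical result for the generalization error. For larger $z$ there is no known result at all. Here another approach will be used which results in only an approximation of $\mathbf{B} = \left(\mathbf{A}\mathbf{A}^T\right)^{-1}$; however, according to extensive simulations the approximation is highly accurate.

It is known that the inverse of $\mathbf{A}\mathbf{A}^T$ is not a Toeplitz matrix [48]; however, the inner part of the inverse, if we do not regard a few rows and columns of the matrix near its edges, will be Toeplitz. Moreover, forming a cyclic matrix from $\mathbf{A}\mathbf{A}^T$ by adding proper values to the upper right and the lower left corners of $\mathbf{A}\mathbf{A}^T$, there is a conjecture that the inverse will be a Toeplitz one, which can be determined quite easily, and the inner parts of the two inverses will be the same [49]. Applying this approach, regardless of some values near the edges of the matrix, a rather accurate inverse can be obtained, as was justified by extensive numerical tests.

We will begin with an elementary cyclic matrix $\boldsymbol{\Omega}$:

$$\boldsymbol{\Omega} = \begin{bmatrix}
0 & 1 & 0 & \cdots & 0 \\
0 & 0 & 1 & \cdots & 0 \\
\vdots & & & \ddots & \vdots \\
0 & 0 & 0 & \cdots & 1 \\
1 & 0 & 0 & \cdots & 0
\end{bmatrix} \tag{A.2}$$

The cyclic extension of $\mathbf{A}\mathbf{A}^T$ can be written as a matrix polynomial of $\boldsymbol{\Omega}$:

$$\mathbf{A}\mathbf{A}^T_{cyc} = C\,\mathbf{I} + (C-d)\left(\boldsymbol{\Omega} + \boldsymbol{\Omega}^{-1}\right) + (C-2d)\left(\boldsymbol{\Omega}^{2} + \boldsymbol{\Omega}^{-2}\right) + \dots + (C-zd)\left(\boldsymbol{\Omega}^{z} + \boldsymbol{\Omega}^{-z}\right) \tag{A.3}$$

where $\mathbf{I}$ is the identity matrix. The spectral representation of $\boldsymbol{\Omega}$ can be written as [49]:

$$\boldsymbol{\Omega} = \mathbf{F}\,\mathrm{diag}\left(1,\; e^{j\frac{2\pi}{n}},\; e^{j\frac{4\pi}{n}},\; \dots,\; e^{j\frac{2\pi(n-1)}{n}}\right)\mathbf{F}^H \tag{A.4}$$

where $\mathbf{F}$ is the Fourier matrix, i.e.

$$F_{k,l} = \frac{1}{\sqrt{n}}\, e^{j\frac{2\pi(k-1)(l-1)}{n}}. \tag{A.5}$$

For the spectral representation it is true that if $\mathbf{A}\mathbf{A}^T_{cyc} = \mathbf{F}\boldsymbol{\Lambda}\mathbf{F}^H$, where $\mathbf{F}^H$ is the conjugate transpose of $\mathbf{F}$, then

$$f\left(\mathbf{A}\mathbf{A}^T_{cyc}\right) = \mathbf{F}\,\mathrm{diag}\left(f(\lambda_k)\right)\mathbf{F}^H \tag{A.6}$$

and

$$\left(\mathbf{A}\mathbf{A}^T_{cyc}\right)^{-1} = \mathbf{F}\,\mathrm{diag}\left(\frac{1}{\lambda_k}\right)\mathbf{F}^H. \tag{A.7}$$

Using that

$$\lambda_k = C + (C-d)\,2\cos\frac{2k\pi}{n} + (C-2d)\,2\cos\frac{4k\pi}{n} + \dots + (C-zd)\,2\cos\frac{2zk\pi}{n} \tag{A.8}$$

the $(i,j)$-th element of the inverse matrix can be written as:

$$B_{i,j} = \left[\left(\mathbf{A}\mathbf{A}^T_{cyc}\right)^{-1}\right]_{i,j} = \frac{1}{n}\sum_{k=0}^{n-1}\frac{\cos\dfrac{2k\pi(j-i)}{n}}{C + 2C\displaystyle\sum_{l=1}^{z}\cos\frac{2kl\pi}{n} - 2d\sum_{l=1}^{z} l\cos\frac{2kl\pi}{n}} \tag{A.9}$$

Determining the finite sums in the denominator, the result of (8) is obtained:

$$B_{i,j} = \frac{1}{n}\sum_{k=0}^{n-1}\frac{\cos\dfrac{2k\pi(j-i)}{n}\left(\cos\dfrac{2k\pi}{n} - 1\right)}{(C-zd)\cos\dfrac{2k\pi(z+1)}{n} + (zd+d-C)\cos\dfrac{2k\pi z}{n} - d} \tag{A.10}$$

APPENDIX B

When $z=1$, $\mathbf{A}\mathbf{A}^T$ is a tridiagonal Toeplitz matrix:

$$\mathbf{A}\mathbf{A}^T = \begin{bmatrix}
C & C-d & 0 & \cdots & 0 \\
C-d & C & C-d & \cdots & 0 \\
0 & C-d & C & \ddots & \vdots \\
\vdots & & \ddots & \ddots & C-d \\
0 & 0 & \cdots & C-d & C
\end{bmatrix} \tag{B.1}$$

Its inverse can be determined using $\mathbf{R} = \left(x\mathbf{I} + \mathbf{K}\right)^{-1}$ where

$$x\mathbf{I} + \mathbf{K} = \begin{bmatrix}
x & 1 & 0 & \cdots & 0 \\
1 & x & 1 & \cdots & 0 \\
0 & 1 & x & \ddots & \vdots \\
\vdots & & \ddots & \ddots & 1 \\
0 & 0 & \cdots & 1 & x
\end{bmatrix} \tag{B.2}$$

Here $\mathbf{I}$ is the identity matrix and $\mathbf{K}$ is a matrix where only the first two subdiagonals (the ones directly above and below the main diagonal) contain ones, all other elements are zeros. As

$$\left(\mathbf{A}\mathbf{A}^T\right)^{-1} = \left(C\,\mathbf{I} + (C-d)\,\mathbf{K}\right)^{-1} = \frac{1}{C-d}\,\mathbf{R} \tag{B.3}$$

where $x = \dfrac{C}{C-d}$, the requested inverse can be determined if $\mathbf{R}$ is known. When $z=1$, $x = \dfrac{C}{C-d} \ge 2$, and using the substitution $x = 2\,\mathrm{ch}\,\Theta$ we can find the solution [48], [50]. The elements of $\mathbf{R}$ $[n \times n]$ can be written as:

$$R_{i,j} = \begin{cases}
(-1)^{i+j}\,\dfrac{\mathrm{sh}(i\Theta)\,\mathrm{sh}\left((n+1-j)\Theta\right)}{\mathrm{sh}\,\Theta\;\mathrm{sh}\left((n+1)\Theta\right)} & \text{if } i \le j \\[2ex]
(-1)^{i+j}\,\dfrac{\mathrm{sh}(j\Theta)\,\mathrm{sh}\left((n+1-i)\Theta\right)}{\mathrm{sh}\,\Theta\;\mathrm{sh}\left((n+1)\Theta\right)} & \text{if } i > j
\end{cases} \qquad i = 1,2,\dots,n \tag{B.4}$$

and, for a row in the middle of the matrix ($2i = n+1$),

$$R_{i,i+l} = (-1)^{l}\,\frac{\mathrm{sh}(i\Theta)\left[\mathrm{sh}(i\Theta)\,\mathrm{ch}(l\Theta) - \mathrm{ch}(i\Theta)\,\mathrm{sh}(l\Theta)\right]}{\mathrm{sh}\,\Theta\;2\,\mathrm{sh}(i\Theta)\,\mathrm{ch}(i\Theta)} = (-1)^{l}\left[\frac{\mathrm{ch}(l\Theta)}{2\,\mathrm{sh}\,\Theta}\,\mathrm{th}\left(\frac{n+1}{2}\Theta\right) - \frac{\mathrm{sh}(l\Theta)}{2\,\mathrm{sh}\,\Theta}\right] \tag{B.5}$$

If $n$ is large enough we can use

$$R_{i,i+l} \approx \lim_{n\to\infty}(-1)^{l}\left[\frac{\mathrm{ch}(l\Theta)}{2\,\mathrm{sh}\,\Theta}\,\mathrm{th}\left(\frac{n+1}{2}\Theta\right) - \frac{\mathrm{sh}(l\Theta)}{2\,\mathrm{sh}\,\Theta}\right] = (-1)^{l}\,\frac{\mathrm{ch}(l\Theta) - \mathrm{sh}(l\Theta)}{2\,\mathrm{sh}\,\Theta} = (-1)^{l}\,\frac{\left(\mathrm{ch}\,\Theta - \mathrm{sh}\,\Theta\right)^{l}}{2\,\mathrm{sh}\,\Theta} \tag{B.6}$$

from which the result of (9) follows directly.

APPENDIX C

For equidistant training input positions and constant desired responses three different basic situations can occur. These are shown in Fig. C.1. The figure shows the kernel functions (triangle functions), the true output values (constant $D$) and the response of the trained CMACs (thin solid line) according to the three basic situations. In a.) $(C-d) > 0.5d$, in b.) $(C-d) = 0.5d$ and in c.) $(C-d) < 0.5d$. In all these cases $\lfloor C/d \rfloor = 1$.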
Similar three situations can be distinguished in the more general cases when $z = \lfloor C/d \rfloor$ and $z > 1$. These are a.) $\mathrm{mod}(C,d) > 0.5d$, b.) $\mathrm{mod}(C,d) = 0.5d$, and c.) $\mathrm{mod}(C,d) < 0.5d$. As can be seen in the figure, the responses of the network at the training points in all three situations are exactly equal to the desired response (small circles), but in between there will be a generalization error (denoted by $H$). $H$ can be determined directly from the figure. As the maximal value of the triangle functions is $\alpha$, the generalization error for the three different cases will be as follows if $\lfloor C/d \rfloor = 1$:

$$H_a = \alpha\,\frac{2d-C}{C} \tag{C.1}$$

$$H_b = \alpha\,\frac{C-d}{C} = \alpha\,\frac{2d-C}{C} \tag{C.2}$$

$$H_c = \alpha\,\frac{C-d}{C} \tag{C.3}$$

Fig. C.1. The derivation of the generalization error for the three basic situations (a), (b) and (c).

The three equations can be united:

$$H = \alpha\,\frac{\min\left(C-d,\; 2d-C\right)}{C}. \tag{C.4}$$

The generalization error can be similarly described for more general cases when $z = \lfloor C/d \rfloor$. In this case the united expression of the generalization error will be

$$H = \alpha\,\frac{\min\left(\mathrm{mod}(C,d),\; d-\mathrm{mod}(C,d)\right)}{C}. \tag{C.5}$$

The next step is to determine the value of $\alpha$. This can be done if we write the output value of the network at the training points. The response of a CMAC for the three special cases can be written in the following form:

$$y(u_i) = \alpha\left(1 + 2\sum_{l=1}^{z}\frac{C-ld}{C}\right) = \alpha\left(1 + 2z - z(z+1)\frac{d}{C}\right) \tag{C.6}$$

As according to our assumption $y(u_i) = D$, we will get

$$\alpha = \frac{D}{1 + 2z - z(z+1)\dfrac{d}{C}} = \frac{D}{1 + \left\lfloor\dfrac{C}{d}\right\rfloor\left(2 - \left(\left\lfloor\dfrac{C}{d}\right\rfloor + 1\right)\dfrac{d}{C}\right)} \tag{C.7}$$

From (C.7) and (C.5) the generalization error will be

$$H = \frac{\min\left(\mathrm{mod}(C,d),\; d-\mathrm{mod}(C,d)\right)}{C\left(1 + \left\lfloor\dfrac{C}{d}\right\rfloor\left(2 - \left(\left\lfloor\dfrac{C}{d}\right\rfloor + 1\right)\dfrac{d}{C}\right)\right)}\,D, \tag{C.8}$$

which is the same as (13).

ACKNOWLEDGEMENT

The authors would like to thank the anonymous reviewers for their valuable comments.

REFERENCES

[1] J. S. Albus, "A New Approach to Manipulator Control: The Cerebellar Model Articulation Controller (CMAC)," Transaction of the ASME, pp. 220-227, Sep. 1975.
[2] J. S. Ker, Y. H. Kuo and B. D. Liu, "Hardware Realization of Higher-order CMAC Model for Color Calibration," Proc. of the IEEE Int.
Conf. on Neural Networks, Perth, vol. 4, pp. 1656-1661, 1995.
[3] J. S. Ker, Y. H. Kuo, R. C. Wen and B. D. Liu, "Hardware Implementation of CMAC Neural Network with Reduced Storage Requirement," IEEE Trans. on Neural Networks, vol. 8, pp. 1545-1556, Nov. 1997.
[4] T. W. Miller III., F. H. Glanz and L. G. Kraft, "CMAC: An Associative Neural Network Alternative to Backpropagation," Proceedings of the IEEE, vol. 78, pp. 1561-1567, Oct. 1990.
[5] P. C. Parks and J. Militzer, "Convergence Properties of Associative Memory Storage for Learning Control System," Automat. Remote Contr., vol. 50, pp. 254-286, 1989.
[6] D. Ellison, "On the Convergence of the Multidimensional Albus Perceptron," The International Journal of Robotics Research, vol. 10, pp. 338-357, 1991.
[7] D. E. Thompson and S. Kwon, "Neighbourhood Sequential and Random Training Techniques for CMAC," IEEE Trans. on Neural Networks, vol. 6, pp. 196-202, Jan. 1995.
[8] M. Brown, C. J. Harris and P. C. Parks, "The Interpolation Capabilities of the Binary CMAC," Neural Networks, vol. 6, no. 3, pp. 429-440, 1993.
[9] M. Brown and C. J. Harris, Neurofuzzy Adaptive Modeling and Control, Prentice Hall, New York, 1994.
[10] S. H. Lane, D. A. Handelman and J. J. Gelfand, "Theory and Development of Higher-Order CMAC Neural Networks," IEEE Control Systems Magazine, vol. 12, pp. 23-30, Apr. 1992.
[11] C. T. Chiang and C. S. Lin, "Learning Convergence of CMAC Technique," IEEE Trans. on Neural Networks, vol. 8, pp. 1281-1292, Nov. 1996.
[12] C. T. Chiang and C. S. Lin, "CMAC with general basis function," Neural Networks, vol. 9, no. 7, pp. 1199-1211, 1998.
[13] F. J. Gonzalez-Serrano, A. R. Figueiras and A. Artes-Rodriguez, "Generalizing CMAC architecture and training," IEEE Trans. on Neural Networks, vol. 9, pp. 1509-1514, Nov. 1998.
[14] T. Szabó and G. Horváth, "CMAC and its Extensions for Efficient System Modelling," International Journal of Applied Mathematics and Computer Science, vol. 9, no. 3, pp. 571-598, 1999.
[15] T. Szabó and G. Horváth, "Improving the Generalization Capability of the Binary CMAC," Proc. Int. Joint Conf. on Neural Networks, IJCNN'2000, Como, Italy, vol. 3, pp. 85-90, 2000.
[16] R. E. Crochiere and L. R. Rabiner, Multirate Digital Signal Processing, Prentice Hall, 1983.
[17] L. Zhong, Z. Zhongming and Z. Chongguang, "The Unfavorable Effects of Hash Coding on CMAC Convergence and Compensatory Measure," IEEE International Conference on Intelligent Processing Systems, Beijing, China, pp. 419-422, 1997.
[18] Z. Q. Wang, J. L. Schiano and M. Ginsberg, "Hash Coding in CMAC Neural Networks," Proc. of the IEEE International Conference on Neural Networks, Washington, USA, vol. 3, pp. 1698-1703, 1996.
[19] C. S. Lin and C. K. Li, "A New Neural Network Structure Composed of Small CMACs," Proc. of the IEEE International Conference on Neural Networks, Washington, USA, vol. 3, pp. 1777-1783, 1996.
[20] C. S. Lin and C. K. Li, "A Low-Dimensional-CMAC-Based Neural Network," Proc. of IEEE International Conference on Systems, Man and Cybernetics, vol. 2, pp. 1297-1302, 1996.
[21] C. K. Li and C. S. Lin, "Neural Networks with Self-Organized Basis Functions," Proc. of the IEEE Int. Conf. on Neural Networks, Anchorage, Alaska, vol. 2, pp. 1119-1224, 1998.
[22] C. K. Li and C. T. Chiang, "Neural Networks Composed of Single-variable CMACs," Proc. of the 2004 IEEE International Conference on Systems, Man and Cybernetics, The Hague, The Netherlands, pp. 3482-3487, 2004.
[23] H. M. Lee, C. M. Chen and Y. F. Lu, "A Self-Organizing HCMAC Neural-Network Classifier," IEEE Trans. on Neural Networks, vol. 14, pp. 15-27, Jan. 2003.
[24] S. L. Hung and J. C. Jan, "MS_CMAC Neural Network Learning Model in Structural Engineering," Journal of Computing in Civil Engineering, pp. 1-11, Jan. 1999.
[25] J. C. Jan and S. L. Hung, "High-Order MS_CMAC Neural Network," IEEE Trans. on Neural Networks, vol. 12, pp. 598-603, May 2001.
[26] Chin-Ming Hong and Din-Wu Wu, "An Association Memory Mapped Approach of CMAC Neural Networks Using Rational Interpolation Method for Memory Requirement Reduction," Proc. of the IASTED International Conference, Honolulu, USA, pp. 153-158, 2000.
[27] J. S. Albus, "Data Storage in the Cerebellar Model Articulation Controller," J. Dyn. Systems, Measurement Contr., vol. 97, no. 3, pp. 228-233, 1975.
[28] K. Zhang and F. Qian, "Fuzzy CMAC and Its Application," Proc. of the 3rd World Congress on Intelligent Control and Automation, Hefei, P.R. China, pp. 944-947, 2000.
[29] H. R. Lai and C. C. Wong, "A Fuzzy CMAC Structure and Learning Method for Function Approximation," Proc. of the 10th International Conference on Fuzzy Systems, Melbourne, Australia, vol. 1, pp. 436-439, 2001.
[30] W. Shitong and L. Hongjun, "Fuzzy System and CMAC Network with B-Spline Membership/Basis Functions can Approximate a Smooth Function and its Derivatives," International Journal of Computational Intelligence and Applications, vol. 3, no. 3, pp. 265-279, 2003.
[31] C. J. Lin, H. J. Chen and C. Y. Lee, "A Self-Organizing Recurrent Fuzzy CMAC Model for Dynamic System Identification," Proc. of the 2004 IEEE International Conference on Fuzzy Systems, Budapest, Hungary, vol. 3, pp. 697-702, 2004.
[32] J. Moody, "Fast learning in multi-resolution hierarchies," in Advances in Neural Information Processing Systems, vol. 1, Morgan Kaufmann, pp. 29-39, 1989.
[33] H. Kim and C. S. Lin, "Use adaptive resolution for better CMAC learning," Baltimore, MD, USA, 1992.
[34] S. F. Su, T. Tao and T. H. Hung, "Credit assigned CMAC and Its Application to Online Learning Robot Controllers," IEEE Trans. on Systems Man and Cybernetics - Part B, vol. 33, pp. 202-213, Apr. 2003.
[35] T. Qin and Z. Chen, "A Learning Algorithm of CMAC based on RLS," Neural Processing Letters, vol. 19, pp. 49-61, 2004.
[36] T. Qin, Z. Chen, H. Zhang and W. Xiang, "Continuous CMAC-QRLS and Its Systolic Array," Neural Processing Letters, vol.
22, pp. 1-16, 2005.
[37] S. Simons, "Analytical inversion of a particular type of banded matrix," Journal of Physics A: Mathematical and General, vol. 30, pp. 755-763, 1997.
[38] D. A. Lavis, B. W. Southern and I. F. Wilde, "The Inverse of a Semi-infinite Symmetric Banded Matrix," Journal of Physics A: Mathematical and General, vol. 30, pp. 7229-7241, 1997.
[39] V. Vapnik, Statistical Learning Theory, Wiley, New York, 1995.
[40] R. L. Smith, "Intelligent Motion Control with an Artificial Cerebellum," PhD thesis, University of Auckland, New Zealand, 1998.
[41] J. Palotta and L. G. Kraft, "Two-Dimensional Function Learning Using CMAC Neural Network with Optimized Weight Smoothing," Proc. of the American Control Conference, San Diego, USA, 1999, pp. 373-377.
[42] C. de Boor, A Practical Guide to Splines, Revised Edition, Springer, 2001.
[43] J. A. K. Suykens, T. Van Gestel, J. De Brabanter, B. De Moor and J. Vandewalle, Least Squares Support Vector Machines, World Scientific, Singapore, 2002.
[44] C. Saunders, A. Gammerman and V. Vovk, "Ridge Regression Learning Algorithm in Dual Variables," Proc. of the Fifteenth Int. Conf. on Machine Learning, pp. 515-521, 1998.
[45] J. Valyon and G. Horváth, "A Sparse Least Squares Support Vector Machine Classifier," Proc. of the International Joint Conference on Neural Networks IJCNN 2004, 2004, pp. 543-548.
[46] J. Valyon and G. Horváth, "A generalized LS-SVM," SYSID'2003, Rotterdam, 2003, pp. 827-832.
[47] G. Horváth and Zs. Csipak, "FPGA Implementation of the Kernel CMAC," Proc. of the International Joint Conference on Neural Networks, IJCNN'2004, Budapest, Hungary, pp. 3143-3148, 2004.
[48] G. Golub and C. F. Van Loan, Matrix Computations, Johns Hopkins University Press, 3rd edition, 1996.
[49] P. Rózsa, Budapest University of Technology and Economics, Budapest, Hungary, private communication, May 2000.
[50] P. Rózsa, Linear algebra and its applications, Tankönyvkiadó, Budapest, Hungary, 1997. (in Hungarian)

Gábor Horváth (M'95-SM'03) received the Dipl. Eng. degree (EE) from the Budapest University of Technology and Economics in 1970 and the candidate of science (PhD) degree from the Hungarian National Academy of Sciences in digital signal processing in 1988. Since 1970 he has been with the Department of Measurement and Information Systems, Budapest University of Technology and Economics, where he has held various teaching positions. Currently he is an Associate Professor and Deputy Head of the Department. He has published more than 70 papers and he is the coauthor of 8 books. His research and educational activity is related to the theory and application of neural networks and machine learning.

Tamás Szabó was born in Budapest, Hungary. He received an MSc degree in electrical engineering and a PhD degree from Budapest University of Technology and Economics in 1994 and in 2001, respectively. From 1997 to 1999, he was a research assistant at the Department of Measurement and Information Systems, Budapest University of Technology and Economics. From 1999 to 2002 he worked as a DSP research and development engineer. Since 2002 he has taken part in seismic digital data acquisition system development. His research interests cover the areas of digital and analog signal processing and neural networks. He has published more than 15 scientific papers, and owns a patent in the field of acoustic signal processing.