Evaluating Interactions in Mixed Models

advertisement

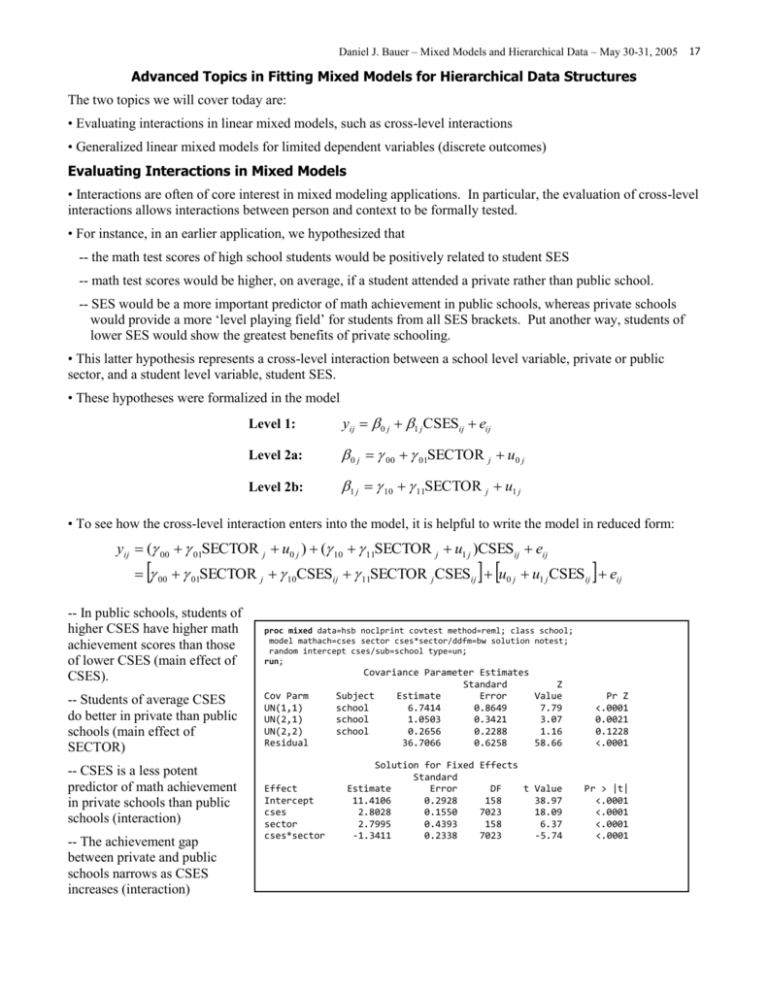

Daniel J. Bauer – Mixed Models and Hierarchical Data – May 30-31, 2005

Advanced Topics in Fitting Mixed Models for Hierarchical Data Structures

The two topics we will cover today are:
• Evaluating interactions in linear mixed models, such as cross-level interactions
• Generalized linear mixed models for limited dependent variables (discrete outcomes)

Evaluating Interactions in Mixed Models

• Interactions are often of core interest in mixed modeling applications. In particular, evaluating cross-level interactions allows interactions between person and context to be formally tested.

• For instance, in an earlier application, we hypothesized that
-- the math test scores of high school students would be positively related to student SES;
-- math test scores would be higher, on average, for students attending a private rather than a public school;
-- SES would be a more important predictor of math achievement in public schools, whereas private schools would provide a more ‘level playing field’ for students from all SES brackets. Put another way, students of lower SES would show the greatest benefits of private schooling.

• This last hypothesis represents a cross-level interaction between a school-level variable, sector (private or public), and a student-level variable, student SES.

• These hypotheses were formalized in the model

Level 1: $y_{ij} = \beta_{0j} + \beta_{1j}\text{CSES}_{ij} + e_{ij}$
Level 2a: $\beta_{0j} = \gamma_{00} + \gamma_{01}\text{SECTOR}_j + u_{0j}$
Level 2b: $\beta_{1j} = \gamma_{10} + \gamma_{11}\text{SECTOR}_j + u_{1j}$

• To see how the cross-level interaction enters into the model, it is helpful to write the model in reduced form:

$$y_{ij} = (\gamma_{00} + \gamma_{01}\text{SECTOR}_j + u_{0j}) + (\gamma_{10} + \gamma_{11}\text{SECTOR}_j + u_{1j})\text{CSES}_{ij} + e_{ij}$$
$$y_{ij} = \gamma_{00} + \gamma_{01}\text{SECTOR}_j + \gamma_{10}\text{CSES}_{ij} + \gamma_{11}\text{SECTOR}_j\text{CSES}_{ij} + u_{0j} + u_{1j}\text{CSES}_{ij} + e_{ij}$$

• The model is fit in SAS with:

    proc mixed data=hsb noclprint covtest method=reml;
      class school;
      model mathach=cses sector cses*sector/ddfm=bw solution notest;
      random intercept cses/sub=school type=un;
    run;

    Covariance Parameter Estimates
    Cov Parm   Subject   Estimate   Standard Error   Z Value   Pr Z
    UN(1,1)    school      6.7414       0.8649          7.79   <.0001
    UN(2,1)    school      1.0503       0.3421          3.07   0.0021
    UN(2,2)    school      0.2656       0.2288          1.16   0.1228
    Residual              36.7066       0.6258         58.66   <.0001

    Solution for Fixed Effects
    Effect        Estimate   Standard Error     DF   t Value   Pr > |t|
    Intercept      11.4106       0.2928        158     38.97    <.0001
    cses            2.8028       0.1550       7023     18.09    <.0001
    sector          2.7995       0.4393        158      6.37    <.0001
    cses*sector    -1.3411       0.2338       7023     -5.74    <.0001

• The fixed effects estimates indicate that:
-- In public schools, students of higher CSES have higher math achievement scores than those of lower CSES (main effect of CSES).
-- Students of average CSES do better in private than public schools (main effect of SECTOR).
-- CSES is a less potent predictor of math achievement in private schools than public schools (interaction).
-- The achievement gap between private and public schools narrows as CSES increases (interaction).

• So we have obtained a lot of useful information from the model estimates, but there are some additional questions that we may like to answer. For instance, we do not yet know whether CSES is a significant predictor in private schools, or at what levels of CSES private schools convey a performance advantage.

Plotting and Probing Simple Slopes: Is the effect of CSES on math achievement significant in private schools?

• To better understand how to answer this question, we can write the prediction equation for the model by taking the expectation of the reduced form equation with respect to both individuals and groups:

$$\hat{y} = \hat{\gamma}_{00} + \hat{\gamma}_{01}\text{SECTOR} + \hat{\gamma}_{10}\text{CSES} + \hat{\gamma}_{11}\text{SECTOR}\cdot\text{CSES}$$

• We can then rearrange this equation as

$$\hat{y} = (\hat{\gamma}_{00} + \hat{\gamma}_{01}\text{SECTOR}) + (\hat{\gamma}_{10} + \hat{\gamma}_{11}\text{SECTOR})\text{CSES}$$

Written this way, it is easy to see that SECTOR modifies both the intercept and the slope of the regression of math achievement on CSES (the first and second bracketed terms above).
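As a quick numerical check of the rearranged prediction equation, the following minimal Python sketch plugs the fixed effects estimates from the SAS output into the two bracketed terms. The function names `simple_line` and `predict` are illustrative only and are not part of the original materials; SECTOR is assumed to be coded 0 for public and 1 for private schools, as in the text.

```python
# Fixed effects estimates copied from the "Solution for Fixed Effects" table.
G00 = 11.4106   # intercept
G10 = 2.8028    # cses
G01 = 2.7995    # sector
G11 = -1.3411   # cses*sector


def simple_line(sector):
    """Return (intercept, slope) of the regression of math achievement on CSES
    for a given sector (0 = public, 1 = private)."""
    return G00 + G01 * sector, G10 + G11 * sector


def predict(cses, sector):
    """Predicted math achievement from the rearranged prediction equation."""
    intercept, slope = simple_line(sector)
    return intercept + slope * cses


for name, sector in (("public", 0), ("private", 1)):
    intercept, slope = simple_line(sector)
    print(f"{name}: y_hat = {intercept:.2f} + {slope:.2f} * CSES")
```

This gives point estimates only; it says nothing yet about whether the compound coefficients differ significantly from zero.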
• From this, we can see that if SECTOR=0 (public schools) then the prediction equation simplifies to

$$\hat{y} = \hat{\gamma}_{00} + \hat{\gamma}_{10}\text{CSES} = 11.41 + 2.80\,\text{CSES}$$

We will refer to this equation as the simple regression line for math achievement on CSES within public schools. The values 11.41 and 2.80 will be referred to as the simple intercept and simple slope, respectively. These terms are borrowed from the classic text by Aiken & West (1991) on evaluating interactions in standard regression models.

• We can also see that if SECTOR=1 (private schools) then the prediction equation will be

$$\hat{y} = (\hat{\gamma}_{00} + \hat{\gamma}_{01}) + (\hat{\gamma}_{10} + \hat{\gamma}_{11})\text{CSES} = (11.41 + 2.80) + (2.80 - 1.34)\text{CSES} = 14.21 + 1.46\,\text{CSES}$$

This is the simple regression line of math achievement on CSES within private schools. Notice that the simple intercept and simple slope are compound coefficients for which we have no direct test of significance.

• To get a descriptive sense of the two simple regression lines, we can plot both equations. This is as far as most multilevel textbooks go in describing how to probe interactions.

[Figure: simple regression lines of math achievement (y-axis) on CSES (x-axis) for private and public schools]

• We would like to know, however, whether the slope of the simple regression line for private schools is significantly different from zero, and for that we will need the standard error of the simple slope.

• Analytically, this can be determined to be a simple function of the asymptotic variances and covariances of the fixed effects estimates (see references for further detail):

$$SE(\hat{\gamma}_{10} + \hat{\gamma}_{11}) = \sqrt{VAR(\hat{\gamma}_{10}) + 2\,COV(\hat{\gamma}_{10}, \hat{\gamma}_{11}) + VAR(\hat{\gamma}_{11})}$$

• The asymptotic covariance matrix of the fixed effects is routinely computed by SAS PROC MIXED (and other mixed modeling software) and is used to calculate the standard errors of the fixed effects, but it is not routinely output. To obtain it, you must add the option covb to the model statement:

    proc mixed data=hsb noclprint covtest method=reml;
      class school;
      model mathach=cses sector cses*sector/ddfm=bw solution notest covb;
      random intercept cses/sub=school type=un;
    run;

    Covariance Matrix for Fixed Effects
    Row   Effect           Col1       Col2       Col3       Col4
    1     Intercept      0.08575    0.01191   -0.08575   -0.01191
    2     cses           0.01191    0.02401   -0.01191   -0.02401
    3     sector        -0.08575   -0.01191    0.1930     0.02715
    4     cses*sector   -0.01191   -0.02401    0.02715    0.05465

• We now have all of the information we need to compute and test the simple slope of CSES within private schools.

• Specifically:

$$\hat{\gamma}_{10} + \hat{\gamma}_{11} = 2.8028 - 1.3411 = 1.4617$$
$$SE(\hat{\gamma}_{10} + \hat{\gamma}_{11}) = \sqrt{.02401 + 2(-.02401) + .05465} = .175$$
$$t = \frac{\hat{\gamma}_{10} + \hat{\gamma}_{11}}{SE(\hat{\gamma}_{10} + \hat{\gamma}_{11})} = \frac{1.4617}{.175} = 8.35$$

The t-test has the same df as the t-test of the CSES effect in public schools, namely 7023, so a t-value of 8.35 is highly significant. In other words, CSES continues to have a significantly positive effect on math achievement in private schools, even though the magnitude of this effect is diminished relative to public schools.

• This process is a bit laborious, however, and can be prone to rounding errors. Fortunately, SAS will do the computations for us by way of the estimate statement:

    proc mixed data=hsb noclprint covtest method=reml;
      class school;
      model mathach=cses sector cses*sector/ddfm=bw solution notest covb;
      random intercept cses/sub=school type=un;
      estimate 'simple intercept for private' intercept 1 sector 1;
      estimate 'simple slope for private' cses 1 cses*sector 1;
    run;

• In general, the estimate statement can be used to calculate and test any weighted linear combination of fixed effects estimates from the model. Each fixed effect is represented by the corresponding predictor in the model statement.
-- So the command estimate 'simple intercept for private' intercept 1 sector 1; tells SAS to compute $1 \cdot \hat{\gamma}_{00} + 1 \cdot \hat{\gamma}_{01}$, a unit-weighted linear combination of the fixed intercept and the SECTOR effect (providing the simple intercept for private schools), and to perform a significance test of the resulting compound estimate.
-- Similarly, the command estimate 'simple slope for private' cses 1 cses*sector 1; tells SAS to compute $1 \cdot \hat{\gamma}_{10} + 1 \cdot \hat{\gamma}_{11}$, a unit-weighted linear combination of the fixed CSES main effect and the CSES*SECTOR interaction (providing the simple slope for private schools), and to perform a significance test of the resulting compound estimate.

    Estimates
    Label                          Estimate   Standard Error     DF   t Value   Pr > |t|
    simple intercept for private    14.2102       0.3274        158     43.40    <.0001
    simple slope for private         1.4617       0.1750       7023      8.35    <.0001

Regions of Significance: At what levels of CSES is the achievement gap between private and public schools significant?

• This question examines the interaction from the other side. Here, CSES is viewed as the moderator of the expected difference in performance between students of public and private schools.

• To begin to answer this question we will first rewrite the prediction equation to highlight that the simple regression of y on SECTOR varies as a function of CSES:

$$\hat{y} = \hat{\gamma}_{00} + \hat{\gamma}_{01}\text{SECTOR} + \hat{\gamma}_{10}\text{CSES} + \hat{\gamma}_{11}\text{SECTOR}\cdot\text{CSES}$$
$$\hat{y} = (\hat{\gamma}_{00} + \hat{\gamma}_{10}\text{CSES}) + (\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES})\text{SECTOR}$$

• The SECTOR effect is then the weighted linear combination $\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}$, where the first coefficient is unit weighted and the second coefficient is weighted by a particular value of CSES.

• We could go about trying to answer this new question just like the last. That is, we could select specific values of CSES at which to evaluate the SECTOR effect.

• When probing interactions involving continuous predictors, a common suggestion is to use M ± SD as high and low values.

• For CSES, SD=.66 and M=0 (since it was centered).
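Any such weighted linear combination, together with its standard error and t-value, follows from the fixed effects estimates and their asymptotic covariance matrix. The Python sketch below illustrates that arithmetic using the rounded values printed in the SAS output; the function name `estimate` and the ordering of the weights are assumptions made for this illustration, and small rounding differences from SAS are to be expected.

```python
import math

# Order of effects: intercept, cses, sector, cses*sector
ESTIMATES = [11.4106, 2.8028, 2.7995, -1.3411]

# Asymptotic covariance matrix of the fixed effects (covb output).
COVB = [
    [0.08575, 0.01191, -0.08575, -0.01191],
    [0.01191, 0.02401, -0.01191, -0.02401],
    [-0.08575, -0.01191, 0.1930, 0.02715],
    [-0.01191, -0.02401, 0.02715, 0.05465],
]


def estimate(weights):
    """Return (estimate, standard error, t) for a weighted linear
    combination of the fixed effects."""
    est = sum(w * g for w, g in zip(weights, ESTIMATES))
    # Variance of the combination is the quadratic form w' * COVB * w.
    var = sum(
        weights[i] * COVB[i][j] * weights[j]
        for i in range(len(weights))
        for j in range(len(weights))
    )
    se = math.sqrt(var)
    return est, se, est / se


# Simple slope of CSES in private schools: 1*cses + 1*cses*sector.
print(estimate([0, 1, 0, 1]))
# SECTOR effect one SD above and one SD below the mean of CSES.
print(estimate([0, 0, 1, 0.66]))
print(estimate([0, 0, 1, -0.66]))
```

The weights play the same role as the coefficients listed after each effect name in the SAS estimate statement.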
• Setting CSES at .66 and -.66, we see that the linear combinations we wish to estimate are $1 \cdot \hat{\gamma}_{01} + .66 \cdot \hat{\gamma}_{11}$ and $1 \cdot \hat{\gamma}_{01} - .66 \cdot \hat{\gamma}_{11}$.

• We can then use the estimate statement again to obtain tests of the SECTOR effect at high and low levels of CSES (proc means supplies the mean and SD of CSES):

    proc means data=hsb;
      var CSES;
    run;

    proc mixed data=hsb noclprint covtest method=reml;
      class school;
      model mathach=cses sector cses*sector/ddfm=bw solution notest;
      random intercept cses/sub=school type=un;
      estimate 'sector effect at high CSES' sector 1 cses*sector .66;
      estimate 'sector effect at low CSES' sector 1 cses*sector -.66;
    run;

       N         Mean       Std Dev       Minimum       Maximum
    7185   -0.0059951     0.6605881    -3.6570000     2.8500000

    Estimates
    Label                        Estimate   Standard Error     DF   t Value   Pr > |t|
    sector effect at high CSES     1.9144       0.5026       7023      3.81    0.0001
    sector effect at low CSES      3.6847       0.4254       7023      8.66    <.0001

• The results indicate that the SECTOR effect is significant at both high and low CSES, that is, at the two locations marked in the plot.

[Figure: simple regression lines of math achievement on CSES for private and public schools, with the points at CSES = -.66 and .66 marked]

• But it seems a bit arbitrary to evaluate this difference at just these two points. Of course, we could select lots of other values of CSES at which to evaluate the SECTOR effect, but this would be ad hoc and tedious.

• What we really want to know is the range of values of CSES for which the SECTOR effect is significant and the range for which it is not.

• To answer this question, we will return to our formula for the t-test of the simple effect of SECTOR:

$$t = \frac{\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}}{SE(\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES})}$$

Rather than selecting specific values of CSES to solve for t, we will instead reverse the unknown in this equation and solve for the value of CSES that returns the critical value of t. Given the high df of the present model, the critical value will be $t_{crit} = \pm 1.96$ for a 2-tailed t-test. Inserting this value we have

$$\pm t_{crit} = \frac{\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}}{SE(\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES})}$$

where CSES now denotes the specific value(s) of CSES at which $t = \pm 1.96$.

• We can also expand the SE expression in terms of the variances and covariances of the fixed effect estimates in the linear combination so that

$$\pm t_{crit} = \frac{\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}}{\sqrt{VAR(\hat{\gamma}_{01}) + 2\,\text{CSES}\,COV(\hat{\gamma}_{01}, \hat{\gamma}_{11}) + \text{CSES}^2\,VAR(\hat{\gamma}_{11})}}$$

• Some straightforward algebraic manipulation of this expression (multiplying through by the denominator, squaring both sides, and collecting terms) shows that it can be rewritten as a simple quadratic function:

$$a\,\text{CSES}^2 + b\,\text{CSES} + c = 0$$

where

$$a = t_{crit}^2\,VAR(\hat{\gamma}_{11}) - \hat{\gamma}_{11}^2$$
$$b = 2\left(t_{crit}^2\,COV(\hat{\gamma}_{01}, \hat{\gamma}_{11}) - \hat{\gamma}_{01}\hat{\gamma}_{11}\right)$$
$$c = t_{crit}^2\,VAR(\hat{\gamma}_{01}) - \hat{\gamma}_{01}^2$$

• Given the quadratic form of this expression, we can plug in our estimated values for the fixed effects and their asymptotic variances and covariances to solve for a, b, and c, and then use the quadratic formula:

$$\text{CSES} = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$$

• The quadratic formula will return two roots, or values of CSES that satisfy the quadratic equation $a\,\text{CSES}^2 + b\,\text{CSES} + c = 0$. In the present case, these values are 1.23 and 3.63.

• This means that:
-- Students of private schools perform significantly better, on average, than students of public schools for values of CSES < 1.23 (about 1.86 SDs above the mean).
-- The difference in math achievement scores is nonsignificant when 1.23 < CSES < 3.63.
-- Students of public schools significantly outperform students of private schools when CSES > 3.63. However, given that there are no CSES scores in the sample greater than 2.85, we should not ascribe much importance to this extrapolation of the model results.

[Figure: simple regression lines of math achievement on CSES for private and public schools, with the region of significance (p < .05) and the nonsignificant (ns) region marked]

• Again, there is a simpler way to compute the region of significance. Although SAS has no built-in functions for doing this, there is a web-based calculator at http://www.unc.edu/~preacher/interact/ that will produce the plots when you input the relevant model estimates.

• The calculators are described for three different cases of moderation. Our particular situation doesn’t fall neatly into any one of the cases, but the computations are identical.

• For purposes of using the calculator, we will use the Case 1 calculator and label SECTOR as x1 and CSES as x2. I’ve also relabeled the effects in the SAS output below to be consistent with the calculator (these labels are not quite the same as in our equations above).

• Recall that our model estimates and their asymptotic covariance matrix are:

    Solution for Fixed Effects
    Effect        Label                 Estimate   Standard Error     DF   t Value   Pr > |t|
    Intercept     $\hat{\gamma}_{00}$    11.4106       0.2928        158     38.97    <.0001
    cses          $\hat{\gamma}_{20}$     2.8028       0.1550       7023     18.09    <.0001
    sector        $\hat{\gamma}_{10}$     2.7995       0.4393        158      6.37    <.0001
    cses*sector   $\hat{\gamma}_{30}$    -1.3411       0.2338       7023     -5.74    <.0001

    Covariance Matrix for Fixed Effects
    Row   Effect        Label                 Col1 ($\hat{\gamma}_{00}$)   Col2 ($\hat{\gamma}_{20}$)   Col3 ($\hat{\gamma}_{10}$)   Col4 ($\hat{\gamma}_{30}$)
    1     Intercept     $\hat{\gamma}_{00}$          0.08575                     0.01191                    -0.08575                    -0.01191
    2     cses          $\hat{\gamma}_{20}$          0.01191                     0.02401                    -0.01191                    -0.02401
    3     sector        $\hat{\gamma}_{10}$         -0.08575                    -0.01191                     0.1930                      0.02715
    4     cses*sector   $\hat{\gamma}_{30}$         -0.01191                    -0.02401                     0.02715                     0.05465

• Inserting the appropriate estimates into the calculator (as shown) and pressing the calculate button produces the output in the lower window.

• Note that the region boundaries are calculated to be precisely the same values that we computed longhand.
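The longhand region-of-significance computation can also be sketched in a few lines of Python. This is an illustration of the quadratic described above, not the web calculator's own code; the function name `region_bounds` is hypothetical, and the sketch assumes the leading coefficient a is nonzero.

```python
import math


def region_bounds(g01, g11, var01, cov, var11, t_crit=1.96):
    """Solve a*CSES^2 + b*CSES + c = 0 for the values of the moderator at
    which the simple effect of SECTOR has |t| equal to t_crit.
    Returns the two roots in ascending order, or None if there are no real roots."""
    a = t_crit**2 * var11 - g11**2
    b = 2 * (t_crit**2 * cov - g01 * g11)
    c = t_crit**2 * var01 - g01**2
    disc = b**2 - 4 * a * c
    if disc < 0:
        return None
    roots = ((-b - math.sqrt(disc)) / (2 * a), (-b + math.sqrt(disc)) / (2 * a))
    return tuple(sorted(roots))


# Estimates and (co)variances for sector and cses*sector from the SAS output.
lower, upper = region_bounds(
    g01=2.7995, g11=-1.3411, var01=0.1930, cov=0.02715, var11=0.05465
)
print(f"boundaries: {lower:.2f} and {upper:.2f}")
```

With the rounded inputs above, the two boundaries agree with the values 1.23 and 3.63 reported in the text.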
• In addition, by putting in -.66 and .66 as conditional values for x2 (=CSES), I obtained the same simple effects of SECTOR at high and low values of CSES as SAS provided previously with the estimate statement.

• Advantages of this calculator are thus that it provides the regions of significance and tests of simple effects simultaneously, and that it can be used with mixed effects modeling software other than SAS.

• A last advantage is that it provides an interesting graphical supplement to the regions of significance: confidence bands for the effect of sector on math achievement. These bands provide additional information that can be useful for interpreting the interaction.

Confidence Bands: How precise is my estimate of the expected difference between students in private and public schools at different levels of CSES?

• Recall our earlier plot of the interaction effect and region of significance. We could produce a new plot by subtracting the predicted value for public from the predicted value for private. That is, rather than plot both simple regression lines, we would plot the difference between them.

[Figure: simple regression lines of math achievement on CSES for private and public schools, with the region of significance (p < .05) and the nonsignificant (ns) region marked]

• This new plot would show the expected mean difference in math achievement between a student in private versus public school at each level of CSES, or the simple effect of SECTOR as a function of CSES.

• We could also plot a 95% confidence interval around this mean difference at every level of CSES. Doing so produces confidence bands.
• The basic idea is to take the usual formula for a confidence interval, effect ± margin of error, and write it out in terms of our effect of interest (the simple effect of SECTOR, conditional on CSES), or

$$(\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}) \pm t_{crit}\,SE(\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES})$$
$$= (\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}) \pm t_{crit}\sqrt{VAR(\hat{\gamma}_{01}) + 2\,\text{CSES}\,COV(\hat{\gamma}_{01}, \hat{\gamma}_{11}) + \text{CSES}^2\,VAR(\hat{\gamma}_{11})}$$

• We then insert our model estimates and plot three functions of CSES:

Simple Effect Estimate: $\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES}$
Upper Bound on Effect: $\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES} + t_{crit}\sqrt{VAR(\hat{\gamma}_{01}) + 2\,\text{CSES}\,COV(\hat{\gamma}_{01}, \hat{\gamma}_{11}) + \text{CSES}^2\,VAR(\hat{\gamma}_{11})}$
Lower Bound on Effect: $\hat{\gamma}_{01} + \hat{\gamma}_{11}\text{CSES} - t_{crit}\sqrt{VAR(\hat{\gamma}_{01}) + 2\,\text{CSES}\,COV(\hat{\gamma}_{01}, \hat{\gamma}_{11}) + \text{CSES}^2\,VAR(\hat{\gamma}_{11})}$

• These confidence bands can also be produced using the web calculator. The bottom window of the web calculator, which I did not show you before, looks like this:

[Screenshot: bottom window of the web calculator, containing generated R code]

• This is automatically generated code for the statistical software package R. If you push the “Submit above to Rweb” button, this code will be submitted to an Rweb server to produce the desired plot of confidence bands.

[Figure: confidence bands for the simple effect of SECTOR as a function of CSES]

• Note that here the straight line in the middle is the simple effect of SECTOR at each level of CSES (or the expected mean difference in math achievement between students in private versus public schools). At CSES = -.66 and .66, we have the same simple effects that SAS output for us with the estimate statement.

• The curved outer lines are the confidence bands. These bands indicate that the precision of our estimate of the SECTOR effect is greatest at levels of CSES between around -1 and 0.

• The dotted vertical line demarcates the boundary of the region of significance. Beyond it lies the area of the plot where the confidence bands include the horizontal line indicating zero effect.

• The confidence bands plot thus conveys all of the information about the interaction that we gleaned from other procedures, plus additional information about precision.
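The three functions that make up the confidence bands can be tabulated directly. The Python sketch below is an illustration under stated assumptions, not the R code generated by the web calculator: it uses the rounded SAS estimates, a critical value of 1.96, and a hypothetical helper named `sector_band`.

```python
import math

# Estimates and asymptotic (co)variances for sector and cses*sector.
G01, G11 = 2.7995, -1.3411
VAR01, COV0111, VAR11 = 0.1930, 0.02715, 0.05465


def sector_band(cses, t_crit=1.96):
    """Return (lower bound, simple effect, upper bound) for the effect of
    SECTOR at a given value of CSES."""
    effect = G01 + G11 * cses
    se = math.sqrt(VAR01 + 2 * cses * COV0111 + cses**2 * VAR11)
    return effect - t_crit * se, effect, effect + t_crit * se


# Tabulate the band over a grid of CSES values spanning the observed range.
for step in range(-7, 6):
    cses = step * 0.5
    lower, effect, upper = sector_band(cses)
    print(f"CSES={cses:5.1f}  lower={lower:6.2f}  effect={effect:6.2f}  upper={upper:6.2f}")
```

Plotting the three columns against CSES would give the straight line and the two curved bounds described above; the interval is narrowest where the standard error is smallest.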
• Overall, by probing the CSES*SECTOR interaction further, we now have a much richer set of conclusions than before.
-- Although the effect of CSES is diminished in private schools relative to public schools, it is still significantly positive (test of simple slopes).
-- Students from lower-class to upper-middle-class socioeconomic brackets benefit from private schooling, whereas the most affluent students show little difference in math achievement as a function of private versus public schooling (regions of significance).
-- Our estimate of the effect of SECTOR is most certain between CSES of -1 and 0. This range runs from about 1.5 standard deviations below the mean up to the mean, indicating that we can most precisely identify the effect for students with low to moderate CSES (confidence bands).

Generalized Linear Mixed Models

• To this point we have considered outcome variables that could reasonably be regarded as continuous in nature. In many empirical applications, however, outcomes are binary, ordinal, nominal, or counts.

• Standard linear models are inappropriate for these limited dependent variables. In the single-level modeling case, multiple regression models have been extended to limited dependent variables through the generalized linear modeling framework. In mixed models, generalized linear mixed models accomplish the same thing, but with the difference that there are random effects in the model.

• Three key concepts in generalized linear models are
-- The response distribution. This is the conditional distribution assumed for the outcome variable.
-- The linear predictor. This is a linear combination of the independent variables that can theoretically range from -∞ to ∞. The linear predictor is typically denoted $\eta_i$.
-- The link function. The link function relates the linear predictor to the expected value of the outcome variable.
• In the case of the standard single-level linear regression model with fixed effects only, we have:

Response Distribution: $y_i \mid \eta_i \sim N(\mu_i, \sigma^2)$, with $E(y_i \mid \eta_i) = \mu_i$ and $V(y_i \mid \eta_i) = \sigma^2$
Link Function: $\eta_i = \mu_i$ (Identity Link)
Linear Predictor: $\eta_i = \beta_0 + \sum_{p=1}^{P} \beta_p x_{pi}$

• Given our familiarity with this model, it might be tempting to apply it even in cases where the outcome variable is really discrete. This is often a bad idea. For instance, let’s consider the consequences of estimating this model with, say, a dichotomous outcome:
-- First, because $\eta_i = \mu_i$ and $\eta_i$ theoretically ranges from -∞ to ∞, $E(y_i \mid \eta_i) = \mu_i$ can fall outside of the [0,1] interval. Obviously, we would prefer that these values be constrained to the [0,1] interval since, for a dichotomous outcome, they correspond to predicted probabilities that $y_i = 1$.
-- Second, notice that the variance function implied by the response distribution is $V(y_i \mid \eta_i) = \sigma^2$; that is, it is a constant and is not a function of the expected value. This is the assumption of homoscedasticity that we make with standard linear regression models. However, when response variables are not normally distributed, the variance will often be a function of the expected value, and hence this assumption will be violated.

• A more sensible single-level model for a binary outcome might be the following:

Response Distribution: $y_i \mid \eta_i \sim BER(\mu_i)$, with $E(y_i \mid \eta_i) = \mu_i$ and $V(y_i \mid \eta_i) = \mu_i(1 - \mu_i)$
Link Function: $\eta_i = \log\left(\frac{\mu_i}{1 - \mu_i}\right)$ (Logit or Log-Odds Link)
Linear Predictor: $\eta_i = \beta_0 + \sum_{p=1}^{P} \beta_p x_{pi}$

• Notice that three things have happened here:
-- First, the equation for the linear predictor is the same as it was before, and the linear predictor is still continuous and assumed to range from -∞ to ∞. This is a good thing because it allows us to use predictors that are also continuous and theoretically range from -∞ to ∞.
-- Second, $E(y_i \mid \eta_i) = \mu_i$ now falls in the [0,1] interval.
We can see this from the inverse of the link function:

$$\mu_i = \frac{1}{1 + \exp(-\eta_i)}$$

If $\eta_i = \infty$, then $\exp(-\eta_i) = 0$ and so $\mu_i = 1$. If $\eta_i = -\infty$, then $\exp(-\eta_i) = \infty$ and $\mu_i = 0$. Hence for any value of $\eta_i$ between -∞ and ∞ we obtain an expected value (predicted probability) in the [0,1] interval.
-- Third, the variance function implied by the Bernoulli response distribution is $V(y_i \mid \eta_i) = \mu_i(1 - \mu_i)$; that is, it is a direct function of the expected value. Thus, the heteroscedastic nature of the conditional response distribution is explicitly modeled.

• The ability to specify the response distribution and link function makes the generalized linear model very flexible. The linear predictor is always the same, regardless of these choices; that is, it is always a linear function of the predictors, hence the term ‘generalized linear model.’

• The model given above is a special variant of the generalized linear model known as the logistic regression model. This model can be extended to either ordinal or nominal variables with more than two response categories, which are other special cases of the generalized linear model. Other link functions can also be considered, such as the probit link or complementary log-log link, although the logit link is most commonly used in practice.

• Similarly, for count variables, the use of a log link function and a Poisson response distribution results in the special case of Poisson regression.

Generalized Linear Mixed Models

• Of course, what we ultimately want to do is extend this to a model involving both fixed and random effects.

• Let’s again consider the special case of a binary outcome variable. To make this a mixed model, we need only modify our model to the following:

Response Distribution: $y_{ij} \mid \eta_{ij} \sim BER(\mu_{ij})$, with $E(y_{ij} \mid \eta_{ij}) = \mu_{ij}$ and $V(y_{ij} \mid \eta_{ij}) = \mu_{ij}(1 - \mu_{ij})$
Link Function: $\eta_{ij} = \log\left(\frac{\mu_{ij}}{1 - \mu_{ij}}\right)$ (Logit or Log-Odds Link)
Linear Predictor: $\eta_{ij} = \beta_{0j} + \sum_{p=1}^{P} \beta_{pj} x_{pij}$
$\beta_{0j} = \gamma_{00} + \sum_{q=1}^{Q} \gamma_{0q} w_{qj} + u_{0j}$
$\beta_{pj} = \gamma_{p0} + \sum_{q=1}^{Q} \gamma_{pq} w_{qj} + u_{pj}$

• So the major change is to substitute our standard 2-level multilevel model expression for the single-level expression in the equation for the linear predictor. Here, x references the P Level 1 predictors and w references the Q Level 2 predictors. Notice also that the subscript j has been applied to all expressions to indicate that responses vary by individuals i within groups j.

• Normality of the random effects is typically assumed regardless of the response distribution of y.

• Because of the nonlinearity of the link function, PROC MIXED cannot be used to estimate the model. However, PROC GLIMMIX and PROC NLMIXED can do so.

Estimation Issues

• Unlike the linear mixed model, the likelihood function for generalized linear mixed models requires the evaluation of a relatively intractable integral. Given this, two general approaches have been adopted for fitting generalized linear mixed models: methods that approximate the model (implemented in PROC GLIMMIX) and methods that approximate the integral (implemented in PROC NLMIXED).

• Methods that approximate the model include Penalized Quasi-Likelihood (PQL) and Marginal Quasi-Likelihood (MQL). These methods essentially take the nonlinear generalized linear mixed model and “linearize” it. More specifically, a first-order Taylor series is used to make the model for y linear. Once the model is linearized, it can be fit by the same methods that PROC MIXED uses for linear mixed models.

• Advantages and disadvantages of PQL and MQL (PROC GLIMMIX):
-- Fast and flexible. Because the model is ultimately fit as a kind of linear mixed model, these methods of estimation will allow any of the same model specifications as linear mixed models.
-- Not always reliable. MQL has performed worse than PQL. Even PQL doesn’t always perform well, in part because the linearization of the model doesn’t always work well and it can be hard to know when that is.
Some research has shown that with large variance components, PQL produces quite biased estimates.

• Methods that approximate the integral include quadrature, adaptive quadrature, and a Laplace approximation. Quadrature-based methods numerically approximate the continuous integral by a series of either fixed (nonadaptive) or flexible (adaptive) discrete points. Adaptive quadrature takes longer, but may require fewer quadrature points and can be more accurate. The Laplace approximation is not available in SAS but is also accurate.

• Advantages and disadvantages of quadrature (PROC NLMIXED):
-- Accurate. Given sufficiently many quadrature points, adaptive and nonadaptive quadrature will produce highly accurate estimates and do not show the same bias as PQL and MQL.
-- Slow and inflexible. Many of the more complex modeling options available for linear models are not available for generalized linear mixed models fit with numerical integration methods. In addition, quadrature becomes less feasible as the dimension of integration increases, that is, as one expands beyond one or two random effects. Consider that with one random effect, you are integrating over one continuous dimension with a few discrete points, but with two random effects you have a 2-dimensional integral to approximate, and so on.
-- Sensitivity to start values. Quadrature can be sensitive to start values, whereas PQL and MQL are more robust.

• When fitting models without too many random effects, it is probably best to use integral approximation methods (PROC NLMIXED) when possible. The model approximation methods in PROC GLIMMIX should be used for models that are too complex for quadrature, or in initial analyses to generate start values for use in PROC NLMIXED.

• We will now consider an application of these methods in a multilevel logistic regression model.

Applications of multilevel logistic regression in PROC GLIMMIX and PROC NLMIXED

• This example comes from Snijders & Bosker (1999, pp. 291-292). The data were collected in the former East Germany to investigate changes in personal relations associated with the downfall of communism and German reunification. The primary question to be examined here is: what influences whether someone distrusts another person to whom he or she is related?

• The data records consist of the following:
-- Each of 402 participants (referred to as EGOs) was asked to nominate other persons to whom he/she is related (referred to as ALTERs). Since each ego nominates multiple alters, the data have the hierarchical structure of nominations nested within subject (alters nested within egos).
-- For each alter, the participant indicated whether or not the person was distrusted, a binary response (FOE) that will serve as the dependent variable for the analysis.
-- Of interest was whether distrust would be a function of membership in the communist party. The party membership of the ego was known and included as a dummy variable (PMEGO). Also dummy-coded was whether the alter’s position required membership in the communist party and/or political supervision (POLITIC).
-- The nature of the relationship between the ego and each alter was also specified: whether the alter is an acquaintance, colleague, superior, subordinate, or neighbor. These relations were coded via 4 dummy variables (COL, SUP, SUB, NEIGH), with acquaintance being the reference category.
    data beate;
      infile SB14;
      input ego alter foe politic pmego acq col sup sub neigh;
    run;

    proc print data=beate;
    run;

    Obs    ego   alter   foe   politic   pmego   acq   col   sup   sub   neigh
    1665   1294      7     0         0       0     0     1     0     0       0
    1666   1295      3     0         0       0     0     1     0     0       0
    1667   1295      5     1         0       0     0     1     0     0       0
    1668   1295     10     0         0       0     0     0     1     0       0
    1669   1295     12     0         0       0     0     1     0     0       0
    1670   1296      4     0         0       1     0     1     0     0       0
    1671   1296      6     0         0       1     0     1     0     0       0
    1672   1297      1     0         0       0     1     0     0     0       0
    1673   1297      3     0         0       0     0     1     0     0       0
    1674   1297      4     0         0       0     1     0     0     0       0
    <Snip>
    1677   1297     16     0         0
    1678   1297     17     0         0
    1679   1297     19     0         0
    1680   1298      2     0         0
    1681   1298      4     0         0
    1682   1299      2     0         0
    1683   1299     11     1         1

(For observations 1677-1683, the values of pmego through neigh cannot be recovered from the source layout and are left blank.)

• To begin with, we might simply want to ask whether there are individual differences in the probability of nominating an alter as distrusted. That is, do some individuals tend to distrust more people than other individuals?

• This simple model would be specified as follows, where i indexes alter and j indexes ego:

Response Distribution: $y_{ij} \mid \eta_{ij} \sim BER(\mu_{ij})$, with $E(y_{ij} \mid \eta_{ij}) = \mu_{ij}$ and $V(y_{ij} \mid \eta_{ij}) = \mu_{ij}(1 - \mu_{ij})$
Link Function: $\eta_{ij} = \log\left(\frac{\mu_{ij}}{1 - \mu_{ij}}\right)$ (Logit or Log-Odds Link)
Linear Predictor: $\eta_{ij} = \beta_{0j}$
$\beta_{0j} = \gamma_{00} + u_{0j}$, where $u_{0j} \sim N(0, \tau_{00})$

• This is, in effect, a random intercept model, except that the random intercepts in the equation for the linear predictor are nonlinearly transformed into predicted probabilities via the link function.

• Let us first estimate this model in PROC GLIMMIX using PQL estimation.
    *multilevel logistic regression with random intercept in GLIMMIX;
    proc glimmix data=beate noclprint;
      class ego;
      model foe(event="1")=/link=logit dist=binary ddfm=bw solution;
      random intercept/subject=ego;
    run;

    Model Information
    Data Set                      WORK.BEATE
    Response Variable             foe
    Response Distribution         Binary
    Link Function                 Logit
    Variance Function             Default
    Variance Matrix Blocked By    ego
    Estimation Technique          Residual PL
    Degrees of Freedom Method     Between-Within

    Number of Observations Read   1683
    Number of Observations Used   1683

    Response Profile
    Ordered Value   foe   Total Frequency
    1               0     1359
    2               1      324
    The GLIMMIX procedure is modeling the probability that foe='1'.

    Dimensions
    G-side Cov. Parameters        1
    Columns in X                  1
    Columns in Z per Subject      1
    Subjects (Blocks in V)      426
    Max Obs per Subject          14

    Convergence criterion (PCONV=1.11022E-8) satisfied.
    -2 Res Log Pseudo-Likelihood   7825.11

    Covariance Parameter Estimates
    Cov Parm    Subject   Estimate   Standard Error
    Intercept   ego         0.8058       0.1659

    Solutions for Fixed Effects
    Effect      Estimate   Standard Error    DF   t Value   Pr > |t|
    Intercept    -1.5044      0.08052       425    -18.68    <.0001

• Here we see that our estimate of the random intercept variance is .81. This is well over 2 times the standard error, so it is statistically significant. This tells us that some individuals appear to be more distrustful, or at least distrust more people, than others.

• Before we go too far in our interpretation of this estimate, however, we need to bear in mind that PQL is known to produce biased variance component estimates in some circumstances. Since this model can also be fit in PROC NLMIXED, we will want to compare the results from refitting the model using numerical integration methods.

• Unlike PROC GLIMMIX, PROC NLMIXED does not retain many of the statements from PROC MIXED. We must literally write out our model equations.
In some ways, this is actually easier, because we can write the model in Level 1 and Level 2 format rather than in reduced form.

    *multilevel logistic regression with random intercept in NLMIXED;
    proc nlmixed data=beate;
      parms g00=-1.5 t00=.8;                    *parameters of the model & start values;
      b0j = g00 + u0j;                          *model for random coefficients;
      logit = b0j;                              *model for linear predictor;
      p = exp(logit)/(1+exp(logit));            *inverse of link function;
      model foe~binary(p);                      *Bernoulli distribution;
      random u0j~normal(0,t00) subject=ego;     *random effects distribution;
    run;

    Specifications
    Data Set                              WORK.BEATE
    Dependent Variable                    foe
    Distribution for Dependent Variable   Binary
    Random Effects                        u0j
    Distribution for Random Effects       Normal
    Subject Variable                      ego
    Optimization Technique                Dual Quasi-Newton
    Integration Method                    Adaptive Gaussian Quadrature

    Dimensions
    Observations Used        1683
    Observations Not Used       0
    Total Observations       1683
    Subjects                  426
    Max Obs Per Subject        14
    Parameters                  2
    Quadrature Points          10

    NOTE: GCONV convergence criterion satisfied.

    Parameter Estimates
    Parameter  Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper  Gradient
    g00         -1.7861     0.1154  425   -15.47    <.0001   0.05  -2.0129  -1.5592  -0.00008
    t00          1.4183     0.3324  425     4.27    <.0001   0.05   0.7649   2.0717  -0.00002

• Notice that our estimates have changed. The variance of the random effect, in particular, has nearly doubled in magnitude, from .8 to 1.4. We should view the NLMIXED results as more accurate.

• Again, we can see from the model output that the estimated variance component for the random intercept is statistically significant, suggesting that individuals in the sample differ in how likely they are to nominate an alter as someone they distrust. Unfortunately, because of the heteroscedastic Level 1 variance, $V(y_{ij} \mid \mu_{ij}) = \mu_{ij}(1 - \mu_{ij})$, we cannot easily compute an ICC to gauge the magnitude of this effect.
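One informal way to get a feel for the size of the random intercept variance, short of an ICC, is to push the NLMIXED estimates back through the inverse logit. The sketch below is an illustration in Python rather than SAS; the function name `inv_logit` and the choice of egos one standard deviation below and above the mean are assumptions made for the example, and the numbers are copied from the Parameter Estimates table.

```python
import math

def inv_logit(eta):
    """Inverse of the logit link: maps a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))

# NLMIXED estimates from the random intercept model
g00 = -1.7861   # fixed intercept (log-odds for an ego with u0j = 0)
t00 = 1.4183    # variance of the random intercepts

sd_u = math.sqrt(t00)            # standard deviation of u0j on the logit scale

p_typical = inv_logit(g00)           # ego with u0j = 0
p_low = inv_logit(g00 - sd_u)        # ego one SD below the mean intercept
p_high = inv_logit(g00 + sd_u)       # ego one SD above the mean intercept

print(f"u0j = 0   : {p_typical:.3f}")
print(f"u0j = -1SD: {p_low:.3f}")
print(f"u0j = +1SD: {p_high:.3f}")
```

Under these estimates the predicted probability of distrusting an alter runs from roughly .05 to roughly .36 across egos within one standard deviation of the mean, around a value of about .14 for an ego with u0j = 0. Note that the latter is a conditional probability for that ego, not the population-average rate (324 of 1683 nominations, about .19, in the Response Profile).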
• We can, however, proceed to predict the random intercepts, for instance, on the basis of whether the ego is a member of the Communist Party. To do this, we would modify the model for the linear predictor to

Linear Predictor: $\eta_{ij} = \beta_{0j}$, $\beta_{0j} = \gamma_{00} + \gamma_{01}\mathrm{PMEGO} + u_{0j}$, where $u_{0j} \sim N(0, \tau_{00})$

    proc nlmixed data=beate qpoints=10;
      parms g00=-1.56 g01=.22 t00=.8;           *parameters of the model & start values;
      b0j = g00 + g01*pmego + u0j;              *model for random coefficients;
      logit = b0j;                              *model for linear predictor;
      p = exp(logit)/(1+exp(logit));            *inverse of link function;
      model foe~binary(p);                      *Bernoulli distribution;
      random u0j~normal(0,t00) subject=ego;     *random effects distribution;
    run;

    Parameter Estimates
    Parameter  Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper  Gradient
    g00         -1.8464     0.1287  425   -14.35    <.0001   0.05  -2.0993  -1.5935  0.000107
    g01          0.2520     0.2165  425     1.16    0.2450   0.05  -0.1735   0.6776  7.442E-6
    t00          1.3963     0.3295  425     4.24    <.0001   0.05   0.7485   2.0440  0.000021

• We now see that party membership is not a significant predictor of the random intercepts. The variance component associated with the random intercept is still of approximately the same magnitude as before.

• A further elaboration of this model would be to consider whether there is an effect of the political function of the alter on whether or not the alter is distrusted, and whether this effect differs for different egos.
To test this model, we would write

Linear Predictor: $\eta_{ij} = \beta_{0j} + \beta_{1j}\mathrm{POLITIC}$

$\beta_{0j} = \gamma_{00} + \gamma_{01}\mathrm{PMEGO} + u_{0j}$

$\beta_{1j} = \gamma_{10} + u_{1j}$

where $\begin{pmatrix} u_{0j} \\ u_{1j} \end{pmatrix} \sim N\left( \begin{pmatrix} 0 \\ 0 \end{pmatrix}, \begin{pmatrix} \tau_{00} & \\ \tau_{10} & \tau_{11} \end{pmatrix} \right)$

    proc nlmixed data=beate qpoints=10;
      parms g00=-1.59 g01=.21 g10=.29 t00=.86 t01=-.32 t11=.62;
      b0j = g00 + g01*pmego + u0j;
      b1j = g10 + u1j;
      logit = b0j + b1j*politic;
      p = exp(logit)/(1+exp(logit));
      model foe~binary(p);
      random u0j u1j~normal([0,0],[t00,t01,t11]) subject=ego;
    run;

    Parameter Estimates
    Parameter  Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper  Gradient
    g00         -1.9243     0.1401  424   -13.73    <.0001   0.05  -2.1998  -1.6489  0.004783
    g01          0.2818     0.2324  424     1.21    0.2261   0.05  -0.1751   0.7386  0.002029
    g10         -0.3643     0.8227  424    -0.44    0.6582   0.05  -1.9813   1.2528  0.000821
    t00          1.5847     0.3894  424     4.07    <.0001   0.05   0.8192   2.3501  -0.00038
    t01         -0.1134     1.0200  424    -0.11    0.9115   0.05  -2.1183   1.8914  0.000559
    t11          5.5669     5.6895  424     0.98    0.3284   0.05  -5.6162  16.7501  -0.00003

• From these results we see that the fixed effect of POLITIC, like that of PMEGO, is not significant. Nor is the variance of the random effect of POLITIC significant; its estimate has a very large standard error.

• Given the nonsignificance of the random effect of POLITIC, we would probably not try to predict this effect. For the sake of completeness, however, let us also consider a model with a predictor of the random slope:

Linear Predictor: $\eta_{ij} = \beta_{0j} + \beta_{1j}\mathrm{POLITIC}$

$\beta_{0j} = \gamma_{00} + \gamma_{01}\mathrm{PMEGO} + u_{0j}$

$\beta_{1j} = \gamma_{10} + \gamma_{11}\mathrm{PMEGO} + u_{1j}$

where $\begin{pmatrix} u_{0j} \\ u_{1j} \end{pmatrix} \sim N\left( \begin{pmatrix} 0 \\ 0 \end{pmatrix}, \begin{pmatrix} \tau_{00} & \\ \tau_{10} & \tau_{11} \end{pmatrix} \right)$

• Notice that if the Level 2 equations are substituted into the Level 1 equation, the prediction of random slopes results in a cross-level interaction between POLITIC and PMEGO.
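Writing out the substitution makes the interaction term explicit. The block below is a worked restatement of the model just given, in which $\gamma$, $\beta$ and $u$ correspond to the g, b and u names in the NLMIXED code:

```latex
\begin{align*}
\eta_{ij} &= \beta_{0j} + \beta_{1j}\,\mathrm{POLITIC} \\
          &= (\gamma_{00} + \gamma_{01}\,\mathrm{PMEGO} + u_{0j})
           + (\gamma_{10} + \gamma_{11}\,\mathrm{PMEGO} + u_{1j})\,\mathrm{POLITIC} \\
          &= \gamma_{00} + \gamma_{01}\,\mathrm{PMEGO} + \gamma_{10}\,\mathrm{POLITIC}
           + \gamma_{11}\,\mathrm{PMEGO}\times\mathrm{POLITIC}
           + u_{0j} + u_{1j}\,\mathrm{POLITIC}
\end{align*}
```

The coefficient $\gamma_{11}$ multiplies the product of the ego-level and alter-level predictors, so testing it is the test of the cross-level interaction, exactly as $\gamma_{11}$ multiplied SECTOR by CSES in the linear mixed model.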
    *multilevel logistic regression with random intercept and slope & cross-level interaction;
    proc nlmixed data=beate;
      parms g00=-1.61 g01=.29 g10=.48 g11=-.76 t00=.86 t01=-.31 t11=.59;
      b0j = g00 + g01*pmego + u0j;
      b1j = g10 + g11*pmego + u1j;
      logit = b0j + b1j*politic;
      p = exp(logit)/(1+exp(logit));
      model foe~binary(p);
      random u0j u1j~normal([0,0],[t00,t01,t11]) subject=ego;
    run;

    Parameter Estimates
    Parameter  Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper  Gradient
    g00         -1.9379     0.1416  424   -13.69    <.0001   0.05  -2.2161  -1.6596  0.001222
    g01          0.3340     0.2319  424     1.44    0.1505   0.05  -0.1218   0.7898  0.000139
    g10         0.02527     0.7397  424     0.03    0.9728   0.05  -1.4287   1.4792  0.000312
    g11         -1.1371     0.9154  424    -1.24    0.2149   0.05  -2.9364   0.6623  0.000098
    t00          1.5874     0.3899  424     4.07    <.0001   0.05   0.8210   2.3538  -0.00009
    t01         -0.1773     0.9685  424    -0.18    0.8548   0.05  -2.0811   1.7264  0.000027
    t11          4.6992     4.9242  424     0.95    0.3405   0.05  -4.9798  14.3781  0.000082

• As we could have anticipated, the cross-level interaction is nonsignificant.

• Finally, we might want to evaluate whether the type of relationship between the ego and the alter influences whether the ego distrusts the alter. The model is now:

Linear Predictor: $\eta_{ij} = \beta_{0j} + \beta_{1j}\mathrm{POLITIC} + \beta_{2j}\mathrm{COL} + \beta_{3j}\mathrm{SUP} + \beta_{4j}\mathrm{SUB} + \beta_{5j}\mathrm{NEIGH}$

$\beta_{0j} = \gamma_{00} + \gamma_{01}\mathrm{PMEGO} + u_{0j}$

$\beta_{1j} = \gamma_{10} + \gamma_{11}\mathrm{PMEGO} + u_{1j}$

$\beta_{2j} = \gamma_{20}$, $\beta_{3j} = \gamma_{30}$, $\beta_{4j} = \gamma_{40}$, $\beta_{5j} = \gamma_{50}$

where $\begin{pmatrix} u_{0j} \\ u_{1j} \end{pmatrix} \sim N\left( \begin{pmatrix} 0 \\ 0 \end{pmatrix}, \begin{pmatrix} \tau_{00} & \\ \tau_{10} & \tau_{11} \end{pmatrix} \right)$

• Here the effects of the dummy-coded relationship variables (with acquaintance as the reference category) have been designated as fixed, despite the fact that they occur at Level 1. Not all Level 1 variables need have random effects. In this case, it would be quite difficult to estimate random effects for all of these variables. However, we must feel comfortable that whatever individual differences there are in the effects of these variables are negligible.

    proc nlmixed data=beate noad qpoints=10;
      parms g00=-2.5 g01=.21 g10=.44 g11=-.69 g20=1 g30=1.19 g40=0 g50=1.87
            t00=.87 t01=-.13 t11=.40;
      b0j = g00 + g01*pmego + u0j;
      b1j = g10 + g11*pmego + u1j;
      b2j = g20;
      b3j = g30;
      b4j = g40;
      b5j = g50;
      logit = b0j + b1j*politic + b2j*col + b3j*sup + b4j*sub + b5j*neigh;
      p = exp(logit)/(1+exp(logit));
      model foe~binary(p);
      random u0j u1j~normal([0,0],[t00,t01,t11]) subject=ego;
    run;

    Parameter Estimates
    Parameter  Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper  Gradient
    g00         -2.9623     0.2423  424   -12.22    <.0001   0.05  -3.4385  -2.4860    0.0012
    g01          0.2612     0.2382  424     1.10    0.2735   0.05  -0.2070   0.7294  0.001558
    g10         -0.1470     0.8018  424    -0.18    0.8546   0.05  -1.7229   1.4289  0.002376
    g11         -1.0978     0.9913  424    -1.11    0.2688   0.05  -3.0463   0.8507  0.003922
    g20          1.1871     0.2335  424     5.08    <.0001   0.05   0.7282   1.6461  0.001052
    g30          1.3328     0.2551  424     5.22    <.0001   0.05   0.8313   1.8343  0.001329
    g40         -0.2103     0.7417  424    -0.28    0.7769   0.05  -1.6683   1.2476  0.000825
    g50          2.2970     0.3587  424     6.40    <.0001   0.05   1.5920   3.0021  -0.00145
    t00          1.6638     0.4158  424     4.00    <.0001   0.05   0.8464   2.4811  -0.00047
    t01          0.2625     1.1022  424     0.24    0.8119   0.05  -1.9040   2.4289  -0.00096
    t11          5.5386     5.7949  424     0.96    0.3397   0.05  -5.8516  16.9289  0.000731

• Several significant effects were identified for the relationship variables. Relative to acquaintances, colleagues and superiors are more likely to be distrusted and neighbors are especially likely to be distrusted. Subordinates are distrusted at about the same rate as acquaintances.

• Just as in regular logistic regression, aside from direction and relative magnitude, the coefficients associated with these predictors are rather difficult to interpret, as they give the change in the log-odds of distrusting the alter per unit change in the predictor. Exponentiating the coefficients gives the expected odds ratios.

• We can either perform the exponentiation by hand, or have PROC NLMIXED do it for us with the ESTIMATE statement.
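The by-hand route can be sketched as follows, in Python rather than SAS. The log-odds estimates and standard errors are copied from the Parameter Estimates table for the final model; the helper name `odds_ratio`, the first-order delta-method standard error exp(g)·SE(g), and the normal critical value 1.96 (in place of a t quantile on 424 df) are assumptions of this illustration, not output of the procedure.

```python
import math

# (log-odds estimate, standard error) for each relationship dummy,
# with acquaintance as the reference category
log_odds = {
    "col v. acq":   (1.1871, 0.2335),
    "sup v. acq":   (1.3328, 0.2551),
    "sub v. acq":   (-0.2103, 0.7417),
    "neigh v. acq": (2.2970, 0.3587),
}

def odds_ratio(g, se, z=1.96):
    """Return (odds ratio, delta-method SE, lower limit, upper limit).

    The limits are obtained by exponentiating the Wald limits on the
    log-odds scale, so they can never fall below zero.
    """
    or_hat = math.exp(g)
    se_or = or_hat * se              # first-order delta method
    lower = math.exp(g - z * se)
    upper = math.exp(g + z * se)
    return or_hat, se_or, lower, upper

results = {label: odds_ratio(g, se) for label, (g, se) in log_odds.items()}

for label, (or_hat, se_or, lower, upper) in results.items():
    print(f"OR {label:13s} {or_hat:7.4f}  SE {se_or:6.4f}  [{lower:6.3f}, {upper:7.3f}]")
```

Exponentiating the limits on the log-odds scale, as done here, is one common alternative to a symmetric interval built around the odds ratio itself; a symmetric interval can extend below zero when the standard error is large, which is not a possible value for an odds ratio.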
In PROC MIXED, ESTIMATE computes and tests linear combinations of parameter estimates; in NLMIXED it also computes and tests (by the delta method) nonlinear combinations of parameter estimates.

    proc nlmixed data=beate noad qpoints=10;
      parms g00=-2.5 g01=.21 g10=.44 g11=-.69 g20=1 g30=1.19 g40=0 g50=1.87
            t00=.87 t01=-.13 t11=.40;
      b0j = g00 + g01*pmego + u0j;
      b1j = g10 + g11*pmego + u1j;
      b2j = g20;
      b3j = g30;
      b4j = g40;
      b5j = g50;
      logit = b0j + b1j*politic + b2j*col + b3j*sup + b4j*sub + b5j*neigh;
      p = exp(logit)/(1+exp(logit));
      model foe~binary(p);
      random u0j u1j~normal([0,0],[t00,t01,t11]) subject=ego;
      *odds ratios relative to acquaintances;
      estimate "OR col v. acq" exp(g20);
      estimate "OR sup v. acq" exp(g30);
      estimate "OR sub v. acq" exp(g40);
      estimate "OR neigh v. acq" exp(g50);
    run;

    Additional Estimates
    Label             Estimate  Std Error   DF  t Value  Pr > |t|  Alpha    Lower    Upper
    OR col v. acq       3.2776     0.7653  424     4.28    <.0001   0.05   1.7733   4.7819
    OR sup v. acq       3.7918     0.9674  424     3.92    0.0001   0.05   1.8902   5.6933
    OR sub v. acq       0.8103     0.6010  424     1.35    0.1783   0.05  -0.3711   1.9917
    OR neigh v. acq     9.9445     3.5671  424     2.79    0.0055   0.05   2.9331  16.9559

• Here we see that the odds of distrusting a colleague are about 3 times the odds of distrusting an acquaintance, the odds of distrusting a superior are about 4 times as high, and the odds of distrusting a neighbor are almost 10 times as high!

• Although the strong result for neighbors may be initially puzzling, it is actually quite consistent with the fact that neighbors were actively encouraged to inform on one another.

• Before taking these interpretations much further, we should take heed of the note in the log file:

    NOTE: At least one element of the (projected) gradient is greater than 1e-3.

This note suggests that the model may not have fully converged. Adding more quadrature points increases the computing time but often helps to solve this problem.
Typically the estimates will change a little with more quadrature points, but the interpretations will remain more or less the same. As such, one may wish to use these provisional results as a guide to model building or trimming, and then re-estimate the final model with more quadrature points.

Recommended Readings

Aiken, L.S., & West, S.G. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage Publications.

Bauer, D.J., & Curran, P.J. (in press). Probing interactions in fixed and multilevel regression: Inferential and graphical techniques. Multivariate Behavioral Research.

Goldstein, H.I. (1995). Multilevel statistical models. Kendall's Library of Statistics 3. London: Arnold.

Hox, J. (2002). Multilevel analysis: Techniques and applications. Mahwah, NJ: Lawrence Erlbaum Associates.

Kreft, I., & de Leeuw, J. (1998). Introducing multilevel modeling. London: Sage.

McCulloch, C., & Searle, S. (2001). Generalized, linear, and mixed models. New York: John Wiley & Sons.

Raudenbush, S.W., & Bryk, A.S. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Thousand Oaks, CA: Sage.

Singer, J. (1998). Using SAS PROC MIXED to fit multilevel models, hierarchical models, and individual growth models. Journal of Educational and Behavioral Statistics, 24, 323-355.

Snijders, T.A.B., & Bosker, R.J. (1999). Multilevel analysis. London: Sage Publications.