DNA COMPUTING


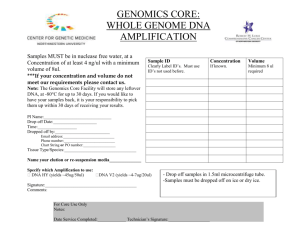

BVB-IEEE SAE-INDIA BVB Collegiate Club, Manthan ‘06

Samira T P (samiratp@gmail.com), Shruthi N (shruthi.nag@gmail.com)
SIT, Tumkur

Abstract

DNA computing is a nascent technology that seeks to capitalize on the enormous informational capacity of DNA. These biological molecules can store huge amounts of information and can perform operations similar to a computer's through the deployment of enzymes, biological catalysts that act like software to execute desired operations. The appeal of DNA computing lies in the fact that DNA molecules can store far more information than any existing conventional computer chip. It has been estimated that a gram of dried DNA can hold as much information as a trillion CDs. Moreover, in a biochemical reaction taking place in a tiny surface area, hundreds of trillions of DNA molecules can operate in concert, creating a parallel processing system that mimics the ability of the most powerful supercomputer.

The chips that drive conventional computers represent information as a series of electrical impulses using ones and zeros. Mathematical formulas are used to manipulate that binary code to arrive at an answer. DNA computing, on the other hand, depends on information represented as a pattern of molecules arranged on a strand of DNA. Certain enzymes are capable of reading that code, copying it, and manipulating it in predictable ways. The speed of computation is claimed to be such that a DNA computer can solve a problem (the travelling salesman problem with 5 lakh cities) in 5 seconds, compared with the 5 years taken by the world's fastest supercomputer (KRAY 4). This paper illustrates the computing capability of DNA molecules using the travelling salesman problem with 5 cities.

1. Introduction

Moore's law, stated by Gordon Moore, a co-founder of Intel, holds that the number of transistors on a microprocessor doubles roughly every 18 months to two years, and it has held so far.
However, many experts have predicted that Moore's law will soon reach its end because of limits on speed and miniaturization. This has created the need for a new material for building computing systems, and scientists have found one inside our own bodies: DNA. DNA (deoxyribonucleic acid) molecules, the material our genes are made of, have the potential to perform certain calculations many times faster than the world's most powerful human-built computers. DNA might one day be integrated into a computer chip to create a so-called biochip that will make computers even faster. DNA molecules have already been harnessed to solve complex mathematical problems. Although still in their infancy, DNA computers could be capable of storing billions of times more data than a personal computer.

DNA computing is an offspring of nanotechnology in which genetic material is used to create nano-computers that might take the place of silicon-based computers in the next decade. DNA, which genes use to record the intricate patterns of life, will be used in DNA computers to store data. DNA reactions proceed in a massively parallel fashion, which for suitable problems could make DNA computers faster than the fastest supercomputer: a highly complex problem that might take a supercomputer months could in principle be solved far more quickly. The main benefit of using DNA computers for such problems is that the different possible solutions are all created at once, because the parallelism of DNA allows trillions of operations to take place at the same time.

The storage capacity of DNA is tremendous. A single gram occupies a volume of about 1 cm³ and can hold as much information as a trillion compact discs, which is on the order of 700 exabytes at roughly 700 MB per disc.

DNA computers use a different methodology to compute solutions: they rely on molecular automata and preset reactions to arrive at the solution. Candidate solutions are formed by the links that the DNA strands generate among themselves.
The wrong solutions are eliminated and the correct one is retained. Proponents regard DNA computers as a possible future of computing, with the potential to surpass supercomputers on problems of this kind.

The success of the Adleman DNA computer proves that DNA can be used to solve complex mathematical problems. However, this early DNA computer is far from challenging silicon-based computers in terms of speed. The Adleman DNA computer created a group of possible answers very quickly, but it took days for Adleman to narrow down the possibilities. Another drawback of his DNA computer is that it requires human assistance. The goal of the DNA computing field is to create a device that can work independently of human involvement. Nevertheless, his work is significant for a number of reasons. It illustrates the possibilities of using DNA to solve a class of problems that is difficult or impossible to solve using traditional computing methods. It is an example of computation at a molecular level, potentially a size limit that may never be reached by the semiconductor industry. It demonstrates unique aspects of DNA as a data structure. It demonstrates that computing with DNA can work in a massively parallel fashion.

2. Fundamentals of DNA structure

DNA (deoxyribonucleic acid) is the biological molecule that carries the genetic information of living organisms. It has a double helix structure and determines the genetics of the organism.

The data density of DNA is impressive. Just as a string of binary data is encoded with ones and zeros, a strand of DNA is encoded with four bases, represented by the letters A, T, C, and G. The bases (also known as nucleotides) are spaced every 0.35 nanometers along the DNA molecule, giving DNA a remarkable data density of nearly 18 Mbytes per inch (about 145 Mbits, at two bits per base). In two dimensions, if you assume one base per square nanometer, the data density is over one million Gbits per square inch.
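The density figures can be checked with a few lines of arithmetic. The sketch below assumes two bits per base (four possible bases, so log2(4) = 2), which is an assumption of this example and is not stated in the text; the 0.35 nm spacing and the one-base-per-square-nanometer figure are taken from the paragraph above.

```python
# Back-of-envelope check of the DNA data density figures.
NM_PER_INCH = 2.54e7      # 1 inch = 2.54 cm = 2.54e7 nm
BASE_SPACING_NM = 0.35    # spacing between bases along the strand (from the text)
BITS_PER_BASE = 2         # assumption: 4 bases carry log2(4) = 2 bits each


def linear_density_bits_per_inch():
    """Bits stored along one inch of a single DNA strand."""
    return NM_PER_INCH / BASE_SPACING_NM * BITS_PER_BASE


def areal_density_bits_per_sq_inch(bases_per_nm2=1.0):
    """Bits per square inch for a given number of bases per square nanometer."""
    return NM_PER_INCH ** 2 * bases_per_nm2 * BITS_PER_BASE


linear = linear_density_bits_per_inch()
areal = areal_density_bits_per_sq_inch()
print(f"linear: {linear / 1e6:.0f} Mbits/inch = {linear / 8e6:.1f} Mbytes/inch")
print(f"areal:  {areal / 1e9:.2e} Gbits/square inch")
```

Under these assumptions the linear figure comes out at roughly 145 Mbits, or about 18 Mbytes, per inch, and the areal figure at a little over one million Gbits per square inch.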
By comparison, the data density of a typical high performance hard drive is about 7 Gbits per square inch, a factor of over 100,000 smaller.

We can be confident that we have all possible combinations, including the correct one, by using an excess of DNA encodings. We now have a test tube full of various lengths of DNA that encode possible routes between cities. What we want are routes that start with Mumbai and end with Kolkata. To accomplish this we can use a technique called Polymerase Chain Reaction (PCR), which allows you to produce many copies of a specific sequence of DNA. PCR is an iterative process that cycles through a series of copying events using an enzyme called polymerase. Polymerase will copy a section of single stranded DNA starting at the position of a primer, a short piece of DNA complementary to one end of the section of DNA that we are interested in. By selecting primers that flank the section of DNA we want to amplify, the polymerase preferentially amplifies the DNA between these primers, doubling the amount of DNA containing this sequence in each cycle. After many iterations of PCR, the DNA we are working on is amplified exponentially. So to selectively amplify the itineraries that start and stop with our cities of interest, we use primers that are complementary to Mumbai and Kolkata. What we end up with after PCR is a test tube full of double stranded DNA of various lengths, encoding itineraries that start with Mumbai and end with Kolkata.

STEP 2: Sort the DNA by length and select the DNA whose length corresponds to 5 cities.

Our test tube is now filled with DNA encoded itineraries that start with Mumbai and end with Kolkata, where the number of cities in between Mumbai and Kolkata varies. We now want to select those itineraries that are five cities long. To accomplish this we can use a technique called Gel Electrophoresis, which is a common procedure used to resolve the size of DNA.
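The effect of the PCR selection described above can be mimicked in software. The following is a minimal sketch under strong simplifying assumptions: the 6-base city codes are hypothetical (the paper's actual encodings are not shown here), a route is modelled as a plain concatenation of city codes, primer binding is reduced to a prefix and suffix match, and strands lacking both primer sites are treated as not amplified at all.

```python
# Idealized model of selective PCR amplification.
# Hypothetical 6-base codes for each city (illustrative, not from the paper).
CITY_CODES = {
    "Mumbai": "GCTACG",
    "Bangalore": "CTAGTA",
    "Delhi": "TCGTAC",
    "Chennai": "CTACGG",
    "Kolkata": "ATGCCG",
}


def encode(route):
    """Concatenate city codes into a single strand (simplified encoding)."""
    return "".join(CITY_CODES[city] for city in route)


def pcr_select(pool, start, end, cycles):
    """Return the pool after `cycles` rounds of idealized PCR.

    `pool` maps strand -> copy count. Strands flanked by both primer
    sites double every cycle; all other strands keep their copy count.
    """
    first, last = CITY_CODES[start], CITY_CODES[end]
    amplified = {}
    for strand, copies in pool.items():
        flanked = strand.startswith(first) and strand.endswith(last)
        amplified[strand] = copies * 2 ** cycles if flanked else copies
    return amplified


pool = {
    encode(["Mumbai", "Delhi", "Kolkata"]): 1,   # correct start and end
    encode(["Delhi", "Mumbai", "Kolkata"]): 1,   # wrong start
    encode(["Mumbai", "Chennai", "Delhi"]): 1,   # wrong end
}
after = pcr_select(pool, "Mumbai", "Kolkata", cycles=20)
for strand, copies in after.items():
    print(strand, copies)
```

After 20 idealized cycles the correctly flanked strand outnumbers each of the others by a factor of 2^20, which is why the unwanted itineraries can be neglected in the later steps.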
The basic principle behind Gel Electrophoresis is to force DNA through a gel matrix by using an electric field. DNA is a negatively charged molecule under most conditions, so if placed in an electric field it will be attracted to the positive potential. However, since the charge density of DNA (charge per length) is constant, long pieces of DNA move as fast as short pieces when suspended in a fluid. This is why you use a gel matrix. The gel is made up of a polymer that forms a meshwork of linked strands. The DNA is now forced to thread its way through the tiny spaces between these strands, which slows down the DNA at different rates depending on its length. What we typically end up with after running a gel is a series of DNA bands, with each band corresponding to a certain length. We can then simply cut out the band of interest to isolate DNA of a specific length. Since we know that each city is encoded with 6 base pairs of DNA, knowing the length of the itinerary gives us the number of cities. In this case we would isolate the DNA that is 30 base pairs long (5 cities times 6 base pairs).

So we now affinity purify five times, using a different city complement for each run. For example, for the first run we use Mumbai'-beads (where the ' indicates the complement strand) to fish out DNA sequences which contain the encoding for Mumbai (which should be all the DNA because of the earlier PCR selection), the next run we use Bangalore'-beads, and then finally Delhi'-beads, Chennai'-beads, and Kolkata'-beads. The order isn't important. If an itinerary is missing a city, then it will not be "fished out" during one of the runs and will be removed from the candidate pool. What we are left with are the itineraries that start in Mumbai, visit each city once, and end in Kolkata. This is exactly what we are looking for. If the answer exists we would retrieve it at this step.

One possible way to find the result would be to simply sequence the DNA strands. However, since we already have the sequence of the city encodings we can use an alternate method called graduated PCR. Here we do a series of PCR amplifications using the primer corresponding to Mumbai, with a different primer for each city in succession. By measuring the various lengths of DNA for each PCR product we can piece together the final sequence of cities in our itinerary.

Adleman's experiment solved a seven city problem, but there are two major shortcomings preventing a large scaling up of his computation. They are:
1. As the number of cities grows, the number of possible routes, and with it the amount of DNA required, increases exponentially. This limits the number of cities that can be handled.
2. Since these operations are not deterministic but stochastically driven, each step contains statistical errors, limiting the number of iterations we can do successively before the probability of producing an error becomes too great.

3. Applications of DNA Computing

The primary advantage offered by most proposed models of DNA based computation is the ability to handle millions of operations in parallel. The massively parallel processing capabilities of DNA computers may give them the potential to find tractable solutions to otherwise intractable problems, as well as potentially speeding up large, but otherwise solvable, polynomial time problems requiring relatively few operations.

Massively Parallel Processing

DNA computers derive their potential advantage over conventional computers from their ability to: perform millions of operations simultaneously; generate a complete set of potential solutions; conduct large parallel searches; and efficiently handle massive amounts of working memory.
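To give a feel for what "a complete set of potential solutions" means in practice, and for the exponential growth in DNA noted for Adleman's experiment, the sketch below counts the orderings of n cities with a fixed start and end, and estimates the mass of a single copy of each. The figure of 330 g/mol per base is an assumed average for single-stranded DNA and is not from the paper; the 6 bases per city is taken from the text. Real experiments need many copies of each strand plus all the partial routes, so this is a lower bound only.

```python
import math

AVOGADRO = 6.022e23               # molecules per mole
BASES_PER_CITY = 6                # from the text
GRAMS_PER_MOLE_PER_BASE = 330.0   # assumption: average mass of one ssDNA nucleotide


def candidate_routes(n_cities):
    """Orderings of n cities with the first and last city fixed: (n - 2)!."""
    return math.factorial(n_cities - 2)


def dna_mass_grams(n_cities):
    """Mass of ONE copy of every full-length candidate route (lower bound)."""
    bases_per_strand = n_cities * BASES_PER_CITY
    grams_per_strand = bases_per_strand * GRAMS_PER_MOLE_PER_BASE / AVOGADRO
    return candidate_routes(n_cities) * grams_per_strand


for n in (5, 7, 20, 50):
    print(f"{n:>2} cities: {candidate_routes(n):.3e} routes, "
          f"{dna_mass_grams(n):.3e} g of DNA")
```

For five cities the candidate pool is tiny, but the factorial growth means that a few dozen cities already call for more DNA than could ever be handled in a laboratory.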
A working DNA-based computer might hold an advantage over conventional computers when applied to decomposable problems, that is, problems that can be divided into separate, non-sequential tasks, because it can hold so much data in memory and conduct so many operations at once.

4. Solving NP-Complete and Hard Computational Problems

After Adleman's and Lipton's initial results, much of the work on DNA computing has continued to focus on solving NP-complete and other hard computational problems. NP-complete problems are those for which no polynomial time solution is known using conventional computer algorithms. That is, as the size of these problems increases, the time required to solve them with known algorithms increases at an exponential rate. These problems are also said to be intractable, but if a tractable solution can be found to one NP-complete problem, it can also be used to solve all other problems in the set. Adleman's original and subsequent works demonstrated a solution to the directed Hamiltonian path problem, which is similar to the more familiar traveling salesperson problem. It has since been shown that any NP-complete problem can be solved in a polynomial number of steps using similar techniques of DNA computation, although, as noted for Adleman's experiment, the amount of DNA required still grows exponentially.

The ability to obtain tractable solutions to NP-complete and other hard computational problems has many implications for real-life problems, particularly in business planning and management science. Many of the cost optimization problems faced by managers are NP-complete and are currently solved using heuristic methods and other approximations. These problems include scheduling, routing, and optimal use of raw materials, and they correspond to problems already solved, either theoretically or experimentally, using DNA computation.
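The generate-and-filter strategy behind Adleman's approach to the directed Hamiltonian path problem can be sketched in ordinary software. The flight map below is hypothetical and chosen only for illustration, and the exhaustive walk generation stands in for the excess of DNA that forms all candidate routes at once; it is a simulation of the logic, not of the chemistry.

```python
# Software analogue of Adleman-style generate-and-filter.
CITIES = ["Mumbai", "Bangalore", "Delhi", "Chennai", "Kolkata"]

# Hypothetical one-way flights; not taken from the paper.
FLIGHTS = [
    ("Mumbai", "Bangalore"), ("Bangalore", "Mumbai"),
    ("Mumbai", "Delhi"), ("Bangalore", "Delhi"),
    ("Bangalore", "Chennai"), ("Chennai", "Bangalore"),
    ("Delhi", "Chennai"), ("Delhi", "Kolkata"),
    ("Chennai", "Kolkata"),
]


def generate_walks(max_cities):
    """All walks along FLIGHTS with 1..max_cities cities (cities may repeat)."""
    walks = [[city] for city in CITIES]
    frontier = list(walks)
    for _ in range(max_cities - 1):
        frontier = [walk + [dst] for walk in frontier
                    for src, dst in FLIGHTS if src == walk[-1]]
        walks.extend(frontier)
    return walks


def select_ends(pool, start, end):
    """Analogue of PCR with start and end primers."""
    return [w for w in pool if w[0] == start and w[-1] == end]


def select_length(pool, n_cities):
    """Analogue of cutting one band out of the gel."""
    return [w for w in pool if len(w) == n_cities]


def select_all_cities(pool, cities):
    """Analogue of one affinity purification run per city."""
    for city in cities:
        pool = [w for w in pool if city in w]
    return pool


def find_itineraries(start="Mumbai", end="Kolkata", max_cities=6):
    pool = generate_walks(max_cities)
    pool = select_ends(pool, start, end)
    pool = select_length(pool, len(CITIES))
    return select_all_cities(pool, CITIES)


print(find_itineraries())
```

On this map the length filter still lets through a five-city walk that revisits Mumbai and skips Chennai; it is the per-city filtering that removes it, which mirrors the role of affinity purification in the laboratory procedure.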
Storage and Associative Memory

DNA might also be used to mirror, and even improve upon, the associative capabilities of the human brain. Baum proposed a method for making a large content addressable memory using DNA. A truly content addressable memory is one in which a data entry can be retrieved directly from storage by entering an input that resembles it more closely than it resembles any other entry in memory. This input may be very incomplete, with a number of wildcards, and in an associative memory it might even contain bits that do not actually occur within the closest match. This contrasts with a conventional computer memory, where the specific address of a word must be known to retrieve it. The use of this technique would instead replicate what is thought by many to be a key factor in human intelligence.

Baum's models for a DNA associative memory are quite simple, and build on the techniques used in other areas of DNA computation. Storing a word could be done by assigning a specific DNA subsequence to each component-value pair and building a fixed length word from these subsequences. To then retrieve the word closest to the input, one would introduce marked complementary subsequences into the storage medium and choose the molecule that has the most matches to this input. This technique could be further refined to approximate the brain more closely by appending to words only the attributes that an object has, rather than wasting space using '0's to represent attributes that an object does not have.

5. DNA2DNA Applications

Another area of DNA computation exists where conventional computers clearly have no current capacity to compete. This is the concept of DNA2DNA computations, as suggested in [12] and identified as a potential killer app. DNA2DNA computations involve the use of DNA computers to perform operations on unknown pieces of DNA without having to sequence them first.
This is achieved by recoding and amplifying unknown strands into a redundant form so that they can be operated on according to techniques similar to those used in the sticker model of DNA computation. Many of the errors inherent in other models of DNA computing can hopefully be ignored in DNA2DNA computing because there will be such a high number of original strands available for operations. The potential applications of recoding natural DNA into a computable form are many and include: DNA sequencing; DNA fingerprinting; DNA mutation detection or population screening; and other fundamental operations on DNA.

Bibliography

[1] www.aj.com
[2] www.advancements.org/dnacomputations
[3] Richard Moore, “Molecular Biology and its Technological Implications”
[4] Douglas MacLean, “DNA Sequencing, Mapping and Algorithms”
[5] Adleman, “DNA Computations and the first DNA Computer”