12Week4

advertisement

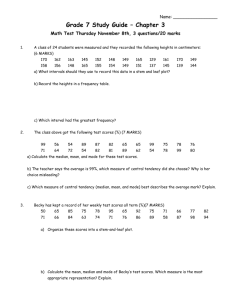

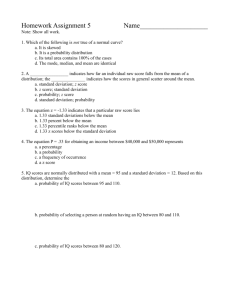

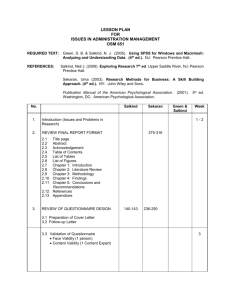

Salkind Ch. 2-4 Ch. 2: Means to an End Computing and Understanding Averages Focus: -Understanding measures of central tendency -Computing the mean for a set of scores -Computing the mode and the median for a set of scores -Selecting a measure of central tendency Notes: -after collecting data, information is organized into indexes to describe the data: “computing an average” -average: the one value that best represents an entire group of scores -central tendency (a.k.a. average): 3 types: mean, median, & mode -mean: the most common type of average computed, the sum of all the values in the group, divided by the number of values in that group. formula: xbar = ∑X n xbar: mean value for the scores ∑: sigma, is the summation sign telling you to add whatever follows it X: each individual score in the group of scores n: the size of the sample from which you are computing the mean Remember… -The mean is the “centermost point”: all the values on the side of the mean equal in weight to all the values on the other side of the mean. -The mean is very sensitive to extreme scores. An extreme score can pull the mean in either direction and make it less representative of the set of scores, making it less useful as a measure of “central tendency”. -weighted mean: where the same values occur more than once…to compute: -list all the values in the sample for which the mean is being computed (see table on pg. 23 Slakind) -list the frequency with which each value occurs -multiply the value by the frequency (again, see table, column 3) -sum all the values in the Value x frequency column (see table) - divide by the total frequency -median: (also an average) the midpoint in a set of scores: the point in which 50% of scores fall above, and 50% of scores fall below this midpoint. to calculate: -list the values in order, either from highest to lowest, or lowest to highest -find the middle score percentile points: ex.: “@ 75th percentile”, score is at or above 75% etc. -Q1 is 25th percentile, Q3 is 75th percentile, Q2 is the median skew: significantly distort (there can be extreme scores that throw off, or skew, the median in the set or distribution of scores) outliers: extreme scores that can skew the median mode: the value that occurs most frequently to compute… -list all the values in a distribution, but list each only once -tally the # of times that each value occurs -the value that occurs most often is the mode When to use what… - use “mode” when data are categorical and values can fit into only one class, i.e. hair color, pol. affiliation, etc. These categories are “mutually exclusive”. -use “median” when you have extreme scores and you don’t want to distort the average, i.e. income. -use “mean” when you have data that do not include extreme scores and are not categorical (ex.: test score, time to swim 50 yards) Ch. 3: Understanding Variability Focus: -why variability is valuable as a descriptive tool -how to compute the range, standard deviation, and variance -how the standard deviation and variance are alike and different Notes: variability: reflects how scores differ from one another: some variability ex.: 7, 6, 3, 3, 1 less variability (same mean) ex.: 3, 4, 4, 5, 4 range: (the most general measure of variability) general estimate of how far apart the scores are from one another. to compute… -subtract the lowest score in a distribution from the highest score in the distribution standard deviation: (s or SD) represents the average amount of variability in a set of scores, the average distance from the mean. formula: s = (square root all the following): ∑ (X – x bar)² n–1 *the formula is on pg. 37 Salkind *we use the “square root” to return to the same # of deviations we started with, because we squared them in the above equation *we use “n – 1” to force the standard deviation to be larger than it would be otherwise, its better to overestimate what the standard deviation of the population is (see pg. 40 Salkind for more…) mean deviation: (a.k.a. mean absolute deviation) is the sum of the absolute value of the deviations from the mean. This sum must equal ZERO or something was computed INCORRECTLY. variance: the standard deviation squared formula: s = ∑ (X – x bar)² n–1 *the formula is on pg. 42 Salkind Ch. 4: A Picture Really Is Worth a Thousand Words Focus: -why a picture is really worth a thousand words -how to create a histogram and a polygon -different types of charts and their uses -using Excel and SPSS to create charts Ten Ways to a Great Figure! 1. Minimize chart or graph junk, less is more 2. Plan out your chart before you start creating the final copy, use scratch/graph paper before the computer 3. Say what you mean and mean what you say—no more and no less, avoid clutter that may confuse reader 4. Label everything so nothing is left to the misunderstanding of the audience 5. A graph should communicate only ONE idea 6. Keep things balanced, center tiles and label your axes 7. Maintain the scale in a graph, in relationship to the horizontal and vertical axes. The ratio should be about three to four (3:4), so a three inch-wide graph should be about four inches tall. 8. Simple is best, convey ideas straightforwardly: save any distracting info for accompanying text. A graph should stand alone; the reader should understand the message. 9. Limit the # of words you use, too many words can be distracting from the visual message of the graph 10. A chart alone should convey what you want to say, if not, go back to plan and try it again frequency distribution: a method of tallying, and representing, how often certain scores occur. Scores are often grouped into class intervals, or ranges of numbers. class interval: a range of numbers. The first step in the creation of a frequency distribution is to define how large each interval will be. *See example, including chart on pgs. 50-51 Salkind histogram: visual representation of the frequency distribution where the frequencies are represented by bars. *See instructions on how to “create a histogram” on pgs. 52-54 Salkind midpoint: point that represents the entire class interval to the height representing the frequency of that class interval *See example on pgs. 53-54 Salkind The Tally-Ho Method *Tally chart, see ex. on pg. 55, fig. 4.3 on pg. 55 Salkind frequency polygon: a continuous line that represents the frequencies of scores within a class interval *See example on pg. 56 Salkind how to draw… -place a midpoint at the top of each bar or column in a histogram (see figure 4.2 on pg. 54 Salkind) -connect the lines: a frequency polygon! cumulative frequency distribution: a visual representation of the cumulative frequency of occurrences by class intervals: based on the same data as a frequency distribution, but with an added column (see ex. on pg. 57 Salkind) ogive: another name for a cumulative frequency polygon: if the distribution of the data is normal, then the “ogive” represents what is known as a “bell” curve or “normal distribution” How distributions can be different from one another: -average value -variability -skewness -kurtosis -average value: ex.: ave. for distribution C is more than the average for distribution B, which is more than the average for distribution A *See example on pgs. 59-60 Salkind -variability: distributions have same average value but differ in variability: the variability in distribution A is less than in distribution B, and in turn, less than that found in C *See example on pg. 60 Salkind -skewness: a measure of the lack of symmetry (lopsidedness) of a distribution, in other words, the “tail” of the distribution is longer than another *See example on pg. 61 Salkind -kurtosis: has to do with “flow” or how “peaked” a distribution appears: platykurtic: is relatively flat compared to a normal, or bell-shaped, distribution leptokurtic: a distribution that is relatively peaked compared to a normal, or bellshaped, distribution *See example on pg. 62 Salkind How skewed a distribution is… formula: Sk = 3(x bar - M) s Sk: Pearson’s measure of skewness (Ch. 5) x bar: is the mean M: is the median *More detail on pg. 63 Salkind Other ways to chart data: column chart: should be used when you want to compare the frequencies of different categories with one another. Categories are shown horizontally (x-axis), values are shown vertically (y-axis). ex.: pol. affiliation, sales of various products, etc. bar charts: same as column chart, except categories are vertical (y-axis), values are horizontal (x-axis) line charts: used when showing trends in data at equal intervals. ex.: showing trends over time pie charts: when showing proportions of items that make up a series of data points. Instructions on how to create histograms, bar graphs, line graphs, and pie charts in SPSS on a computer are on pgs. 67-73 Salkind. Shively Pg 74-78 Summary In this section, Shively basically explains what a causal relationship is. There is a difference, he explains, between a coincidence and a cause. When “the values of one variable produce the values of other variable, the relationship is a causal relationship.” (Shively 74) A coincidental example would be between class and party votes. One might argue that class level in society causes the way a person will vote. However, one could also argue that some ethnic groups vote for Democrats; they also happen to be in the working class. This would be a coincidence. “To qualify as a ‘causal’ relationship, the coincidence of two phenomena must include the idea that one of them produces the other.” (Shively 75) Basically, we would use causal relationships to understand why variables coincide. One of the biggest problems Shively says we encounter is finding a causal relationship and not being able to determine which variable causes which. “If we can establish that change in one of the variables precedes change in the other, then if we are sure that there is causation between the two, it is clear which variable must be the cause” (Shively 77 pulling a trigger=a shot, doesn’t work the other way) Either causation is not involved, or it is. This is the relationship we must determine. Summary of Salkind chapter 7: One example that was used throughout the chapter to describe is in 1998 study was conducted on whether incumbent members of congress had an extra advantage n gathering votes. He studied the voting margins of incumbents running for reelection, however the number of seats were uncontested because the incumbent was so safe. The only available seats that were up for reelection that he could study were those in districts where someone felt the need to challenge the incumbents. If he had counted the unchallenged incumbents as getting 100% of the vote he would have been overstating their safety. Random sampling: People use computer generated random numbers to identify which members of the population should join the sample. And if the sample is drawn from random we can be sure that it mirrors the relationship we are interested in. The reason that random sampling is so important in an observation is because the randomization ensures that any two groups that are chosen will be alike. If they were different it would interfere with the interpretation of the experiment. Quasi-random sampling: This is a type of sampling that has a systematic mechanism to it, it differs from just a random sample because it makes the sampling process easier. For example, it is very expensive and time consuming to go and interview people at every single house, so instead you sample every 6th hose. That way it is still a random sample but also easier for the researcher. Purposive sampling: In other experiments one would want to have a nonrandom sample that would serve for the purpose of the research. A purposive sample does not draw from the whole population; rather it draws subjects to maximize variation in the independent variable. People usually don’t use purposive sampling because usually when we draw samples it is meant to serve dual purposes, and new analysis might occur while sampling that we want to consider. But a purposive sample focuses on the independent variable and the single task we started with. You would not want to maximize your dependent variable because it would ruin the true relationship of the purposed experiment. When you maximize the independent variable it will mirror your true relationship purposed but f you maximize the dependent variable you will mess up the original experiment. When you maximize the dependent variable it does not parallel the research question in the same way. Censored data: The problem with censored data is that it is either the research is unavailable, either by choice or by the researchers choice. But the problem is that because of this people start to assume about what might have been. In the example of the incumbent congressmen Zaller estimated how many votes the unchallenged incumbents would have received. To avoid censored data you just have to broaden your rage of information. Main points: - Sampling larger samples - Problems with censored data -Selecting on the basis of your independent variable - The relationship observed among the cases you selected must mirror the true relationship among all potential cases. Causality Lecture (10/16) Summary This lecture relates directly to Shively’s book, pages 74-78. First, we talked about validity. Precision leads to accuracy, and we must trust our information, yet verify its trustworthiness. The Logic of testing validity” relates to the logic of hypothesis testing. In an example, we used equal opportunity of women. Procedural Due Process is when we set up laws so that everyone is treated equally. Substantive Due Process is when the outcome is so much at odds that you want to doubt the claim to fairness. Basically, a causal relationship is not just about the dependent and independent variables; it must have a story about how the dependent variable causes/produces a change in the independent variable. In order to find this relationship out, we must compare our explanation to other people’s explanations. We could also look for the temporal order, or the “pre-post” relationship. Descriptive Statistics Lecture (10/21) Summary We started out this lecture with an example about Regan. From February to April of 1981, Regna support jumped up. It could have been from his major budget plan, but we must think about any other events that might have been involved in that time period. We know he was shot in March of that year, so we would consider this a threat to the validity of the inference – “history.” Next we went into detail about the mean, median and mode. Mean is the average (add up all the numbers and divide by the total), median is the middle number, (or the average of the two middle numbers) and mode is the number that appears the most. Statistics summarize the variables and ask the question of “how good is that summary.” The main way to measure the numbers is by looking at the central tendencies: the mean and median are “middle” numbers, and the mode is the “typical” numbers. Summary is better if we have a cluster of information, rather than a widely dispersed set of information. To get the dispersion, find the difference between each value and the average. The deviances added up are 0, so this doesn’t really help. Once we get these differences, if we take the absolute value and add them all up, we get a positive number. Once again divided by the total numbers will give us the average absolute deviation. If we take the deviances and square each value, then add them up and divide by the total number, we get the variance. The square root of the variance is the standard deviation. I know this may be hard to follow but these terms will be useful for the test, so we might want to know how to solve for them. Coefficients are mean, median, mode, and now variance, standard deviation, and range. Next we went into actual graphs. A graph that is skewed to the right means the average is being pulled up by the outliers. This is the opposite with a graph skewed to the left. Summary 10/23 lecture: Reviewing central tendency, dispersion, and shape: Central tendency: measuring the middle, for example mean median and mode. Mode is the only one that doesn’t measure the middle it measures the most typical score. Dispersion: asks how spread out are the scores, and are scores spread out around the center. There are two ways to measure this one is variance, and standard deviation. The standard deviation is used to return to the right form of measure. Shape: shape checks the mode, which is the number of peaks that occur. And symmetry looks at if the shape is skewed or not. It also checks the steepness and the tightness of the scores. Box plots: A box plot shows the upper quartile which is the upper 75th percentile, the lower quartile which is the lower 25th percentile. It also shows the outliers and the mean/median or the central tendency. Box plots measures central tendency, shape, and dispersion all on one graph. Comparing means: The t-test is used for uncertainties, from measurement error. Is the difference big enough for us to worry about. The t-test equation is: = observed-expected Variation/ standard error Numerator: measures the difference Denominator: this is measuring the how much variation within scores. The larger the denominator the smaller the fraction. A variation in scores=uncertainty.