Lesson 7 - Math Forum


Lesson 7. Does God play the dice?

Objective:

Coin tossing and dice rolling are the familiar means of simulating events that
occur randomly. They are, however, not a practical means of generating a long
sequence of random events. Hence, a pseudo-random number generator is used to
quickly simulate several thousand coin tosses (Prog#7a), dice rolls
(Prog#7b – 7d), and random tree branchings (Prog#7e – 7g). For one die roll,
the chance of any side facing up is the same and equal to one out of six, so
the outcomes have a uniform distribution. But the sums of the faced-up pips of
two dice favor the median value 7 and obey a triangle distribution. Moreover,
the distribution of sums of three dice gets rounded in the middle and falls off
at the edges. As more and more dice are rolled, the distribution of their sums
approaches a bell-shaped curve. This is the Central Limit Theorem. The branch
scaling factor and spreading angle control the growth of the binary tree
introduced in Lesson 1. When we assign only the scaling factor randomly, the
binary tree grows into all different sizes. But when both the scaling factor
and the spreading angle are chosen randomly, there appear more middle-sized
binary trees and fewer of the extremely small and large ones, as suggested by
the Central Limit Theorem.


Scary words: Randomizer, pseudo-random number generator, random walk, event,

sample space, probability distribution, uniform distribution, bell-shaped

(normal) distribution, random variable, Central Limit Theorem.

Last update: 8 Jan 2003


Lesson

Randomizer

We encounter the laws of chance in our daily life; for example, tossing a coin
at the beginning of a football game, throwing dice for a board game, picking a
winning lottery ticket, and forecasting the probability of rain. Of these, coin
tossing is the simplest, with two possible outcomes of head (H) and tail (T).
It is a randomizer that generates either H or T in an unpredictable manner.
Given a fair coin, our intuition tells us the chances for H or T are the same
and hence equal to one in two, i.e., 1/2. After 10, 50, and 100 throws of a
coin, you record the numbers of H and T in table 1 and then compute the ratio
of H or T to the total number of throws. Are the ratios close to 1/2? Do they
approach 1/2 as the number of coin throws increases?

Table 1. Coin tossing

Throws   Outcome   Number of occurrences   Compute the ratio
10       H                                 /10 =
10       T                                 /10 =
50       H                                 /50 =
50       T                                 /50 =
100      H                                 /100 =
100      T                                 /100 =
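The experiment of table 1 can also be tried on a computer. The following is a minimal Python sketch, not the code of Prog#7a; the function name toss_ratios and the use of Python's built-in random module are assumptions made for illustration only.

```python
import random


def toss_ratios(n_throws, seed=None):
    """Toss a simulated fair coin n_throws times.

    Returns the pair (ratio of H, ratio of T).
    """
    rng = random.Random(seed)
    # Count a head whenever the pseudo-random number falls below 1/2.
    heads = sum(1 for _ in range(n_throws) if rng.random() < 0.5)
    tails = n_throws - heads
    return heads / n_throws, tails / n_throws


if __name__ == "__main__":
    # The first three rows mirror table 1; the last row is a longer run.
    for n in (10, 50, 100, 10_000):
        h, t = toss_ratios(n)
        print(f"{n:>6} throws: H ratio = {h:.3f}, T ratio = {t:.3f}")
```

Running it several times shows the ratios scattering widely for 10 throws and settling near 1/2 for the longer runs.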

It must be noted that a long-term ratio of 1/2 is only one of many tests that
a random coin toss must pass. For instance, {H, T, H, T, H, T,…} is obviously
not random, though the ratio for H or T turns out to be exactly 1/2. Now, a die
throw generates one out of six numbers {1, 2, 3, 4, 5, 6}. To test the
randomness of 60 die throws, you record in table 2 the number of occurrences of
each of (1, 2, 3, 4, 5, 6) and then compute the ratio of occurrences to the
total number of throws. In theory, for a true die the chance of any side facing
up is one out of six, i.e., 1/6. But are the ratios equal to 1/6 in table 2?

Table 2. 60 die throws

Pip   Number of occurrences   Compute the ratio
1                             /60 =
2                             /60 =
3                             /60 =
4                             /60 =
5                             /60 =
6                             /60 =

Although coin tossing and dice throwing are the familiar randomizers, it is
difficult to visualize how a winning ticket can be chosen randomly out of
millions of lottery tickets. Perhaps randomness is seriously lacking if one
imagines a large metal cage containing millions of lottery tickets being
tumbled, prior to pulling out a winning ticket. Moreover, the uncertainties in
weather forecasting are due to the random variations in geophysical flow
computations over several days, for which there are no simple randomizers to
simulate the meteorological fluctuations. For this reason, the real-world
randomizers are based on pseudo-random number generators. First of all, a
random number generator is a recipe to come up with a number that is not in an
obvious manner related to all the numbers that it has previously generated.
Since it is difficult to prove that such numbers are truly random, we simply
call them pseudo-random numbers.
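To make the idea of a "recipe" concrete, here is a minimal Python sketch of one classic pseudo-random recipe, the linear congruential generator. The lesson does not say which generator Prog#7a to Prog#7g use, so this choice, the class name, and the method names are assumptions for illustration; the constants are the widely published "Numerical Recipes" values.

```python
class LinearCongruentialGenerator:
    """Recipe: next = (A * current + C) mod M.

    Illustrative only; not claimed to be the generator used by the
    lesson's programs.
    """

    A = 1664525        # multiplier
    C = 1013904223     # increment
    M = 2 ** 32        # modulus

    def __init__(self, seed):
        self.state = seed % self.M

    def next_float(self):
        """Return the next pseudo-random number in the range [0, 1)."""
        self.state = (self.A * self.state + self.C) % self.M
        return self.state / self.M

    def coin(self):
        """Simulate one coin toss, 'H' or 'T'."""
        return "H" if self.next_float() < 0.5 else "T"

    def die(self):
        """Simulate one die throw, an integer from 1 to 6."""
        return int(self.next_float() * 6) + 1


if __name__ == "__main__":
    gen = LinearCongruentialGenerator(seed=2003)
    print("".join(gen.coin() for _ in range(20)))
    print([gen.die() for _ in range(20)])
```

Starting twice from the same seed reproduces exactly the same sequence, which is why such numbers are called pseudo-random rather than random.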

Random walk by coin tossing

Suppose that you are standing in the middle of a room facing north, as
indicated by the green head with a nose pointing to north. If head, you turn
right 90° and take a step forward, or turn left 90° and take a step forward if
tail, as denoted by the orange heads with H or T, respectively. Figure 1 shows
the path of {H, T, T, H, T} and you can extend it for the next 10 steps by coin
tossing. Did you return to the starting position in figure 1? How about after
20, 30 or more steps?

Figure 1. Five steps by {H, T, T, H, T}


This is called a random walk, and it simulates the zigzag path of a drunken
person. When a ray of sun pierces through a window, we sometimes can see the
dust particles floating in the air, and their path of movement resembles a
random walk. A pseudo-random number generator coded into Prog#7a simulates coin
tossing. To facilitate visualization, Prog#7a displays the first 25% of the
random walk path in red, the second 25% in blue, the third 25% in green, and
the last 25% in red. Also, the final location of the random walk is indicated
by a red marker. You record the final positions after 1,000, 2,000, 3,000,
4,000, and 5,000 steps of random walk. Are you getting closer to or moving away
from the starting point as the number of random steps increases?

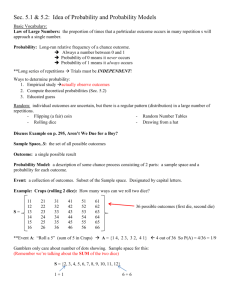
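The turning rule of the random walk (H: turn right 90° and step, T: turn left 90° and step) can be sketched in a few lines of Python. This is not the code of Prog#7a; the function names, the unit step length, and the coordinate convention (x to the east, y to the north) are assumptions made for illustration.

```python
import math
import random

# Headings in clockwise order: north, east, south, west, as (dx, dy) steps.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def walk(tosses):
    """Follow a sequence of 'H'/'T' tosses and return all visited positions."""
    x, y, heading = 0, 0, 0  # start at the origin, facing north
    path = [(x, y)]
    for toss in tosses:
        # H turns right (clockwise); T turns left (counter-clockwise).
        heading = (heading + (1 if toss == "H" else -1)) % 4
        dx, dy = HEADINGS[heading]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


def random_walk(n_steps, seed=None):
    """Generate n_steps coin tosses pseudo-randomly and walk them."""
    rng = random.Random(seed)
    return walk(rng.choice("HT") for _ in range(n_steps))


if __name__ == "__main__":
    for n in (1000, 2000, 3000, 4000, 5000):
        x, y = random_walk(n)[-1]
        print(f"{n} steps: final position ({x}, {y}), "
              f"distance from start {math.hypot(x, y):.1f}")
```

Calling walk("HTTHT") traces the five tosses {H, T, T, H, T} by hand-checkable steps, which is a convenient way to confirm the turning rule before trying thousands of steps.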

Uniform distribution

For one die roll, the chances for any one of the six sides to face up are the
same and equal to 1/6 ≈ 0.167. This is indicated in figure 2 by six bars of the
equal height 1/6. It is called the probability distribution, indicating how the
probabilities of each event are distributed over the sample space of outcomes
{1, 2, 3, 4, 5, 6}. It is a uniform distribution of equal probabilities. You
may also indicate in figure 2 the six ratios of table 2 by vertical bars for
{1, 2, 3, 4, 5, 6}. Do they obey a uniform probability distribution after 60
die throws?

Figure 2. One die roll

A pseudo-random number generator simulates the die roll in Prog#7b, so that you
can quickly generate thousands of die throws. For instance, beginning from 500
die throws, you can test for the equal probability of 1/6 by incrementing the
number of die rolls by 500 with Prog#7b.
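A test of this kind might look like the following Python sketch. It is not the code of Prog#7b; the function name die_ratios and the use of Python's random module are assumptions for illustration.

```python
import random
from collections import Counter


def die_ratios(n_throws, seed=None):
    """Throw a simulated die n_throws times.

    Returns a dictionary mapping each face (1 to 6) to its observed ratio.
    """
    rng = random.Random(seed)
    counts = Counter(rng.randint(1, 6) for _ in range(n_throws))
    return {face: counts[face] / n_throws for face in range(1, 7)}


if __name__ == "__main__":
    # Start at 500 throws and increase in steps of 500, as in the text.
    for n in range(500, 3001, 500):
        ratios = die_ratios(n)
        row = "  ".join(f"{ratios[face]:.3f}" for face in range(1, 7))
        print(f"{n:>5} throws: {row}   (1/6 = {1 / 6:.3f})")
```

Each printed row holds the six observed ratios, to be compared against the uniform value 1/6.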


Bell-shaped distribution

Two dice roll: We now roll two dice, one white and one blue, and add up the
pips of the faced-up sides. Writing each event as (white, blue), the smallest
sum ‘2’ is given by (1, 1) and the largest sum is 12 when (6, 6), so that
{2, 3, 4, …, 11, 12} is the sample space. But sum ‘3’ can result from two
events (1, 2) and (2, 1), and similarly (5, 6) and (6, 5) give sum ‘11.’ In
table 3, we list all possible events for the sums of {2, 3, 4, …, 11, 12} of a
two-dice roll.

Table 3. Sum of the facing-up pips of two dice roll

Pips   Events
2      (1, 1)
3      (1, 2), (2, 1)
4      (1, 3), (2, 2), (3, 1)
5      (1, 4), (2, 3), (3, 2), (4, 1)
6      (1, 5), (2, 4), (3, 3), (4, 2), (5, 1)
7      (1, 6), (2, 5), (3, 4), (4, 3), (5, 2), (6, 1)
8      (2, 6), (3, 5), (4, 4), (5, 3), (6, 2)
9      (3, 6), (4, 5), (5, 4), (6, 3)
10     (4, 6), (5, 5), (6, 4)
11     (5, 6), (6, 5)
12     (6, 6)

Since there are in all 36 events in table 3, the probabilities of sum ‘2’ and
‘12’ are 1/36, sum ‘3’ and ‘11’ are 2/36, sum ‘4’ and ‘10’ are 3/36, and so on,
as they appear in the bar graph of figure 3 over the sample space
{2, 3, 4, …, 11, 12}. Here comes a surprise. The uniform distribution
(figure 2) of one die roll has become a triangle distribution (figure 3) when
two dice are rolled and the faced-up pips are added up. That is, you are more
likely to get sum ‘7’ than any other sum in a two-dice throw. With Prog#7c you
can check how closely a triangle distribution is realized by increasing the
number of dice throws up to 5,000.
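The counting argument for two dice can be checked by listing all 36 events by machine. The Python sketch below does this with exact fractions; the function name sum_distribution is hypothetical, and the sketch enumerates events rather than simulating throws as Prog#7c does.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def sum_distribution(n_dice):
    """Exact distribution of the pip sum of n_dice fair dice.

    Every event (one face per die) is listed and counted once.
    """
    events = product(range(1, 7), repeat=n_dice)
    counts = Counter(sum(event) for event in events)
    total = 6 ** n_dice
    return {s: Fraction(c, total) for s, c in sorted(counts.items())}


if __name__ == "__main__":
    for pip_sum, prob in sum_distribution(2).items():
        # Draw a crude bar graph: one '#' per event out of 36.
        bar = "#" * (prob * 36).numerator
        print(f"{pip_sum:>2}  {str(prob):>5}  {bar}")
```

The bars rise by one event per step up to sum ‘7’ and then fall by one per step, which is the triangle shape. Note that Fraction prints in lowest terms, so 2/36 appears as 1/18.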

Figure 3. Two dice throw

Three dice roll: Let’s now roll three dice, colored white, blue, and red. The
smallest sum ‘3’ is given by (1, 1, 1) and the largest sum ‘18’ occurs when
(6, 6, 6). Hence, the sums of three faced-up pips have the sample space of
{3, 4, 5, …, 17, 18}. Similar to table 3, we can list the events of a three-dice
roll. For instance, (1, 1, 2) and (1, 2, 1) and (2, 1, 1) give sum ‘4.’
However, since it gets tedious to list all events, we summarize in table 4 the
number of events of three-dice rolls, the total of which is 216. Then, the
probabilities for sum ‘3’ and ‘18’ are 1/216, sum ‘4’ and ‘17’ are 3/216, and
finally sum ‘10’ and ‘11’ are 27/216 = 1/8, as shown in figure 4 by vertical
bars over the sample space of {3, 4, 5, …, 17, 18}. We notice that the
distribution of figure 4 is more rounded in the middle and falls off more
quickly at the low and high ends than the triangle distribution of figure 3. It
begins to look like what is commonly called the bell-shaped distribution curve.
You can also experiment with Prog#7d to see how closely the probability
distribution of figure 4 is observed as the number of dice throws becomes
large.

Table 4. Three dice roll

Pip sum     Number of events (for each sum)
3 or 18     1
4 or 17     3
5 or 16     6
6 or 15     10
7 or 14     15
8 or 13     21
9 or 12     25
10 or 11    27
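The entries of table 4 can be reproduced by enumerating all 216 events, which spares the tedious listing by hand. A minimal Python sketch follows; the function name three_dice_event_counts is hypothetical.

```python
from collections import Counter
from itertools import product


def three_dice_event_counts():
    """Count, for each pip sum, the (white, blue, red) events that give it."""
    faces = range(1, 7)
    return Counter(w + b + r for w, b, r in product(faces, repeat=3))


if __name__ == "__main__":
    counts = three_dice_event_counts()
    print("total number of events:", sum(counts.values()))
    # Sums s and 21 - s have the same number of events, as in table 4.
    for low in range(3, 11):
        high = 21 - low
        print(f"{low:>2} or {high:<2}  {counts[low]:>2} events each, "
              f"probability {counts[low]}/216")
```

The pairing of sum s with sum 21 - s reflects the symmetry of the distribution about its middle, between 10 and 11.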


Figure 4. Three dice roll

To sum up, the probability distributions of figures 2, 3, and 4 carry the
following message. Let us begin by defining a random variable, which varies
from one instance to another in an unpredictable way, like the outcome of a
coin toss or a die roll. Other examples are the measurement errors in an
experiment, wind speed and temperature variations in weather forecasting, daily
stock price fluctuations, etc. Although a single die roll obeys a uniform
distribution (figure 2), the sum of two such random variables follows a quite
different distribution of triangle shape (figure 3). Now, for the sum of three
random variables we begin to see a bell-shaped distribution (figure 4).
According to the Central Limit Theorem, the distribution of the sum approaches
a truly bell-shaped, or normal, distribution as the number of independent
random variables being added becomes very large.
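One way to watch this approach to the bell shape is to compute the exact distribution of the sum of n dice and compare it with the normal curve having the same mean and variance. The Python sketch below is an illustration under stated assumptions: the function names are hypothetical, and it uses the standard facts that one fair die has mean 3.5 and variance 35/12, so the sum of n independent dice has mean 3.5n and variance 35n/12.

```python
import math


def dice_sum_pmf(n_dice):
    """Exact probability of each pip sum for n_dice fair dice.

    Built one die at a time: each new die spreads the probability of
    every earlier sum equally over the six sums it can lead to.
    """
    pmf = {0: 1.0}
    for _ in range(n_dice):
        nxt = {}
        for s, p in pmf.items():
            for face in range(1, 7):
                nxt[s + face] = nxt.get(s + face, 0.0) + p / 6
        pmf = nxt
    return pmf


def normal_density(x, mean, var):
    """Height of the normal (bell-shaped) curve at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def max_gap(n_dice):
    """Largest gap between the exact bars and the matching normal curve."""
    mean, var = 3.5 * n_dice, n_dice * 35 / 12
    pmf = dice_sum_pmf(n_dice)
    return max(abs(p - normal_density(s, mean, var)) for s, p in pmf.items())


if __name__ == "__main__":
    for n in (1, 2, 3, 5, 10):
        print(f"{n:>2} dice: largest gap from the normal curve = {max_gap(n):.4f}")
```

The printed gap measures how far the bar graph for n dice still is from the bell curve, so it gives a single number to track as more dice are added.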

Binary tree

In Lesson 1, the growth of the binary tree depends on two parameters, the
scaling factor a and the spreading angle. As shown in figure 5, the main trunk
of, say, 1 meter gives rise to two shorter branches of 1/a meter for the first
branching, since a has a value in the range (1.2, 1.8). Also, the tree height
is controlled by the spreading angle; that is, the smaller the angle, the
taller the binary tree. Now, there are four branches of (1/a)(1/a) meter for
the second branching, eight branches of (1/a)(1/a)(1/a) meter for the third
branching, and so on. Here, we assign the values of a and the spreading angle
randomly in a given range.


Figure 5. A binary tree
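As a small worked example of the branch lengths for a fixed scaling factor, the following Python sketch tabulates the number of branches and the length of each branch at every stage. The function name branch_table and the sample value a = 1.5 are assumptions for illustration; the code of the lesson's programs is not shown in the text.

```python
def branch_table(a, n_stages, trunk=1.0):
    """List (stage, number of branches, branch length in meters).

    The scaling factor a is held fixed, so each new branch is 1/a times
    as long as the branch it grows from.
    """
    rows = []
    length = trunk
    for stage in range(1, n_stages + 1):
        length /= a  # shrink by 1/a at every branching
        rows.append((stage, 2 ** stage, length))
    return rows


if __name__ == "__main__":
    for stage, n_branches, length in branch_table(a=1.5, n_stages=5):
        print(f"branching {stage}: {n_branches:>2} branches "
              f"of {length:.3f} meter each")
```

Because the number of branches doubles while each length shrinks by 1/a, a value of a below 2 means the total length added at each stage keeps growing.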

One random variable: We first fix the spreading angle at 40°, but let a vary
randomly at each stage of branching. This is coded into Prog#7e by a
pseudo-random number generator, which picks a value for a randomly in the range
(1.2, 1.8). After running Prog#7e a dozen times or more, you record in table 5
the number of small, medium, and large binary trees that are observed. Be as
objective as possible, though no quantitative measures are provided here for
the classification of binary trees.

Table 5. Binary trees with random scaling factor

Size                     Small   Medium   Large
Number of observations

Two random variables: In addition to varying a randomly in the range
(1.2, 1.8), Prog#7f also assigns the spreading angle randomly in the range
(20°, 60°). Again, after running Prog#7f a dozen times or more, you record in
table 6 the number of small, medium, and large binary trees that are observed.

Table 6. Binary trees with random scaling factor and spreading angle

Size                     Small   Medium   Large
Number of observations

Comparing tables 5 and 6, you may notice that there are more or less the same
number of small, medium, and large binary trees in table 5, whereas more medium
binary trees are observed in table 6 than either the small or large ones. This
is because randomizing both a and the spreading angle thins out the small and
large binary trees, in the manner of the triangle distribution of figure 3.

Pythagoras tree (Modification #2)

The Pythagoras tree of modification #2 introduced in Lesson 3 has a motif
which involves three parameters, the branching ratio r and two angles, angle 1
and angle 2, as shown in figure 6. Here, we assign the three parameters
randomly at each stage of branching. That is, we choose r in the range
(0.3, 0.5) and both angles in the range (5°, 35°), but angle 1 is always
smaller than angle 2. All this has been coded into Prog#7g. It appears that
Prog#7g generates a more natural-looking tree than the original program
Prog#3f, because the extremely small and large variations are being suppressed
by a bell-shaped distribution like that of figure 4. This is a testament to the
Central Limit Theorem, favoring a median over the extremes.

Figure 6. Motif for Pythagoras tree (modification #2)
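The random choice of the three motif parameters might be sketched in Python as follows. The text does not say how Prog#7g makes angle 1 smaller than angle 2; drawing two angles and sorting them is an assumption made here, as are the function name and the use of Python's random module.

```python
import random


def sample_motif_parameters(rng):
    """Draw (r, angle_1, angle_2) for one stage of branching.

    r lies in the range (0.3, 0.5); both angles, in degrees, lie in the
    range (5, 35). Sorting the two draws makes angle_1 the smaller one
    (an assumed way of enforcing the ordering).
    """
    r = rng.uniform(0.3, 0.5)
    angle_1, angle_2 = sorted(rng.uniform(5.0, 35.0) for _ in range(2))
    return r, angle_1, angle_2


if __name__ == "__main__":
    rng = random.Random()
    for stage in range(1, 6):
        r, angle_1, angle_2 = sample_motif_parameters(rng)
        print(f"stage {stage}: r = {r:.3f}, "
              f"angle 1 = {angle_1:.1f} deg, angle 2 = {angle_2:.1f} deg")
```

A different way of enforcing the ordering, such as drawing angle 2 first and then angle 1 below it, would give the angles a different distribution, so the choice matters if the results are to be compared with Prog#7g.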
