Basic Probability

advertisement

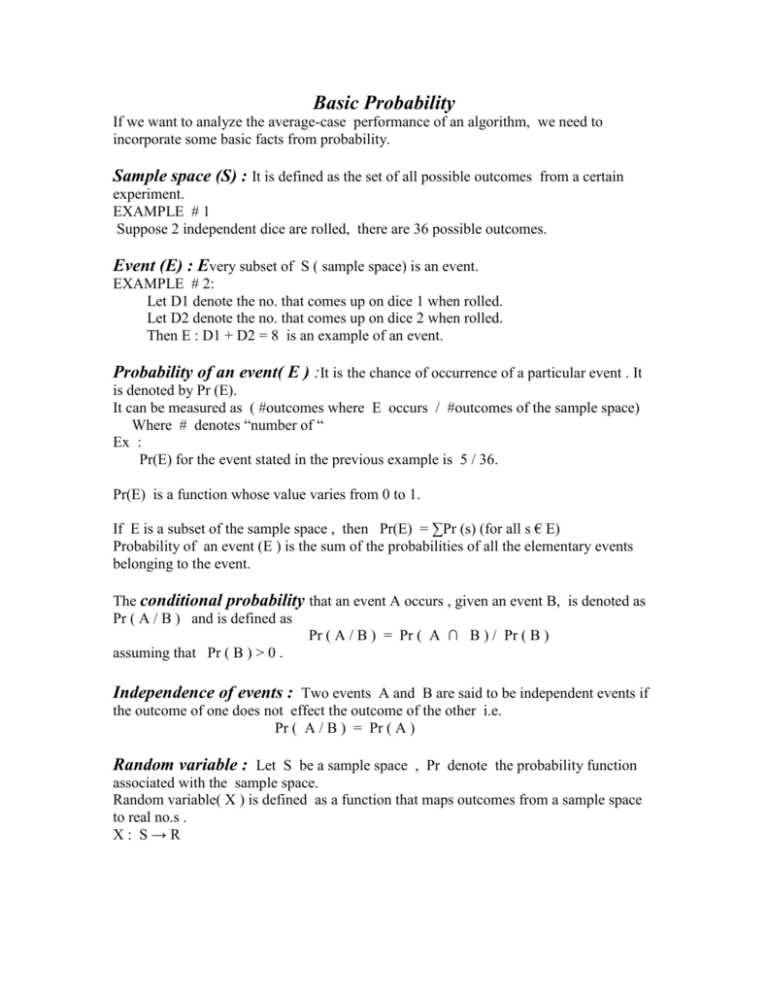

Basic Probability If we want to analyze the average-case performance of an algorithm, we need to incorporate some basic facts from probability. Sample space (S) : It is defined as the set of all possible outcomes from a certain experiment. EXAMPLE # 1 Suppose 2 independent dice are rolled, there are 36 possible outcomes. Event (E) : Every subset of S ( sample space) is an event. EXAMPLE # 2: Let D1 denote the no. that comes up on dice 1 when rolled. Let D2 denote the no. that comes up on dice 2 when rolled. Then E : D1 + D2 = 8 is an example of an event. Probability of an event( E ) :It is the chance of occurrence of a particular event . It is denoted by Pr (E). It can be measured as ( #outcomes where E occurs / #outcomes of the sample space) Where # denotes “number of “ Ex : Pr(E) for the event stated in the previous example is 5 / 36. Pr(E) is a function whose value varies from 0 to 1. If E is a subset of the sample space , then Pr(E) = ∑Pr (s) (for all s € E) Probability of an event (E ) is the sum of the probabilities of all the elementary events belonging to the event. The conditional probability that an event A occurs , given an event B, is denoted as Pr ( A / B ) and is defined as Pr ( A / B ) = Pr ( A ∩ B ) / Pr ( B ) assuming that Pr ( B ) > 0 . Independence of events : Two events A and B are said to be independent events if the outcome of one does not effect the outcome of the other i.e. Pr ( A / B ) = Pr ( A ) Random variable : Let S be a sample space , Pr denote the probability function associated with the sample space. Random variable( X ) is defined as a function that maps outcomes from a sample space to real no.s . X: S→R Pr[ X = x ] = ∑ Pr ( w ) such that X (w) = x for all w € S EXAMPLE # 3 Suppose two dice are rolled as stated in the Ex 2 and let the random variable X = D1 + D2 , then X can take any value from 2 to 12. For X = 8 , Pr ( X ) = 5/ 36. For X = 2 , Pr ( X ) = 1 / 36. So the probability of a random variable is not unique as in the case of an event. It takes different values depending on the value of X taken. An indicator random variable( Z ) is a random variable that maps the outcomes to either 0 or 1 i.e. Z = 1 if E ( event ) occurs = 0 otherwise EXAMPLE # 4 Consider the tossing of 3 coins Z = 1 if all coins show heads up. =0 otherwise Independence of Random Variables : Let X and Y be two random variables X : S → R and Y : S → R Two Equivalent Definitions of Independence: (i) Pr[ X=x / Y=y ] = Pr[ X=x ] (ii) Pr[ X=x ∩ Y=y] = Pr[ X=x ].Pr[ Y=y ] EXAMPLE # 5 Let X = D1 + D2 And Y = 1 if D1 = 5 If X = 2 , then Pr[X] = 1/36 If Y = 1, then Pr[ X=2 / Y=1 ] = 0 So these two random variables X and Y are not independent. EXAMPLE # 6 Let D1 be a random variable already defined in EXAMPLE # 2 Let I7 = 1 if D1 + D2 = 7 =0 otherwise Pr[ I7 = 1 ] = 1/6 Pr[ I7 = 1 / D1 = x ] = 1/6 Since these two values are equal, it is clear that D1 and I7 are independent random variables. Expected Value of a Random Variable : E[ X ] = ∑x€X x.Pr[ X=x ] Expectation of Random Variable is a weighted average of the probability of all its values. E[D1] = 1(1/6) + 2(1/6) + 3(1/6) + 4(1/6) + 5(1/6)+ 6(1/6) = 21/6 E[I7] = 1(1/6) + 0(5/6) = 1/6 To find the expected value of the sum of 2 random variables X1 and X2 E[X1 + X2] = E[X1] + E[X2] E[X + Y] = ∑e€S Pr[e]( X[e] + Y[e] ) = ∑e€S Pr[e] X[e] + ∑e€S Pr[e]Y[e] = E[X] + E[Y] EXAMPLE # 7 X = D1 + D2 Y=1 if D1 = 5 E[X] = 2(1/36) + 3(2/36) + 4(3/36) + 5(4/36) + 6(5/36) + 7(6/36) +8(5/36) + 9(4/36) + 10(3/36) + 11(2/36) + 12(1/36) =7 E[Y] = 1/6 E[X + Y] = 43/6 = E[X] + E[Y] This shows that the proof stated above is valid for any 2 random variables irrespective of their independence or not.