McNamara et al ESM

McNamara et al ESM: Appendices

Appendix 1: Specific example

This appendix considers a specific case (i.e., particular functions) to allow the magnitude

of the effect to be quantified.

We assume that the unknown predator density μ has a Gamma(α, β) distribution. The mean and variance of the predator density are then E{μ} = α/β and Var{μ} = α/β².

Assuming all that matters is overwinter survival (rather than condition having survived), we take V(u) to be constant, so that V'(u) = 0. The background mortality, θ, is set to zero, although any fixed sources of mortality can be incorporated into the function a(u).

With μ fixed, we have

$$\mu = -\frac{a'(u_f)}{b'(u_f)}.$$

With μ not fixed,

$$\tilde{\mu} \equiv E(\mu) + \frac{\mathrm{Cov}(S, \mu)}{E(S)} = -\frac{a'(u^*)}{b'(u^*)},$$

where E(μ) = α/β and Var(μ) = α/β².

From Eμ(SY) = 0, we have

E(-exp{-a(u*)T} exp{-μb(u*)T}(μ + a’(u*)/b’(u*))) = 0.

i.e.

E(exp{-μb(u*)T}( μ + a’(u*)/b’(u*))) = 0.

Let X = exp{-μb(u*)T} and Y = μ + a’(u*)/b’(u*), so E(XY) = 0.

E(XY) = E(μ exp{-μb(u*)T} + (a’(u*)/b’(u*)) exp{-μb(u*)T}).

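$$E\big(\mu\, e^{-\mu b(u^*)T}\big) = \frac{\beta^{\alpha}}{\Gamma(\alpha)}\int_0^{\infty} \mu\, e^{-\mu b(u^*)T}\, \mu^{\alpha-1} e^{-\beta\mu}\, d\mu = \frac{\beta^{\alpha}\,\Gamma(\alpha+1)}{\Gamma(\alpha)\,(\beta + b(u^*)T)^{\alpha+1}} = \frac{\alpha}{\beta + b(u^*)T}\left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}.$$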

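$$E(X) = E\big(e^{-\mu b(u^*)T}\big) = \frac{\beta^{\alpha}}{\Gamma(\alpha)}\int_0^{\infty} e^{-\mu b(u^*)T}\, \mu^{\alpha-1} e^{-\beta\mu}\, d\mu = \left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}.$$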

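Thus,

$$E(XY) = \left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}\left[\frac{\alpha}{\beta + b(u^*)T} + \frac{a'(u^*)}{b'(u^*)}\right]$$

and

$$E(Y) = E(\mu) + \frac{a'(u^*)}{b'(u^*)} = \frac{\alpha}{\beta} + \frac{a'(u^*)}{b'(u^*)}.$$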

Now Cov(S,Y) = Cov(Xexp(-(a(u*) + θ)T), Y) = Cov(X, Y) exp(-(a(u*) + θ)T).

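And

$$\mathrm{Cov}(X, Y) = E(XY) - E(X)E(Y) = \left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}\left[\frac{\alpha}{\beta + b(u^*)T} - \frac{\alpha}{\beta}\right] = -\frac{\alpha\, b(u^*)T}{\beta\,(\beta + b(u^*)T)}\left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}.$$

Because Y = μ + a'(u*)/b'(u*) differs from μ only by a constant, Cov(S, Y) = Cov(S, μ), so

$$\tilde{\mu} = E(\mu) + \frac{\mathrm{Cov}(S, Y)}{E(S)} = E(\mu) + \frac{\mathrm{Cov}(X, Y)\exp(-(a(u^*) + \theta)T)}{E(S)}.$$

Thus

$$\tilde{\mu} = \frac{\alpha}{\beta} - \frac{\alpha\, b(u^*)T}{\beta\,(\beta + b(u^*)T)}\left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}\frac{\exp(-(a(u^*) + \theta)T)}{E(S)}.$$

Now

$$E(S) = \exp(-(a(u^*) + \theta)T)\,E(X) = \exp(-(a(u^*) + \theta)T)\left(\frac{\beta}{\beta + b(u^*)T}\right)^{\alpha}.$$

So

$$\tilde{\mu} = \frac{\alpha}{\beta} - \frac{\alpha\, b(u^*)T}{\beta\,(\beta + b(u^*)T)}, \quad\text{i.e.,}\quad \tilde{\mu} = \frac{\alpha}{\beta + b(u^*)T}.$$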

Thus μ̃ = E(μ) when T = 0; otherwise, μ̃ < E(μ).
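The effective risk can be checked numerically. The following is a minimal sketch, assuming illustrative values α = 2, β = 2 and b(u*)T = 1.5 (none of these come from the text); the helper name `gamma_expectation` and the use of the midpoint rule are choices made only for this illustration. It integrates against the Gamma(α, β) density and compares E(μX)/E(X) with the closed form α/(β + b(u*)T).

```python
import math

def gamma_expectation(g, alpha, beta, upper=40.0, n=100_000):
    """Approximate E[g(mu)] for mu ~ Gamma(shape=alpha, rate=beta) by the midpoint rule.

    Assumes alpha >= 1 (density bounded at 0) and a negligible tail beyond `upper`.
    """
    h = upper / n
    log_norm = alpha * math.log(beta) - math.lgamma(alpha)
    total = 0.0
    for i in range(n):
        mu = (i + 0.5) * h
        density = math.exp(log_norm + (alpha - 1) * math.log(mu) - beta * mu)
        total += g(mu) * density
    return total * h

alpha, beta, bT = 2.0, 2.0, 1.5   # illustrative values only

e_x = gamma_expectation(lambda mu: math.exp(-mu * bT), alpha, beta)
e_mu_x = gamma_expectation(lambda mu: mu * math.exp(-mu * bT), alpha, beta)

effective_risk = e_mu_x / e_x        # E(mu X) / E(X) = E(mu) + Cov(X, mu) / E(X)
closed_form = alpha / (beta + bT)    # closed form for the effective risk
mean_risk = alpha / beta             # E(mu)

print(effective_risk, closed_form, mean_risk)
```

With these values the effective risk falls below the mean risk, and the gap widens as b(u*)T grows.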

Also knowing that μ̃ = -a'(u*)/b'(u*), we obtain an equation for u*:

$$\frac{\alpha}{\beta + b(u^*)T} = -\frac{a'(u^*)}{b'(u^*)}.$$

We let α = β, so the mean value of μ is constant (always 1), and the variance of μ is 1/α.

The equation for u* becomes

$$b'(u^*) + a'(u^*)\left(1 + b(u^*)T/\alpha\right) = 0.$$

If, for example, we let b(u) = u and a(u) = k/u, then b’(u) = 1, a’(u) = -k/u².

Substituting in the above equation for u*, we obtain

$$u^* = \frac{kT/\alpha \pm \sqrt{(kT/\alpha)^2 + 4k}}{2}.$$

For the fixed case, the optimal u is u_f = √k.

Letting k = 1, and considering only the positive solution, we obtain (for the variable case)

$$u^* = \frac{T/\alpha + \sqrt{(T/\alpha)^2 + 4}}{2}.$$

From u*, we can calculate the effective predation risk, μ̃ = -a'(u*)/b'(u*), and the expected survival, E(S), where S = exp{-(a(u*) + θ + μb(u*))T}.
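As a rough illustration of the magnitudes involved, the sketch below evaluates the example with b(u) = u, a(u) = k/u, α = β and θ = 0. The function names and the parameter values (k = 1, T = 2, α = 1) are assumptions chosen for illustration; the grid search is included only as an independent check on the closed-form root.

```python
import math

def optimal_u(k, T, alpha):
    """Positive root of u^2 - (k T / alpha) u - k = 0 (variable-mu case, alpha = beta)."""
    c = k * T / alpha
    return (c + math.sqrt(c * c + 4.0 * k)) / 2.0

def expected_survival(u, k, T, alpha, theta=0.0):
    """E(S) for a(u) = k/u, b(u) = u and mu ~ Gamma(alpha, alpha)."""
    return math.exp(-(k / u + theta) * T) * (alpha / (alpha + u * T)) ** alpha

k, T, alpha = 1.0, 2.0, 1.0           # illustrative values only
u_star = optimal_u(k, T, alpha)
u_fixed = math.sqrt(k)                # optimum when mu is fixed at its mean of 1
effective_risk = k / u_star ** 2      # -a'(u*) / b'(u*)

# Independent check: brute-force maximisation of E(S) over a grid of u values.
grid = [0.001 * i for i in range(1, 10001)]
u_grid = max(grid, key=lambda u: expected_survival(u, k, T, alpha))

print(u_fixed, u_star, u_grid, effective_risk, expected_survival(u_star, k, T, alpha))
```

Under these assumed values the optimal u exceeds the fixed-case optimum, and the effective predation risk is well below the mean of 1.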

Appendix 2: Lifetime reproductive success

Our model incorporates two types of mortality. One type is independent of the organism’s behaviour. The other type depends on behaviour. We incorporate both sources of mortality in the context of a trade-off between reproduction and predation. We let θ represent a “background” rate of mortality that is independent of the organism’s behaviour [cf. 10, 11] and μ represent the density of predators in the local environment. We assume that an organism that reproduces at rate u has rate of mortality θ + μM(u). Here M(u) is an increasing function of u that captures the trade-off between reproduction and predation. We also make the standard assumption that M(u) is an accelerating function of u [cf. 13]. At rate of reproduction u the organism’s mean lifetime is L(u) = 1/(θ + μM(u)) and the organism’s lifetime reproductive success is R(u) = uL(u) = u/(θ + μM(u)).

Natural selection should favour the value u* of u that maximises R(u). This value satisfies the derivative condition R'(u*) = 0, from which it follows that

$$\mu u^* M'(u^*) = \theta + \mu M(u^*). \tag{A2.1}$$

For example, when M(u) = u² this equation predicts that u* = √(θ/μ). Thus the rate of reproduction increases with increasing background mortality and decreases with increasing density of predators. These trends always apply when the function M(u) is accelerating.
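For completeness, the differentiation behind (A2.1) can be written out as follows. This is a worked sketch rather than part of the original derivation, and it takes lifetime reproductive success to be the rate of reproduction multiplied by the mean lifetime, R(u) = u/(θ + μM(u)).

$$
\begin{aligned}
R'(u) &= \frac{\theta + \mu M(u) - \mu u M'(u)}{(\theta + \mu M(u))^{2}}, \\
R'(u^*) = 0 \;&\Longrightarrow\; \mu u^* M'(u^*) = \theta + \mu M(u^*), \\
M(u) = u^{2} \;&\Longrightarrow\; 2\mu (u^*)^{2} = \theta + \mu (u^*)^{2} \;\Longrightarrow\; u^* = \sqrt{\theta/\mu}.
\end{aligned}
$$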

Now suppose that the organism does not know the local value of either θ or μ, but is adapted to the distribution of these unknown parameters. Let θ̄ denote the mean of θ and μ̄ denote the mean of μ. These means are rates of mortality in a randomly selected environment. It seems reasonable to claim that the organism should behave as if the means are the actual rates, but we show that this claim is false.

Natural selection should favour the value û of u that maximises the mean lifetime reproductive success E(R(u)) = R̄(u). From the condition R̄'(û) = 0 it follows that

$$E\left\{\frac{\theta + \mu M(\hat{u}) - \mu \hat{u} M'(\hat{u})}{(\theta + \mu M(\hat{u}))^{2}}\right\} = 0. \tag{A2.2}$$

Set X = 1/(θ + μM(û))². Then equation (A2.2) can be written as

$$\tilde{\theta} - \tilde{\mu}\,\big(\hat{u} M'(\hat{u}) - M(\hat{u})\big) = 0, \tag{A2.3}$$

where θ̃ = θ̄ + Cov(θ, X)/E{X} and μ̃ = μ̄ + Cov(μ, X)/E{X}.

Comparing equations (A2.1) and (A2.3) we see that θ has been replaced by θ̃ and μ has been replaced by μ̃.
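The step from (A2.2) to (A2.3) uses only the identity E{ZX} = E{Z}E{X} + Cov(Z, X). A sketch, with X = 1/(θ + μM(û))², with θ̄ and μ̄ the means of θ and μ, and with c = ûM'(û) − M(û) (the symbol c is introduced here purely for brevity), so that (A2.2) reads E{X(θ − μc)} = 0:

$$
\begin{aligned}
0 &= E\{\theta X\} - c\,E\{\mu X\} \\
  &= \big(\bar{\theta}\,E\{X\} + \mathrm{Cov}(\theta, X)\big) - c\,\big(\bar{\mu}\,E\{X\} + \mathrm{Cov}(\mu, X)\big), \\
\text{so}\quad 0 &= \left(\bar{\theta} + \frac{\mathrm{Cov}(\theta, X)}{E\{X\}}\right) - c\left(\bar{\mu} + \frac{\mathrm{Cov}(\mu, X)}{E\{X\}}\right) = \tilde{\theta} - \tilde{\mu}\,c .
\end{aligned}
$$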

If θ is fixed and μ varies, then the covariance Cov(μ, X) is negative (X is a decreasing function of μ), so μ̃ < μ̄. Similarly, if μ is fixed and θ varies, Cov(θ, X) is negative so θ̃ < θ̄. Thus in both cases, the organism should act as if the risk of mortality is less than the mean risk.
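The direction of this effect can be illustrated numerically. The sketch below assumes M(u) = u², a fixed θ = 0.1, and a gamma-distributed μ with mean 1; the function names, the parameter values, the Monte Carlo sample size and the grid resolution are all choices made for this illustration, not taken from the text.

```python
import math
import random

def mean_lrs(u, theta, mu_samples):
    """Sample average of R(u) = u / (theta + mu * M(u)) with M(u) = u^2."""
    return sum(u / (theta + mu * u * u) for mu in mu_samples) / len(mu_samples)

def optimal_rate(theta, variance, n_samples=4000, grid_steps=200, seed=1):
    """Grid search for the u in (0, 1] that maximises mean lifetime reproductive success.

    mu ~ Gamma with mean 1 and the given variance, i.e. shape = rate = 1 / variance.
    """
    rng = random.Random(seed)
    shape = 1.0 / variance
    scale = variance                     # random.gammavariate takes a scale, not a rate
    mu_samples = [rng.gammavariate(shape, scale) for _ in range(n_samples)]
    grid = [i / grid_steps for i in range(1, grid_steps + 1)]
    return max(grid, key=lambda u: mean_lrs(u, theta, mu_samples))

theta = 0.1                              # illustrative background mortality
u_known = math.sqrt(theta / 1.0)         # optimum if mu were known to equal its mean
u_low = optimal_rate(theta, variance=0.01)
u_high = optimal_rate(theta, variance=1.0)

# Effective predation risk: the known mu for which u_high would be optimal
# (valid when u_high lies strictly inside the allowed range).
effective_risk = theta / u_high ** 2

print(u_known, u_low, u_high, effective_risk)
```

With a small variance the optimum stays close to √(θ/μ̄); with a large variance it moves upward, which corresponds to an effective predation risk below the mean.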

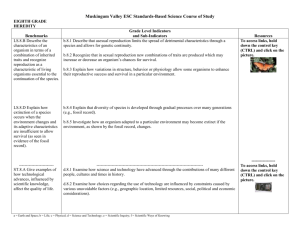

Figure A2.1 illustrates the case in which θ is known and μ unknown. We investigate the effect of increasing the variance in μ while keeping its mean constant. As the variance increases, the optimal rate of reproduction increases (Fig A2.1a). In other words, the organism is behaving as if the predation risk is less than its mean value and the discrepancy increases as the variance in μ increases. We can represent the situation in

terms of the organism’s effective predation risk. This is the value of a known predation

risk that would produce the same optimal behaviour as the optimal behaviour under the

unknown predation risk. As Fig A2.1b shows, this effective risk decreases strongly as the

variance increases. The mean lifetime reproductive success under the optimal strategy

increases as variance increases (Fig A2.1c).

[Figure A2.1: panels (a), (b) and (c)]

Figure A2.1. Effect of the variance in the density of predators on the optimal reproductive strategy. The trade-off between reproduction and predation is represented by the function M(u) = u² (see text). It is assumed that the rate of reproduction is constrained to lie in the range 0 ≤ u ≤ 1. The density of predators, μ, is unknown and is assumed to have a gamma distribution. The parameters of the gamma distribution are changed so as to change the variance while holding the mean fixed at μ̄ = 1. The background mortality rate, θ, is constant, and results are illustrated for three values of this parameter. (a) The optimal rate of reproduction. (b) The effective predation risk. (c) Mean lifetime reproductive success.

Appendix 3: Bandit models

In this appendix, we use two bandit models to identify how behaviour can appear

optimistic with respect to the risk of mortality, and show that the effect still occurs when

decisions are based on learnt parameters.

Model 1

Let us assume that an animal has a choice of two locations at which it could forage in any

given period of time (e.g., each day of winter). It knows the probability of survival per

time step, p, at one of the locations (e.g., where it reached maturity) but has no indication

of its survival chances elsewhere. We assume that as an adult, it learns about predation

risk in previously unknown locations only through the fact that it is still alive.

If the animal is to maximise its probability of survival for a given number of time steps,

N, then the animal should remain for life in whichever location it chooses first. This is

because if the known location is chosen first, no additional information is gained and

there is no reason to switch, while if the unknown patch is tried first, the only way the

animal learns anything bad about the environment (i.e., not to use it in the future) is by

being killed. However, the number of time steps over which survival is to be maximised

will still be relevant to its initial choice.

The probability of surviving N time steps on the known patch is p^N. We shall assume a

beta prior for the probability of survival at the unknown location, with hyperparameters α

and β. The mean of the distribution, α/(α+β), is the initial expected probability of

survival on the unknown location; with each time step that the animal remains alive, the

animal learns that the environment is probably less dangerous than the initial estimate.

This equates to incrementing α with each time step (cf. Trimmer et al. 2011). The

probability of surviving N time steps at the unknown location is then

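$$\frac{\alpha}{\alpha+\beta}\cdot\frac{\alpha+1}{\alpha+\beta+1}\cdots\frac{\alpha+N-1}{\alpha+\beta+N-1} = \frac{(\alpha+N-1)!\,(\alpha+\beta-1)!}{(\alpha-1)!\,(\alpha+\beta+N-1)!}.$$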

For a uniform prior, α = β = 1, this reduces to 1/(N+1), so the critical probability, pc,

above which it would be better to choose the known location (i.e., when p > pc) is given

by

$$p_c = \frac{1}{(N+1)^{1/N}}.$$

This critical probability is shown in Figure A3.1.

Figure A3.1: The critical probability of survival (pc) on the known patch above which the

unknown patch should not be visited, when maximising the probability of surviving for N

time steps.

Because the critical probability is greater than 0.5 (the average probability of survival at the unknown location) whenever N > 1, we see that the unknown location can be preferred even though the expected probability of survival at the unknown location is initially less than that of the known location. This occurs for any survival probability (at the known location) between 0.5 and the critical probability.
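The survival product and the critical probability for Model 1 are simple to compute. The following sketch uses exact rational arithmetic for the product; the function names are hypothetical, and integer hyperparameters are assumed.

```python
from fractions import Fraction

def unknown_survival(alpha, beta, n):
    """Probability of surviving n steps at the unknown location under a Beta(alpha, beta) prior.

    Each survived step increments alpha, so the step-i survival probability is
    (alpha + i) / (alpha + beta + i).
    """
    prob = Fraction(1)
    for i in range(n):
        prob *= Fraction(alpha + i, alpha + beta + i)
    return prob

def critical_probability(n, alpha=1, beta=1):
    """Per-step survival probability p_c on the known patch at which p_c**n equals the unknown-patch value."""
    return float(unknown_survival(alpha, beta, n)) ** (1.0 / n)

for n in (1, 2, 5, 10, 50):
    print(n, unknown_survival(1, 1, n), round(critical_probability(n), 4))
```

For the uniform prior the product collapses to 1/(N+1), and the critical probability equals 0.5 at N = 1 and rises above it for larger N.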

Although in the model above the animal can learn about the risk of mortality in the

unknown environment, there is no benefit to learning, because learning can only occur in

the positive direction, so does not affect behaviour. We now consider a case where the

animal can learn about the new environment and modify its behaviour accordingly.

Model 2

We consider a situation in which an animal must survive for some known period (e.g.,

winter) before it can reproduce. We divide the time period up into a series of N discrete

time steps, in each of which, the animal must choose between two locations (e.g., to

forage). These locations differ only in their predation risk.

To allow learning about the risk of mortality to take place in a situation where the animal

can modify its behaviour accordingly, we assume that at each time step, the animal may

see a predator without necessarily being killed. Having seen a predator, there is some

probability, d, that the animal is killed. For simplicity, we shall assume that if it is killed,

it will have seen the predator before it dies. The probability of seeing a predator in a

given time step is assumed to be independent of previous time steps, depending only

upon the current location.

We assume that the probability of seeing (or not seeing) a predator at the unknown

location has a beta distribution (with uniform initial prior), so each update results in a

new beta distribution with one of the hyperparameters having been incremented. The

bandit problem can then be formulated as a recurrence relation. To provide some

consistency with the first model, in the unknown environment, we increment α each time

a predator is not seen and β each time one is seen. In the known environment, p is now

used to denote the probability of not seeing a predator.

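The overall probability of death when behaving optimally for the N time steps, R(α, β, p, N), is:

$$R(\alpha,\beta,p,N) = \min\left\{\begin{array}{l}(1-p)\big(d + (1-d)R(\alpha,\beta,p,N-1)\big) + pR(\alpha,\beta,p,N-1),\\[6pt] \dfrac{\alpha}{\alpha+\beta}R(\alpha+1,\beta,p,N-1) + \dfrac{\beta}{\alpha+\beta}\big(d + (1-d)R(\alpha,\beta+1,p,N-1)\big)\end{array}\right.$$

with

$$R(\alpha,\beta,p,1) = \min\left\{(1-p)d,\ \frac{\beta}{\alpha+\beta}\,d\right\}.$$

The first option in each minimum corresponds to choosing the known location and the second to choosing the unknown location. The overall probability of survival is 1 − R(α, β, p, N).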

Note that if at any point the known location is chosen, no new information is obtained, so there is no reason to switch back to the unknown location.
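The recurrence can be evaluated by memoised recursion. The sketch below assumes that R denotes the probability of death (consistent with the minimisation and with the one-step base case), that the prior is uniform (α = β = 1), and that the preference switches from the unknown to the known location at a single value of p, so that bisection applies. The function names are hypothetical, and d = 0.05 is the value quoted in the caption of Figure A3.2.

```python
from functools import lru_cache

def option_values(alpha, beta, p, n, d):
    """Death probabilities over n steps if the known / unknown location is chosen now."""
    q = beta / (alpha + beta)   # current estimate of P(see a predator) at the unknown location
    if n == 1:
        return (1 - p) * d, q * d
    stay = death_prob(alpha, beta, p, n - 1, d)
    known = (1 - p) * (d + (1 - d) * stay) + p * stay
    unknown = ((1 - q) * death_prob(alpha + 1, beta, p, n - 1, d)
               + q * (d + (1 - d) * death_prob(alpha, beta + 1, p, n - 1, d)))
    return known, unknown

@lru_cache(maxsize=None)
def death_prob(alpha, beta, p, n, d):
    """R(alpha, beta, p, n): death probability under optimal choices for n steps."""
    return min(option_values(alpha, beta, p, n, d))

def critical_p(n, d=0.05, tol=1e-9):
    """Bisection for the p below which the unknown location should be visited first."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        known, unknown = option_values(1, 1, mid, n, d)
        if unknown < known:
            lo = mid            # unknown location still preferred: critical value is higher
        else:
            hi = mid
    return (lo + hi) / 2

for n in (1, 2, 5, 10, 20):
    print(n, round(critical_p(n), 4))
```

For a single time step the critical value is 0.5, since no learning can be exploited; with more steps it rises above 0.5, reflecting the value of the information gained at the unknown location.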

Due to the benefit of gaining information - and potentially finding a location with lower

mortality risk - we again find that the animal can rationally choose the unknown location

when the expected risk of mortality is greater than at the known location; this is depicted

in Figure A3.2.

Figure A3.2: The critical probability of success (pc) on the known location, below which

the unknown location should be visited first, in relation to the total number of time steps

(N). The solid line relates to the learning situation, in which the animal can switch

patches based upon what it learns, the dashed line shows the smaller bias when learning

is irrelevant because the first location to be chosen is fixed for the duration. [d = 0.05]

Figure A3.2 also shows the line corresponding to the non-learning (or not being able to

change one’s decision) case of Model 1. (The trend is less pronounced in this figure due

to the lower mortality risk.) The discrepancy between the lines indicates that rather than

learning situations reducing any apparent optimism about mortality, the very fact that one

will be able to learn, and take advantage of that information, can lead to much greater

differences (apparent optimism) about the risk of mortality at the outset.

In summary, in each model we see that the unknown location can be preferred even

though the expected (i.e., average) probability of survival at the unknown location is

(prior to gaining any information) less than that of the known location. Fundamentally,

this is because the animal is interested in maximising its overall probability of survival,

not just the expected survival probability in a single time step.

The driving force in each model is the same as that in the main text. In the face of

uncertainty, if the potential for long-term gains (if the unknown environment turns out to

be better than average) outweighs the potential for losses (if worse than average), then it

is better to take the lesser known option.