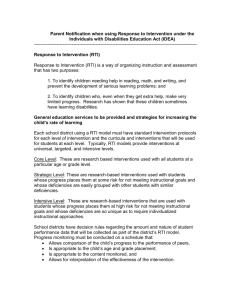

Response to Intervention Models - Oregon Department of Education

advertisement