Thesis Proposal - School of Computer Science

advertisement

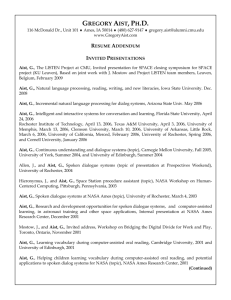

Gregory Aist Page 1 of 27 2/13/2016 Improving Elementary Students’ Reading Abilities with Skill-Specific Spoken Dialogs in a Reading Tutor that Listens Ph.D. Thesis Proposal Gregory Aist Language Technologies Institute Carnegie Mellon University 213 Cyert Hall, 4910 Forbes Avenue Pittsburgh, PA 15213-3720 aist+@andrew.cmu.edu Thesis Committee: Jack Mostow, mostow@cs.cmu.edu, Robotics Institute/LTI, advisor Albert Corbett, al.corbett@cs.cmu.edu, HCII Alex Rudnicky, air@cs.cmu.edu, CSD/LTI Charles Perfetti, perfetti+@pitt.edu, University of Pittsburgh Gregory Aist Page 2 of 27 2/13/2016 Abstract The Literacy Challenge. Reading is fundamental. If children don't learn to read independently by the fourth grade, they fall further and further behind in school. One-on-one instruction by trained human tutors succeeds in helping kids learn to read, but is expensive and sometimes unavailable. Efforts to duplicate the effects of one-on-one tutoring in large group settings have typically not matched the performance of human tutors. The Technology Opportunity. Advances in speech recognition and spoken dialog technology have made possible computer-based reading tutoring. Intelligent tutoring systems based on cognitive principles have previously proven successful in such varied domains as algebra and computer programming. By combining spoken dialogue and intelligent tutoring systems we hope to come closer to the goal of a "two-sigma" computer tutor for reading -- one that duplicates the twostandard-deviation gain in reading skill observed for human-human tutoring. Research Strategy. One methodology in reading research is to study successful human tutors and identify what makes tutorial dialog effective. Unfortunately, human-human tutorial dialog cannot be directly imitated by the computer. Errors in speech recognition, combined with the broad range of discourse, domain and world knowledge used by human tutors, require an indirect approach. Therefore, we intend to identify a few critical reading skills and explore which features of human- Gregory Aist Page 3 of 27 2/13/2016 human dialog effectively train those skills. For each skill, by combining a cognitive model of the skill with the dialog features that effectively train components of that skill, we will design human computer multimodal dialogues that successfully train the desired skill. Organization of this Proposal. In this proposal, we briefly describe related research in beginning reading and in educational software for reading. We then describe Project LISTEN’s Reading Tutor, including some of the technological and design considerations that will guide our dialog design for reading tutoring. Next, we suggest several examples of reading skills we might focus on: word attack, word comprehension, and passage comprehension. For each example skill, we describe how that skill is learned and taught, discuss hypothetical computer human dialogs designed to train that skill, propose methods for evaluating the effectiveness of such dialogs, and consider the expected contributions of developing successful dialogs. (As implemented in the thesis, these dialogs may be newly designed, or they may be modifications of existing dialogs within the Reading Tutor.) We claim that skill specific human computer multimodal dialogs, based on cognitive skill models and successful human tutoring strategies, can improve elementary students' reading abilities. Related Research Beginning Reading. Many factors involved in achieving competence in early reading. For poor readers, word recognition skills are critical (Ehrlich 1993, Stanovich 1991). For good readers, other factors including metacognitive skills and motivation are also important: “Basic word decoding and perceptual skills are necessary in order to read; if a child lacks these cognitive skills, even the most adaptive attribution and self-efficacy beliefs will not magically reveal the meaning behind the text. Thus for poor readers, word decoding skill is highly related to comprehension Gregory Aist Page 4 of 27 2/13/2016 ability. In contrast, for good readers who possess adequate decoding skills, motivational variables such as perceived competence emerge as influential factors determining reading performance.” (Ehrlich 1993). In addition to predicting immediate ability, poor word decoding skills are a good predictor of long-term reading difficulties (Ehrlich 1993). Beyond word recognition, fluent reading relies on lower-level cognitive skills such as symbol-naming ability (Bowers 1993). Phonological awareness is also a factor, but it is not clear whether this is an result of word-recognition skills or an independent contribution to reading success (Bowers 1993). Individual differences also play a role in achieving reading fluency. For example, while some poor readers learn better with instruction including “Listening Previewing”, or hearing a passage read aloud while following along in the text (Daly and Martens 1994), some learn better without previewing (Tingstrom 1995). What role do segmentation skills play in beginning reading? Nation and Hulme (1997) found that phonemic segmentation predicts early reading and spelling skills more than onset-rime segmentation. Peterson and Haines (1992) found that training kindergarten children to construct rhyming words from onsets and rimes improved children's segmentation ability, letter-sound knowledge, and ability to read words by analogy. What about selection of material? Rosenhouse et al. (1997) found that interactive reading aloud to firstgraders led to increases in decoding, passage comprehension, and picture storytelling. Rosenhouse et al. also found that reading serial stories (stories with the same characters and moderately predictable plots or conflicts) had a positive impact on the number of books bought for pleasure reading. A review of the literature by Roller (1994) reveals that teachers interrupt poor readers more frequently than good readers, but which comes first (poor reading or interruption) is not clear. Also, with good readers, more emphasis is placed on meaning. Again, the reasons and causal relationships are unclear. Gregory Aist Page 5 of 27 2/13/2016 Because of the importance of word recognition in learning to read, reading software should encourage the development of word decoding skills and aim to increase the sight vocabulary of the student. However, since motivational variables become important for good readers, and the goal of the software is to enable poor readers to become good readers, reading software should ideally also encourage the development of positive motivational attitudes towards reading and provide experiences that let the student experience the joy of reading. Educational software for reading. While the literature on intelligent tutoring systems is quite substantial, we will focus here on automated reading tutors. Commercial reading systems provide help on demand, and some (Vanderbilt 1996) even provide the opportunity for students to record their own readings of the material. Use of speech recognition in reading systems is much more rare and is at the research stage. Some reading software systems provide spoken assistance on demand (Discis 1991), (Edmark 1995), (Learning Company 1995), and the use of synthesized speech has been explored in a research context (Lundberg and Olofsson1993). The Little Planet Literacy Series (Vanderbilt 1996) provides help on demand and allows children to record their own voices, but it does not use speech recognition and therefore cannot judge the quality of the child's reading or offer help based on such a judgement. Previous research has demonstrated that mouse- or keyboard-oriented computer-assisted instruction can improve reading skills such as phonological awareness and word identification (e.g. Barker and Torgesen 1995). Lewin (1998) in a study of “talking book” software, found that such software was typically used with students in pairs (82%) or as individuals (29%), or occasionally with students in larger groups (11%). Pairs or groups were more commonly normally progressing readers, perhaps to ensure that all students Gregory Aist Page 6 of 27 2/13/2016 were able to use the software while allowing poorer readers more individual time. Teachers requested additional feedback from the software, such as onset and rime, and “hints”. Teachers also requested more "reinforcement activities" for particular skills . Most teachers, however, made little use of records of which words kids clicked on. As a cautionary note, in software filled with animated or talking characters, children may spend large amounts of time clicking on the characters to see the animation (Underwood and Underwood 1998), to the detriment of time spent reading. What is it that distinguishes human tutoring from most reading software? One fundamental difference is that in human tutoring, to one extent or another, the student produces the desired sounds (e.g. pronouncing an unfamiliar word), instead of recognizing them. Not only does the student make the sound; the student makes the effort to make the sound. By contrast, in reading software that does not listen, students may be restricted to receptive activities such as matching up rhyming words, or to constructive activities that redirect what would normally be speech into some other medium, such as putting blocks together to make words. Automatic speech recognition (Huang 1993), while it has appeared in other language-related educational systems (such as single-word foreign language pronunciation training, and speech pathology software), is still a rarity in reading software (but see Edmark 1997). DRA Malvern has developed a system called STAR (Speech Training Aid) that listens to isolated words without context (Russell 1996). Russell et al. (1996) also describe an ongoing research effort with aims similar to those of Project LISTEN, the Talking and Listening Book project, but they use word spotting techniques to listen for a single word at a time. They also require the child to decide when to move on to the next word (fully user-initiated) or completely reserve that choice to the system (fully system-initiated). For other systems using speech recognition with reading tutoring, see (Edmark 1997, IBM 1998). Gregory Aist Page 7 of 27 2/13/2016 Research Context: Project LISTEN Project LISTEN: A Reading Tutor that Listens. Project LISTEN’s Reading Tutor (Mostow and Aist AAAI 1997, Mostow et al. 1995, Mostow et al. 1994, Mostow et al. 1993) adapts the Sphinx-II speech recognizer (Huang et al. 1993) to listen to children read aloud. The Reading Tutor runs on a single stand-alone Pentium™. The child uses a noise-cancelling headset or handset microphone and a mouse, Figure 1. Reading Tutor, Fall 1997. (This screen Tutor looks nearly identical in the Fall 1998 Reading Tutor, with Reader relabeled Goodbye.) Gregory Aist Page 8 of 27 2/13/2016 but not a keyboard. Roughly speaking, the Reading Tutor displays a sentence, listens to the child read it, provides help in response to requests or on its own initiative based on student performance. (Aist 1997) describes how the Reading Tutor decides when to go on to the next sentence. The student can read a word aloud, read a sentence aloud, or read part of a sentence aloud. The student can click on a word to get help on it. The student can click on Back to move to the previous sentence, Help to request help on the sentence, or Go to move to the next sentence (Figure 1). The student can click on Story to pick a different story, or on Goodbye to log out. The Reading Tutor can choose from several communicative actions, involving digitized and synthesized speech, graphics, and navigation (Aist and Mostow 1997). The Reading Tutor can provide help on a word (e.g. by speaking the word), provide help on a sentence (e.g. by reading it aloud), backchannel (“mm-hmm”), provide just-in-time help on using the system, and navigate (e.g. go on to the next sentence). With speech awareness central to its design, interaction can be natural, compelling, and effective (Mostow and Aist WPUI 1997). Writing. The Reading Tutor has the capability, so far used mostly in the laboratory, to allow new stories to be typed in and then recorded with automatic quality control on the recordings using automatic speech recognition. Taking turns. People in general exhibit a rich variety of turn-taking behavior: interruption, backchanneling, and multiple turns (Ayres et al. 1994, Duncan 1972, Sacks, Schegloff, and Jefferson 1974, Tannen 1984, Uljin 1995). Turn-taking is important in tutorial dialog as well (Fox 1993). Humans use different conversational styles when speaking to computers than when speaking to humans. Shorter sentences, a smaller vocabulary, fewer exchanges, fewer interruptions, and fewer justifications of requests are all characteristic of human conversational style during human-computer spoken dialogue, but it is not clear whether the difference is due to the (supposed or actual) identity of the interlocutor, or Gregory Aist Page 9 of 27 2/13/2016 the interlocutor's conversational style (Johnstone 1994). Apparently when computers behave similarly to humans, human-computer interaction more closely resembles human-human interaction (Johnstone 1994). In general, spoken language systems follow strict turn-taking behavior, and even Wizard of Oz studies tend to use a simple “my turn”—“your turn” approach to low-level discourse behavior in terms of their generation abilities (Johnstone 1994). The Reading Tutor, however, employs a conversational architecture (Aist 1998) that allows interruption by either the Tutor or the student, overlap, backchanneling, and multiple turn-taking (cf. Donaldson and Cohen 1997, Keim, Fulkerson, and Biermann 1997, Ward 1996, Ball 1997). The nature of feedback. In prior work, the Reading Tutor has been designed to never tell the student she was right, and to never tell the student she was wrong. Because of error in the speech recognition, explicit right/wrong feedback would be incorrect sometimes, which might confuse the student. Therefore, the Reading Tutor generally simply gives the correct answer and leaves the right/wrong judgment partially as an exercise for the reader. This turns out to match well with the observation of Weber and Shake (1988) that teachers’ rejoinders to student responses in comprehension discussions were most frequently null or involved repetition of the student’s answer. Giving the correct answer may seem like corrective feedback if the student was right, and may seem like confirmation if the student was wrong. This interpretation depends on the ability of the student to contrast his or her answer with the answer given by the system. Visual design. Throughout the visual design of the Reading Tutor, we will continue to use buttons labeled with both text and pictures, for maximum clarity and ease of use (King et al. 1996). Skill: Word Attack Description. For the first example skill, let us consider “word attack”, or decoding skills. Here the goal Gregory Aist Page 10 of 27 2/13/2016 is to learn how to take unknown words and turn them into sound. There are many stages in taking a word from printed symbol to speech: 1. Visual stimulus "cat" 2. Visual stimulus "c", "a", "t" 3. Orthographic symbols 'c', 'a', 't' 4. Phonological representation: phonemes /k/, /a/, /t/ 5. Phoneme string /kat/ 6. Sound of the word 'cat' This skill concentrates on that part of the reading process that transforms letters into sounds – the orthographic to phonological mapping (#4 above). One reasonable skill model is thus a probabilistic context-sensitive unidirectional grapheme-to-phoneme mappings, at various levels of subword detail. There are several sources for deriving the subword units: inferring them from English text, inferring them from kids’ performance, or from the literature on subword components in reading. Human tutoring strategies. What constitutes a successful human-human dialog for teaching word attack? In a study of 30 college student-elementary student tutoring dyads, Juel (1996) analyzed videotaped interactions for successful tutoring strategies. Two activities were found to be particularly important in successful dyads: (a) the use of texts that gradually and repetitively introduced both high-frequency vocabulary and words with common spelling patterns, and (b) activities in which children were engaged in direct letter-sound instruction. Two forms of verbal interactions were found to be Gregory Aist Page 11 of 27 2/13/2016 particularly important: (a) scaffolding of reading and writing, and (b) modeling of how to read and spell unknown words. A synergistic relationship was found to exist between the form and content of instruction. (Juel 1996). In this study, direct letter sound instruction included making index cards with words on them together. Most of the children’s responses in cited dialogue are words that are answers to tutor's questions. Juel also notes that successful pairs share "affection, bonding, and reinforcement." Why are dialogs like those described by Juel successful? Perhaps, by sounding out real words, kids get to practice decoding rules in the contexts in which they are used. Perhaps, by providing scaffolding, human tutors keep kids from mislearning rules and provide correct examples of decoding. Computer-human dialog. What would a computer-human dialog that successfully taught word attack skills look like? One possibility is to adapt the current Reading Tutor sentence-reading dialog to a word-list reading dialog. Using information about what rules are used to pronounce words, and using its records of student performance, the Reading Tutor would select a grapheme-to-phoneme rule for the student to practice. The Reading Tutor would present a list of words that involved a specified rule. The student would then read each of these words, with the Reading Tutor focusing on modeling correct sounding-out of the words to reinforce the particular rule. By placing the correct stimulus (the words) on the screen, we would hope to reduce off-task or non-reading speech, and thus make the speech recognition task feasible. What kind of scaffolding might the Reading Tutor provide during this task? The Reading Tutor already provides help such as sounding out words and providing rhyming words. In the future, the Reading Tutor might employ other help, such as orthographic units slightly apart as a subtle aid to visually grouping letters together: “team” might get redisplayed briefly as “t ea m”. Another possibility is for the Reading Tutor to dynamically construct short bits of text for the student to read that, when read, get the student to “practice” sounding out a word: “t ea m. t ea m. team.” Gregory Aist Page 12 of 27 2/13/2016 Evaluation. How would such a dialog be evaluated? We could test the effectiveness of practice on an individual rule (say, d /d/) by looking for improvements on real words or pseudo-words with that mapping. For example, students could be presented with two pseudo-words to read, where the items are identical except that one contains the rule used during training and the other contains a different rule. Besides pedagogical effectiveness, the dialog must also be understandable to students and fun enough to get them to participate. Expected Contribution. Successful design of a spoken dialog to teach word attack skills would be an important achievement in the field of reading education. In addition, such a dialog is expected to raise important questions about integrating intelligent tutoring systems with speech recognition, two fields that are ripe for combination. Skill: Word Comprehension Description. Once a word has been decoded (or recognized, if it is a familiar word), a student must be able to access the meaning of the word in order to understand the sentence the word is in. What is the goal of training word comprehension skills? Essentially, vocabulary growth – kids should learn the meaning, spelling, pronunciation, and usage of new words. Human tutoring strategies. What are techniques that work when human tutors help students learn new words? For beginning readers, many words may be learned through written context, by having stories read and re-read to them (Eller et al. 1988). In order for children to encounter many new words, however, they may need to read material hard enough to traditionally be considered at their frustration level (Carver 1994) – and children may not choose material this difficult on their own, either in traditional free reading time or with computerized instruction. Gregory Aist Page 13 of 27 2/13/2016 Human tutors also introduce and explain new vocabulary, and help students practice spellings of words. When should definitions or other word specific comprehension assistance be presented? For high school readers, Memory (1990) suggests that the time of instruction (before, during, or after the reading passage) for teaching technical vocabulary may not matter as much as the manner of instruction. The implication is that the Reading Tutor may be able to choose when to present a definition or other word comprehension help at several different times without substantially harming the student’s ability to learn from the assistance. What kind of word-specific comprehension should be given? Definitions, in particular context-specific definitions, are one obvious candidate. What are some other options? Example sentences may be of some help (Scott and Nagy 1997), but learning new words from definitions is still very hard even with example sentences. Which words should be explicitly taught? Zechmeister et al. (1995) suggests that explicit vocabulary instruction be focused on functionally important words, which they operationalize as main entries in a medium-sized dictionary. Human-computer dialog. Here a direct approach to generating a human-computer dialog that captures the essence of human tutorial strategies – such as constructing a dialog where the Reading Tutor interactively explains the meaning of new words, or augmenting stories with specially written, contextsensitive definitions – results in excessive requirements for curriculum design or is beyond the state of the art in spoken dialog. How can we design an alternate interaction that places fewer requirements on instructional content and speech recognition? One possibility is to adapt the Writing capability of the Reading Tutor to allow kids to build up a “My Words” portfolio that serves as an explicit record of the student’s growing vocabulary. The trick here is to try to get the classroom teacher involved in the process by commenting on, and Gregory Aist Page 14 of 27 2/13/2016 indirectly supervising, the student’s production of this portfolio. Words could be selected for inclusion in the My Words portfolio when the student encountered them in stories read with the Reading Tutor. In order to get students to encounter new words, the Tutor could influence or partly control the student’s choice of stories to read in order to steer the student towards harder material. Part of adding a word to “My Words” might include practice sessions for spelling, or reading sentences that contained that word drawn from the stories used by the Reading Tutor. Should the computer or the student take the initiative in selecting word specific comprehension assistance? A study by Reinking and Rickman (1990) indicated that mandatory computer presentation of context specific definitions was better than offline access. Students selected computer presentation was also better than offline access, and mandatory computer presentation was better than student selected computer presentation but not significantly. Perhaps a mixed-initiative strategy, where the Reading Tutor selects extra comprehension activities for some "hard" words but students can choose to pursue them for other words as well, would allow students to feel in control while ensuring some additional comprehension assistance. Evaluation. How would such a portfolio-based interaction be evaluated? As kids read stories, the Reading Tutor would seek to identify new, unusual, or difficult words. Some percentage of those words might be selected for "my words"; the rest would be used as control condition. Students would build up a “My Words” list by reading words and drawing pictures of them. (The resulting objects could be used later in illustrating stories). For each word in “My Words”, students would write definitions and sample sentences for the words - expository writing, to harness kids' communicative intent in order to "get words right". The underlying message here is that learning new words is important because they help you read and write interesting stories – and students should learn the importance of new words through experience. Gregory Aist Page 15 of 27 2/13/2016 How could such an interaction be evaluated? One method would be to determine if students are better at recognizing and understanding the words they've put into “My Words”, as compared to other words that were in the stories they read, but were not selected for “my words”. Several factors make evaluation of this sort of interaction difficult, however. Even if spending time on “My Words” led to improvement in recognizing and understanding the words it contained, it might still be the case that that time might have been better spent reading (different, and challenging) stories. The evaluation for this interaction will have to address these issues. Expected Contribution. A successful human-computer dialog for teaching word comprehension as described above would “close the gap” between reading, writing, and illustrating in the Reading Tutor, allowing students to participate in several literacy activities in a coherent instructional situation. One expected technical contribution is the development of automated techniques for the Reading Tutor to pick out difficult words – from any material -- for students to spend more time on, thus extending comprehension assistance to student-authored material. In addition, the presence of “My Words” as a writing (and therefore narrating) exercise would bring the “authoring” capability of the Reading Tutor to use in real classrooms by students. Another contribution would be the use of speech recognition – constrained to be less difficult than full-scale dictation – in a constructive learning activity, another bridge between spoken dialog systems and instructional tutoring systems. Skill: Passage Comprehension Description. Being able to read and understand individual words is not by itself sufficient. More is needed to be a skilled reader. What is the goal of teaching passage comprehension? Reading with understanding – making meaning out of print. Human tutoring strategies. While a student is reading, human tutors supply words in response to pauses, Gregory Aist Page 16 of 27 2/13/2016 interrupt students to engage in phonologically motivated interventions, complete students' words, and provide backchannel feedback (e.g. Oops!) (Roller 1994). One of the simplest strategies for improved comprehension is rereading. Reading a text twice increases retention of facts (Barnett and Seefeldt 1989). Other skills play a role in passage comprehension. For example, word recognition is a good predictor of reading comprehension, especially for poor readers (Ehrlich et al. 1993). One skill of good readers is adjusting reading rate to passage difficulty (Freese 1997). Providing prior information about relevant subject matter may improve comprehension of text about unfamiliar topics (Stahl and Jacobson 1986). However, improving comprehension on a particular passage is not necessarily the same as helping the student learn how to comprehend other passages better. What about pictures as an aid to comprehension? Pictures have appeal for young students, but may not teach skills that are transferable to text without pictures. For pictures to improve passage comprehension, O’Keefe and Solman (1987) suggest that pictures must be displayed simultaneously with the text they depict, rather than prior to reading the text or after reading the text. Human-computer dialog. Human tutors teach comprehension in a variety of ways, but the current Reading Tutor interaction is based on one-on-one oral reading tutoring. The resulting dialog is called “shared reading”, where the student reads wherever possible and the computer reads wherever necessary. How can shared reading be improved? The current Reading Tutor allows kids free choice of all material on the system. Some kids make what appear to be poor choices -- reading material that's too easy, or reading the same story over and over again. During a 1996-1997 pilot study, six third-grade children who started out below grade level gained almost two years in fluency in only eight months of Reading Tutor use (Mostow and Aist WPUI 1997). The aide (Ms. Brooks) who helped kids pick stories most likely played a role in the observed two-year fluency gain. In order to improve the shared reading Gregory Aist Page 17 of 27 2/13/2016 process, perhaps the Reading Tutor should do better at helping kids pick appropriate material. One possibility here are "curriculum adjustment" tools, where the teacher or some administrator gets to adjust an individual kid's reading list. However, we have found it difficult to involve teachers in directly using the Reading Tutor for such things as entering student data; they seem to prefer interacting with the Reading Tutor indirectly. For example, one teacher put a list of stories to read on an 3x5 index card and set the card on top of the Reading Tutor monitor. So, an alternate approach is to have the Reading Tutor “filter” or “sort” the list of stories that students can access to restrict or guide students to appropriate material. This approach would have to be balanced to allow the teacher to “indirectly” use the computer through influencing her students’ choices, if desired. Evaluation. How should a revised story selection scheme be evaluated? Perhaps the most basic question is whether kids will tolerate the interaction design that lets the Tutor affect story choice. In terms of pedagogical evaluation, the question of appropriate material has at least two subcomponents: is the story of the right difficulty? and, is the story interesting for that kid at that moment?) In addition, since teachers may affect student choice of stories, teacher choices may affect the results of evaluation of Tutor-mediated story selection. One possibility is to separate out good story choices from poor choices, based on records of kids' performance. Note that this is a tricky proposition. What should we assume makes a “good” choice or a “poor” choice? An acceptable range of fluency on that story? Should we seek an expert consensus on which stories are obviously too hard or obviously too easy? If so, by watching videotapes of the student reading the story, or by prediction from the material? One exciting alternate possibility is to test the goodness of the story choice based on the actual learning from that story. It is an open question as to whether or not we can detect learning at that fine of a level. Expected Contribution. By modifying the Reading Tutor to better guide the story choices kids make, we would hope to improve the overall effectiveness of the Reading Tutor. In addition, using the results Gregory Aist Page 18 of 27 2/13/2016 of speech recognition – in the form of a student model – to guide students’ selection of stories from an open-ended set of material forges an important link between automatic speech recognition and intelligent tutoring systems. Summary We propose to enhance Project LISTEN’s Reading Tutor by developing skill-specific human-computer spoken dialogs that train elementary students in fundamental reading skills. Reading is fundamental, and the time is ripe in intelligent tutoring systems and in spoken dialog systems to address beginning reading using techniques from both fields and using the issues that arise to build bridges between the two fields. The major expected scientific contributions are an improved Reading Tutor and a better understanding of how spoken language technology can be used with intelligent tutoring systems. We have briefly described relevant research both in reading and in automated reading tutoring. We have described the work to date on Project LISTEN’s Reading Tutor, which will also serve as the platform for the proposed work. We have described three example skills to train: word attack, word comprehension, and passage comprehension. For each skill we have discussed how human tutors train that skill, how a human-computer spoken dialog might train that skill, and how such a dialog might be evaluated. Finally we will conclude with a projected timeline for completion. Gregory Aist Page 19 of 27 2/13/2016 Proposed Schedule August 1998: Proposal (September 1998 - December 1998: visiting Macquarie University, Sydney, Australia) January 1999 - May 1999: Collect and analyze videotapes and transcripts of successful human-human dialogs, perhaps by courtesy of other researchers; collect examples and descriptions of successful human tutoring strategies from the literature June 1999 - December 1999: Design and implement computer-human dialogs that capture the important characteristics of successful human-human tutoring dialogs January 2000 - May 2000: Usability testing and code hardening June 2000 - May 2001: Evaluation and writeup May 2001: Defense. Acknowledgments This material is based upon work supported in part by the National Science Foundation under Grant No. IRI-9505156 and CDA-9616546 and by the author's National Science Foundation Graduate Fellowship and Harvey Fellowship. Any opinions, findings, conclusions, or recommendations expressed in this publication are those of the author(s) and do not necessarily reflect the views of the National Science Foundation or the official policies, either expressed or implied, of the sponsors or of the United States Government. Gregory Aist Page 20 of 27 2/13/2016 We thank first our committee members; the Principal of Fort Pitt Elementary School, Dr. Gayle Griffin, and the teachers at Fort Pitt for their assistance; Drs. Rollanda O'Connor and Leslie Thyberg for their expertise on reading; Raj Reddy and the CMU Speech Group (especially Ravi Mosur) for the Sphinx-II speech recognizer; and the many present and past members of Project LISTEN whose work contributed to current and previous versions of the Reading Tutor; and many students, educators, and parents for tests of the Reading Tutor tests in our lab and at Fort Pitt Elementary School. References Aist, G. S. 1998. Expanding a time-sensitive conversational architecture for turn-taking to handle content-driven interruption. To appear in ICSLP 1998, Sydney, Australia. Aist, G. S. 1997. Challenges for a Mixed Initiative Spoken Dialog System for Oral Reading Tutoring. AAAI 1997 Spring Symposium on Computational Models for Mixed Initiative Interaction. AAAI Technical Report SS-97-04. Aist, G. S., and Mostow, J. 1997. Adapting Human Tutorial Interventions for a Reading Tutor that Listens: Using Continuous Speech Recognition in Interactive Educational Multimedia. In Proceedings of CALL 97: Theory and Practice of Multimedia in Computer Assisted Language Learning. Exeter, UK. Ayres, J., Hopf, T., Brown, K, and Suek, J. M. 1994. The Impact of Communication Apprehension, Gender, and Time on Turn-Taking Behavior in Initial Interactions. The Southern Communication Journal, 59(2):142-152. Gregory Aist Ball, G. 1997. Page 21 of 27 2/13/2016 Dialogue Initiative in a Web Assistant. AAAI 1997 Spring Symposium on Computational Models for Mixed Initiative Interaction. Barker, Theodore Allen, and Torgesen, Joseph K. 1995. An evaluation of computer-assisted instruction in phonological awareness with below average readers. Journal of Educational Computing Research 13(1), pp. 89-103. Barnett, Jerrold E., and Seefeldt, Richard W. 1989. Read something once, why read it again?: Repetitive reading and recall. Journal of Reading Behavior 21(4), pp. 351-360. Bowers, P. G. 1993. Text reading and rereading: Determinants of fluency beyond word recognition. Journal of Reading Behavior 25(2) 133-153. Carver, Ronald P. 1994. Percentage of unknown vocabulary words in text as a function of the relative difficulty of the text: Implications for instruction. Journal of Reading Behavior 26(4) pp. 413-437. Daly, E.J., and Martens, B. K. 1994. A comparison of three interventions for increasing oral reading performance: application of the instructional hierarchy. Journal of Applied Behavior Analysis 27(3) 459-469. Discis Knowledge Research Inc.. 1991. DISCIS Books. Macintosh software for computer-assisted reading. Gregory Aist Page 22 of 27 2/13/2016 Donaldson, T. and Cohen, R. 1997. A Constraint Satisfaction Framework for Managing MixedInitiative Discourse. AAAI 1997 Spring Symposium on Computational Models for Mixed Initiative Interaction. Duncan, S. 1972. Some signals and rules for taking speaking turns in conversations. Journal of Personality and Social Psychology 23(2):283-292. Edmark. 1995. Bailey's Book House. Edmark. 1997. Let’s Go Read. http://www.edmark.com/prod/lgr/island/. Ehrlich, Marie-France, Kurtz-Costes, Beth, Loridant, Catherine. 1993. Cognitive and motivational determinants of reading comprehension in good and poor readers. Journal of Reading Behavior 25(4), pp. 365-381. Eller, Rebecca G., Pappas, Christine C., and Brown, Elga. 1988. The lexical development of kindergarteners: Learning from written context. Journal of Reading Behavior 20(1), pp. 5-24. Fox, B. A. 1993. The Human Tutorial Dialogue Project: Issues in the Design of Instructional Systems. Hillsdale NJ: Lawrence Erlbaum. Freese, Anne Reilley. 1997. Reading rate and comprehension: Implications for designing computer technology to facilitate reading comprehension. Computer Assisted Language Learning 10(4), pp. 311- Gregory Aist Page 23 of 27 2/13/2016 319. Huang, X. D., Alleva, F., Hon, H. W., Hwang, M. Y., Lee, K. F., and Rosenfeld, R. 1993. The SphinxII Speech Recognition System: An Overview. Computer Speech and Language 7(2):137-148. IBM. 1998. Watch Me Read. http://www.ibm.com/IBM/IBMGives/k12ed/watch.htm. Johnstone, A., Berry, U., Nguyen, T., and Asper, A. 1994. There was a long pause: Influencing turntaking behaviour in human-human and human-computer spoken dialogues. International Journal of Human-Computer Studies 41, 383-411. Juel, Connie. 1996. What makes literacy tutoring effective? Reading Research Quarterly 31(3), pp. 268289. Keim, G. A., Fulkerson, M. S., and Biermann, A. W. 1997. Initiative in Tutorial Dialogue Systems. AAAI 1997 Spring Symposium on Computational Models for Mixed Initiative Interaction. King, Kira S., Boling, Elizabeth; Anneli, Janet; Bray, Marty; Cardenas, Dulce; and Theodore Frick. 1996. Relative perceptibility of hypercard buttons using pictorial symbols and text labels. Journal of Educational Computing Research 14(1), pp. 67-81. The Learning Company. 1995. Reader Rabbit’s® Interactive Reading Journey™. Gregory Aist Page 24 of 27 2/13/2016 Lewin, Cathy. 1998. Talking book design: What do practitioners want? Computers in Education 30(1/2), pp. 87-94. Lundberg, I., and Olofsson, A. 1993. Can computer speech support reading comprehension? Computers in Human Behavior 9(2-3), 283-293. Memory, David M. 1990. Teaching technical vocabulary: Before, during or after the reading assignment? Journal of Reading Behavior 22(1), pp. 39-53. Mostow, J., Hauptmann, A. G., Chase, L. L., and Roth. S. 1993. Towards a Reading Coach that Listens: Automatic Detection of Oral Reading Errors. In Proceedings of the Eleventh National Conference on Artificial Intelligence (AAAI-93), 392-397. Washington DC: American Association for Artificial Intelligence. Mostow, J., Roth, S. F., Hauptmann, A. G., and Kane, M. 1994. A Prototype Reading Coach that Listens. In Proceedings of the Twelfth National Conference on Artificial Intelligence (AAAI-94), Seattle WA. Selected as the AAAI-94 Outstanding Paper. Mostow, J., Hauptmann, A., and Roth, S. F. 1995. Demonstration of a Reading Coach that Listens. In Proceedings of the Eighth Annual Symposium on User Interface Software and Technology, Pittsburgh PA. Sponsored by ACM SIGGRAPH and SIGCHI in cooperation with SIGSOFT. Mostow, J., and Aist, G. S. 1997. The Sounds of Silence: Towards Automatic Evaluation of Student Gregory Aist Page 25 of 27 2/13/2016 Learning in a Reading Tutor that Listens. In Proceedings of the 1997 National Conference on Artificial Intelligence (AAAI 97), pages 355-361. Mostow, J., and Aist, G. S. 1997. When Speech Input is Not an Afterthought: A Reading Tutor that Listens. Workshop on Perceptual User Interfaces, Banff, Alberta, Canada, October 1997. Nation, K., and Hulme, C. 1997. Phonemic segmentation, not onset-rime segmentation, predicts early reading and spelling skills. Reading Research Quarterly 32(2) pp. 154-167. O'Keefe, Elizabeth J., and Solman, Robert T. 1987. The influence of illustrations on children's comprehension of written stories. Journal of Reading Behavior 19(4), pp. 353-377. Peterson, Margareth E. and Haines, Leonard P. 1992. Orthographic analogy training with kindergarten children: Effects on analogy use, phonemic segmentation, and letter-sound knowledge. pp. 109-127. Reinking, David, and Rickman, Sharon Salmon. 1990. The effects of computer-mediated texts on the vocabulary learning and comprehension of intermediate-grade learners. Journal of Reading Behavior 22(4), pp. 395-411. Roller, C. 1994. Teacher-student interaction during oral reading and rereading. Journal of Reading Behavior 26(2), pp. 191-209. Rosenhouse, J., Feitelson, D., Kita, B., and Goldstein, Z. 1997. Interactive reading aloud to Israeli first graders: Its contribution to literacy development. Reading Research Quarterly 32(2), pp. 168-183. Gregory Aist Page 26 of 27 2/13/2016 Russell, M., Brown, C., Skilling, A., Series, R., Wallace, J., Bohnam, B., and Barker, P. 1996. Applications of Automatic Speech Recognition to Speech and Language Development in Young Children. In Proceedings of the Fourth International Conference on Spoken Language Processing, Philadelphia PA. Sacks, H., Schegloff, E. A., and Jefferson, G. 1974. A simplest systematics for the organization of turntaking for conversation. Language 50(4): 696-735. Scott, Judith A., and Nagy, William E. 1997. Understanding the definitions of unfamiliar verbs. Reading Research Quarterly 32(2), pp. 184-200. Stahl, Steven A., and Jacobson, Michael G. 1986. Vocabulary difficulty, pior knowledge, and text comprehension. Journal of Reading Behavior 18(4), pp. 309-323. Stanovich, K. E. 1991. Word recognition: Changing perspectives. In Handbook of Reading Research vol. 2, p. 418 – 452. Tannen, D. 1984. Conversational style: Analyzing talk among friends. Norwood NJ: Ablex. Tingstrom, D. H., Edwards, R. P., and Olmi, D. J. 1995. Listening previewing in reading to read: Relative effects on oral reading fluency. Psychology in the Schools 32, 318-327. Ulijn, J. M., and Li, X. 1995. Is interrupting impolite? Some temporal aspects of turn-taking in Gregory Aist Page 27 of 27 2/13/2016 Chinese-Western and other intercultural business encounters. Text 15(4): 598-627. Underwood, Geoffrey, and Underwood, Jean D. M. 1998. Children's interactions and learning outcomes with interactive talking books. Computers in Education 30(1/2), pp. 95-102. Vanderbilt (The Cognition and Technology Group at Vanderbilt). 1996. A multimedia literacy series. Communications of the ACM 39(6), 106-109. Now a commercial product from Little Planet Publishing, Nashville TN. Ward, N. 1996. Using Prosodic Clues to Decide When to Produce Back-channel Utterances. In Proceedings of the 1996 International Symposium on Spoken Dialogue, pages 1728-1731, Philadelphia PA. Weber, Rose-Marie, and Shake, Mary C. 1988. Teachers' rejoinders to students' responses in reading lessons. Journal of Reading Behavior 20(4), pp. 285-299. Zechmeister, E. B., Chronis, A. M., Cull, W. L., D'Anna, C. A., and Healy, N. A. 1995. Growth of a functionally important lexicon. Journal of Reading Behavior 27(2), pp. 201-212.