Similarity and Duplicate Detection System for an OAI Compliant Federated Digital Library

Haseebulla M Khan, Kurt Maly, and Mohammad Zubair

Computer Science Department, Old Dominion University, Norfolk VA-23529, USA

{hkhan,zubair,maly}@cs.odu.edu

The Open Archives Initiative (OAI) makes it feasible to build high-level services such as a federated search service that harvests metadata from different data providers using the OAI Protocol for Metadata Harvesting (OAI-PMH) and provides a unified search interface. Building and maintaining a federation service poses numerous challenges, one of which is managing duplicates. Detecting exact duplicates, where two records have identical sets of metadata fields, is straightforward. The problem arises when two or more records differ slightly, for example due to data entry errors. Many duplicate detection algorithms exist, but they are computationally intensive for a large federated digital library. In this paper, we propose an efficient duplicate detection algorithm for a large federated digital library like Arc, with over 7 million records and around 180 collections.

1. Introduction

Building and maintaining a federation service poses numerous challenges, one of which is managing duplicates. Duplicates arise either from publication of the same record in multiple collections or from the hierarchical harvesting that the OAI framework makes possible. Detecting exact duplicates, where two records have identical sets of metadata fields, is straightforward. The problem arises when two or more records differ slightly, for example due to data entry errors. The duplicate detection problem has been studied extensively in the literature, for example [1-3], and many of the proposed algorithms are application specific. For a large federated digital library consisting of records with multiple metadata fields, most of these algorithms have a large overhead and are computationally intensive. In this paper, we propose an efficient duplicate detection algorithm for a large federated digital library like Arc, with over 7 million records and around 180 collections.

In our approach, we first rank the records so that all duplicates present in the federation aggregate within a window whose size is a parameter. A record is assigned a rank based on how similar it is to a randomly picked record, referred to as the anchor record. The similarity is measured over all metadata fields in the two records, though with different weights. We then detect duplicates within a window of size w, with a runtime complexity of O(n*w), where n is the total number of records.
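The ranking and windowing steps described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the string similarity measure (`difflib.SequenceMatcher`), the field weights, the duplicate threshold, and all function names are assumptions introduced only for illustration, and the anchor is passed in explicitly rather than picked at random so that the example is repeatable.

```python
from difflib import SequenceMatcher

# Hypothetical field weights; the paper weights fields differently
# but does not give concrete values here.
WEIGHTS = {"title": 0.7, "author": 0.3}


def field_similarity(a: str, b: str) -> float:
    """String similarity in [0, 1]; SequenceMatcher is a stand-in measure."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def record_similarity(r1: dict, r2: dict) -> float:
    """Weighted sum of the per-field similarities of two records."""
    return sum(w * field_similarity(r1[f], r2[f]) for f, w in WEIGHTS.items())


def find_duplicates(records, anchor, w, threshold=0.9):
    """Rank records by similarity to the anchor, sort, compare within a window."""
    ranked = sorted(records, key=lambda r: record_similarity(r, anchor))
    pairs = []
    for i, rec in enumerate(ranked):
        # Each record is compared only with the next w - 1 records in rank
        # order, so the scan needs at most n * (w - 1) comparisons.
        for other in ranked[i + 1 : i + w]:
            if record_similarity(rec, other) >= threshold:
                pairs.append((rec["id"], other["id"]))
    return pairs


records = [
    {"id": 1, "title": "The merge/purge problem for large databases", "author": "M. Hernandez"},
    {"id": 2, "title": "A fast filtering scheme for large database cleansing", "author": "S. Sung"},
    {"id": 3, "title": "The merge/purge problem for large database", "author": "M. Hernandez"},
    {"id": 4, "title": "Arc: a cross archive search service", "author": "K. Maly"},
]
dups = find_duplicates(records, anchor=records[0], w=2)
print(dups)
```

In this toy data, records 1 and 3 differ by a single character in the title, so they receive nearly identical ranks and end up next to each other after sorting. The sort itself costs O(n log n) on top of the O(n*w) window scan.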

2. Experimental Results and Conclusion

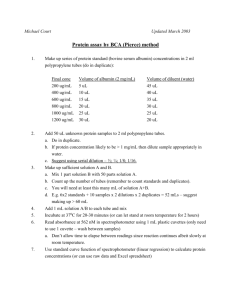

We randomly chose 73 archives with 465,440 records out of the 180 archives with 7,156,195 records in Arc [3]. We selected title and author as the only attributes for our performance study. We chose a window size W = C*log2(465,440), taken as C*18, and plotted the number of duplicates found. Fig. 1(a) shows that this number increases with C up to a point, after which it remains almost constant. In Fig. 1(b) we plot the number of duplicates found as the weights are changed.
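As a small worked example of the window-size choice, the sketch below computes W for a few values of C. Since log2(465,440) is about 18.8, it assumes the logarithm is truncated to an integer to obtain the factor 18 used in the text. The function name and the sampled values of C are hypothetical.

```python
import math


def window_size(n_records: int, c: int) -> int:
    """W = C * floor(log2(n)); truncation is an assumption that yields C*18."""
    return c * int(math.log2(n_records))


# Hypothetical multipliers C, chosen only to show how W scales.
sizes = {c: window_size(465_440, c) for c in (1, 2, 4)}
print(sizes)
```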

Fig. 1 (a) Window Size Vs Duplicates

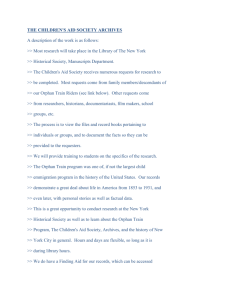

Fig. 2. (a) Relationships between archives

(b) Weights Vs Duplicates

(b) Archives with self duplicates

The results show that the weight of the title matters more than the weight of the author. This is expected, as author names are not normalized and are sometimes represented by initials. We also ran our duplicate detection algorithm on the entire Arc collection. Fig. 2(a) shows selected archives that have duplicates in common. For example, the table indicates that the “lcoa1.loc.gov” and “lcoa1” archives have around 30,850 records with title-author similarity; they are both Library of Congress OAI repositories with different versions of OAI-PMH. Fig. 2(b) shows samples of archives with self duplicates.

We presented an efficient duplicate detection algorithm that uses ranking, sorting, and a new windowing scheme. If the window size (w) is small, completeness cannot be achieved; if it is large, more unnecessary comparisons are performed. The ability to tune the algorithm for quick scans of large archives, followed by detailed analysis of specific archives, makes it a very suitable tool for duplicate management in a large federation. As such, it outperforms existing tools significantly.

3. References

[1] S. Y. Sung, Z. Li, and P. Sun. A fast filtering scheme for large database cleansing. In Proceedings of the 11th International Conference on Information and Knowledge Management, ACM, 2002.

[2] M. Hernandez and S. Stolfo. The merge/purge problem for large databases. In Proceedings of the ACM SIGMOD International Conference on Management of Data, May 1995.

[3] Arc - A Cross Archive Search Service. ODU Digital Library Research Group.