- ePrints Soton - University of Southampton


Competence-based System for Recommending Study Materials from the Web: Design and Experiment

Athitaya Nitchot, Lester Gilbert, Gary B Wills

Learning Society Lab, School of Electronics and Computer Science

University of Southampton

United Kingdom

{an08r, lg3, gbw}@ecs.soton.ac.uk

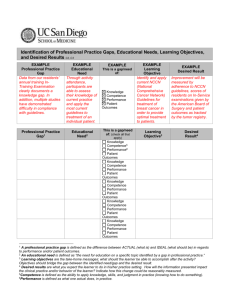

Abstract: Web-based education makes use of resources from the Web for educational purposes. Producing an effective Web-based educational system can involve high development costs and requires study materials to be updated whenever the knowledge content changes. In addition, building such a system needs careful preparation in describing the knowledge of experts, students, and tutors. This paper proposes a system that helps learners find study materials as supplementary resources outside the classroom without any help from a teacher. The advantage of this system is that it requires only an appropriate competence structure to be embedded within it. The system process derives recommended links to study materials from learners’ competences, which are listed according to a competence structure. An experimental study was conducted to find an appropriate algorithm for generating the query from the competence structure, and to determine whether the mean ratings of the overall system on various criteria differ from the rating value 3 on a Likert scale (1 to 5). The results show no significant differences in mean rating between the algorithms, but the participants were significantly satisfied with the system overall. Further experiments must be conducted to develop and improve the system.

1. Introduction

The aim of this paper is to propose a Web-based system which recommends appropriate study material

links to a learner without any intervention from the teacher. The system is not intended to replace the teacher

role or reduce the number of teachers. The main objective of the system is to help learners find study materials

as supplementary resources. Other systems aim to reduce the teacher’s tasks, such as intelligent tutoring systems

(Contreras, Galindo, Caballero, & Caballero, 2007), but such systems involve high development costs, updating

when the informational content changes, and the need for careful preparation in terms of describing and

planning the knowledge content. This research proposes a system based upon a competence model which

suggests appropriate study materials as links from the Web for learners based upon their competences, where the

competence structure is represented as a directed acyclic graph (DAG). The benefits of this system are to base

appropriate study materials upon learners’ existing and desired competences, and to avoid the development of

specific informational content by simply exploiting existing Web-based material.

The structure of this paper is as follows. A brief review of intelligent tutoring systems and their

restrictions leads into an introduction to the competence-based system. A discussion of algorithms to traverse a

competence structure is followed by the presentation of an experimental study and its results to explore some of

these algorithms and to evaluate the system. The paper finally provides a discussion of the system and some

conclusions.

2. Intelligent tutoring systems and their restrictions

The previous section introduced the idea of designing a system for providing study materials from the

Web without intervention from a teacher. Intelligent tutoring systems are designed for reducing teachers’ tasks,

and this section provides an overview of intelligent tutoring systems and their restrictions.

2.1. Overview of intelligent tutoring system

An intelligent tutoring system (ITS) is a kind of intelligent computer-assisted instructional system which simulates understanding of a given subject matter domain so that it can teach this domain and respond to the student’s problem-solving strategies (Anderson, Boyle, & Reiser, 1985). An ITS is intended to provide the student with the same instructional advantages as a sophisticated human tutor. The design and development of ITSs lie at the intersection of computer science, cognitive psychology, and educational research (Nwana, 1990). Most ITSs are designed using an artificial intelligence (AI) approach, since they attempt to produce in a computer behaviour which, if performed by a human, would be described as ‘intelligent’ (Elsom-Cook, 1987). An ITS architecture typically consists of four modules (Wenger, 1987):

1) The interface module: graphical user interface or simulation of the task domain the learner is learning.

This module is the communicating component of the ITS which controls the interaction between the

student and the system.

2) The expert module: domain model or cognitive model containing a description of the expert

knowledge. This module is designed by an expert.

3) The student module: the emerging knowledge and skill of the student.

4) The tutor module: teaching strategies which refer to the mismatch between a student’s behaviour or

knowledge and the expert’s presumed behaviour or knowledge.

There are other architectures in the literature, but most can be conceptually mapped to these four

modules. For example, Anderson, Boyle and Reiser (1985) replaced an expert model with a bug catalogue, an

extensive library of common misconceptions and errors for the domain.

2.2. Examples of ITS systems

Some example ITSs follow, chosen because they are illustrative of ITS principles and problems.

2.2.1. SCHOLAR

SCHOLAR was one of the first ITSs to be constructed. It was an innovative system when considered in

its historical context, a first effort in the development of computer tutors capable of handling unanticipated

student questions and of delivering instructional material at varying levels of detail (Carbonell, 1970). The

knowledge in the expert knowledge module was that of the geography of South America which was represented

in a semantic network. SCHOLAR has not been widely used due to some fundamental limitations, such as the difficulty of representing procedural knowledge using semantic nets. However, SCHOLAR introduced many methodological principles that have become central to ITS design, for example, student modelling and the separation of tutorial strategies from domain knowledge (Nwana, 1990).

2.2.2. GUIDON

GUIDON was an ITS for teaching diagnostic problem solving (Clancey, 1987). The project

represented a first attempt to adapt a pre-existing expert system into an ITS. GUIDON’s goal was to teach the

knowledge from the MYCIN expert system (Shortliffe, 1976). It attempted to teach students through case

dialogues where a sick patient was described to the student in general terms (Clancey, 1987). Then the student

was asked to undertake the role of a physician and ask for the information they thought might be relevant to the

case. This project produced many important findings about designing ITSs; for example, it clearly demonstrated that an expert system is not a sound basis for tutoring (Elsom-Cook, 1987).

2.2.3. ELM-ART (An ITS on the World Wide Web)

ELM-ART (Brusilovsky, Schwarz, & Weber, 1996) was an example of an ITS on a WWW platform. It

was used to support learning programming in Lisp. ELM-ART was developed from ELM-PE (Weber &

Mollenberg, 1994), an Intelligent Learning Environment that supported example-based programming, advanced

testing, and debugging facilities. ELM-ART provided all the course materials in hypermedia form. It presented

the materials to the students and helped them in learning and navigating the course materials. In addition, the

student could investigate all examples and solve all problems on-line. To support the student navigating through

the course, the system used two adaptive hypermedia techniques: adaptive annotation and adaptive sorting of

links.

2.3. Restrictions of ITSs

An ITS is one way for a learner to obtain learning materials without any intervention from a teacher; however, there are some limitations:

1) An ITS does not specify every interaction with the student, but only the general problem-solving principles from which these interactions can be generated (Anderson, et al., 1985).

2) The study materials need to be specially constructed. If the content or any information changes, then the system must be updated.

3) An ITS is only as effective as the models it implements for expert, student, and tutor knowledge and behaviour. Descriptions of the knowledge and possible behaviours of experts, students, and tutors are embedded in the ITS. Any inadequacies in these models render the ITS inadequate.

4) Most ITSs implement AI, leading to high development costs and possibly slow response times (Polson & Richardson, 1988).

5) Many ITSs are targeted to, and only work on, a specific platform. Porting to other platforms introduces migration costs (Okazaki, Watanabe, & Kondo, 1997).

3. Competence-based system

This section discusses a system design which suggests appropriate study materials as links from the Web to a learner. The system is based on a competence model which provides a competence structure for a particular knowledge domain, and it recommends Web links for a learner based upon their competences.

3.1. Competence

The standard definition of competence given by the HR-XML consortium (HR-XML, 2004) is:

“A specific, identifiable, definable, and measurable knowledge, skill, ability and/or other deployment-related characteristic (e.g. attitude, behaviour, physical ability) which a human resource may possess and which is necessary for, or material to, the performance of an activity within a specific business context.”

The concept of competence has been associated with an education system (Stoof, Martens, & van

Merriënboer, 2007) and professional development (Eraut, 1994).

3.1.1. Competence model

The proposed model for this research draws on the multi-dimensional competence model (COMBA)

from Sitthisak, Gilbert and Davis (2008). The COMBA model consists of three major components: subject

matter, capability, and context (figure 1). An intended learning outcome is a particular combination of capability

and subject matter.

Figure 1: COMBA Competence Model (Sitthisak, et al., 2008)

3.1.2. Competence structure (competence DAG)

A competence structure links competence nodes through particular relationships. This paper reports

work that uses a simple knowledge domain of mathematical factor, common factor, and highest common factor

(H.C.F), comprising intended learning outcomes (capability and subject matter). The contextual component in

the competence model (figure 1) is not considered in this paper.

An appropriate structure for this knowledge domain is a directed acyclic graph (DAG). The finalized DAG representation of the competence elements in the H.C.F. knowledge domain is shown in figure 2.

[Figure 2 diagram nodes: Evaluate Factor (C03), Calculate Factor (C13), Define Factor (C23); Evaluate Common Factor (C02), Calculate Common Factor (C12), Define Common Factor (C22); Evaluate Highest Common Factor (C01), Calculate Highest Common Factor (C11), Define Highest Common Factor (C21)]

Figure 2: DAG Representation for Competences in H.C.F., Common Factor and Factorization Domains

The graph in figure 2 can be read as an activity network, a common example of a DAG representation. In an activity network, each node represents a task to be completed, and the directed edges point to the next tasks and carry their associated completion times.

A benefit of a competence structure is that its design can be carried out by one person. The structure can then be embedded within the system and used by many learners studying the same knowledge domain. However, the competence structure is constructed by the developer rather than a teacher, so the developer should be an expert in the specific knowledge domain in order to construct the structure of competence elements properly; otherwise, the developer may need to consult a domain expert before building it. One possible way to ease the difficulty of constructing a competence structure is to reuse an existing one, if available.
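As an illustration, a competence structure of this kind could be held in memory as an adjacency mapping. The following is a minimal sketch under stated assumptions, not the structure used in the experiment: the pairing of node codes with labels is read off the layout of figure 2, and the edges (each competence pointing to the next lower capability and to the next simpler subject matter) are hypothetical, since the paper does not list them. The names `COMPETENCES`, `CHILDREN`, and `descendants` are introduced here for illustration only.

```python
from graphlib import TopologicalSorter

# First digit of a node code: capability; second digit: subject matter.
# ASSUMPTION: this pairing is inferred from the layout of figure 2.
CAPABILITIES = ["Evaluate", "Calculate", "Define"]                 # 0, 1, 2
SUBJECTS = ["Highest Common Factor", "Common Factor", "Factor"]    # 1, 2, 3


def code(capability_index, subject_index):
    """Build a node code such as 'C11' (Calculate Highest Common Factor)."""
    return f"C{capability_index}{subject_index}"


COMPETENCES = {
    code(i, j): (CAPABILITIES[i], SUBJECTS[j - 1])
    for i in range(3)
    for j in range(1, 4)
}

# ASSUMPTION (hypothetical edges): each node points to its child nodes,
# i.e. the same subject at the next lower capability and the same
# capability at the next simpler subject matter.
CHILDREN = {}
for i in range(3):
    for j in range(1, 4):
        kids = []
        if i < 2:
            kids.append(code(i + 1, j))
        if j < 3:
            kids.append(code(i, j + 1))
        CHILDREN[code(i, j)] = kids


def descendants(node):
    """Return all children, including children of children, of a node."""
    seen, stack = set(), list(CHILDREN[node])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(CHILDREN[current])
    return seen


# static_order() raises graphlib.CycleError if the structure is not acyclic;
# it lists child (prerequisite) nodes before the nodes that point to them.
LEARNING_ORDER = list(TopologicalSorter(CHILDREN).static_order())
```

Under these assumed edges, `descendants("C01")` yields the other eight nodes, which is the list of existing competences a learner could choose from after selecting "Evaluate Highest Common Factor" as the desired competence.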

3.2. Process within a system

This section describes the process within the system: how the system handles learners’ competences and how it provides links to appropriate study materials from the Web so that learners can achieve their intended learning outcomes. Figure 3 gives an overview of this process.

[Figure 3 diagram: Learner’s competences → Keywords → Google Search → Links; the arrows indicate the steps of the process within the system]

Figure 3: Overview of Process within the System

In this research there are two kinds of learner competence: desired and existing. The desired competence is the learner’s intended learning outcome, that is, the competence which the learner wishes to gain. The existing (current) competence is an estimate of the learner’s actual competence.

First, a sub-process obtains the learner’s competences so that the system can generate keywords from them. At this point, the competence structure has already been designed (see section 3.1.2). The options for desired and existing competences offered to the learner depend on the structure of competence elements. Further details of how the system generates keywords from learners’ competences are given in section 4. Once the keywords are obtained, the system automatically retrieves Google search results for them, and the resulting links are suggested to the learner.
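The keyword and search steps of this process can be sketched as follows. This is an illustrative example only: the function names are hypothetical, the keyword string is assumed to be the lower-cased competence statement (capability plus subject matter), and the sketch merely builds a Google search URL rather than retrieving or parsing results, which the paper does not detail.

```python
from urllib.parse import urlencode


def competence_to_keywords(capability, subject_matter):
    """Turn a competence statement into a keyword string (assumed format)."""
    return f"{capability} {subject_matter}".lower()


def google_search_url(keywords):
    """Build a Google search URL for the keywords; no request is sent."""
    return "https://www.google.com/search?" + urlencode({"q": keywords})


keywords = competence_to_keywords("Calculate", "Highest Common Factor")
url = google_search_url(keywords)
print(url)
```

The links that would be suggested to the learner are the results listed on the page behind this URL.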

Competence statements are used as the keywords for a search engine for the following reason. Given the research statement “an approach to design a system for recommending study materials from the Web without any interaction from the teacher”, it is necessary to consider how information is obtained on the Web. Gordon & Pathak (1999) discussed four different methods for locating information on the Web:

1) Going directly to a Webpage location

2) Following hypertext links emanating from a Webpage, which provide built-in associations to other pages

3) Using narrowcast services, which push pages that meet a particular user profile

4) Using search engines, which take a description of the information sought and then furnish information on the Web that hopefully relates to that description

Since the learner’s competences are the input that the system obtains from learners, the system should recommend study materials based on these competences. An appropriate method is to use a search engine to find study materials from search queries built from competences. There are many search engines, for example Google, Bing, Yahoo, Alta Vista and Microsoft. The choice of search engine is not an important point in this research; the search engine is used only as an intermediate tool to retrieve study materials relevant to the learners’ competences.

In this research, Google is used since it gives a high probability that the first result is relevant (Hawking, Craswell, Bailey, & Griffiths, 2001). In addition, Google offers the largest index, innovative new services, and highly optimized performance and usability (Mayr & Tosques, 2005). Google uses the PageRank algorithm (Brin & Page, 1998) as its primary algorithm for ranking search results for an input query. PageRank is an information retrieval algorithm which produces a probability distribution representing the likelihood that a person randomly clicking on links will arrive at any particular page (Thelwall, 2003).
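The random-clicking interpretation can be made concrete with a simplified power-iteration sketch. This is a textbook-style illustration, not Google's production ranking: the three-page link graph is hypothetical, the damping factor of 0.85 is a commonly used value, and every link target is assumed to appear as a key in `links`.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate the probability that a random surfer is on each page."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # With probability (1 - damping) the surfer jumps to a random page.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            # A page without outgoing links spreads its rank over all pages.
            targets = outgoing if outgoing else pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: A links to B and C, B links to C, C links to A.
ranks = pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]})
```

The returned values sum to 1, so they can be read as the probability distribution described above; in this small graph page C, which receives links from both other pages, obtains the highest value.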

Google has become a popular search engine because of the frequency of its use and the simplicity of its display of query results (Pan, et al., 2007). However, there is one argument about a restriction of the PageRank algorithm: Thelwall (2003) concluded that PageRank is not effective for identifying the best webpages in a university system because of its domination by internal links. Google search results can contain various kinds of web pages, such as blogs, forums, electronic books and electronic files. Some of them serve academic purposes, while others may contain internal links with non-academic purposes. Nevertheless, we can still rely on Google since it is an effective way to gather resources from the Web that are related to a learner’s competences.

4. System Algorithms

From the system process in figure 3, there are two processes which deal with a competence structure:

1) Obtain desired/existing competences from the learners

2) Generate the search terms from desired/existing competences

Currently there are three candidate algorithms for the processes that deal with the competence structure.

4.1. Algorithm 1 (Ignore all gaps)

1) Obtain desired/existing competences from the learners

The system begins by offering the learner a choice of desired competences. It then presents a list of existing competences, which contains the child nodes (including children of children) of the chosen desired competence.

2) Generate the search terms from desired/existing competences

The system uses the desired competence statement (capability with subject matter) as the search terms, without considering the nodes between the desired and existing competences.

4.2. Algorithm 2 (Consider some gaps)

1) Obtain desired/existing competences from the learners

Same as Algorithm 1 (see step 1 in section 4.1).

2) Generate the search terms from desired/existing competences

The search terms are based on the desired competence and some of the gap nodes between the two chosen competences. The learner is required to obtain study materials for these gap-node competences before reaching the desired competence.

4.3. Algorithm 3 (Consider all gaps)

1) Obtain desired/existing competences from the learners

Same as Algorithm 1 (see step 1 in section 4.1).

2) Generate the search terms from desired/existing competences

The search terms are based on the desired competence and all of the gap nodes between the two chosen competences. The learner is required to obtain study materials for all gap-node competences before reaching the desired competence.
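The three algorithms can be sketched side by side as follows. This is a minimal illustration under stated assumptions rather than the implementation used in the experiment: the four-node chain and its edges are hypothetical, gap nodes are taken to be the nodes lying on a path between the desired and existing competences, and, because the paper does not specify which gaps Algorithm 2 keeps, the sketch arbitrarily keeps every second gap node.

```python
# Hypothetical chain used only for illustration (edges are assumed):
# each node points to its child node in the competence structure.
LABELS = {
    "C11": "Calculate Highest Common Factor",
    "C12": "Calculate Common Factor",
    "C13": "Calculate Factor",
    "C23": "Define Factor",
}
CHILDREN = {"C11": ["C12"], "C12": ["C13"], "C13": ["C23"], "C23": []}


def descendants(node):
    """Return all children, including children of children, of a node."""
    seen, stack = set(), list(CHILDREN[node])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(CHILDREN[current])
    return seen


def gap_nodes(desired, existing):
    """Nodes strictly between desired and existing, lowest nodes first."""
    between = [n for n in descendants(desired) if existing in descendants(n)]
    # A node always has fewer descendants than any node above it on a path.
    return sorted(between, key=lambda n: (len(descendants(n)), n))


def search_queries(desired, existing, algorithm):
    """Return the ordered search terms for Algorithm 1, 2 or 3."""
    gaps = gap_nodes(desired, existing)
    if algorithm == 1:        # ignore all gaps
        chosen = []
    elif algorithm == 2:      # consider some gaps
        chosen = gaps[::2]    # ASSUMPTION: keep every second gap node
    elif algorithm == 3:      # consider all gaps
        chosen = gaps
    else:
        raise ValueError("algorithm must be 1, 2 or 3")
    return [LABELS[node].lower() for node in chosen + [desired]]


for number in (1, 2, 3):
    print(number, search_queries("C11", "C23", number))
```

With desired competence C11 and existing competence C23, Algorithm 1 produces a single query for the desired competence, whereas Algorithm 3 produces one query per gap node followed by the query for the desired competence.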

5. Experimental Study

5.1. Aim of experiment

The experiment has two major purposes. The first is to find an appropriate algorithm (see section 4) for generating a query; this relies upon the competence structure, with the input coming from the users. The second is to determine whether the mean ratings of the overall system on various criteria differ from a rating value of 3 on a Likert scale (1 to 5).

5.2. Method

The results were investigated by means of an experimental study. The experiment received Ethics Committee approval under reference number ES/10/09/007. The independent variable is the algorithm, with three levels (see section 4).

The dependent variables are considered in two main sections. The first section consists of lists of

dependent variables to measure system effectiveness (overall system) as follows:

1) Clarity of the learner’s intended learning outcome

2) Clarity of the learner’s existing competence

3) Clarity of competence gap nodes

4) The quality of study materials

5) Ease of accessing information

6) The requirement of a teacher in order to explain some information within links

7) The wide range of study materials

8) Suggestions for future use

The second section consists of dependent variables used to compare the algorithms and identify an appropriate one, as follows:

1) Learning outcome achievement

2) Usefulness of competence gap nodes to help achieve an intended learning outcome

3) Usefulness of competence gap nodes to improve learner’s motivation

The experiment covers the first level, the ‘reaction level’, of Kirkpatrick’s (2007) four levels of evaluation. The questions ask for the participants’ opinions of the provided study material links, for each algorithm and for the overall system. Hence a targeted participant should have background knowledge of mathematical factorization, common factors and highest common factors. During the experiment, the participants are asked to review and use the experimental system and to fill in the questionnaire. The questions are based on the dependent variables, and each question is rated on a 5-point Likert scale.

5.3. Experimental Procedure

The experimental procedure involves the following steps:

1) Participants are welcomed for becoming involved in the experiment.

2) They are given consent information verbally.

3) Before the experiment is conducted, all participants receive training in order to have a clear understanding of the terms and definitions used.

4) The participants are asked to read the scenario and the instructions on interacting with the system and filling in the questionnaire.

5) The participants interact with the system.

6) Questionnaire I (‘comparing algorithm’) is handed out at the end of reviewing the first algorithm.

7) After the participants finish reviewing all the algorithms and filling in Questionnaire I, Questionnaire II (‘overall system’) is distributed.

8) Participants are given as much time as they require to complete what they are asked to do. Each participant is involved in the experiment for 45-60 minutes.

6. Experimental results

This section presents the results of the experimental study in two parts: (1) testing for an appropriate algorithm and (2) the overall system evaluation.

6.1. System algorithms evaluation

To find an appropriate algorithm, we use a repeated-measures ANOVA to analyze the data obtained for each dependent variable (‘learning outcome achievement’, ‘usefulness of competence gaps’, and ‘learner motivation with competence gaps’). Tables 1 and 2 show the result of comparing the three algorithms on one dependent variable: ‘learning outcome achievement’.

| Dependent Variable for each algorithm | Mean | Std. Deviation | N |
|---|---|---|---|
| Algorithm1_LearningOutComeAchievement | 3.4444 | .72648 | 9 |
| Algorithm2_LearningOutComeAchievement | 3.4444 | .72648 | 9 |
| Algorithm3_LearningOutComeAchievement | 4.2222 | .66667 | 9 |

Table 1: Descriptive Statistics (‘Learning Outcome Achievement’)

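| Effect | Test | Value | F | Hypothesis df | Error df | Sig. |
|---|---|---|---|---|---|---|
| Algorithm | Pillai's Trace | 0.506 | 3.592a | 2.000 | 7.000 | .084 |
| Algorithm | Wilks' Lambda | 0.494 | 3.592a | 2.000 | 7.000 | .084 |
| Algorithm | Hotelling's Trace | 1.026 | 3.592a | 2.000 | 7.000 | .084 |
| Algorithm | Roy's Largest Root | 1.026 | 3.592a | 2.000 | 7.000 | .084 |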

Table 2: Multivariate Tests (‘Learning Outcome Achievement’)

From Table 1 (‘descriptive statistics’), the mean rating of Algorithm 3 (‘consider all gaps’) is higher than that of the other two algorithms on the criterion ‘learning outcome achievement’. Table 2 (‘Multivariate Tests’) shows the multivariate tests of the mean rating for learning outcome achievement. The value p = 0.084 is not significant (p > 0.05). Hence there is no significant difference between the mean ratings of the three algorithms for learning outcome achievement.

The same statistical test is applied to the other dependent variables (‘usefulness of competence gaps’ and ‘learner motivation with competence gaps’). Algorithm 1 (‘ignore all gaps’) does not consider any gap nodes, so these two dependent variables are compared only between Algorithms 2 and 3.

Tables 3 and 4 show the result of comparing the two algorithms (Algorithms 2 and 3) on two dependent variables: ‘usefulness of competence gaps’ and ‘learner motivation with competence gaps’.

| Dependent Variable for each algorithm | Mean | Std. Deviation | N |
|---|---|---|---|
| Algorithm2_UsefulnessOfCompetenceGaps | 3.8889 | 0.33333 | 9 |
| Algorithm2_LearnerMotivationWithCompetenceGaps | 4.1111 | 0.78174 | 9 |
| Algorithm3_UsefulnessOfCompetenceGaps | 4.1111 | 0.60093 | 9 |
| Algorithm3_LearnerMotivationWithCompetenceGaps | 4.1111 | 1.05409 | 9 |

Table 3: Descriptive Statistics (‘Usefulness and motivation with competence gaps’)

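| Effect | Test | Value | F | Hypothesis df | Error df | Sig. |
|---|---|---|---|---|---|---|
| Algorithm | Pillai's Trace | .022 | .182a | 1.000 | 8.000 | .681 |
| Algorithm | Wilks' Lambda | .978 | .182a | 1.000 | 8.000 | .681 |
| Algorithm | Hotelling's Trace | .023 | .182a | 1.000 | 8.000 | .681 |
| Algorithm | Roy's Largest Root | .023 | .182a | 1.000 | 8.000 | .681 |
| Algorithm * Measurement | Pillai's Trace | .056 | .471a | 1.000 | 8.000 | .512 |
| Algorithm * Measurement | Wilks' Lambda | .944 | .471a | 1.000 | 8.000 | .512 |
| Algorithm * Measurement | Hotelling's Trace | .059 | .471a | 1.000 | 8.000 | .512 |
| Algorithm * Measurement | Roy's Largest Root | .059 | .471a | 1.000 | 8.000 | .512 |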

Table 4: Multivariate Tests (‘Usefulness and motivation with competence gaps’)

In Table 3 (‘descriptive statistics’), the mean rating of Algorithm 3 (‘consider all gaps’) is higher than that of Algorithm 2 (‘consider some gaps’) on the criterion ‘usefulness of competence gaps’, whilst the mean ratings of both algorithms are the same for ‘learner motivation with competence gaps’. However, Table 4 shows the multivariate tests of the mean rating for the two dependent variables. The value p = 0.681 for the algorithm effect is not significant (p > 0.05). Hence there is no significant difference between the mean ratings of the two algorithms across the two dependent variables. In addition, there is no significant interaction effect between the algorithms and the dependent variables (p = 0.512, p > 0.05).

6.2. Overall system evaluation

We use a one-sample t-test to compare the mean rating of each of the 8 dependent variables (see section 5.2) with a test value of 3 on the Likert scale. Tables 5 and 6 show, respectively, the descriptive statistics for the 8 dependent variables and the values of the statistical test.

| Dependent variable | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| 1. Clarity of learner's intended learning outcome | 9 | 4.0000 | 0.50000 | .16667 |
| 2. Clarity of learner's existing competence | 9 | 4.0000 | 0.50000 | .16667 |
| 3. Clarity of competence gap nodes | 9 | 4.1111 | 0.60093 | .20031 |
| 4. Quality of study materials | 9 | 3.7778 | 1.20185 | .40062 |
| 5. Ease to access information | 9 | 3.1111 | 0.92796 | .30932 |
| 6. Requirement of teacher | 9 | 3.5556 | 0.88192 | .29397 |
| 7. Wide range types of study materials | 9 | 4.3333 | 0.50000 | .16667 |
| 8. Suggestion for future use | 9 | 4.0000 | 0.50000 | .16667 |

Table 5: One-Sample Statistics (‘Overall system’)

| Test Value = 3 | t | Df | Sig. (2-tailed) | Mean Difference | 95% Confidence Interval of the Difference: Lower | Upper |
|---|---|---|---|---|---|---|
| 1. Clarity of learner's intended learning outcome | 6.000 | 8 | .000 | 1.00000 | .6157 | 1.3843 |
| 2. Clarity of learner's existing competence | 6.000 | 8 | .000 | 1.00000 | .6157 | 1.3843 |
| 3. Clarity of competence gap nodes | 5.547 | 8 | .001 | 1.11111 | .6492 | 1.5730 |
| 4. Quality of study materials | 1.941 | 8 | .088 | 0.77778 | -.1460 | 1.7016 |
| 5. Ease to access information | 0.359 | 8 | .729 | 0.11111 | -.6022 | .8244 |
| 6. Requirement of teacher | 1.890 | 8 | .095 | 0.55556 | -.1223 | 1.2335 |
| 7. Wide range types of study materials | 8.000 | 8 | .000 | 1.33333 | .9490 | 1.7177 |
| 8. Suggestion for future use | 6.000 | 8 | .000 | 1.00000 | .6157 | 1.3843 |

Table 6: One-Sample Test (‘Overall system’)

From Tables 5 and 6, we interpret the results as follows:

1) Dependent Variable: ‘Clarity of learner’s intended learning outcome’

The value of calculated t is 6.0 and t-critical is 2.31. As t > t-critical, the system significantly provides clarity of learner’s intended learning outcome.

2) Dependent Variable: ‘Clarity of learner’s existing competence’

The value of calculated t is 6.0 and t-critical is 2.31. As t > t-critical, the system significantly provides clarity of learner’s existing competence.

3) Dependent Variable: ‘Clarity of competence gap nodes’

The value of calculated t is 5.55 and t-critical is 2.31. As t > t-critical, the system significantly provides clarity of competence gap nodes.

4) Dependent Variable: ‘Quality of study materials’

The value of calculated t is 1.94 and t-critical is 2.31. As t < t-critical, the mean rating does not differ significantly from 3; the system does not significantly provide good quality of study materials.

5) Dependent Variable: ‘Ease to access information’

The value of calculated t is 0.36 and t-critical is 2.31. As t < t-critical, the mean rating does not differ significantly from 3; the system does not significantly provide ease of access to information.

6) Dependent Variable: ‘Requirement of teacher’

The value of calculated t is 1.89 and t-critical is 2.31. As t < t-critical, the mean rating does not differ significantly from 3; participants do not significantly indicate that a teacher is required to help a learner to obtain study materials.

7) Dependent Variable: ‘Wide range of types of study materials’

The value of calculated t is 8.0 and t-critical is 2.31. As t > t-critical, the system significantly provides a wide range of types of study materials.

8) Dependent Variable: ‘Suggestion for future use’

The value of calculated t is 6.0 and t-critical is 2.31. As t > t-critical, the participants would significantly suggest future use to other people.
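The t values and confidence intervals in Table 6 can be recomputed from the summary statistics in Table 5. The sketch below is an illustrative check, not the analysis script used in the study: the function name is hypothetical, and the critical value 2.306 (two-tailed, 0.05 level, 8 degrees of freedom) is hard-coded as a default rather than looked up from a statistics library.

```python
import math


def one_sample_t(mean, std_dev, n, test_value=3.0, t_critical=2.306):
    """Return t and the 95% confidence interval of the mean difference.

    t_critical defaults to the two-tailed 0.05 value for df = 8 (n = 9).
    """
    standard_error = std_dev / math.sqrt(n)
    difference = mean - test_value
    t = difference / standard_error
    half_width = t_critical * standard_error
    return t, (difference - half_width, difference + half_width)


# Summary statistics taken from Table 5.
t_clarity, ci_clarity = one_sample_t(4.0000, 0.50000, 9)   # variable 1
t_ease, ci_ease = one_sample_t(3.1111, 0.92796, 9)         # variable 5

print(round(t_clarity, 3), [round(x, 4) for x in ci_clarity])
print(round(t_ease, 3), [round(x, 4) for x in ci_ease])
```

A variable differs significantly from the neutral rating 3 only when the absolute value of t exceeds the critical value, which is equivalent to the confidence interval excluding zero; this holds for variable 1 but not for variable 5.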

7. Discussion and conclusion

A design for a competence-based system for recommending study materials from the Web to the learner has been proposed in this paper. A major advantage of this system is its ability to offer learners study materials from the Web based on their competences, so that they can achieve the intended learning outcome or desired competence. In addition, the competence structure can be embedded in the system and used by many learners for the same knowledge domain. Hence updates are required only for the competence structure, not for the learning materials.

As the competence structure takes the form of a DAG, there are several algorithms for traversing it. We conducted an experimental study to find an appropriate algorithm and to evaluate the designed system. The results show no significant differences in mean rating between the three algorithms. However, we obtained positive feedback from the participants in the evaluation of the overall system. For example, the system was rated as clear on ‘learner’s intended learning outcome’, ‘existing competence’ and ‘competence gaps’. In addition, participants felt that the system provides a wide range of study materials and would suggest the competence-based system for future use. However, the participants’ ratings of the quality of the suggested study materials and of the ease of accessing information did not differ significantly from the neutral rating.

This first experiment did not yield a final conclusion about an appropriate algorithm. Hence future work will include designing a competence structure for another knowledge domain and conducting a similar experiment. As part of this, we plan to design the competence structure in an ontological form in order to make it both human-readable and machine-processable.

References

Anderson, J. R., Boyle, C. F., & Reiser, B. J. (1985). Intelligent Tutoring Systems. Science, 228(4698), 456-462.

Brin, S., & Page, L. (1998). The anatomy of a large-scale hypertextual Web search engine. Comput. Netw. ISDN

Syst., 30(1-7), 107-117.

Brusilovsky, P., Schwarz, E. W., & Weber, G. (1996). ELM-ART: An Intelligent Tutoring System on World

Wide Web. Paper presented at the Proceedings of the Third International Conference on Intelligent

Tutoring Systems.

Carbonell, J. R. (1970). AI in CAI: An Artificial-Intelligence Approach to Computer-Assisted Instruction. Man-Machine Systems, IEEE Transactions on, 11(4), 190-202.

Clancey, W. J. (1987). Knowledge-based tutoring: the GUIDON program: MIT Press.

Contreras, W. F., Galindo, E. G., Caballero, E. M., & Caballero, G. M. (2007). An intelligent tutoring system

for education by web. Paper presented at the Proceedings of the sixth conference on IASTED

International Conference Web-Based Education - Volume 2.

Elsom-Cook, M. (1987). Intelligent Computer-Aided Instruction Research at the Open University.

Eraut, M. (1994). Developing Professional Knowledge and Competence (Paperback). London: Falmer Press.

Gordon, M., & Pathak, P. (1999). Finding information on the World Wide Web: the retrieval effectiveness of

search engines. Inf. Process. Manage., 35(2), 141-180.

Hawking, D., Craswell, N., Bailey, P., & Griffiths, K. (2001). Measuring Search Engine Quality. Information Retrieval, 4(1), 33-59.

HR-XML (2004). Competencies (Measurable Characteristics Recommendation). HR-XML Consortium, from

http://ns.hr-xml.org/2_3/HR-XML-2_3/CPO/Competencies.html

Kirkpatrick, D. L. (2007). Implementing the four levels: a practical guide for effective evaluation of training programs (1st ed.). San Francisco: Berrett-Koehler Publishers.

Mayr, P., & Tosques, F. (2005). Google Web APIs - an Instrument for Webometric Analyses?

Nwana, H. S. (1990). Intelligent tutoring systems: an overview. Artificial Intelligence Review, 4(4), 251-277.

Okazaki, Y., Watanabe, K., & Kondo, H. (1997). An ITS (Intelligent Tutoring System) on the WWW (World

Wide Web). Systems and Computers in Japan, 28(9), 11-16.

Pan, B., Hembrooke, H., Joachims, T., Lorigo, L., Gay, G., & Granka, L. (2007). In Google We Trust: Users' Decisions on Rank, Position, and Relevance. Journal of Computer-Mediated Communication, 12(3).

Polson, M. C., & Richardson, J. J. (Eds.). (1988). Foundations of intelligent tutoring systems: L. Erlbaum

Associates Inc.

Shortliffe, E. H. (1976). Computer-based Medical Consultations: MYCIN (Artificial intelligence series) New

York: Elsevier.

Sitthisak, O., Gilbert, L., & Davis, H. (2008). TRANSFORMING A COMPETENCY MODEL TO ASSESSMENT

ITEMS Paper presented at the 4th International Conference on Web Information Systems and

Technologies (WEBIST).

Stoof, A., Martens, R., & van Merriënboer, J. (2007). Web-based support for constructing competence maps:

design and formative evaluation. Educational Technology Research and Development, 55(4), 347-368.

Thelwall, M. (2003). Can Google's PageRank be used to find the most important academic Web pages? Journal of Documentation, 59(2), 205-217.

Weber, G., & Mollenberg, A. (1994). ELM-PE: A Knowledge-based Programming Environment for Learning

LISP

Wenger, E. (1987). Artificial intelligence and tutoring systems: computational and cognitive approaches to the

communication of knowledge: Morgan Kaufmann Publishers Inc.