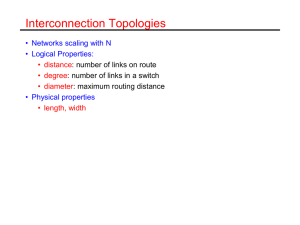

To calculate bisection bandwidth

advertisement

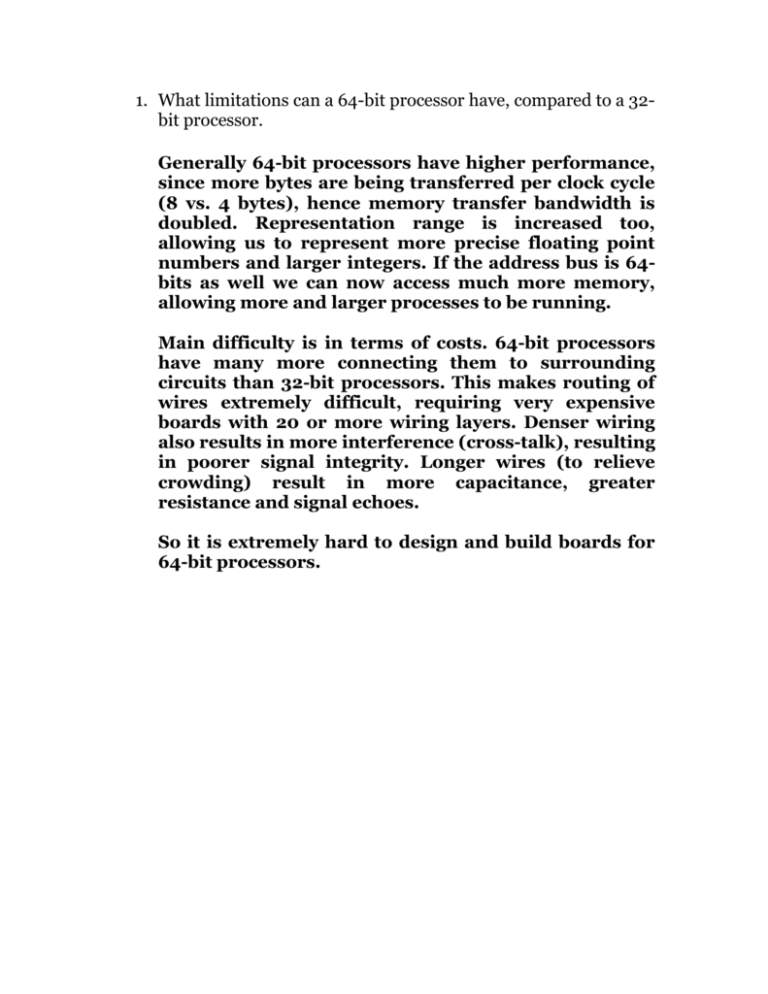

1. What limitations can a 64-bit processor have, compared to a 32bit processor.

Generally 64-bit processors have higher performance,

since more bytes are being transferred per clock cycle

(8 vs. 4 bytes), hence memory transfer bandwidth is

doubled. Representation range is increased too,

allowing us to represent more precise floating point

numbers and larger integers. If the address bus is 64bits as well we can now access much more memory,

allowing more and larger processes to be running.

Main difficulty is in terms of costs. 64-bit processors

have many more connecting them to surrounding

circuits than 32-bit processors. This makes routing of

wires extremely difficult, requiring very expensive

boards with 20 or more wiring layers. Denser wiring

also results in more interference (cross-talk), resulting

in poorer signal integrity. Longer wires (to relieve

crowding) result in more capacitance, greater

resistance and signal echoes.

So it is extremely hard to design and build boards for

64-bit processors.

2. Explain the main differences between a superscalar pipeline

and a vector pipeline.

Superscalar Pipeline

This actually means having multiple pipelines. E.g. the

Intel Pentium had 2 integer pipelines to handle normal

add, sub, mul, div, shl, shr, rol, ror etc, and 1 floating

point pipeline. Main issues in superscalar pipeline is

discovering instruction level parallelism (so that >1

instruction can be issued at a time), preventing

multiple issue hazards (RAW, WAR, WAW) and

retiring instructions in the correct order.

Vector Pipeline

These are special arithmetic pipelines that can work

on arrays (otherwise called “vectors”) rapidly. This is

done by pipelining the various stages of an arithmetic

operation. E.g. for floating point add:

Stage 1: Compare exponents (C)

Stage 2: Shift mantissa of number with larger

exponent left to reduce exponent until both

are the same. (S)

Stage 3: Add mantissas (A)

Stage 4: Normalize result (N)

So if we have the following operation:

for(i=0; i<6; i++)

c[i] = a[i] + b[i];

we will have:

Clock

a[0]+b[0]

a[1]+b[1]

a[2]+b[2]

a[3]+b[3]

a[4]+b[4]

a[5]+b[5]

1

C

2

S

C

3

A

S

C

4

N

A

S

C

5

N

A

S

C

6

7

8

9

N

A

S

C

N

A

S

N

A

N

Executing 6 iterations of this loop takes 9 clock cycles,

as opposed to 6 x 4 = 24 clock cycles without

pipelining.

Note that this is different from our normal idea of

pipelining (fetch, decode, execute, writeback). The

vector pipeline corresponds to the execute stage of our

normal pipeline.

3. What is the difference between “cluster” and “MIMD

distributed memory multiprocessor”?

Essentially the same thing: A cluster can be thought of

as a special case of an MIMD distributed memory

multiprocessor (MIMD-DMM)

In general the difference between a cluster and an

MIMD-DMM is the degree of coupling.

MIMD-DMM are generally tightly coupled machines.

All processing nodes and memories are connected via

custom-built very high speed interconnects (e.g.

omega-nets) that cost a lot to build (challenges include

maintaining signal integrity across long bus lines, fast

low-latency switches etc.). The entire arrangement is

usually in a single enclosure (this keeps wires short,

allows shielding, both of which allow much higher

bandwidth).

Low latency and fast bandwidth allow fine-grained

programs to be run (i.e. programs that do a little bit of

processing, exchange data, do more processing,

exchage data ad infinitum).

Clusters on the other hand usually consists of multiple

PCs connected via commodity LANs. E.g. the Beowulf

cluster consists of many PCs (up to about 128)

connected together via two commodity Ethernet

connections. Special device drivers spread traffic

evenly across both connections to get almost double

bandwidth. Another cluster at an Israeli University

connects 256 PCs through Myrinet, a fast LAN

standard.

Clusters generally will have high latency and low

bandwidth (most LAN are serial devices) and are thus

more suited for course-grained applications (much

processing, exchange a little data,

processing, exchange a little data).

much

more

4. Fig 6.9, Chapter 6.3, CA-AQA 3rd Ed.

A write-update protocol working on a snooping bus for a single

cache block with write-back cache:

Since it is write-back, why when CPU A writes a “1” to “X”, the

contents of memory location X is also updated to a “1”?

Proc Activity

CPU A reads X

CPU B reads X

CPU A writes to

X

CPU B reads X

Bus Activity

Cache miss for

X

cache miss for

X

Writebroadcast for X

A’s Cache

0

B’s Cache

-

Memory

0

0

0

0

1

-

0

1

1

1

Figure 6.9 is for write-broadcast. The steps are as follows:

i)

ii)

iii)

A reads X. Cache miss. Data is brought

from memory.

B reads X, cache miss, data is brought

from memory.

A writes to X. Write is broadcasted to all

who are interested in X, including the

memory system. CPU B’s cache and the

main memory are both updated with X=1.

5. Page 434, Chapter 5.6, CA-AQA 3rd Ed:

Using “blocking” compiler optimization to reduce miss rate:

What after applying “blocking” the code has two extra “for loop”

at the start of the code?

Problem: If a piece of code accesses arrays both rowwise and column-wise in each iteration, it is

impossible to achieve spatial locality. E.g.:

for(i=0; i<n; i++)

for(j=0; j<n; j++)

x[i][j] = y[j][i];

Regardless of whether arrays are stored row-major or

column-major at least one array will suffer cache miss

all the time, regardless of block size. Useless to swap

indices i and j since it will only cause the other matrix

to miss all the time.

Worst case # of memory accesses will be if block size is

1, and all matrices are larger than the total cache size.

Then the number of memory accesses (cache misses)

will be 2n2 (each matrix misses n2 times) for an n2

operation.

Blocking allows us to work on sub-portions of a matrix

at a time, so that the entire sub-portion can fit into the

cache, and this allows us to exploit temporal locality

(since we could not exploit spatial locality).

Let our blocking factor be B (i.e. we will work on this

number of blocks at a time. To re-write the code, we

must:

i)

Insert a new outer loop that cycles through

all sub-blocks (# of sub-blocks is of course

n/B) for i and j.

We need separate outer loops for each inner

loop index to ensure that we cover the entire

range of j for each sub-range of i.

ii)

Modify the existing loop to take into work in

sub-blocks.

Original code:

for(i=0; i<n; i++)

for(j=0; j<n; j++)

x[i][j] = y[j][i];

New Code:

for(ii=0; ii<n; ii+=B)

for(jj=0; jj<n; jj+=B)

for(i=ii; i<min(ii+B, n); i++)

for(j=jj; j<min(jj+B, n); j++)

x[i][j] = y[j][i];

Matrices x and y will miss n2/B times each, and hence

the total number of misses is now 2n2/B, an

improvement of B times.

6. RAID 4 and RAID 5 work almost the same, where the parity is

stored as blocks and associated with a set of data blocks, just

that RAID 4 is storing the parity information on a parity disk

while RAID5 spreads the parity information throughout all the

disk (as shown in Fig 7.19). Why in Fig 7.17, the “corresponding

check disks” column for RAID 4 and RAID 5 are both “1”?

Should it not be “0” for RAID 5?

Difference between RAID 4 and RAID 5:

RAID 4 – One disk is dedicated to storing all parity

checksums.

Disk 1

0

4

8

12

16

Disk 2

1

5

9

13

17

Disk 3

2

6

10

14

18

Disk 4

3

7

11

15

19

Disk 5

P0

P1

P2

P3

P4

Problem: All writes require update to the parity disk.

E.g. Blocks 0, 6 can be written to simultaneously (since

they’re on different disks), but writes to parities P0, P1

must be serialized because they’re on the same disk.

Hence this becomes a bottleneck.

RAID 5 solves this by distributing the parity across all

disks:

Disk 1

0

4

8

12

P4

Disk 2

1

5

9

P3

16

Disk 3

2

6

P2

13

17

Disk 4

3

P1

10

14

18

Disk 5

P0

7

11

15

19

A write to blocks 0, 6 require writes to Disk 1 (for block

0) and Disk 5 (for P0) and Disk 3 and Disk 4 (for 6 and

P1). Since these are all independent, they can be done

simultaneously.

However the number of blocks required for parity still

remains the same, and the parity still effectively

occupies the equivalent of an entire disk. Hence the

number of parity disks is still 1, and not 0.

7. What is bisection bandwidth? How to compute?

To calculate bisection bandwidth:

1. Split network so that both sides have

equal number of nodes

2. Count the number of links crossing the

dividing lines. Multiply by bandwidth of

each line to obtain bisection bandwidth

(or simply count links if individual

bandwidth is not known).

Fully interconnected network:

Take into account all the nodes on side A of the bisection.

Each node must connect with n/2 nodes on the other side of

the bisection (side B) (since the number of nodes is equally

distributed). There are n/2 nodes on side A. Therefore # of

connections crossing the bisection is (n/2) * (n/2) = (n/2)2.

Buses:

It is simpler for buses. Since there is one connection for all

nodes, if we divided all the nodes into two equal groups, the

number of links crossing the bisection will still remain as 1.

Ring:

Again this is trivial. Splitting a ring into two will always

result in two links crossing the bisection. Bisection

bandwidth is therefore 2.

2D Torus

Non-trivial to do analytically, since number of links

crossing depends on how nodes are arranged in the matrix.

The number of connection is always twice the number of

columns or rows (depending on how you divide the nodes)

because of the connection between immediate neighbours

on either side of the bisection, and the connection between

extreme nodes of the same row/column.

Assume a M x N (M = # of rows, N = # of columns) matrix of

nodes:

If nodes can be divided into two equal parts both

horizontally and vertically, we’d divide the nodes so that the

number of links is minimized:

bisection bandwidth = 2 x min(M, N)

If nodes can be divided equally only vertically, bisection

bandwidth is 2M. If it can be divided only horizontally,

bisection bandwidth is 2N.

If it cannot be divided equally either horizontally or

vertically (e.g. 7 x 5 network), bisection bandwidth is

undefined as it is impossible to get equal number of nodes

on each side.

3D HyperCube

From diagrams, we find that the number of links crossing

the bisection bandwidth from each node is 1. Since there are

n/2 nodes on one side, total number of crossings is n/2, and

the bisection bandwidth is n/2.

8. Example on page 325, Prof Yuen asked during the lecture

whether we can replace the array T[i] by using a variable T. Can

we? CA-AQA 3rd Ed

Original Code After Renaming:

for(i=0; i<=100; i++)

{

T[i] = X[i] + c;

X1[i] = X[i] + c;

Z[i] = T[i] + c;

Y[i] = c – T[i];

}

Yes, as T[i] has no more use beyond computing Z[i]

and Y[i], so it can be replaced by a single variable T:

for(i=0; i<=100; i++)

{

T = X[i] + c;

X1[i] = X[i] + c;

Z[i] = T + c;

Y[i] = c – T;

}

Note that you cannot do the same for X1[i], which

actually holds the correct result for X[i] after the

iteration is done. X1[i] is needed to correct the value in

X[i] (which is still holding old values because of the

renaming) after all 100 iterations are over. Neither can

you do this:

for(i=0; i<=100; i++)

{

T = X[i] + c;

X1 = X[i] + c;

Z[i] = T + c;

Y[i] = c – T;

X[i] = X1;

}

This will re-introduce the anti-dependency (which makes

the entire renaming exercise pointless).

9. Explain software pipelining (symbolic loop unrolling) page 329,

chapter 4.4 CA-AQA 3rd Ed.

Main gist: Instead of unrolling the loop, we just simply

take instructions from different iterations and schedule

them together.

E.g.:

for(i=0; i<n; i++)

a[i] = a[i]+6;

In assembly:

a1 = base address of a, r2 = Address of final element

Loop: lw r1, 0(a1)

addi r4, r1, 6

sw r4, 0(a1)

addi a1, a1, 4

; Increment to next element.

; Assumed 1 element = 4 bytes

bne a1, r2, Loop

We can unroll this 3 times:

Iteration i

lw r1, 0(a1)

addi r4, r1, 6

sw r4, 0(a1)

Iteration i+1

lw r1, 4(a1)

addi r4, r1, 6

sw r4, 4(a1)

Iteration i+2

lw r1, 8(a1)

addi r4, r1, 6

sw r4, 8(a1)

Suppose our CPU has 1 load unit, 1 add unit and 1 store

unit. We can pick 1 load, 1 add and 1 store from different

iterations to keep all three units full. We can pick the sw

from Iteration 0, the add from iteration 1 and the lw from

iteration 2 (shaded instructions):

Loop:

sw r4, 0(a1)

add r4, r1, 6

lw r1, 8(a1)

addi a1, a1, 4

bne a1, r2, Loop

; Store a[i]

; Add a[i+1]

; Load a[i+2]

; Increment index

Set up and shut-down code must be inserted for this to

work correctly.

The start-up code consists of all instructions from each

unrolled iteration before the shaded instruction.

The shutdown code consists of all instructions form each

unrolled iteration after the shaded instruction.

Startup:

Loop:

Shutdown:

lw r1, 0(a1)

addi r4, r1, 6

lw r1, 4(a1)

sw r4, 0(a1)

add r4, r1, 6

lw r1, 8(a1)

addi a1, a1, 4

bne a1, r2, Loop

sw r4, 4(a1)

add r4, r1, 6

sw r4, 8(a1)

; Load a[i]

; Add a[i]+6

; Load a[i+1]

; Store a[i]

; Add a[i+1]

; Load a[i+2]

; Increment index

; Store a[i+1]

; Add a[i+2]

; Store a[i+2]